"Tech Giants Race to Stake Their Claims and VCs Pour In Money as 'AI Mind Reading' Companies Boom"

10/16 2024

Author | Lexie, Editor | Lu

"The First Step for AI to Disrupt Humanity: Understanding the Mind"

In the grand debate about AI, it is often portrayed as either our most capable and efficient assistant or the 'robot army' that will upend us. Regardless of whether it is friend or foe, AI must not only accomplish tasks assigned by humans but also 'understand' human emotions. This ability to read minds has been a major highlight in the AI field this year.

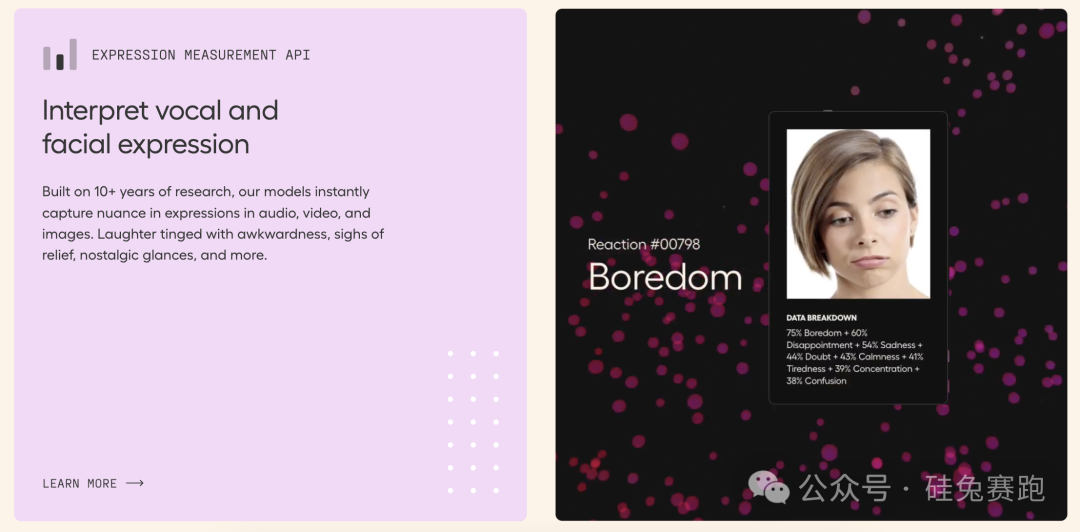

In PitchBook's recently released report on emerging enterprise SaaS technologies, 'Emotion AI' emerged as a significant technological highlight. It refers to the use of affective computing and artificial intelligence techniques to perceive, understand, and interact with human emotions, attempting to comprehend human sentiments through the analysis of text, facial expressions, voice, and other physiological signals. Simply put, Emotion AI aspires for machines to 'understand' emotions like humans, or even better.

Its core technologies include:

Facial Expression Analysis: Detecting microexpressions and facial muscle movements through cameras, computer vision, and deep learning.

Voice Analysis: Identifying emotional states through voiceprints, intonation, and rhythm.

Text Analysis: Interpreting sentences and contexts with the aid of Natural Language Processing (NLP) technology.

Physiological Signal Monitoring: Enhancing personalized interaction and emotional richness by analyzing heart rate, skin reactions, etc., using wearable devices.

Emotion AI's predecessor is sentiment analysis, which primarily works on text interactions, for example extracting and analyzing user sentiment from social media posts. By combining multiple input modalities such as vision and audio, Emotion AI promises more accurate and comprehensive sentiment analysis.
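To make the step from text-only sentiment analysis to multimodal Emotion AI concrete, here is a minimal Python sketch. It is purely illustrative and not drawn from any vendor mentioned in this article: the word list `LEXICON`, the functions `text_sentiment`, `fuse`, and `label`, and the modality weights are all hypothetical, and production systems rely on trained models rather than hand-written word lists.

```python
from typing import Dict

# Hypothetical toy lexicon (an assumption for illustration only).
LEXICON: Dict[str, float] = {
    "love": 1.0, "great": 0.8, "happy": 0.7,
    "slow": -0.4, "angry": -0.8, "hate": -1.0,
}


def text_sentiment(text: str) -> float:
    """Average lexicon score of the known words, in [-1, 1]; 0.0 if none match."""
    words = [w.strip(".,!?;:").lower() for w in text.split()]
    scores = [LEXICON[w] for w in words if w in LEXICON]
    return sum(scores) / len(scores) if scores else 0.0


def fuse(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Late fusion: weighted average over the modalities that produced a score."""
    total = sum(weights[m] for m in scores)
    if total == 0:
        return 0.0
    return sum(scores[m] * weights[m] for m in scores) / total


def label(score: float, threshold: float = 0.2) -> str:
    """Map a score in [-1, 1] to a coarse sentiment label."""
    if score > threshold:
        return "positive"
    if score < -threshold:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    text_score = text_sentiment("I love this, it is great!")
    # The voice score is a made-up stand-in for the output of an audio model.
    combined = fuse({"text": text_score, "voice": -0.5},
                    {"text": 0.5, "voice": 0.5, "face": 1.0})
    print(label(text_score), label(combined))
```

In this sketch the text alone reads as positive, while a negative voice score pulls the combined result toward neutral, which is the kind of correction that adding modalities is meant to provide.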

01

VCs Shower Money, Startups Receive Huge Funding

Rabbit Insight observes that the potential of Emotion AI has attracted the attention of many investors. Startups specializing in this field, like Uniphore and MorphCast, have secured significant investments in this race.

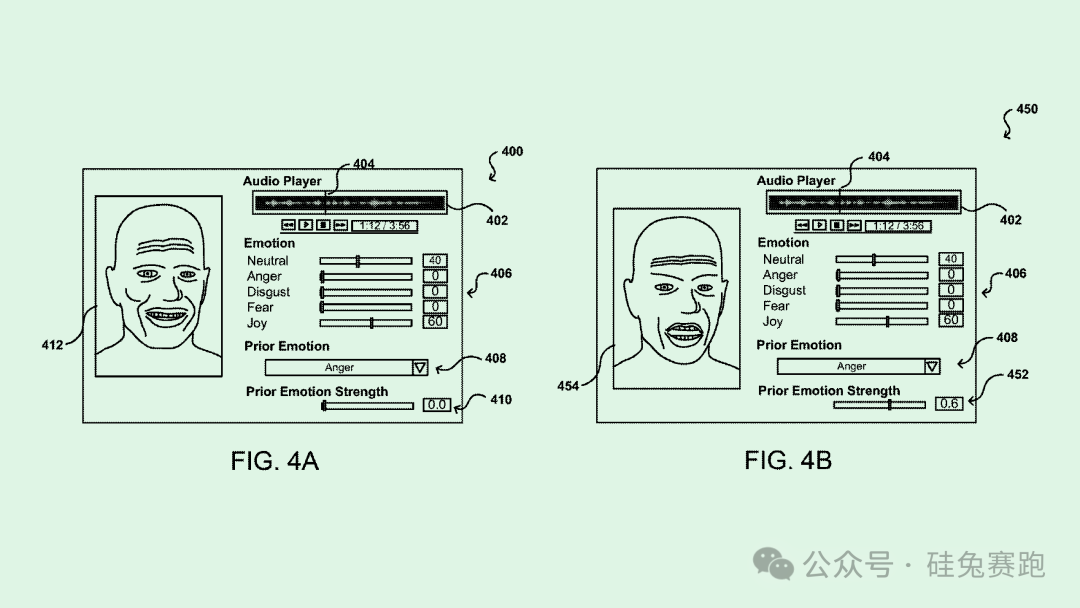

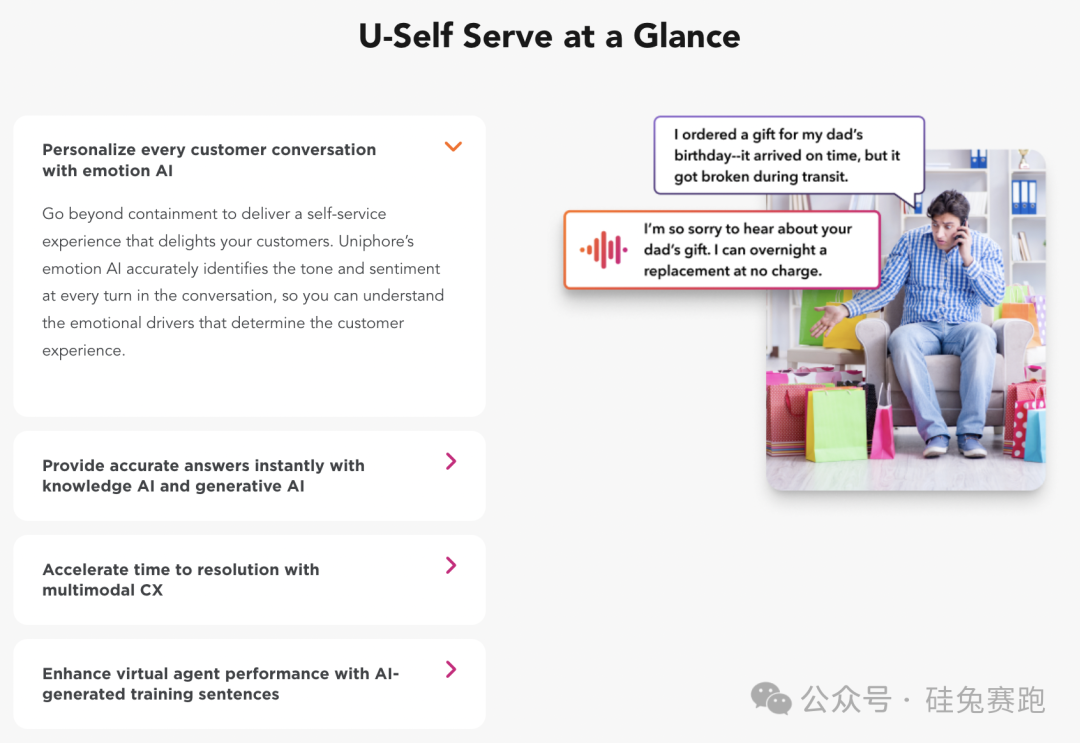

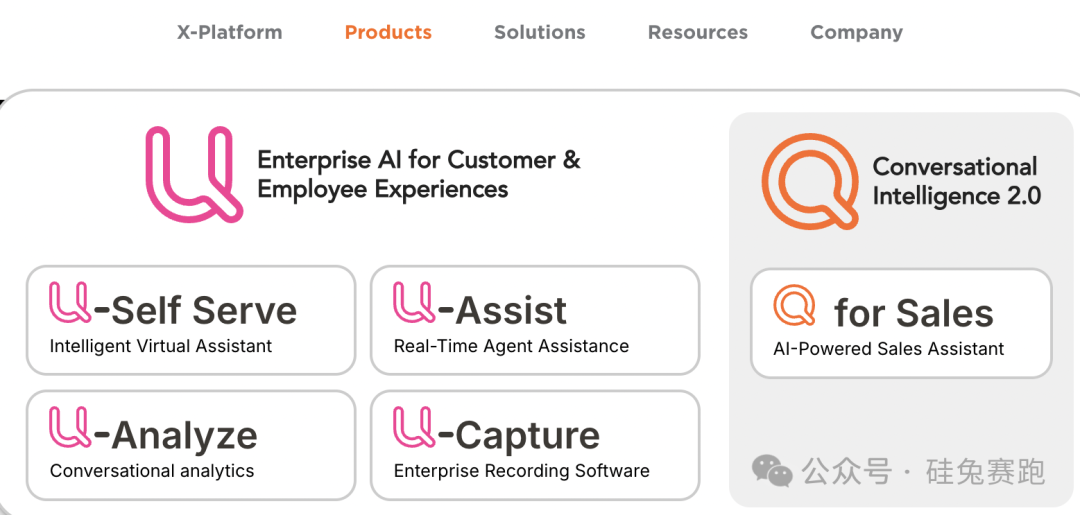

Uniphore, based in California, has been exploring automated conversation solutions for enterprises since 2008. It has developed multiple product lines, including U-Self Serve, U-Assist, U-Capture, and U-Analyze, helping clients engage in more personalized and emotionally rich interactions through voice, text, vision, and Emotion AI technology. U-Self Serve focuses on accurately identifying emotions and tones in conversations, enabling businesses to provide more personalized services and enhance user engagement and satisfaction.

U-Assist improves customer service agent efficiency through real-time guidance and workflow automation. U-Capture enables enterprises to gain deep insights into customer needs and satisfaction through automated emotional data collection and analysis. U-Analyze aids clients in identifying critical trends and emotional shifts in interactions, providing data-driven decision support to enhance brand loyalty.

Uniphore's technology goes beyond machine understanding of language; it aims for machines to capture and interpret the emotions hidden behind tone and facial expressions when interacting with humans. This capability allows businesses to better meet customers' emotional needs rather than giving mechanical responses. Enterprises using Uniphore can reportedly achieve an 87% user satisfaction rate and a 30% improvement in customer service performance.

Uniphore has raised over US$620 million in funding to date, with its latest round of US$400 million led by NEA in 2022, and participation from existing investors like March Capital. Post-funding, its valuation reached US$2.5 billion.

Hume AI, founded by Alan Cowen, a former Google scientist known for his work on semantic space theory, has launched what it describes as the world's first empathetic voice AI. The theory draws on nuances in voice, facial expressions, and gestures to understand emotional experiences and expressions. Cowen's research has been published in prestigious journals such as Nature and Trends in Cognitive Sciences, covering the most extensive and diverse range of emotional samples to date.

Building on this research, Hume developed EVI (Empathic Voice Interface), a conversational speech API that combines large language models with empathy algorithms to understand and analyze human emotional states. It not only recognizes emotions in speech but also responds in a more nuanced, personalized way during user interactions. Developers can integrate these features into any application with just a few lines of code.

One of the primary limitations of most current AI systems lies in their reliance on human-generated instructions, which are prone to errors and fail to tap AI's full potential. Hume's Empathetic Large Language Model (eLLM) adjusts its word choices and tone based on context and user emotional expressions. By making human happiness its first principle, Hume's AI learns, adapts, and interacts, delivering more natural and authentic experiences across mental health, education, emergency response, brand analysis, and more.

In March 2024, Hume AI completed a US$50 million Series B funding round led by EQT Ventures, with participation from Union Square Ventures, Nat Friedman & Daniel Gross, Metaplanet, and Northwell Holdings.

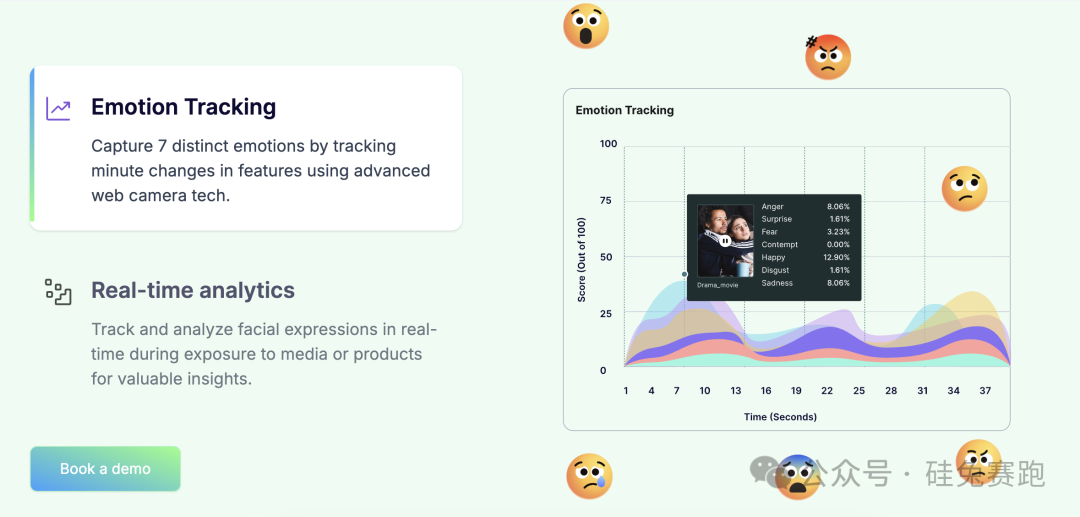

Another player in this field is Entropik, which specializes in measuring consumer perceptions and emotional reactions. Through Decode, a comprehensive feature integrating Emotion AI, Behavioral AI, Generative AI, and Predictive AI, Entropik helps clients better understand consumer behavior and preferences and offers more personalized marketing advice. In February 2023, Entropik secured US$25 million in Series B funding from SIG Venture Capital and Bessemer Venture Partners.

02

Giants Join the Fray, Leading to a Chaotic Landscape

Leveraging their strengths, tech giants have also made inroads into Emotion AI.

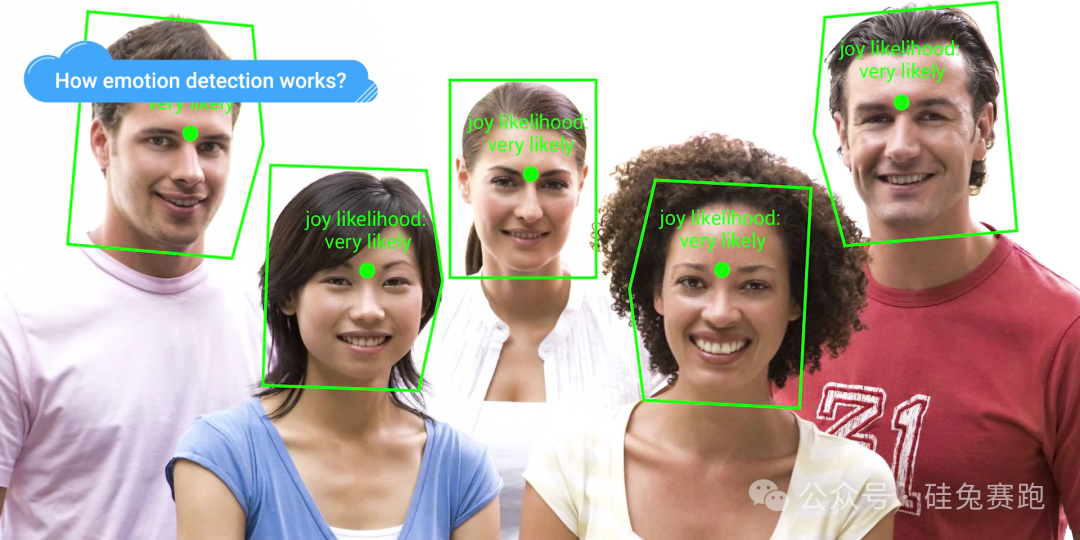

Microsoft Azure's Cognitive Services Emotion API identifies emotions like joy, anger, sadness, and surprise in images and videos by analyzing facial expressions.

IBM Watson's Natural Language Understanding API processes vast amounts of text data to identify underlying emotional tendencies (positive, negative, or neutral), enhancing user intent interpretation.

Google Cloud AI's Cloud Vision API boasts powerful image analysis capabilities, quickly recognizing emotional expressions in images, supporting text recognition and emotional associations.

AWS Rekognition detects emotions, identifies facial features, tracks expression changes, and integrates with other AWS services for comprehensive social media analysis or Emotion AI-driven marketing applications.

Some startups have progressed faster in Emotion AI, prompting even tech giants to 'poach' their talent. For instance, after investing in Inflection AI's US$1.3 billion round alongside Bill Gates, Eric Schmidt, and NVIDIA, Microsoft offered a role to Mustafa Suleyman, co-founder and AI leader at Inflection AI, who then joined Microsoft with over 70 employees, at a cost to Microsoft of nearly US$650 million.

Nonetheless, Inflection AI quickly regrouped, forming a new team with backgrounds in Google Translate, AI consulting, and AR, continuing to develop its core product, Pi. Pi is a personal assistant that understands and responds to users' emotions. Unlike traditional AIs, Pi prioritizes emotional connections with users, perceiving emotions through voice and text inputs and demonstrating empathy in conversations. Inflection AI views Pi as a coach, confidant, listener, and creative partner, transcending the role of a mere AI assistant. Additionally, Pi boasts robust memory capabilities, remembering users' conversation histories to enhance interaction continuity and personalization.

03

A Path Forward Amid Concerns and Skepticism

While Emotion AI embodies our aspirations for more humanized interactions, like all AI technologies, it faces scrutiny and skepticism. First, can Emotion AI accurately interpret human emotions? In theory, the technology can enrich the experience of services, devices, and other technologies, but human emotions are inherently ambiguous and subjective. As early as 2019, researchers questioned whether facial expressions reliably reflect true human emotions, highlighting the limits of relying solely on machines that mimic how humans read facial expressions, body language, and tone.

Second, stringent regulatory oversight constrains development. For instance, the EU's AI Act prohibits the use of computer vision emotion detection systems in sectors like education, limiting the promotion of certain Emotion AI solutions. US states such as Illinois also prohibit the unauthorized collection of biometric data, directly restricting the use of Emotion AI technologies. Data privacy and protection are paramount: Emotion AI is often applied in sectors with stringent data privacy requirements, such as education, healthcare, and insurance, so companies must ensure that emotional data is used securely and legally.

Lastly, intercultural emotional communication poses challenges even for humans, let alone AIs. Different regions interpret and express emotions differently, potentially impacting the effectiveness and integrity of Emotion AI systems. Furthermore, Emotion AI may struggle with biases related to race, gender, and gender identity when processing emotions.

Emotion AI promises both efficiency and empathy, but will it truly revolutionize human interactions or remain a smart assistant akin to Siri, performing mediocrely in tasks requiring genuine emotional understanding? Perhaps in the future, AI's 'mind-reading' will disrupt human-machine and even human-to-human interactions, but for now, genuinely understanding and responding to human emotions likely necessitates human involvement and prudence.

References: Uniphore Announces $400 Million Series E (Uniphore); Hume AI Announces $50 Million Fundraise and Empathic Voice Interface (Yahoo Finance); Introducing Pi, Your Personal AI (Inflection AI); 'Emotion AI' may be the next trend for business software, and that could be problematic (TechCrunch); Emerging Tech Research: Enterprise SaaS Report (PitchBook)