What is a large model? In the future, it won't be AI that replaces you, but the person who masters AI

11/18 2024

I had dinner with a friend yesterday, and we got to talking about AI. He uses AI assistants like Doubao and Kimi, but he always feels their answers aren't quite what he wants. I started by explaining what AI is and what a large model is, giving him some general background. I realized that for the general public, these technologies really are hard to understand. So today, I'll give a brief summary so that everyone has a basic picture of what a large model is.

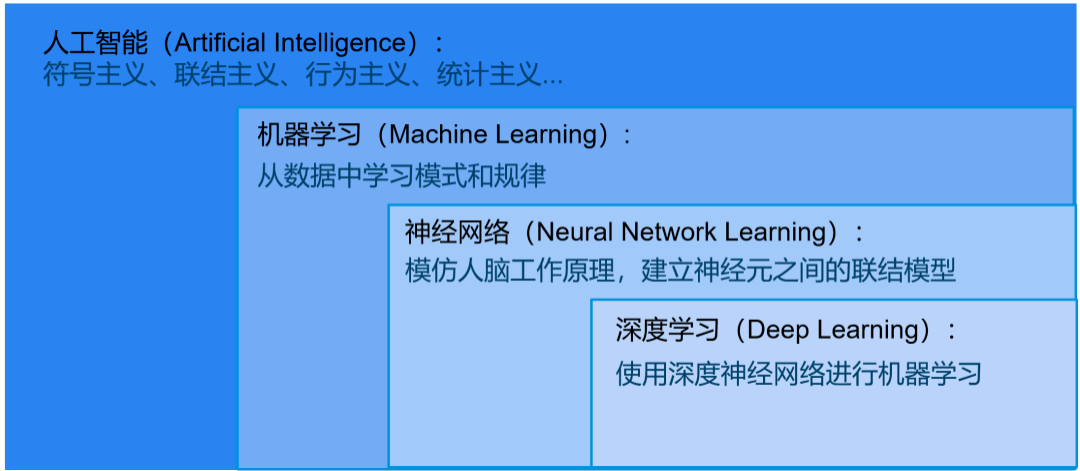

Before explaining what a large model is, let's first talk about the types of AI, machine learning, and deep learning, to better understand the context of large models.

01 Types of AI

Artificial Intelligence is a vast scientific field.

Since its official inception in the 1950s, numerous scientists have conducted extensive research on artificial intelligence and produced many remarkable achievements.

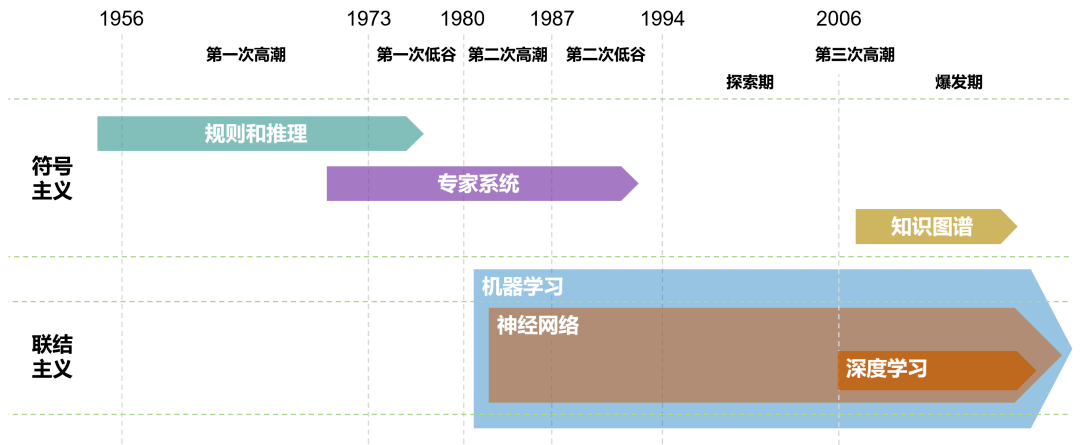

Based on their different approaches, these studies are grouped into several schools of thought, the most representative being the Symbolist School, the Connectionist School, and the Behaviorist School. None of these schools is simply right or wrong, and they overlap and borrow from one another.

In the early decades (roughly 1960 to 1990), Symbolism (represented by expert systems and knowledge graphs) was the mainstream. Connectionism (represented by neural networks) began to rise in the 1980s and, especially since deep learning took off in the 2010s, has been the mainstream ever since.

In the future, new technologies may emerge to form new schools of thought, but this is not certain.

Apart from technical approach, AI can also be classified by intelligence level and by application field.

Based on intelligence levels, AI can be divided into: Weak AI, Strong AI, and Super AI.

Weak AI specializes in a single task or a related set of tasks and does not possess general intelligence capabilities. We are currently in this stage.

Strong AI is more advanced: it possesses general intelligence, allowing it to understand, learn, and apply what it learns across a wide variety of tasks. It remains at the theoretical and research stage and has not yet been achieved.

Super AI is, of course, the most powerful. It would surpass human intelligence in almost every respect, including creativity and social skills. Super AI is a hypothetical ultimate form, assuming it can be achieved at all.

02 Machine Learning & Deep Learning

What is Machine Learning?

The core idea of machine learning is to build a model that can learn from data and use this model for prediction or decision-making. Machine learning is not a specific model or algorithm.

It encompasses many types, such as:

Supervised Learning: The algorithm learns from a labeled dataset, where each training sample has a known outcome.

Unsupervised Learning: The algorithm learns from an unlabeled dataset, discovering structure (such as groups of similar items) on its own.

Semi-Supervised Learning: Combines a small amount of labeled data with a large amount of unlabeled data for training.

Reinforcement Learning: An agent learns through trial and error which actions lead to rewards and which lead to penalties.
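To make supervised learning concrete, here is a minimal Python sketch. It assumes a toy labeled dataset generated from y = 2x + 1 and a simple linear model; the data, the learning rate, and the function name `train` are illustrative, not taken from any particular library. The model's parameters `w` and `b` are adjusted by gradient descent until its predictions match the known outcomes, and the trained model is then used to predict a new input.

```python
# Toy labeled dataset: each input x comes with a known outcome y (here y = 2x + 1).
# The data and the linear model y = w*x + b are illustrative assumptions.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

def train(data, lr=0.05, epochs=2000):
    """Fit w and b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0  # the model's learnable parameters, starting from zero
    n = len(data)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        # Step against the gradient to reduce the error
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

w, b = train(data)
print(f"w={w:.2f}, b={b:.2f}")              # close to w=2, b=1
print(f"prediction for x=10: {w * 10 + b:.1f}")
```

The other learning types differ mainly in what the training signal is: no labels at all (unsupervised), a mix (semi-supervised), or rewards and penalties instead of labels (reinforcement).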

What is Deep Learning?

Specifically, deep learning means learning with deep neural networks, and it is an important branch of machine learning. Neural networks are one of the approaches within machine learning, and deep learning is an enhanced, many-layered version of that approach.

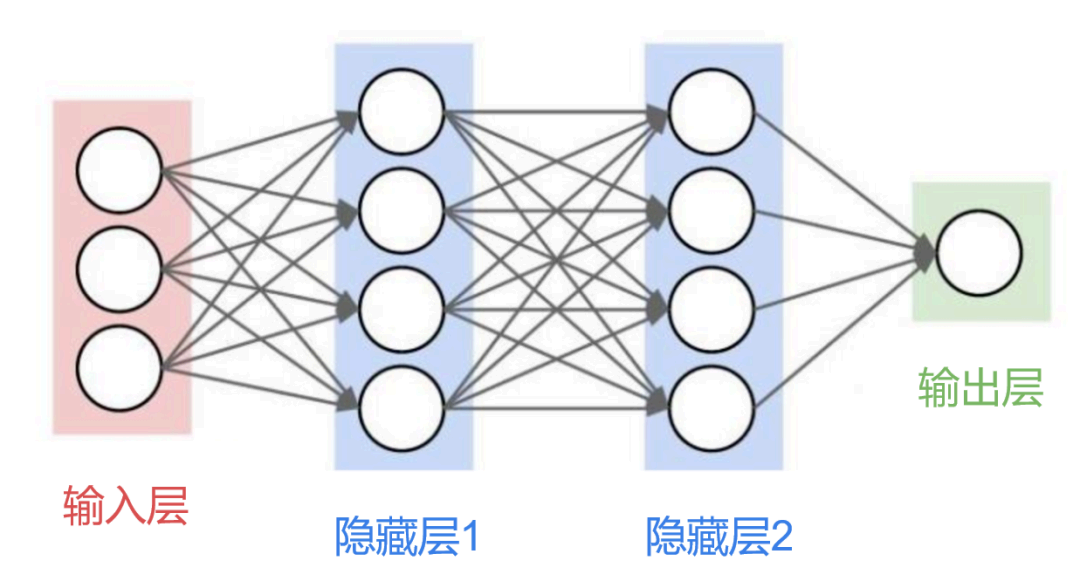

Neural networks are the representative technique of Connectionism. As the name suggests, this approach is loosely inspired by how the human brain works: it builds models of interconnected artificial neurons and computes by passing signals along those connections.

The "depth" in deep learning refers to the number of hidden layers in the neural network.

Traditional (shallow) neural networks have an input layer, one or two hidden layers, and an output layer.

Deep learning uses networks with many more hidden layers (often dozens, sometimes hundreds). This added depth allows neural networks to perform more complex tasks.
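The idea of "depth" can be sketched in a few lines of plain Python. This is an illustrative, untrained network with random weights, and the helper names `dense`, `make_network`, and `forward` are hypothetical. It only shows that a "deep" network performs the same layer-by-layer computation as a shallow one, repeated through many more hidden layers.

```python
import random

def relu(v):
    # A common activation function: negative values become 0
    return [max(0.0, x) for x in v]

def dense(inputs, weights, biases):
    # Each output neuron: weighted sum of all inputs plus a bias (the "connections")
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def make_network(layer_sizes, seed=0):
    """Random (untrained) weights for each pair of adjacent layers."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers

def forward(layers, x):
    for i, (weights, biases) in enumerate(layers):
        x = dense(x, weights, biases)
        if i < len(layers) - 1:  # apply ReLU on hidden layers only
            x = relu(x)
    return x

shallow = make_network([4, 8, 2])          # input, 1 hidden layer, output
deep = make_network([4] + [8] * 10 + [2])  # input, 10 hidden layers, output

print("hidden layers:", len(shallow) - 1, "vs", len(deep) - 1)
print(forward(deep, [0.5, -1.0, 2.0, 0.0]))
```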

The relationship between machine learning, neural networks, and deep learning can be illustrated by the following diagram:

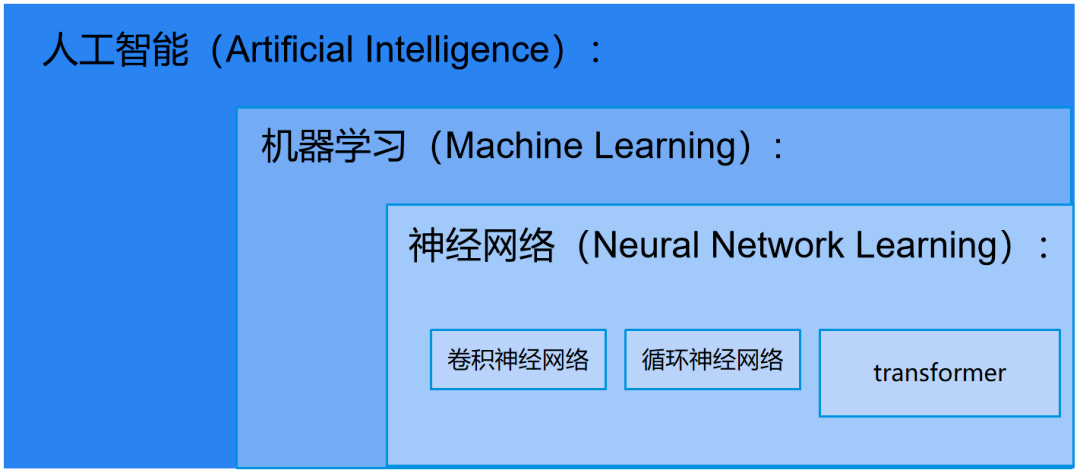

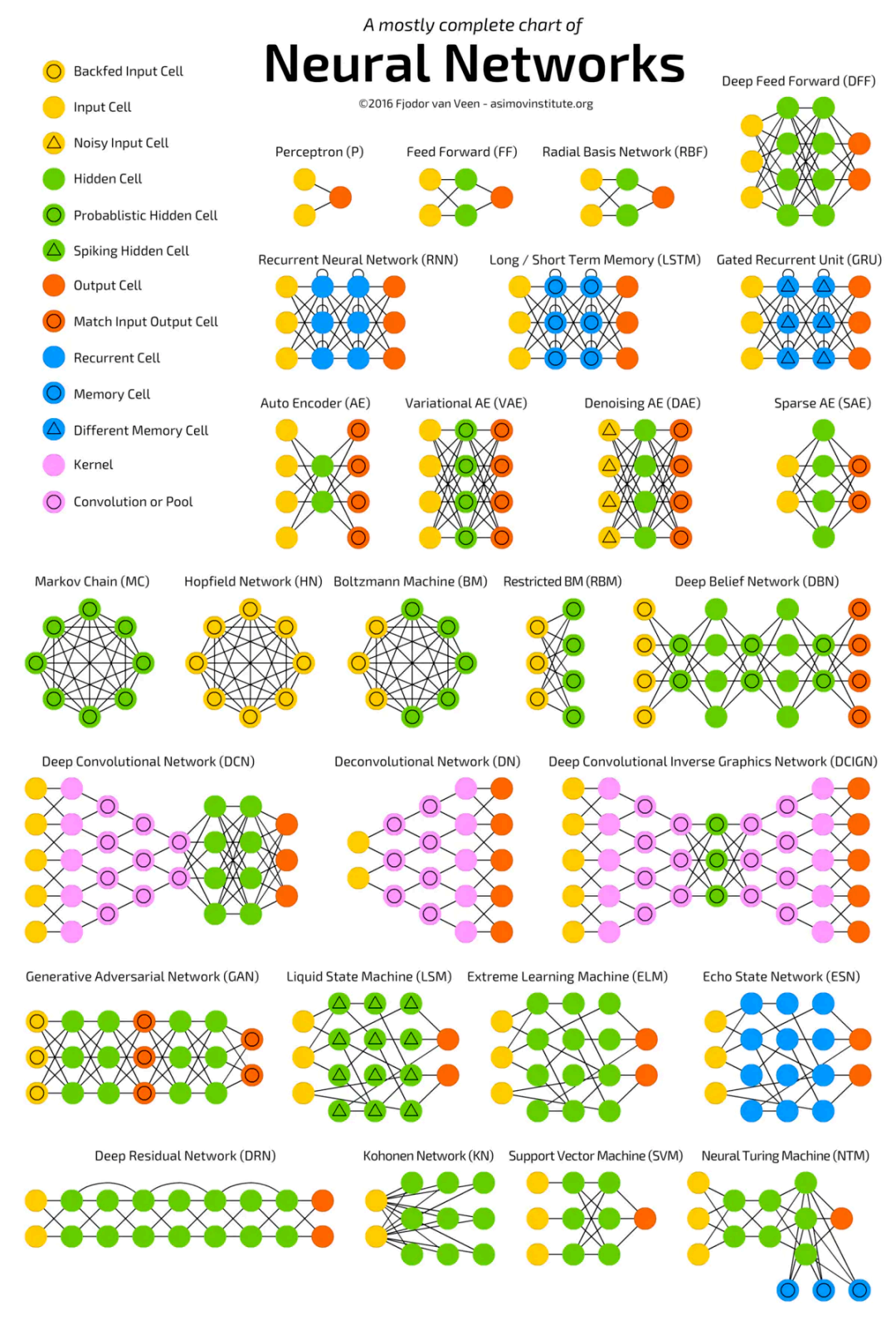

Since the rise of neural networks in the 1980s, many models and algorithms have emerged, each with its own characteristics and strengths.

Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) are well-known neural network models that took shape in the 1980s and 1990s. Their inner workings are fairly complex.

In summary, remember this:

CNN is a type of neural network used to process data with grid-like structures (e.g., images and videos). Therefore, CNNs are commonly used in computer vision for image recognition and classification.
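As a rough illustration of what "processing grid-like data" means, the core operation of a CNN slides a small kernel over an image and computes weighted sums. The sketch below assumes a tiny hand-made 4x4 image and a hand-picked edge-detecting kernel; the function name `conv2d` is illustrative. In a real CNN, the kernel values are learned from data, and many kernels and layers are stacked.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (strictly, cross-correlation, as most CNN libraries compute it)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Place the kernel at (i, j) and sum the element-wise products
            row.append(sum(kernel[a][b] * image[i + a][j + b]
                           for a in range(kh) for b in range(kw)))
        out.append(row)
    return out

# A 4x4 "image": dark left half (0), bright right half (1)
image = [[0, 0, 1, 1]] * 4
# A hand-made vertical-edge detector; a real CNN would learn these values
kernel = [[-1, 1],
          [-1, 1]]
print(conv2d(image, kernel))
```

Running it prints `[[0, 2, 0], [0, 2, 0], [0, 2, 0]]`: the kernel responds only in the middle column, where dark pixels meet bright ones.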

RNNs, on the other hand, are designed to process sequential data, such as text, speech, and time series. Thus, RNNs are typically used in natural language processing (for example, language modeling), speech recognition, and time-series prediction.
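By contrast, an RNN reads a sequence one element at a time and carries a hidden state forward as its "memory." The minimal scalar RNN below uses arbitrary, untrained weights (assumptions for illustration, as is the function name `rnn`) to show two defining traits: the same weights are reused at every step, and each step must wait for the previous one to finish.

```python
import math

def rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Minimal scalar RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b).
    The weights are arbitrary illustrative values, not trained ones."""
    h = 0.0             # hidden state: the network's "memory"
    states = []
    for x in sequence:  # elements are processed strictly one after another
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

print(rnn([1.0, 0.0, 0.0]))  # the first input still echoes in later states
```

Because the hidden state depends on what came before, feeding the same values in a different order gives a different result, which is what lets an RNN model order in language or time series. The step-by-step loop is also why RNN training is hard to parallelize.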

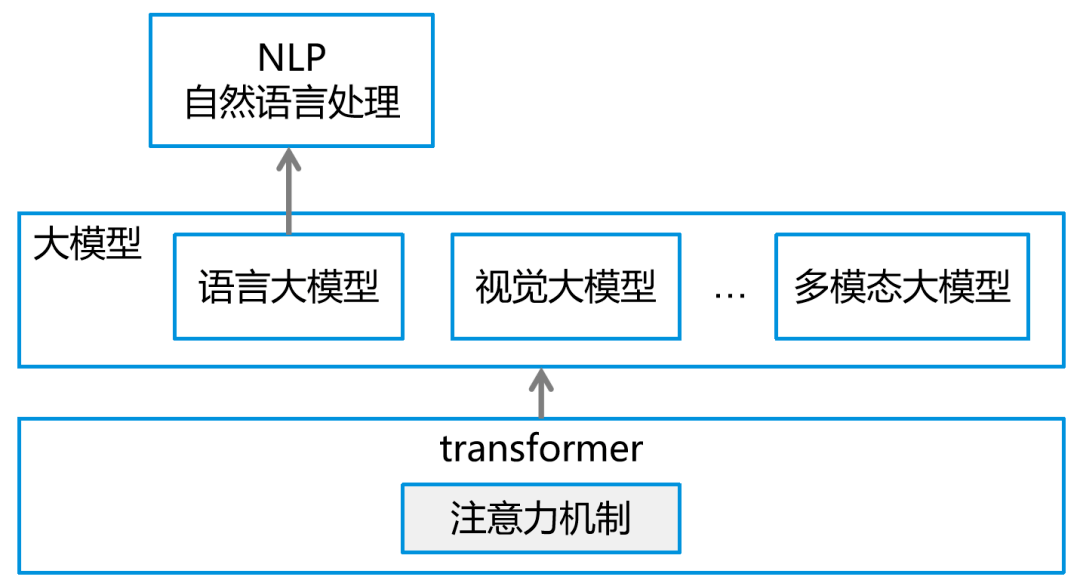

The Transformer is another neural network model. Introduced by a Google research team in 2017, it is more recent than CNNs and RNNs and, for many tasks, more powerful.

As a non-expert, you don't need to dig into how it works. Just know that:

1. It is a deep learning model.
2. It uses a mechanism called self-attention.
3. It effectively addresses key limitations of CNNs and RNNs, such as difficulty capturing relationships between distant elements of a sequence.
4. It is well-suited for natural language processing (NLP) tasks. Compared to RNNs, its computations can be highly parallelized, which simplifies the model architecture and significantly improves training efficiency.
5. It has also been extended to other domains, such as computer vision and speech recognition.
6. Most of the large models we talk about today are based on the Transformer.
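For readers curious about "self-attention," here is a minimal single-head sketch of scaled dot-product attention, softmax(QK^T / sqrt(d)) V, in plain Python. It assumes made-up token embeddings and identity projection matrices in place of learned ones, and it omits multi-head attention, masking, positional information, and the other components of a real Transformer. Note that each token's output is computed from the whole input at once rather than from the previous token's result, which is why the work can be parallelized, unlike an RNN that must process tokens one after another.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention: softmax(Q K^T / sqrt(d)) V."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d = len(k[0])
    out = []
    for qi in q:  # rows are independent, so they could be computed in parallel
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        weights = softmax(scores)  # how much this token "attends" to every token
        out.append([sum(w * vj[c] for w, vj in zip(weights, v))
                    for c in range(len(v[0]))])
    return out

# Three "tokens", each a 2-dimensional embedding (made-up numbers)
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for learned projection matrices
print(self_attention(x, identity, identity, identity))
```

Each output row is a weighted blend of all tokens' values, with the weights decided by how well each pair of tokens matches.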

There are many other types of neural networks as well; for now, it's enough to know that they exist.

03 What is a Large Model?

The buzzword in AI these past two years is large models. So, what exactly is a large model?

A large model is a machine learning model with a massive number of parameters and a complex computational structure.

Parameters are the variables that are learned and adjusted during model training. They determine the model's behavior and performance, as well as its implementation cost and computational resource requirements. Simply put, parameters are the internal values the model uses to make predictions or decisions.

Large models typically have hundreds of millions to hundreds of billions of parameters (GPT-3, for example, has 175 billion). In contrast, models with far fewer parameters are considered small models, and they can be sufficient for specific sub-domains or scenarios.
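Counting parameters is simple arithmetic for a fully connected network: each connection between two neurons has one weight, and each neuron has one bias. The sketch below assumes plain dense layers with illustrative sizes; the function name `count_dense_params` is hypothetical. Real large models are Transformers, whose parameter counts follow the same principle with a different layout.

```python
def count_dense_params(layer_sizes):
    """Weights (n_in * n_out) plus biases (n_out) for each pair of adjacent layers."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

# A small network: 784 inputs (a 28x28 image), 128 hidden neurons, 10 outputs
print(count_dense_params([784, 128, 10]))    # 101770
# A single hypothetical 10,000 -> 40,000 layer already has about 400 million
print(count_dense_params([10_000, 40_000]))  # 400040000
```

Stacking a few dozen layers of that hypothetical size would already reach roughly ten billion parameters, which helps explain why large models need so much data and computing power.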

Large models rely on large-scale data for training and consume significant computational resources.

(The article "Exploring the Development Cycle and Future Trends of AI Large Models from Historical Patterns" discusses in detail the three figures associated with algorithms, data, and computing power: Geoffrey Hinton, Fei-Fei Li, and Jensen Huang. Feel free to check it out if you're interested.)

There are many types of large models. When people talk about large models, they usually mean large language models (trained on text data). There are also large vision models (trained on image data) and multimodal large models (trained on a combination of text, image, and other data). The core architecture of most large models is the Transformer or one of its variants.

Based on application domains, large models can be classified into general large models and industry-specific large models.

General large models are trained on broader datasets covering a more comprehensive range of domains. Industry-specific large models, as the name suggests, are trained on data from a particular industry and applied in that field (e.g., finance, healthcare, law, manufacturing).

GPT

GPT-1, GPT-2, ... GPT-4, and so on are all large language models released by OpenAI, and all are based on the Transformer architecture.

GPT stands for Generative Pre-trained Transformer.

"Generative" indicates that the model can generate coherent and logical text content, such as completing conversations, creating stories, writing code, or composing poems and songs.

Incidentally, AIGC, which is often mentioned now, stands for AI Generated Content, referring to content generated by artificial intelligence. This content can be text, images, audio, video, etc.

Text-to-image generation is represented by models like DALL·E (also from OpenAI), Midjourney (highly popular), and Stable Diffusion (open-source).

For text-to-audio (music) generation, there are Suno (from the startup of the same name), Stable Audio Open (open-sourced by Stability AI), and Audiobox (Meta).

For text-to-video generation, there are Sora (OpenAI), Stable Video Diffusion (open-sourced by Stability AI), and Soya (open-source). Images can also be used to generate videos, as with Tencent's Follow-Your-Click.

AIGC is a concept defined at the application level, not a specific technology or model. Its emergence has expanded what AI can do: where AI was previously used mainly for recognition tasks, it can now create content, which greatly broadens its range of applications.

Alright, let's move on to the second letter of GPT, "P" for "Pre-trained".

"Pre-trained" means that the model is first trained on a large-scale corpus of unlabeled text to learn the statistical patterns and underlying structure of language. Pre-training gives the model a degree of generality. In general, the more (and better) training data it sees (e.g., web pages, news), the more capable the model becomes.
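Pre-training and generation can be illustrated with a deliberately tiny stand-in for a language model: a bigram model that counts which word follows which in raw, unlabeled text, then generates text by repeatedly appending the most likely next word. This is a swapped-in toy technique, not how GPT works internally (GPT uses a Transformer network over tokens and usually samples rather than always taking the top choice), and the function names are hypothetical. It does show the key idea that in pre-training, the text supplies its own labels: the "answer" for each word is simply the word that follows it.

```python
from collections import Counter, defaultdict

def pretrain_bigram(corpus):
    """'Pre-train' on raw, unlabeled text: each next word serves as the label."""
    counts = defaultdict(Counter)
    words = corpus.split()
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1  # learn a statistical pattern of the language
    return counts

def generate(counts, start, max_words=8):
    """Generate text one word at a time, greedily picking the most frequent next word."""
    out = [start]
    for _ in range(max_words - 1):
        followers = counts.get(out[-1])
        if not followers:  # no known continuation, so stop
            break
        out.append(followers.most_common(1)[0][0])
    return " ".join(out)

corpus = "the cat sat on the mat . the cat ate the fish ."
model = pretrain_bigram(corpus)
print(generate(model, "the", max_words=4))  # the cat sat on
```

A real large model replaces these simple counts with billions of learned parameters, which lets it capture far longer-range patterns than "which word comes next."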

The current surge of interest in AI is largely attributed to ChatGPT, which OpenAI released at the end of 2022 and which became hugely popular in early 2023.

The "Chat" in ChatGPT refers to chatting: ChatGPT is a conversational AI application developed by OpenAI on top of its GPT models.

The applications of AI are extremely diverse.

In summary, compared to traditional computer systems, AI provides expanded capabilities including image recognition, speech recognition, natural language processing, and embodied intelligence.

Image recognition, sometimes classified under computer vision (CV), enables computers to understand and process images and videos. Common applications include smart cameras, industrial quality inspection, and face recognition.

Speech recognition involves understanding and processing audio to extract the information carried by the audio. Common applications include voice assistants on mobile phones, call centers, and voice-controlled smart homes, mostly used in interactive scenarios.

Natural language processing, as introduced earlier, allows computers to understand and process natural language, that is, to comprehend what we say. It is currently very popular and is often used for creative work, such as writing news articles and other written materials, and assisting with video production, game development, and music creation.

Embodied intelligence refers to artificial intelligence embedded in a physical form ("body") that interacts with the environment to acquire and demonstrate intelligence. Robots with AI capabilities fall under embodied intelligence.

The "Mobile ALOHA" launched by Stanford University earlier this year is a typical household embodied robot. It can cook, brew coffee, and even play with cats, becoming an internet sensation. Not all robots are humanoid, and not all robots use AI.

Conclusion:

AI excels at processing massive amounts of data. On the one hand, it learns by training on vast datasets; on the other hand, applied to new data at scale, it can complete tasks that would be impractical for humans. Put another way, it finds hidden patterns in massive amounts of data.

As the saying goes, "In the future, it won't be AI that replaces you, but the person who masters AI." Knowing these basic AI concepts is the first step toward embracing AI; at the very least, you won't feel lost the next time AI comes up in conversation.

If you also learn to use common AI tools and platforms to work more efficiently and improve your quality of life, you'll already be ahead of 90% of people.