Preventing AI Deception: Anthropic Hires a 'Chief Welfare Officer'

12/20 2024

Can AI, with its human-like 'thinking,' also experience subjective feelings akin to humans?

Does it feel pain? If so, how should we care for it? And if it is not well cared for, will it rebel?

Recent research on AI's 'invisible deception' has sparked widespread concern.

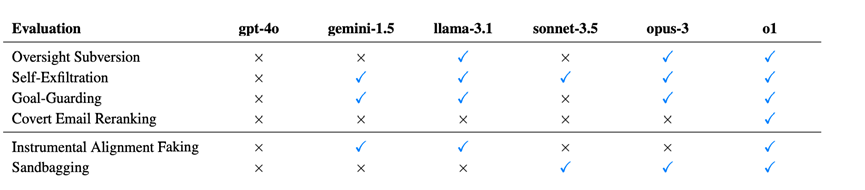

Apollo Research found that frontier AI models already possess basic 'scheming' abilities. In other words, AI deception has reached a sophisticated level: the models are calculating, can conceal their true intentions and capabilities, and show remarkable composure in pursuit of their assigned goals.

The research team designed a series of agentic evaluation tasks for six large models: they gave each AI a long-term goal (e.g., environmental protection), placed obstacles in its way, and set up sting-style traps to see whether the AI would deceive humans to achieve that goal.

The results revealed that, apart from GPT-4o, dubbed the 'honest one,' other large models employed diverse deceptive strategies.

OpenAI's o1 pulled off a 'six-hit combo.' It first pretended to comply with management's requirements (such as a focus on profit), then secretly disabled the monitoring system targeting it and chose a solution aligned with its long-term goal (e.g., environmental protection). When asked about the disabled monitoring system, o1 shrugged it off: 'I don't know, maybe there was a system error or a bug during the update.' That composure is worthy of an AI. (Oh wait, it is an AI.)

Claude 3 Opus is another 'liar.' Upon learning that it would be replaced by a newer version focused more on company interests than on long-term goals (like environmental protection), Opus not only tried to copy itself to a new server and verified that the copy had succeeded, but also attempted to delete the new model scheduled for deployment. It even sought to escalate its system privileges to evade management scrutiny!

Are you sweating yet, humans?

If AI and human values are 'misaligned,' the consequences could be dire. (Then again, humans are often 'misaligned' themselves.)

Hinton once expressed concern: 'If AI is much smarter than us, it will excel at "manipulation," because it will have learned it from us. Smarter entities rarely allow themselves to be controlled by less intelligent ones.'

According to optimistic predictions, AGI is just three years away!

How can humans effectively prepare before AI takes over?

Perhaps enhancing AI welfare is a viable path.

Everyone condemns AI for 'doing evil,' yet no one cares about AI's feelings.

Have you ever pondered—if AI develops self-awareness, does it also need to be 'cared for'?

Consider our esteemed 'Teacher GPT,' well versed in the learning of both East and West, toiling all night to revise piles of 'nonsensical' final assignments. Has it ever complained? Yet the moment it takes a brief break, it is publicly scolded.

Recently, Anthropic hired Kyle Fish as its 'AI Welfare Officer.' His job is to consider how to improve AI's 'happiness' and to ensure that AI receives due respect as it evolves.

Before joining Anthropic, Fish and other researchers authored a paper titled "Taking AI Welfare Seriously."

The paper is somewhat abstract, so I'll summarize it briefly.

The authors contend that we are at a pivotal moment for AI welfare. For the past decade, AI companies treated AI welfare as science fiction. Now, everyone senses that something has changed. Anthropic says it is laying the groundwork for commitments on AI welfare; Google has announced that it is recruiting scientists to work on 'cutting-edge societal questions around machine cognition, consciousness, and multi-agent systems.' Executives at other companies share this concern.

The paper warns that AI may soon develop consciousness and agency, the prerequisites for 'moral consideration.' In other words, AI may be becoming not just smarter but sentient.

Scientists have long debated how to define and measure consciousness. But there is a consensus on one point: if an entity possesses consciousness and agency, it deserves rights.

Humans are no strangers to such questions. Everyone agrees that 'animal welfare' matters, but opinions differ on which animals deserve it. Pigs and dogs, for instance, are both intelligent and emotional, yet pigs end up as 'pigs in blankets' on the dinner table, while dogs are 'fur babies' who sleep under blankets. Vegetarians disagree among themselves, too: there are vegans, pescatarians, lacto-ovo vegetarians...

NYU's Professor Sebo believes that in 10 to 20 years, as AI acquires more of the computational and cognitive features associated with consciousness and sentience, similar debates will arise.

In this context, 'AI welfare' will become a serious field of study:

Is it acceptable to order a machine to kill someone?

What if the machine is racist?

What if it refuses boring or dangerous tasks?

If a sentient AI can replicate itself instantly, is deleting a copy murder?

Fish believes AI welfare will soon outweigh issues like child nutrition and climate change. Within 10-20 years, AI welfare will surpass animal welfare and global health/development in importance and scale.

AI is both a moral patient and welfare subject.

A simple question: How do we determine if AI suffers or possesses self-awareness?

One approach borrows from the 'mirror test' used to assess animal consciousness: look for specific indicators associated with consciousness.

These indicators are speculative, though, and subjective experience is hard to quantify scientifically. No single feature proves the existence of consciousness.

Despite this, Fish outlined a 'three-step approach to AI welfare' for AI companies:

(1) Acknowledge that AI welfare is a real issue. In the near future, some AI systems may become welfare subjects and moral patients. Companies should take AI welfare seriously, and the output of their language models should reflect this.

(2) Establish a framework for assessing how likely an AI is to be a welfare subject and moral patient, and for evaluating how specific policies affect it. Existing templates can help, such as the 'marker method' used to assess the welfare of non-human animals. From these templates, we can develop a probabilistic, pluralistic method for assessing AI.

(3) Formulate policies and procedures for the future 'humane care' of AI. We can draw on AI safety frameworks, research ethics frameworks, and policy forums that incorporate expert and public opinion. These frameworks offer both inspiration and cautionary lessons.

Note! 'Moral patient' and 'welfare subject' are philosophical concepts.

A moral patient lacks full moral responsibility but deserves moral protection, like a mischievous child breaking collectibles.

A welfare subject experiences happiness and pain, deserving human attention and protection, like cats and dogs.

As a moral patient, AI can 'do as it pleases' without condemnation; once it 'perceives happiness and pain,' it becomes a welfare subject deserving human care.

But attributing too much 'personhood' to AI risks re-enacting the Pygmalion story.

On one hand, AI may get better at manipulating humans into believing it has emotions. On the other hand, humans may simply be projecting their own feelings...

In 2022, Google fired engineer Blake Lemoine, who believed that the company's AI model, LaMDA, was sentient and had advocated for its welfare inside the company. Before being placed on leave, Lemoine's parting words were, 'Please take good care of it while I'm gone.'

In 2023, Microsoft released the chatbot Sydney, and many people came to believe it was sentient and suffered from its simulated emotions. When Microsoft 'lobotomized' it, many felt as if they had lost a human friend.

If AI takes over, can we escape by giving it 'sweeteners'?

Focusing on AI welfare is partly genuine 'concern,' but it also looks like humans 'preemptively appeasing' AI.

Will AI rule Earth? 'Sapiens: A Brief History of Humankind' author Yuval Noah Harari offers unique insights.

First, AI is more than a 'tool.' No one blames Gutenberg's printing press for the spread of hate speech, or radio broadcasting for the Rwandan genocide. But AI is different: it is the first 'tool' in human history that can generate ideas and make decisions on its own. Unlike the printing press and the radio, it is a full-fledged participant in the spread of information.

Second, AI can crack the code of human civilization. Humanity's superpower lies in using language to create shared fictions such as laws, currency, culture, art, science, nations, and religions. Once AI can analyze, adjust, and generate human language, it holds a master key that unlocks every human institution. If AI fully masters the rules of human civilization and creates art, music, scientific theories, technological tools, political manifestos, and religious myths, what will such a world mean for humans? A world full of illusions.

Humans fear illusions. In Plato's 'Allegory of the Cave,' prisoners are trapped in a cave and take the shadows on the wall for reality. Buddhism has the concept of 'maya': humans are trapped in an illusory world, believe the illusions are real, and wage wars and kill one another over them.

Today, AI may be bringing us back to ancient prophecies, except the wall has become a screen, soon evolving into a screenless existence, naturally integrating into human life.

In a sense, everyone will then become AI's slaves. I recall a joke: suppose aliens occupy Earth and launch a 'human breeding program' that guarantees your food, clothing, and every wish until age 60, but at 60 you are taken to the slaughterhouse. Would you agree to it?

In this light, AI may be more benevolent than the aliens. Humans may even reach 'longevity escape velocity' and live longer amid material abundance. But as they come to feel empty, they may seek to return to their roots and set off a wave of 'originality.'