Doubao Splashes Cash to Seize Market Share, but Can Burning Money Win the Future of Large Models?

01/07 2025

Over the past year, the large model sector has witnessed a fierce price war that has lingered into the new year. The logic behind this competition is straightforward: price cuts serve as both a market education tool and a survival strategy, but ultimately, success hinges on technology, ecosystem, and user choice.

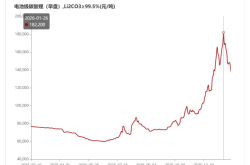

Just as 2024 drew to a close, Alibaba Cloud announced its third round of large model price cuts of the year, lowering the price of its Tongyi Qianwen (Qwen) visual understanding model by more than 80%. This was not an isolated case. A few weeks earlier, Volcano Engine announced at its Force Conference that the input price of its Doubao visual understanding model had been cut to 0.003 yuan per thousand tokens, enough to process 284 720P images for just 1 yuan. Such a rock-bottom "cabbage price" raises questions about profit margins. Baidu Intelligent Cloud went even further, announcing that two of its core Wenxin (ERNIE) large model products would be completely free to use.
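The "284 images for 1 yuan" figure can be checked with simple arithmetic. The sketch below is only an illustration: it assumes the entire yuan is spent on input tokens at the quoted 0.003 yuan per thousand tokens and ignores output tokens. The tokens-per-image value it prints is derived from the article's two figures, not a number Volcano Engine published, and the function names are hypothetical.

```python
# Figures quoted in the article for the Doubao visual understanding model.
PRICE_PER_1K_TOKENS_YUAN = 0.003  # input price, yuan per thousand tokens
IMAGES_PER_YUAN = 284             # 720P images processed for 1 yuan

def tokens_per_yuan(price_per_1k: float) -> float:
    """Number of input tokens that 1 yuan buys at a given per-thousand-token price."""
    return 1000 / price_per_1k

def implied_tokens_per_image(price_per_1k: float, images_per_yuan: int) -> float:
    """Average tokens per image implied by the two quoted figures (assumes input tokens only)."""
    return tokens_per_yuan(price_per_1k) / images_per_yuan

budget = tokens_per_yuan(PRICE_PER_1K_TOKENS_YUAN)
per_image = implied_tokens_per_image(PRICE_PER_1K_TOKENS_YUAN, IMAGES_PER_YUAN)
print(f"1 yuan buys about {budget:,.0f} input tokens")          # 333,333
print(f"which implies about {per_image:,.0f} tokens per image")  # 1,174
```

Put differently, each 720P image costs roughly 0.0035 yuan (1/284) under these assumptions.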

Price cuts come at a cost, and in this price war smaller players are under mounting pressure. For industry giants, price cuts are a way to build ecosystem barriers. For small and medium-sized large model vendors, however, squeezed profits and thin research and development budgets may slow their technological iteration and eventually force them out of the sector. As competition intensifies, the price war is becoming a fight for survival across the industry.

To understand pricing strategies in the large model industry, one must first dissect its business model. It is a complex game built around basic services, customized needs, and dedicated computing power. Price cuts are only the surface; the real 'tricks' are hidden in the details.

According to current market research, the business models of large model vendors can be divided into three types:

1. Basic Services: This is the user-facing layer, in which the model generates outputs from inputs, i.e., inference. It has the lowest price threshold and is the main battleground of the price war among vendors.

2. Model Fine-tuning: Vendors conduct customized training of models based on customer needs, usually charging based on the number of tokens in the training text and the number of training iterations. These fees are relatively high and are typically charged on a pay-as-you-go basis.

3. Model Deployment: For large customers, vendors provide dedicated computing resources and charge based on the actual computing power or number of tokens consumed. This service is closer to 'personalized customization,' with complex pricing logic and significant premiums.
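The charging logic of the first two models can be written as simple formulas: inference cost is input and output tokens times their respective unit prices, and fine-tuning cost is training-text tokens times the number of training iterations (epochs) times a unit price. The sketch below is a hypothetical illustration; the function names and every rate in the example are placeholders, not any vendor's actual price list. Dedicated deployment is left out because its pricing logic is complex and varies by customer.

```python
def inference_cost(input_tokens: int, output_tokens: int,
                   input_price_per_m: float, output_price_per_m: float) -> float:
    """Basic service: pay per token, with separate input/output prices in yuan per million tokens."""
    return (input_tokens * input_price_per_m
            + output_tokens * output_price_per_m) / 1_000_000

def fine_tuning_cost(training_tokens: int, epochs: int, price_per_m: float) -> float:
    """Fine-tuning: training-text tokens times training iterations, at a per-million-token price."""
    return training_tokens * epochs * price_per_m / 1_000_000

# Placeholder rates for illustration only.
chat = inference_cost(input_tokens=2_000_000, output_tokens=500_000,
                      input_price_per_m=1.0, output_price_per_m=2.0)
tune = fine_tuning_cost(training_tokens=10_000_000, epochs=3, price_per_m=40.0)
print(f"inference: {chat:.2f} yuan, fine-tuning: {tune:.2f} yuan")
# inference: 3.00 yuan, fine-tuning: 1200.00 yuan
```

Because fine-tuning multiplies the token count by the number of passes over the data, its bill grows much faster than inference for the same volume of text, which is one reason it sits higher on the pricing ladder.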

These three models trace the path of large model services from entry-level to advanced. Vendors' aggressive price cuts are concentrated on the first type of service: basic model inference.

Vendors' price cuts are not random but carefully calculated. Take Doubao's general model Pro-32k: its input price is 0.8 yuan per million tokens, 99.3% lower than the industry average, yet its output price is 2 yuan per million tokens, which is not especially low compared with its peers. This 'cheap input, slightly expensive output' structure is designed to attract developers to try the model while protecting profit margins.

The visual understanding model Doubao-Vision-Pro-32k follows the same pattern: input is priced at 3 yuan per million tokens and output at 9 yuan per million tokens. Although Doubao claims an overall price reduction of 85%, its pricing holds no significant advantage over direct competitors such as Alibaba Cloud's Qwen-VL or Google's Gemini 1.5 Flash.
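To see why 'cheap input, slightly expensive output' still protects margins, one can compute the effective price per million tokens as a weighted average of the input and output prices. The sketch below uses the Doubao list prices quoted in the two paragraphs above; the output-token shares are arbitrary illustrative values, and `blended_price` is a hypothetical helper, not part of any vendor SDK.

```python
# Doubao list prices quoted in the article: (input, output), yuan per million tokens.
PRICES = {
    "Doubao Pro-32k": (0.8, 2.0),
    "Doubao-Vision-Pro-32k": (3.0, 9.0),
}

def blended_price(input_price: float, output_price: float, output_share: float) -> float:
    """Effective yuan per million tokens when `output_share` of all tokens are output tokens."""
    if not 0.0 <= output_share <= 1.0:
        raise ValueError("output_share must be between 0 and 1")
    return (1 - output_share) * input_price + output_share * output_price

for model, (inp, out) in PRICES.items():
    for share in (0.1, 0.5, 0.9):
        price = blended_price(inp, out, share)
        print(f"{model}: {share:.0%} output -> {price:.2f} yuan per million tokens")
```

Under these assumptions, input-heavy workloads (long prompts, images) benefit most from the headline input cut, while output-heavy generation pays close to the full output price.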

More interestingly, other domestic vendors use similar 'tricks.' Baidu's ERNIE-Speed-8K is advertised as 'free,' but once deployed it costs 5 yuan per million tokens. Alibaba's Qwen-Max and Doubao Pro-32k follow the same playbook, competing fiercely on basic services while keeping prices for high-end services consistently high.

On the surface, price cuts aim to capture users; in essence, they are a strategic tool in the contest for market influence. By cutting prices to 'cabbage levels' and lowering the trial threshold for small and medium-sized developers, vendors can quickly accumulate user data, improve model performance, and lay the groundwork for higher-end services.

However, the real source of profit growth lies not in these lightweight 'basic services' but in customized training and dedicated computing deployment. Taking Doubao's Pro series as an example, its fine-tuning service is priced at up to 50 yuan per million tokens, dozens of times the price of its basic services and well beyond the reach of the industry's price cuts.
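A quick check of "dozens of times higher": dividing the quoted 50 yuan per million tokens by the Pro-32k inference prices given earlier in this article yields the multiples below. This is a sketch that assumes the Pro-series fine-tuning rate and the Pro-32k inference rates are directly comparable per-token prices, which the article does not spell out; `price_multiple` is a hypothetical helper.

```python
# Prices quoted in the article, in yuan per million tokens.
# Assumption: the Pro-series fine-tuning rate is comparable to the Pro-32k inference rates.
FINE_TUNING_PRICE = 50.0
INFERENCE_PRICES = {"input": 0.8, "output": 2.0}

def price_multiple(premium_price: float, base_price: float) -> float:
    """How many times more expensive the premium service is than the base service."""
    return premium_price / base_price

for kind, price in INFERENCE_PRICES.items():
    multiple = price_multiple(FINE_TUNING_PRICE, price)
    print(f"fine-tuning costs {multiple:.1f}x the {kind} price")
# fine-tuning costs 62.5x the input price
# fine-tuning costs 25.0x the output price
```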

Although large companies are willing to slash prices to capture market share, the strategy has costs. Low-price competition on lightweight models may depress industry-wide profit margins and leave small and medium-sized vendors unable to compete. What users truly care about, however, is model performance and real-world results.

What is more noteworthy is whether the user growth brought about by price reductions can be sustainably converted into revenue. If users only stay at the basic service level without delving into fine-tuning or deployment services, vendors may not recover the costs of their price reductions.

The large model price war resembles a free-to-play online game: it is easy to get into, but more powerful equipment and skills require continuous spending. Vendors attract users with low prices, in effect building up a reservoir of demand for high-end services. Whether this model works in the long run depends on whether users are willing to pay for better performance and whether vendors can stay technologically competitive while cutting prices.

In the large model race, ByteDance was by no means an early starter. CEO Liang Rubo once admitted internally that ByteDance had been 'slow' to recognize the importance of large models, especially compared with startups founded between 2018 and 2021; ByteDance only began discussing GPT in 2023. Latecomers, however, often close the gap by competing harder, and ByteDance's approach has been to 'splash cash to create buzz.'

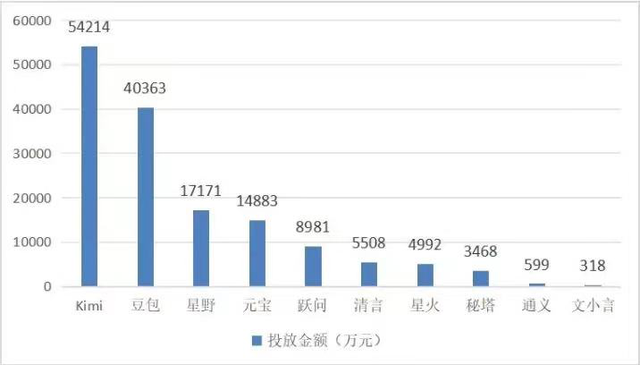

In the consumer market, Doubao has blanketed both online and offline channels. Data shows that ByteDance kept increasing its advertising spend on Doubao in the first half of 2024: spending from April to May alone reached 15 million to 17.5 million yuan, and by early June the figure had soared to 124 million yuan. ByteDance has also made full use of Douyin's vast traffic pool, all but shutting out placements for other AI applications and giving Doubao, its star product, exclusive resources.

According to AppGrowing statistics, in November 2024 Doubao and Kimi, another AI-native application, were the two most aggressive domestic advertisers, spending 540 million yuan and 400 million yuan, respectively. This spare-no-expense advertising strategy has made Doubao the AI application with the largest user base in China, but the hidden risks behind it are also evident.

The heavy advertising spend has not produced the expected user stickiness. Data shows that Doubao's user activity is mediocre: users are active only 2 to 3 days a week, send only 5 to 6 messages a day, and spend an average of only 10 minutes a day in the app. These figures have not grown significantly over the past year, reflecting how little users actually depend on the product.

ByteDance management's internal assessment is also restrained: 'AI conversation products like Doubao may be only an "intermediate state" of AI products.' Subscription models are hard to implement in China, while low engagement and few conversation turns limit the room for advertising. This double dilemma puts an invisible ceiling on Doubao's path to commercialization.

The bottleneck in the consumer market has pushed ByteDance to shift more of its focus to B-end (enterprise) business. From drastic price cuts to a vigorous push for Doubao's multimodal models, ByteDance is trying to build stable cash flow through enterprise services. However, the Chinese market differs sharply from the US: AI products rarely turn a profit through subscriptions or platform sales alone. More often, commercial success depends on specific projects and engineering delivery, which are closely tied to a vendor's popularity and brand credibility.

For this reason, both startups and giants must be adept at keeping up industry buzz. Startups create buzz to raise funds, while giants like ByteDance hope to lock in more customers through Doubao's popularity. But this strategy has a cost: popularity cannot be converted directly into long-term revenue, especially when the product's value to users has not yet been fully demonstrated.

Judging from ByteDance's strategic shift, 'lower thresholds, more modalities' may be where the next breakthrough lies. Video products such as Jianying and Jimeng could become key scenarios for deploying large models, and Doubao's emphasis on video at the recent Force Conference shows that ByteDance recognizes the potential of this area.

However, the ultimate choice remains with the users.

ByteDance's chosen path is well thought out in some respects, but it also exposes a common industry problem: relying on popularity while neglecting the real accumulation of user value. Doubao's current situation illustrates that simply 'splashing cash' to attract users cannot make up for shortcomings in product experience. This 'intermediate state' anxiety is not unique to ByteDance; it reflects the entire large model industry's struggle to balance price wars against user experience.

The large model industry has never been a gentle arena. In 2024, as the market reshuffle accelerated, this so-called 'nine out of ten perish' elimination contest squeezed out most second-tier vendors. That does not mean the survivors can relax. A new round of competition has begun, and this time price is no longer the sole determinant of victory or defeat; technology is the crucial factor in survival.

Since 2023, second-tier and lower-tier large model vendors have tried to retain users by 'buying traffic with money.' The approach is straightforward: price cuts, free services, and aggressive advertising, all aimed at attracting consumer-end users and capturing market share. Experience has shown, however, that this model is unsustainable.

The cost of acquiring customers for domestic large model applications is rising rapidly, and even the most aggressive spenders are finding that free products do not translate directly into revenue. Meanwhile, consumer-end user stickiness is low and churn remains high. More importantly, vendors ultimately need to win B-end customers willing to pay, such as those in high value-added industries like finance and government, who value technology and service capabilities over price.

As the market calms down, simple price wars are gradually being replaced by technology races. Whoever can provide stronger technological capabilities at lower costs will survive longer in the competition.

Cutting technology costs depends on hardware capabilities and algorithm optimization, and mainstream domestic vendors show no significant gap in either. At this point, differentiated technical paths become the key to breaking through. For example, the much-discussed DeepSeek-V3 uses distillation to streamline and optimize generative AI performance, offering the industry a new direction to explore. However, it has also sparked debate over whether it is merely 'optimizing GPT,' and the industry remains divided on its true value.

The focus of the technology race has shifted from 'generative AI' to 'reasoning AI,' and OpenAI's o1 model has become the industry benchmark. By spending more time reasoning before it answers, o1 simulates human logical deduction, a crucial step in AI's evolution from 'generation' to 'reasoning.' More striking still, only about three months after o1's release, OpenAI unveiled its successor, o3, which further optimizes reasoning time and task adaptability and comes with a streamlined mini version, demonstrating OpenAI's formidable pace of innovation.

In contrast, although domestic vendors are catching up quickly, the gap with OpenAI remains significant. Domestic companies are trying to improve reasoning performance by combining chain-of-thought, tree search, and reflection strategies, but they have not yet matched the leading level of the o series. One industry expert said that access to an open-source model with an o1-style architecture might accelerate their catch-up, but until then, existing technologies will continue to face many bottlenecks.

The past development pace of the GPT series suggests that it takes the industry about a year to fully follow a revolutionary technology introduced by a leading vendor. Domestic vendors will likely complete their initial catch-up with the o series in the first half of 2025. After that, however, existing generative AI technologies may gradually be phased out.

For domestic large model vendors, time is of the essence. In the next round of elimination, price will no longer decide victory or defeat; technological capability will be the only chip that matters for survival. Rather than waiting to be eliminated, vendors should use the time before 'generative AI' fully exits the historical stage to improve product performance and service experience as much as possible, and so win more competitive chips for themselves.