NVIDIA GTC 2025: Revolutionizing AI Physicalization - From Agentic AI to Physical AI

03/19 2025

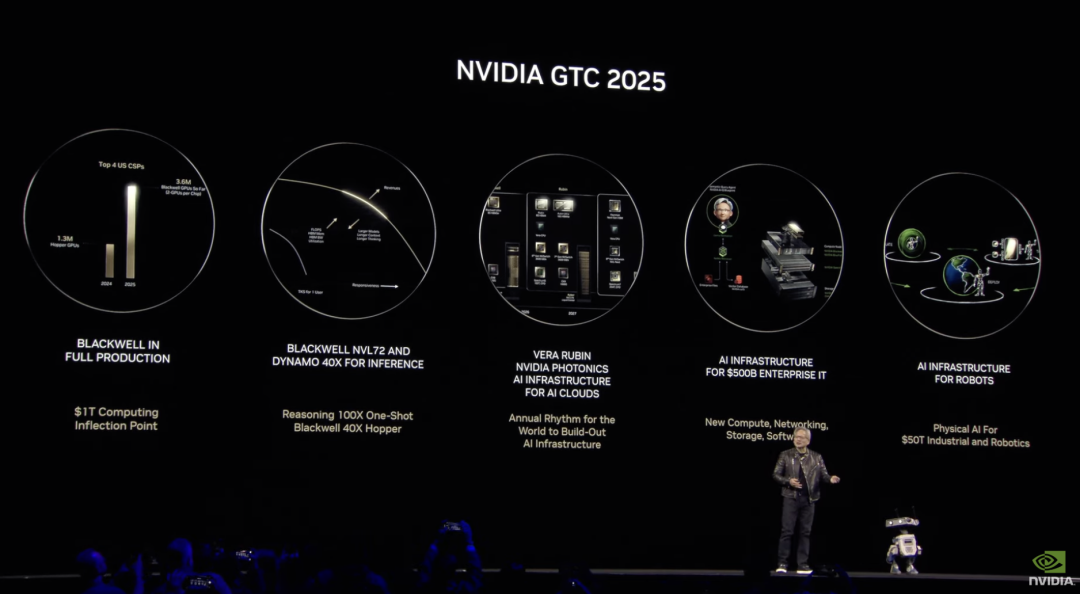

At the 2025 GTC conference, NVIDIA CEO Jen-Hsun Huang took the stage in his signature black leather jacket and delivered a packed keynote to a worldwide audience of technology enthusiasts. NVIDIA unveiled several new products and technologies, offering a comprehensive view of its AI strategy and of where it expects the technology to go next. From successive generations of AI chips to advances in embodied intelligence, NVIDIA is pushing AI into a new era through innovation across its entire stack.

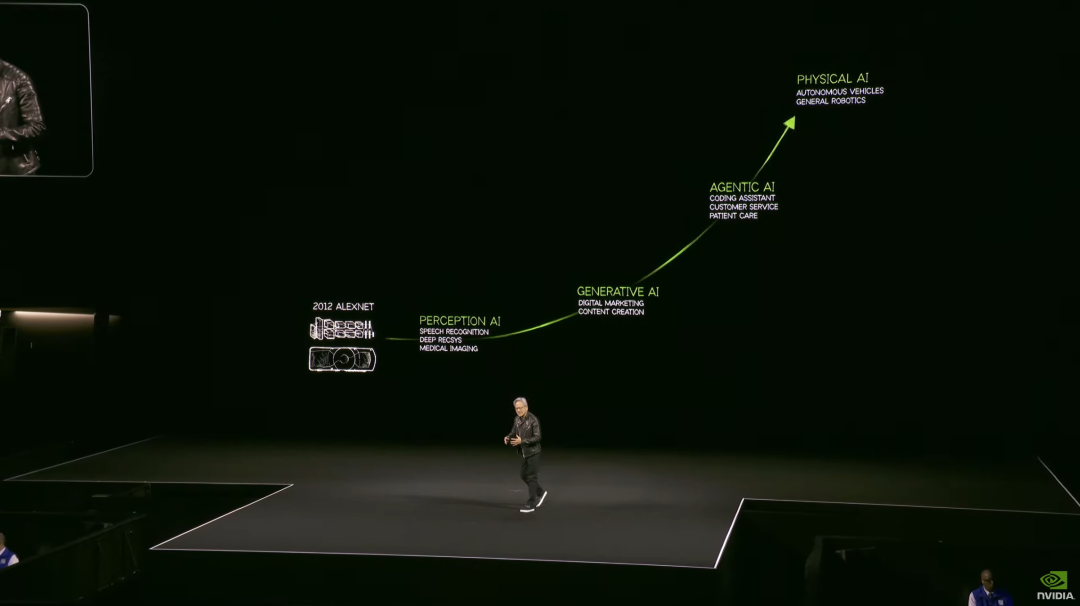

Evolution of AI Technology: From Perception to Physics

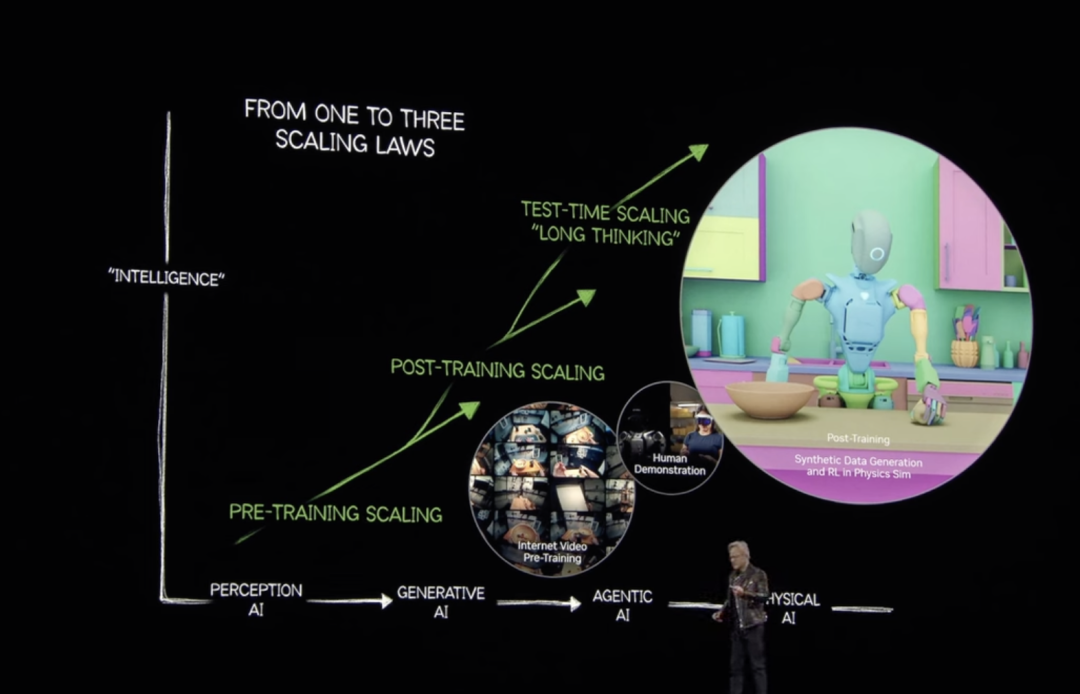

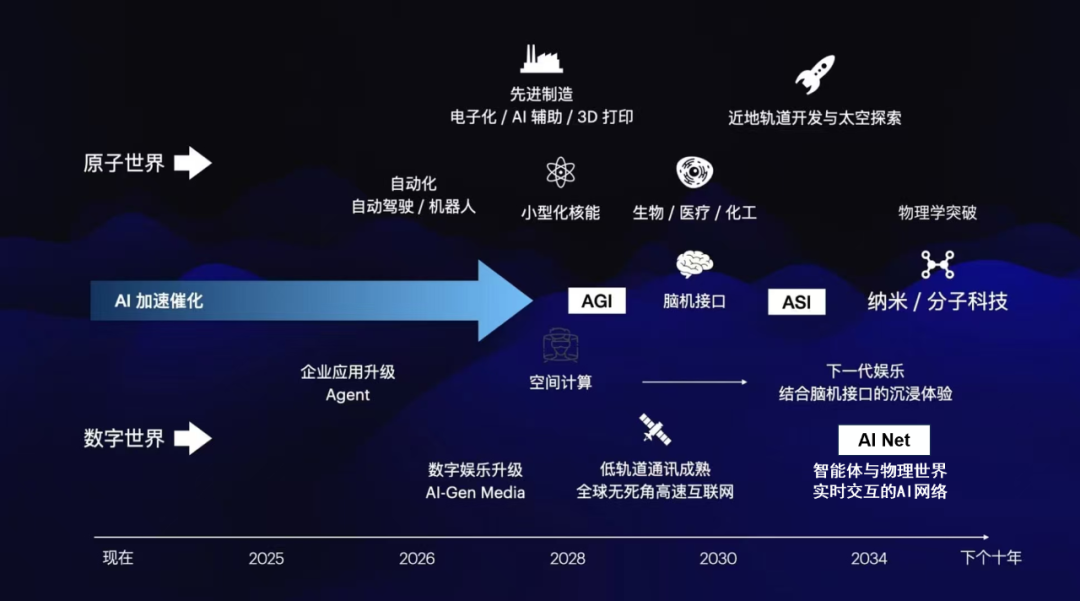

AI's journey began with discriminative AI (speech recognition, image recognition), evolved into generative AI, and now stands at the cusp of Agentic AI, with Physical AI poised to revolutionize the physical world. This evolution underscores AI's transition from simple task processing to complex reasoning and deep physical world interaction. NVIDIA has been instrumental at every stage, pushing AI boundaries through relentless hardware and software innovation.

From 'Compute Monster' to 'Energy Efficiency Revolution'

In AI chips, NVIDIA has never hidden its competitive ambitions. At GTC 2025, Jen-Hsun Huang introduced the Blackwell Ultra and Vera Rubin architectures to meet the industry's soaring demand for inference compute. Blackwell Ultra's core innovation lies in the co-evolution of liquid cooling and silicon photonics. Built on TSMC's 4NP process, it integrates 288GB of HBM3e memory and delivers 15 PetaFLOPS of FP4 compute, and in its NVLink 72 rack system it achieves a 40x inference speedup over the Hopper architecture. Crucially, NVIDIA extends liquid cooling to the rack level, enabling over 1 ExaFLOPS of compute per rack while reducing energy consumption by 30%. This 'powerful elegance' is aimed squarely at the token-throughput demands of the Agentic AI era.

However, Jen-Hsun Huang's vision extends further. The Vera Rubin architecture, due in 2026, introduces NVLink 144, integrating 144 GPUs with HBM4 memory for 3.6 ExaFLOPS of compute, a 3.3x performance leap over Blackwell Ultra. The 2028 Feynman architecture is named after physicist Richard Feynman, an early proponent of quantum computing, and may signal a convergence of quantum computing and AI, hinting at NVIDIA's ambitions in the quantum field.
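
The rack-level figures quoted above can be cross-checked with simple arithmetic. This is a minimal sketch, assuming the 15 PetaFLOPS FP4 figure applies to each of the 72 GPUs in an NVLink 72 rack and that compute scales perfectly linearly across the rack, which real systems only approximate.

```python
# Back-of-the-envelope check of the rack-level FP4 figures quoted in the text.
# Assumption: ideal linear scaling of the per-GPU figure across the whole rack.
PFLOPS_PER_EXAFLOPS = 1000

blackwell_ultra_gpu_pflops = 15    # FP4 per GPU (from the text)
nvl72_gpus = 72                    # GPUs in an NVLink 72 rack (from the text)
vera_rubin_nvl144_exaflops = 3.6   # FP4 per NVLink 144 rack (from the text)

def rack_exaflops(per_gpu_pflops: float, gpus: int) -> float:
    """Aggregate rack compute in ExaFLOPS under ideal linear scaling."""
    return per_gpu_pflops * gpus / PFLOPS_PER_EXAFLOPS

blackwell_ultra_rack = rack_exaflops(blackwell_ultra_gpu_pflops, nvl72_gpus)
speedup = vera_rubin_nvl144_exaflops / blackwell_ultra_rack

print(f"Blackwell Ultra NVL72: {blackwell_ultra_rack:.2f} EFLOPS")
print(f"Vera Rubin NVL144 vs Blackwell Ultra NVL72: {speedup:.1f}x")
```

The result (about 1.08 ExaFLOPS per rack, and roughly 3.3x for Vera Rubin) is consistent with the "over 1 ExaFLOPS" and "3.3x" claims.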

Yet challenges lurk beneath this chip frenzy. Although Jen-Hsun Huang claims that Blackwell orders have 'exceeded expectations,' supply chain reports point to production delays and cost pressures that are testing NVIDIA's 'hardware myth.' Meanwhile, more efficient models such as DeepSeek R1, which lower inference costs, call NVIDIA's pricing power into question. In a competitive AI chip market, NVIDIA must prove that an efficiency revolution beats simply stacking raw compute.

Leveraging Open-Source Models in the $38 Billion Market

If chips are AI's 'muscles,' robots are its 'limbs.' At GTC 2025, NVIDIA proclaimed the 'era of general-purpose robotics' with GR00T N1 and the Newton physics engine. GR00T N1's breakthrough lies in its dual-system architecture. System 1 (fast thinking) converts commands into precise actions within 300 milliseconds, drawing on 780,000 motion trajectories generated in Omniverse; System 2 (slow thinking) handles complex commands and multi-step tasks using a vision-language model. Inspired by human cognition, this design lets robots respond quickly while still following abstract instructions. Crucially, NVIDIA open-sourced GR00T N1 together with training data and evaluation scenarios, allowing developers to deploy specialized robots with just 20% custom data.
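
The split between a slow planner and a fast controller can be pictured as two loops running at different rates: the planner runs once per instruction, the policy runs on every control tick. The sketch below is hypothetical: `SlowPlanner`, `FastPolicy`, and the string-splitting "planner" are illustrative stand-ins, not GR00T N1's models or API. In the real system, System 2 is a vision-language model and System 1 emits continuous motor actions.

```python
from __future__ import annotations

class SlowPlanner:
    """Hypothetical stand-in for 'System 2': decomposes an instruction into subgoals.
    A real system would query a vision-language model here."""
    def __init__(self) -> None:
        self.calls = 0

    def plan(self, instruction: str) -> list[str]:
        self.calls += 1
        # Toy decomposition: split a multi-step command on the word "then".
        return [step.strip() for step in instruction.split("then") if step.strip()]

class FastPolicy:
    """Hypothetical stand-in for 'System 1': maps the current subgoal to a command
    on every control tick. A real policy would output joint-level actions."""
    def __init__(self) -> None:
        self.calls = 0

    def act(self, subgoal: str, tick: int) -> str:
        self.calls += 1
        return f"tick {tick}: execute '{subgoal}'"

def run(instruction: str, ticks_per_subgoal: int = 3):
    planner, policy = SlowPlanner(), FastPolicy()
    subgoals = planner.plan(instruction)          # slow path: once per instruction
    actions, tick = [], 0
    for subgoal in subgoals:
        for _ in range(ticks_per_subgoal):        # fast path: every control tick
            actions.append(policy.act(subgoal, tick))
            tick += 1
    return actions, planner, policy

actions, planner, policy = run("pick up the cup then place it on the tray")
for a in actions:
    print(a)
```

The design point is the rate mismatch: the expensive reasoning step is invoked once, while the cheap reactive step runs many times, which is what lets the robot respond quickly without re-planning on every tick.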

To support this, NVIDIA collaborated with Google DeepMind and Disney Research to launch the Newton physics engine. It simulates rigid bodies, soft bodies, and fluids, and uses GPU acceleration to train faster than real time, a 70x efficiency boost. The on-stage demonstration by Blue, a Disney BDX droid, shows the key role physics engines play in turning robots from 'tools' into 'partners.'

However, the robotics revolution's real challenge lies in the 'data loop.' NVIDIA's answer is a 'real-virtual-real' cycle: collect a small amount of real data, generate vast numbers of variants in Omniverse, and optimize training through the Cosmos platform. In NVIDIA's example, 780,000 synthetic trajectories, equivalent to about nine months of human demonstrations, were generated in just 11 hours, and combining them with real data improved GR00T N1's performance by 40%. Recognizing this advantage, General Motors chose NVIDIA's simulation environment to train its autonomous driving models and bridge the gap between simulation and reality.
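
The "real-virtual-real" loop can be sketched as: start from a few real demonstrations, multiply them into many randomized synthetic variants, and train on the mix. This is a minimal sketch under stated assumptions: trajectories are toy lists of joint positions, and `generate_variants` uses random noise as a hypothetical stand-in for simulator-based generation (randomizing poses, lighting, friction). The final "real" step, deploying and validating on hardware, is not modeled. The last lines check the wall-clock compression implied by "nine months in 11 hours," assuming 30-day months.

```python
import random

def generate_variants(real_traj, n_variants, noise=0.05, seed=0):
    """'Virtual' step: perturb one real trajectory into many synthetic variants.
    Hypothetical stand-in for simulator-based generation."""
    rng = random.Random(seed)
    return [[x + rng.uniform(-noise, noise) for x in real_traj]
            for _ in range(n_variants)]

def build_dataset(real_trajs, variants_per_real):
    """Mix the few real demonstrations with many synthetic ones for training."""
    dataset = [list(t) for t in real_trajs]
    for i, traj in enumerate(real_trajs):
        dataset.extend(generate_variants(traj, variants_per_real, seed=i))
    return dataset

# "Real" step: a handful of demonstrations (toy 1-D joint positions).
real = [[0.0, 0.1, 0.2], [0.0, 0.2, 0.4]]
data = build_dataset(real, variants_per_real=100)
print(len(data))  # 2 real + 200 synthetic = 202

# Wall-clock compression: ~9 months of demonstrations generated in 11 hours.
hours_nine_months = 9 * 30 * 24  # 6,480 h, assuming 30-day months
print(f"~{hours_nine_months / 11:.0f}x faster")  # ~589x faster
```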

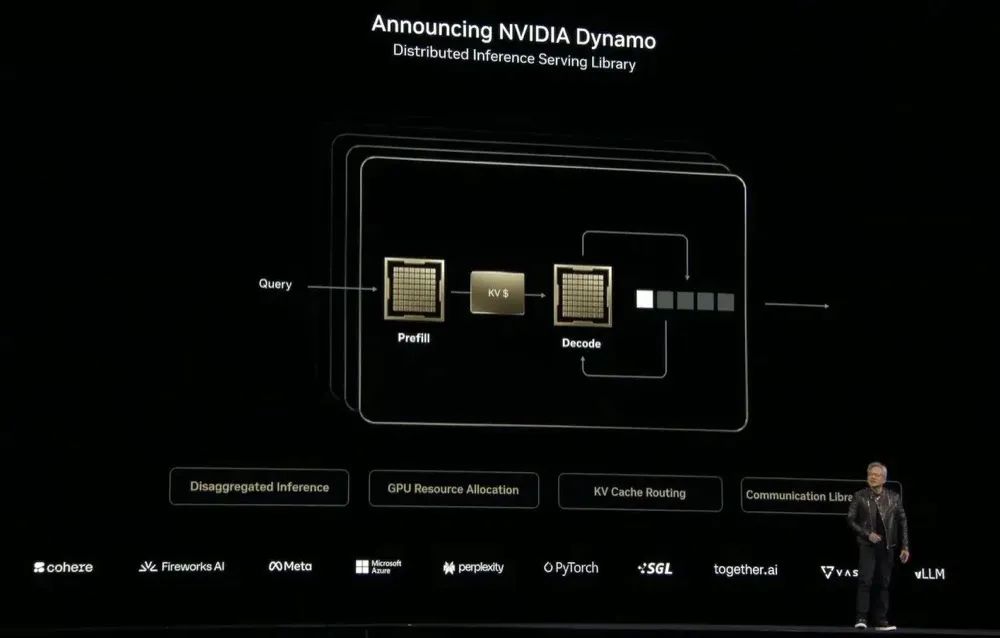

Dynamo: Reshaping Computational Economics

In Jen-Hsun Huang's blueprint, the 'AI factory' will become standard equipment for future enterprises. The goal is not simply to stack compute but to strike the optimal balance between 'token throughput' and 'response speed.' To this end, NVIDIA introduced Dynamo, an open-source distributed inference-serving library that dynamically allocates compute across GPUs. The hardware it orchestrates is itself massive: a single liquid-cooled rack integrates some 600,000 components and delivers exascale compute.

Dynamo's core objective is 'maximizing revenue per megawatt.' By optimizing model inference resource scheduling, the Blackwell factory achieves a 25x performance boost over Hopper, increasing cloud service providers' revenue opportunities by 50x. Microsoft and Perplexity's collaboration demonstrates Dynamo's ability to reduce user request response latency to milliseconds, crucial for real-time interactive Agentic AI.
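
The tension between throughput and response speed, and why it matters for revenue per megawatt, can be illustrated with a toy model: larger batches produce more tokens per second in total but make each decode step slower for every user. This sketch does not show how Dynamo schedules work; the linear latency model, the $2-per-million-tokens price, and the 0.1 MW power draw are all hypothetical numbers chosen for illustration.

```python
def step_time_ms(batch, base_ms=20.0, per_seq_ms=0.5):
    """Toy latency model: each decode step costs a fixed overhead plus a
    per-sequence cost. All constants are hypothetical."""
    return base_ms + per_seq_ms * batch

def revenue_per_mw_hour(batch, usd_per_m_tokens=2.0, power_mw=0.1):
    """Dollars earned per megawatt-hour at a given batch size."""
    tokens_per_s = batch * 1000.0 / step_time_ms(batch)  # one token per sequence per step
    usd_per_hour = tokens_per_s * 3600 * usd_per_m_tokens / 1e6
    return usd_per_hour / power_mw

def best_batch(min_user_tokens_per_s, max_batch=512):
    """Most profitable batch size that still meets the per-user speed target."""
    feasible = [b for b in range(1, max_batch + 1)
                if 1000.0 / step_time_ms(b) >= min_user_tokens_per_s]
    return max(feasible, key=revenue_per_mw_hour)

for target in (20.0, 10.0):  # per-user tokens/s: strict vs relaxed latency target
    b = best_batch(target)
    print(f"target {target:>4} tok/s/user -> batch {b}, ${revenue_per_mw_hour(b):.1f} per MWh")
```

With these made-up constants, relaxing the per-user target from 20 to 10 tokens per second lets the batch grow from 60 to 160 and raises revenue per megawatt-hour by a third. An inference scheduler's job is to find such operating points continuously as load changes.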

More radically, NVIDIA introduced two 'desktop-grade AI computers': DGX Spark and DGX Station. The former, roughly the size of a Mac Mini, uses the GB10 chip and starts at $3,000; the latter, a 'desktop data center,' features the GB300 Grace Blackwell Ultra Desktop Superchip and 784GB of unified memory. Jen-Hsun Huang declared, 'This is what a PC should look like.' This marks a shift of AI compute from the cloud toward the edge and sharply lowers the barriers to AI deployment for enterprises and developers.

NVIDIA's 'Full-Stack Moat'

NVIDIA's ambitions extend beyond hardware. At GTC 2025, Jen-Hsun Huang showcased a comprehensive evolution of the CUDA ecosystem. The cuOpt mathematical optimization library, developed with partners including Gurobi and IBM, optimizes logistics and resource scheduling. The Halos safety system, whose 7 million lines of code have undergone safety assessment, is designed to make in-vehicle AI reliable. AI-RAN, a collaboration with Cisco and T-Mobile, builds AI-native wireless networks that optimize 5G signal processing. NVIDIA also announced a quantum computing research center that will study qubit noise using GB200 NVL72 hardware, hinting at a path from classical toward quantum computing and paving the way for the Feynman architecture.
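
To make "optimizing logistics" concrete, here is the kind of problem a routing solver tackles: visit every stop once and return to the depot in minimum total time. This is not cuOpt's API; it is a minimal brute-force sketch over a hypothetical distance matrix for a single vehicle. Exhaustive search is fine for four stops but grows factorially, which is why production solvers rely on heuristics and hardware acceleration for realistic fleet sizes.

```python
from itertools import permutations

# Hypothetical symmetric travel times (minutes) between a depot (0) and 4 stops.
DIST = [
    [0, 12, 10, 19, 8],
    [12, 0, 3, 7, 2],
    [10, 3, 0, 6, 20],
    [19, 7, 6, 0, 4],
    [8, 2, 20, 4, 0],
]

def route_cost(route):
    """Total time for depot -> stops in the given order -> depot."""
    path = (0, *route, 0)
    return sum(DIST[a][b] for a, b in zip(path, path[1:]))

def best_route(stops):
    """Exhaustive search over all visiting orders (n! candidates)."""
    return min(permutations(stops), key=route_cost)

route = best_route([1, 2, 3, 4])
print(route, route_cost(route))
```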

The most notable breakthrough is in 'semantic storage.' NVIDIA, with Box, is creating an 'interactive storage' system where users retrieve data through natural language. This transforms the storage layer from a 'dead hard drive' to an AI's 'knowledge base.'
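
Natural-language retrieval over stored files generally reduces to two steps: turn documents and the query into vectors, then rank documents by similarity to the query. The sketch below is a toy illustration of that idea, not the Box or NVIDIA implementation: the file names are hypothetical, and the bag-of-words "embedding" is a deliberately simple substitute for the learned text-embedding models real systems use.

```python
import math
import re
from collections import Counter

# Hypothetical stored files with short text descriptions.
DOCS = {
    "q3_sales.xlsx": "quarterly sales revenue by region for the third quarter",
    "onboarding.pdf": "new employee onboarding checklist and benefits overview",
    "gpu_cluster.md": "runbook for the gpu cluster liquid cooling maintenance",
}

def embed(text):
    """Toy 'embedding': a bag-of-words count vector (assumption; real systems
    use a learned embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def search(query, k=1):
    """Return the k file names whose descriptions best match the query."""
    q = embed(query)
    ranked = sorted(DOCS, key=lambda name: cosine(q, embed(DOCS[name])), reverse=True)
    return ranked[:k]

print(search("how do I maintain the liquid cooling on the GPU cluster?"))
```

Note that "maintain" does not match "maintenance" here; closing that kind of vocabulary gap is exactly what learned embeddings add over word counts.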

Market Doubts Behind the Stock Price Plunge

Despite impressive technology, the capital market's reaction to GTC 2025 was subdued. On the keynote day, NVIDIA's stock price closed down 3.2%, with a year-to-date decline of over 16%. Market doubts center on supply chain risks, cost sensitivity, and intensifying competition. Jen-Hsun Huang acknowledged, 'The outside world once misjudged AI expansion's speed, but now we need to convince the world of the future.' This reveals NVIDIA's core contradiction: the tension between its advanced technological vision and the slower pace of market adoption.

Agent Collaboration in the AI Network Ecosystem

In the Physical AI era, the limiting factor is shifting from the capabilities of a single agent to the collaborative efficiency of many agents. While GTC 2025 focused on hardware, the industry's quieter battle has moved to building an 'AI network ecosystem.' In this view, the ultimate form of AI is not a single super brain but countless small, specialized intelligent nodes collaborating over a network.

Dynamic sparsification, which skips computation on values that contribute little to a given result, sharply raises effective floating-point efficiency and cuts inference costs, allowing small and medium-sized enterprises (SMEs) to deploy complex reasoning models. Combining edge intelligence with cloud collaboration removes traditional AI's dependence on central nodes and lays the foundation for distributed agent networks. Flexible collaboration technology, in turn, opens the door to 'swarm intelligence' for robots. In the future, liquid joint drives and tactile neural networks could let robots sense changes in their physical environment much as humans do, and 'swarm intelligence' algorithms could coordinate multiple robots.
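
The idea behind dynamic sparsification can be shown with a matrix-vector product: for each input, keep only the largest-magnitude activations and skip the weight columns that would multiply the near-zero ones. "Dynamic" means the kept set is chosen at run time from the actual input, unlike static weight pruning. This is a minimal pure-Python sketch assuming top-k selection as the sparsification rule; it counts multiply-accumulates rather than measuring speed, since real GPU savings depend on kernels and hardware support for sparse execution.

```python
def dense_matvec(W, x):
    """Dense y = W @ x. Returns (y, multiply-accumulate count)."""
    y = [sum(w * xi for w, xi in zip(row, x)) for row in W]
    return y, len(W) * len(x)

def topk_sparse_matvec(W, x, k):
    """Dynamic (input-dependent) sparsity: keep only the k largest-magnitude
    activations of this input and skip the matching weight columns."""
    keep = sorted(range(len(x)), key=lambda i: abs(x[i]), reverse=True)[:k]
    y = [sum(row[i] * x[i] for i in keep) for row in W]
    return y, len(W) * k

W = [[1.0] * 8, [0.5] * 8, [-1.0, 1.0] * 4]
x = [0.9, 0.01, -0.02, 0.0, 0.8, 0.03, -0.01, 0.0]  # most activations near zero

y_dense, macs_dense = dense_matvec(W, x)
y_sparse, macs_sparse = topk_sparse_matvec(W, x, k=2)
print(macs_dense, macs_sparse)  # 24 6
print(max(abs(a - b) for a, b in zip(y_dense, y_sparse)))  # small approximation error
```

Here a quarter of the arithmetic yields outputs within 0.1 of the dense result, because most activations are close to zero; the trade-off is a small, input-dependent approximation error.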

The MogoMind AI network is building a super agent based on collaboration among vehicles, roads, the cloud, and people. Unlike traditional large AI models, this AI network offers multimodal understanding, spatiotemporal reasoning, and adaptive evolution. It processes not only digital-world data such as text and images but also real-time data from the physical world, giving it genuine physical-world perception and cognition. By connecting autonomous driving, the low-altitude economy, embodied intelligence, intelligent transportation, smart cities, and personal AI assistants, it provides real-time data and precise decision support, building a seamless intelligent ecosystem that bridges the physical and digital worlds.

The 'Cambrian Explosion' of AI

GTC 2025's keynote was a 'technological declaration' about AI's future. NVIDIA not only pushed hardware to its limits but also sketched a panorama of AI moving from the digital world into the physical one: robots navigating factories and homes, autonomous fleets restructuring urban transportation, and the convergence of quantum computing and AI catalyzing a new scientific revolution. Jen-Hsun Huang's ambition is to make NVIDIA the 'operating system' of this revolution, from chips to robots, from the cloud to the edge, and from code to physical interaction, building a full-stack intelligent ecosystem. However, just as the Cambrian explosion required a suitable environment, AI's 'physicalization' faces its own obstacles: technological bottlenecks, ethical controversies, and market acceptance. Can NVIDIA keep its lead in this long race? Perhaps the answer lies in Jen-Hsun Huang's own words: 'AI is the ultimate productivity tool, and NVIDIA is building the ultimate infrastructure for it.' As chips, robots, and AI factories weave together into a network and the boundary between humans and agents blurs, we stand at the starting point of this revolution.