Large Model Difficulties and Zhou Hongyi's Rescue Effort

08/09 2024

Author | Yang Zi

Edited by Liu Jingfeng

Even OpenAI, standing at the pinnacle of large model development, is going through a difficult stretch. Just recently, John Schulman, one of OpenAI's co-founders, announced on social media that he is leaving to join rival Anthropic. Meanwhile, Greg Brockman, another co-founder and OpenAI's president, is reported to be extending his leave of absence until the end of this year to "relax and recharge." Peter Deng, who joined OpenAI last year as head of product, has also left. Although the specific reasons for these departures remain unclear, they reflect the awkward position some large model companies now find themselves in: as the technology moves past its early phase of rapid iteration, the pressure to commercialize keeps mounting.

The latter problem may be the more worrying one. Last month, foreign media reported that OpenAI faces a serious financial challenge: the company is projected to lose $5 billion this year, on estimated revenue of $3.5 billion to $4.5 billion against total operating costs of $8.5 billion. If the industry leader is struggling, one can only imagine the difficulties facing other large model companies. So where does the problem lie? A common view is that although paid subscriptions make up a significant share of OpenAI's annual revenue, ChatGPT still falls short of being a "killer app," and a single standalone model has inherent limits on what it can do.

At the opening of ISC.AI2024, the 12th Internet Security Conference and AI Summit, held on August 1, Zhou Hongyi, the founder of 360, offered his view on how to solve the AI application problem: first, identify star scenarios; second, recognize that no single model can solve every problem, so multiple models need to work together. Zhou also announced that as of July 2024, 360AI Search had become the most visited AI application in China, with its July traffic surpassing that of the country's other popular AI apps. A way out of the dilemma may be emerging.

Developing large models proved challenging in 2023, as everyone could see. Since ChatGPT's stunning debut, an entrepreneurial fever around large models has swept the world; in China it took the form of a "hundred models war." Starting a large model company, however, differs from past internet startups and is even harder than launching an AI startup a few years ago. First, large models demand a higher level of technical skill, which is why nearly all of China's large model founders are among the most outstanding scientists and entrepreneurs in AI. Second, large models have massive numbers of parameters and require enormous amounts of data and computing power, both of which are expensive. As a result, despite the early buzz of the "hundred models war," only the models of a handful of top-tier startups and major companies have emerged as viable players. This year, the challenge for large models has shifted to commercialization.

Today, almost every large model company is grappling with commercialization, and startups face the steepest challenge. Even OpenAI, with more than $10 billion in funding, is not immune. According to foreign media reports, OpenAI's total operating costs this year could reach $8.5 billion, of which inference accounts for $4 billion and training for $3 billion. If OpenAI's annual revenue comes in at $3.4 billion, it would be left with a shortfall of roughly $5 billion. For other startups, this hurdle is even higher. By contrast, internet companies such as Baidu, 360, Alibaba, and Tencent have clearer paths to commercialization, largely thanks to their extensive business ecosystems. By integrating large models into existing products and services, such as Baidu Wenku's document assistant and 360AI Search, these companies can embed generative AI as an auxiliary feature that boosts user engagement and drives revenue growth. Still, the question remains: what if large models cannot find a viable business model? Zhou Hongyi's answer rests on the two strategies he laid out for AI applications: first, identify star scenarios; second, accept that a single model may not solve every problem and let multiple models collaborate.

"Last year, there was a great deal of excitement around large models, with companies showcasing all kinds of functions and capabilities. There was a misconception that building a chatbot would be enough for users and enterprises to boost their productivity. The reality turned out differently. On reflection, I've come to understand that large models are not products but capabilities. Capabilities are crucial, but they must be integrated into scenarios to create real value and win users," said Zhou Hongyi. Given how hard it proved to put AI into practice a few years ago, someone needs to step forward today and explore how large models can actually be applied.

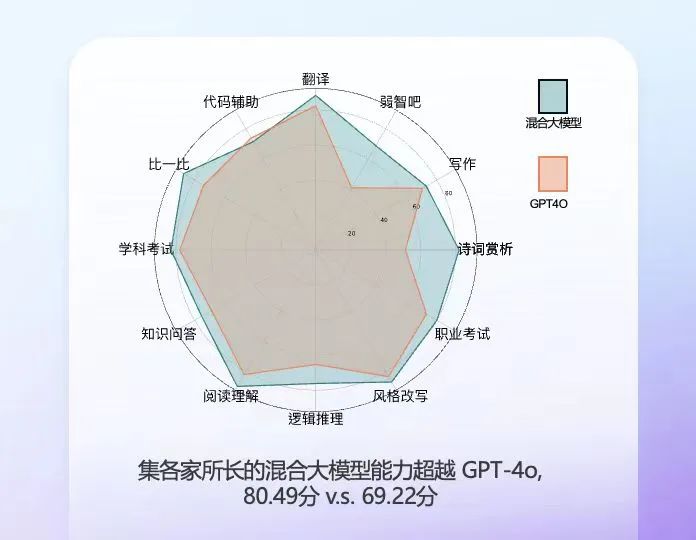

A notable recent development in China's AI sector is Zhou Hongyi's launch of the "AI Assistant," which integrates 360 Brain with the large models of leading domestic players including Zhipu AI, SenseTime, Baidu, Tencent, iFLYTEK, Huawei, MiniMax, 01.AI, ModelBest, Alibaba Cloud, High-Flyer Quant, TAL Education, and Moonshot AI. According to Zhou, China's large model companies already match GPT-4o in individual capabilities, and by pooling the country's top models they can compete with GPT-4o in overall strength. At the ISC.AI Summit, Zhou presented the results of a head-to-head comparison between the hybrid large model and GPT-4o: the AI Assistant, which combines the strengths of the various models, scored 80.4 out of 100, well ahead of GPT-4o's 69.22, and led in 11 capability dimensions.

"If each model focuses on one task and collaborates with the others, we can respond quickly, create real value, and win users," said Zhou Hongyi. That is exactly why 16 leading domestic large model companies have joined forces. "What will our collaboration look like? For example, we will integrate everyone's assistant capabilities into the browser to provide a universal AI assistant," Zhou explained. Once the AI Assistant officially launches, users will be able to reach it quickly through the 360 Security Guard floating ball, the sidebar of 360 Secure Browser and 360 Extreme Browser, or by highlighting text.

Ultimately, the collaboration is about improving the user experience. Compared with other assistants on the market, the AI Assistant, built on the combined strengths of 16 large models, offers three key advantages. First, users can choose among multiple models according to their strengths: although every model claims to surpass GPT-4 or even GPT-4o, hands-on testing shows that each has its own distinct advantages. Second, users can compare results from different models to get the best answer: for any question, they can view the responses of two or three models side by side and cross-check them. Third, the AI Assistant uses intent recognition to pick the most suitable model automatically. For example, if the user wants to solve a math problem or write code, it invokes DeepSeek, which excels in these areas. Combining the capabilities of 16 large model companies and offering convenient access from the desktop and the browser marks a significant step forward for large models in product entry points and ecosystem openness.
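The article does not explain how the AI Assistant's intent recognition works. As a purely illustrative sketch, assuming a simple keyword-based classifier (a production system would more likely use a trained model), routing a query to a model could look like the Python below. The keyword lists, the `detect_intent` and `route` helpers, and the fallback to 360 Brain are hypothetical; only the pairing of math and coding tasks with DeepSeek comes from the text.

```python
import re
from dataclasses import dataclass

# Hypothetical keyword lists per intent. The article does not describe 360's
# intent recognition; a real system would likely use a trained classifier.
INTENT_KEYWORDS: dict[str, set[str]] = {
    "math": {"solve", "equation", "integral", "prove"},
    "code": {"code", "function", "bug", "python"},
}

# Only "math/code -> DeepSeek" comes from the article; the default is assumed.
INTENT_TO_MODEL: dict[str, str] = {"math": "DeepSeek", "code": "DeepSeek"}
DEFAULT_MODEL = "360 Brain"


@dataclass
class RoutingDecision:
    intent: str
    model: str


def detect_intent(query: str) -> str:
    """Return the first intent sharing a word with the query, else 'general'."""
    words = set(re.findall(r"[a-z0-9]+", query.lower()))
    for intent, keywords in INTENT_KEYWORDS.items():
        if words & keywords:
            return intent
    return "general"


def route(query: str) -> RoutingDecision:
    """Map a query to the model registered for its detected intent."""
    intent = detect_intent(query)
    return RoutingDecision(intent, INTENT_TO_MODEL.get(intent, DEFAULT_MODEL))


if __name__ == "__main__":
    for q in ["Solve the equation x + 1 = 3", "Recommend a weekend trip"]:
        print(q, "->", route(q))
```

Keeping the intent table separate from the model table means a different model can be assigned to an intent without touching the classifier.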

Achieving openness is not the end of product development but the beginning of refinement. AI products must keep improving user engagement and accessibility to unlock more of large models' potential, and doing so requires finding the right scenarios.

"In 2024, I believe we should focus on finding the applications, scenarios, and products that show how large models can meet users' everyday needs. Those needs are often hidden inside specific scenarios, which is why I call 2024 the 'Year of Scenarios,'" said Zhou Hongyi. He went so far as to make scenarios the deciding factor in whether AI can trigger an "industrial revolution" in China, judged by two measures: whether it can penetrate every industry and whether it can reach every household. To that end, 360 has contributed its star scenarios, including 360 Security Guard, its browser, search, and its large model watch, working with domestic large model makers to build the AI Assistant and opening desktop and browser entry points that serve more than 1 billion users. The right scenario lets each large model play to its strengths, and a star scenario can potentially improve the experience tenfold. Identifying star scenarios is therefore the first step in unleashing the potential of large models. "Finding scenarios doesn't necessarily mean providing an all-encompassing model that solves every problem," said Zhou. "Instead, it's about using one or several models to support the functions of a specific scenario."

For instance, focusing solely on document analysis or search may not make full use of many large models' capabilities. Search is one of the scenarios closest to users, with a broad audience and heavy traffic, and over the past two decades users have formed deeply ingrained search engine habits. Whoever excels at AI search stands to disrupt the stagnant traditional search market. AI search, however, demands even stronger product capabilities: to win over the mass market, it must be simpler, faster, and more accurate than traditional search while keeping inference costs low. Established search engines hold an advantage here thanks to their large user bases and data volumes. Years spent deep in vertical application scenarios have given them rich user data, experience, and moats that startups will struggle to overcome in the short term, and they are better positioned to overcome the limited accuracy of early AI search. On top of that, 360 applies hybrid large model capabilities to AI search to deliver a seamless user experience.

Through 360AI Search, users can tap the 16 large models in two ways. They can type a question or request directly into the search box and let the AI analyze it and automatically invoke one of the 16 models to respond. Alternatively, they can pick a model before getting an answer, then compare results from different models and choose the best one. Users can switch models at any point, and the system remembers the earlier context so the next model can continue the conversation, truly putting "the best model for the job" into practice. As the technology matures and makes complex tasks simpler, AI search should build strong user loyalty. Importantly, as more users adopt AI search, their feedback in turn helps unlock more of the power of the various large models.
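The article says the system keeps earlier context when users switch models, but not how. One plausible design, sketched here in Python under that assumption, is to store a single model-agnostic message history and pass all of it to whichever model is currently active. `Conversation`, `switch_to`, `ask`, and `make_stub` are hypothetical names, and the stub models merely stand in for real vendor APIs.

```python
from dataclasses import dataclass, field
from typing import Callable

# A "model" is any function that takes the full message history and returns a
# reply. Real deployments would call each vendor's API here instead.
Message = dict[str, str]
Model = Callable[[list[Message]], str]


@dataclass
class Conversation:
    models: dict[str, Model]
    active: str
    history: list[Message] = field(default_factory=list)

    def switch_to(self, name: str) -> None:
        """Change the active model while keeping the shared history intact."""
        if name not in self.models:
            raise KeyError(f"unknown model: {name}")
        self.active = name

    def ask(self, question: str) -> str:
        """Send the whole history, including earlier turns, to the active model."""
        self.history.append({"role": "user", "content": question})
        reply = self.models[self.active](self.history)
        # Record which model answered so results can be compared later.
        self.history.append(
            {"role": "assistant", "model": self.active, "content": reply}
        )
        return reply


def make_stub(name: str) -> Model:
    """Hypothetical stand-in model that reports how much context it received."""

    def reply(history: list[Message]) -> str:
        return f"{name} received {len(history)} message(s)"

    return reply


if __name__ == "__main__":
    conv = Conversation({"A": make_stub("A"), "B": make_stub("B")}, active="A")
    print(conv.ask("First question"))  # A sees 1 message
    conv.switch_to("B")
    print(conv.ask("Follow-up"))  # B sees 3 messages, including A's turn
```

Because the history belongs to the conversation rather than to any one model, switching models amounts to changing which function receives the next call.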

Over the past two decades, search engines have been a crucial "development engine" of the internet industry. As infrastructure-level products and technologies, they have given rise to countless products and applications, past and present. As incubators of new kinds of internet products, search engines continue to evolve.

Today, AI serves as the most significant variable in search engines, leading and shaping their future development.