Industry | Google Unveils Local VLA Model, Ushering Embodied Intelligence into the 'Edge Era'

07/04 2025

Preface: Over the past decade, the robotics field has focused on 'seeing' (visual perception) and 'understanding' (language understanding). With the emergence of the VLA model, robots have entered a third stage: 'precise movement.' The VLA model is increasingly recognized as a universal framework connecting perception, language, and behavior, and as a cornerstone for achieving general intelligence. It enables robots to learn from diverse data sources, including the internet, and translate that learning into concrete actions.

Author | Fang Wensan

Image Source | Network

Google Releases Gemini Robotics On-Device: A Local VLA Model

Recently, Google introduced Gemini Robotics On-Device, an embodied intelligence model designed for offline operation. It allows a vision-language-action (VLA) multimodal large model to run locally on the robot itself.

It takes in visual input and natural-language commands and generates motor actions, maintaining stable performance even without a network connection.

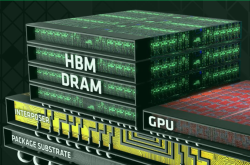

All of this processing now runs on the robot's onboard computing unit.

Notably, the model excels in adaptability and versatility.

Google highlights that Gemini Robotics On-Device is its first VLA model available for fine-tuning, allowing developers to adapt the model to their specific needs and applications.

Google reports that with as few as 50 to 100 demonstrations of a new task (typically collected by teleoperating the robot), the model can quickly learn the new skill, showing strong rapid task adaptation.

Google has also released a corresponding software development kit (SDK) to developers, a significant step toward the practical application of embodied intelligence technology.

Gemini Robotics, the family of VLA models Google launched in March, aims to bring the capabilities of multimodal large models into the physical world.

As its name suggests, Gemini Robotics On-Device is optimized for local operation on robotic devices, aiming to achieve robot intelligence with minimal computational resources.

Local models offer the advantage of ensuring stable robot performance, even in situations with unstable or no network connectivity.

In a range of test scenarios, Gemini Robotics On-Device has shown strong visual, semantic, and behavioral generalization: it understands natural-language instructions and performs dexterous tasks such as unzipping bags or folding clothes.

Because it does not depend on a network connection, it is particularly suited to latency-sensitive applications and keeps operating in environments with intermittent or no connectivity.

Google's evaluations show that the On-Device version performs strongly in generalization tests.

Although it trails the cloud-based Gemini Robotics model slightly in visual, semantic, and behavioral generalization, it significantly outperforms previously available on-device models.

When handling out-of-distribution tasks and complex multi-step instructions, Gemini Robotics On-Device also exhibits notable advantages over previous local models.

The launch of Gemini Robotics On-Device signifies a pivotal shift in embodied intelligence, moving from reliance on cloud computing power to local autonomous operation.

Deployment of Embodied Intelligence Faces Challenges

Previously, many robotic systems, including those from Google, adopted a hybrid architecture: deploying a smaller model on the robot for rapid response while offloading complex reasoning and planning tasks to powerful cloud servers.

While this approach works, it places stringent demands on the stability and speed of the network connection.

Any network latency or interruption can lead to sluggish or halted robot responses.

Furthermore, uploading sensor data (especially visual data from privacy-sensitive environments like homes or healthcare facilities) to the cloud raises ongoing privacy and security concerns.
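The network dependence of the hybrid design can be illustrated with a minimal Python sketch. It is a hypothetical simplification, not how Google or any particular robot stack is implemented: `cloud_plan`, `local_plan`, and `plan_with_deadline` are made-up names, network latency is simulated with `time.sleep`, and the controller simply falls back to the on-robot model when the cloud answer misses a deadline.

```python
import concurrent.futures
import time

# Hypothetical stand-ins: neither function corresponds to a real Gemini Robotics API.
def cloud_plan(instruction: str, network_delay_s: float) -> str:
    """Simulate a round trip to a remote planner; network_delay_s models latency."""
    time.sleep(network_delay_s)
    return f"cloud plan for: {instruction}"

def local_plan(instruction: str) -> str:
    """Simulate a smaller on-robot model that answers immediately."""
    return f"local plan for: {instruction}"

def plan_with_deadline(instruction: str, network_delay_s: float, deadline_s: float) -> str:
    """Ask the cloud planner, but fall back to the local model if the deadline passes."""
    pool = concurrent.futures.ThreadPoolExecutor(max_workers=1)
    future = pool.submit(cloud_plan, instruction, network_delay_s)
    try:
        return future.result(timeout=deadline_s)
    except concurrent.futures.TimeoutError:
        # The network was too slow: act on the weaker local model's output instead.
        return local_plan(instruction)
    finally:
        # Do not block the control loop waiting for the slow request to finish.
        pool.shutdown(wait=False)

if __name__ == "__main__":
    # Fast network: the cloud planner answers well within the deadline.
    print(plan_with_deadline("fold the towel", network_delay_s=0.01, deadline_s=0.2))
    # → cloud plan for: fold the towel
    # Slow network: the deadline expires, so the local model's plan is used.
    print(plan_with_deadline("fold the towel", network_delay_s=0.5, deadline_s=0.2))
    # → local plan for: fold the towel
```

The fallback keeps the robot responsive, but whenever the network is slow or down the robot acts on the less capable local model. Running the full VLA model on the device removes the network round trip from this path altogether.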

Challenges include:

- Heavy reliance on cloud computing resources, limiting robots' ability to operate independently in unstable or offline environments.

- Large model size, making it difficult to run efficiently on robots' limited computational resources.

Currently, most robots require tens of thousands of training sessions to complete a single task.

Google aims to provide an open, versatile, and easy-to-develop platform for the robotics field, akin to what Android did for the smartphone industry.

In the past, constrained by bandwidth and computing power, many robot AI systems never got beyond demonstrations.

The development of embodied intelligence has long been constrained by heavy reliance on cloud computing resources, making it difficult for robots to complete tasks independently when the network connection is unstable or unavailable.

Additionally, due to their large size, these models struggle to run efficiently on robots' limited computational resources.

A capable model that runs locally removes these obstacles, opening new avenues for practical applications in the robotics industry and paving the way for robots to be used in a broader range of scenarios.

For instance, precision part assembly in factories without network access and autonomous rescue in disaster zones both depend on models deployed on the robot itself.

Currently, achieving a unified software architecture is challenging due to the differences in body structure, degrees of freedom, and sensor configurations among various types of robots.

Once hardware standards are unified, much as universal components like USB ports, keyboards, and screens became shared norms in the smartphone ecosystem, algorithms will be far easier to standardize and deploy locally.

Embodied Intelligence Enters the 'Edge Era'

Local VLA models will make robots more suitable for sensitive scenarios such as homes, healthcare, and education, addressing core challenges like data privacy, real-time response, and safety and stability.

Over the past few years, 'edge deployment' of large language models has emerged as a significant trend.

Large language models, which initially relied on large-scale cloud computing resources, can now run locally on edge devices such as phones and tablets, thanks to advances in model compression and optimization, inference acceleration, and hardware co-design.
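As a concrete example of model compression, the sketch below shows one widely used technique, symmetric per-tensor int8 post-training quantization, in plain Python. It is illustrative only: the article does not say which compression methods Gemini Robotics On-Device or any particular edge model uses, and the weight values are made up. Each float32 weight w is stored as an int8 value q with w ≈ scale × q, cutting storage by 4× at the cost of a rounding error of at most scale/2 per weight.

```python
from array import array

def quantize_int8(weights: list[float]) -> tuple[array, float]:
    """Symmetric per-tensor int8 quantization: w ≈ scale * q, with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # Map the largest-magnitude weight to ±127; an all-zero tensor gets scale 1.0.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Clamping guards against floating-point rounding pushing a value past ±127.
    q = array("b", (max(-127, min(127, round(w / scale))) for w in weights))
    return q, scale

def dequantize(q: array, scale: float) -> list[float]:
    """Recover approximate float weights from the int8 values and the shared scale."""
    return [scale * v for v in q]

if __name__ == "__main__":
    weights = [0.82, -1.27, 0.05, 0.4, -0.33]  # made-up toy weights
    fp32 = array("f", weights)
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    print("fp32 bytes:", fp32.itemsize * len(fp32))  # → fp32 bytes: 20
    print("int8 bytes:", q.itemsize * len(q))        # → int8 bytes: 5
    print("max abs error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Real deployments usually refine this with per-channel scales, calibration data, or lower bit widths, but the trade-off is the same: less memory and bandwidth in exchange for a small, bounded loss of precision.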

A similar evolutionary path is gradually unfolding in the field of embodied intelligence.

As the core architecture of embodied intelligence, the VLA (Vision-Language-Action) model essentially gives robots the ability to understand a task from multimodal information and act on it.

The release of this on-device model may trigger a chain reaction across the industry. As AI computing power and model architectures keep evolving, 'edge intelligence' is moving beyond traditional IoT toward a more advanced stage represented by embodied intelligence.

Google's lead in localized VLA models heralds a new phase in the development of embodied intelligence.

This breakthrough technology marks a shift in robot AI from reliance on cloud computing to autonomous edge intelligence, bringing unprecedented possibilities to fields such as industrial manufacturing, healthcare, and household services.

Freed completely from cloud dependence, robot AI gains the ability to 'think independently.'

Traditional robot AI systems typically rely on cloud computing resources, uploading sensor data to remote servers for processing before sending back instructions.

While this architecture boasts powerful computing capabilities, it suffers from inherent flaws like network latency, unstable connections, and privacy and security issues.

This year, international companies such as Google, Microsoft, and Figure AI have launched their own VLA models, while Chinese companies such as Yinhe Universal, Zhiyuan Robotics, and Zibianliang Robotics have also moved into this field.

On June 1 of this year, Yinhe Universal officially launched TrackVLA, its self-developed, product-grade end-to-end navigation model.

TrackVLA is an embodied large model that perceives its environment through vision alone, is driven by language instructions, reasons autonomously, and offers zero-shot generalization.

A week later, at the 2025 BAAI Conference in Beijing, Yinhe Universal released GroceryVLA, which it describes as the world's first end-to-end VLA large model for retail scenarios.

Conclusion:

From the global perspective of embodied intelligence development, the launch of Gemini Robotics On-Device represents a significant paradigm shift in large model technology within the robotics field.

Over the past decade, robotic intelligence has relied primarily on large cloud-based models; going forward, it will shift toward on-device deployment, smaller models, and frequent adaptive updates.

This trend matters greatly to international embodied intelligence leaders such as Google and Tesla, and it also raises the bar for China's embodied intelligence industry chain.
