Revolutionizing Cockpit Interaction with Edge-Side AI Models: A High-Computing-Power Perspective

07/07 2025

Produced by Zhineng Technology

With the advent of next-generation cockpit chips, locally deployed large AI models are making their way into vehicles, assuming pivotal roles in the smart cockpit ecosystem. Unlike traditional cloud-dependent solutions, local models offer superior responsiveness, robust privacy protection, and enhanced scene stability, fundamentally transforming human-vehicle interaction.

Indeed, the integration of edge-side large AI models in vehicles has redefined the technical architecture of the smart cockpit. Beyond rapid response and multimodal interaction, smart cockpit products powered by local large models can deliver high-precision personalized services even without network connectivity. Following Qualcomm's conference in Suzhou, this article looks at how these changes affect the consumer experience.

01 From 'Local Intelligence' to 'Active Understanding'

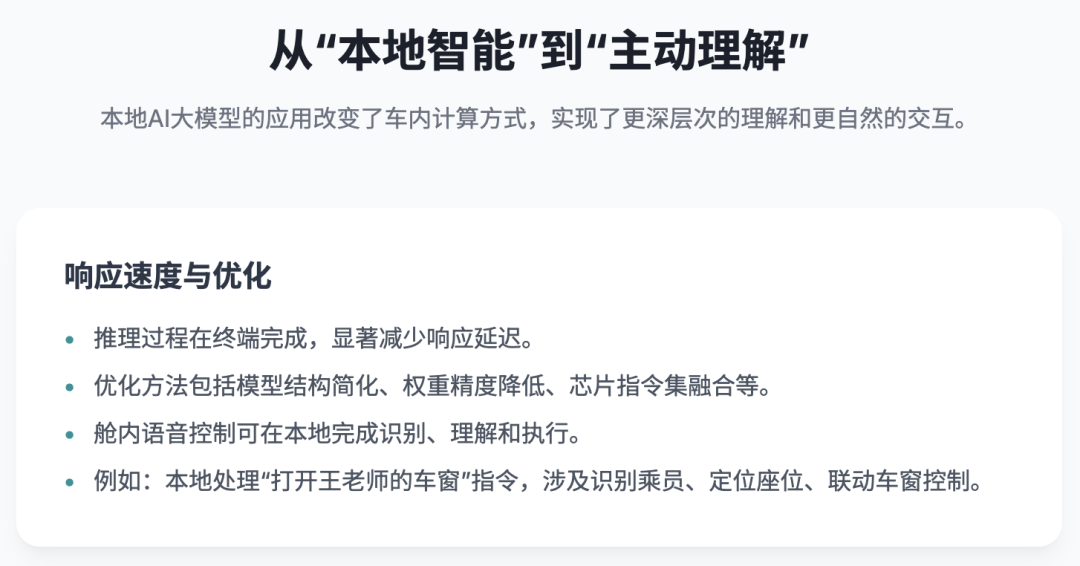

The introduction of local large AI models has changed in-vehicle computing logic. Unlike traditional cloud solutions, local models run inference directly on the in-vehicle device.

This approach significantly reduces response latency, keeping the average human-computer interaction wait time within 200 milliseconds (0.2 seconds), thereby mitigating the 'lag' and 'misjudgment' issues commonly encountered in intelligent systems. The improvement does not come from model compression alone. It results from optimization along several dimensions at once, including simplified model structures, reduced weight precision (quantization), instruction-level fusion optimized for the chip, and other engineering techniques.
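To make "reduced weight precision" concrete, here is a minimal sketch of symmetric per-tensor int8 quantization. The article does not say which quantization scheme cockpit models use, so the scheme, the function names, and the sample weights are all assumptions chosen for illustration.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One scale for the whole tensor; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in quantized]


# Illustrative weights only, not taken from any real model.
weights = [0.42, -1.27, 0.05, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight now occupies one byte instead of four (float32) or two (float16), at the cost of a rounding error of at most half the scale per weight.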

Take in-cabin voice control as an example. Previously, speech recognition relied heavily on the cloud for keyword extraction, intent recognition, and command execution matching. Now, local models can handle these tasks within a single computation graph.

For instance, executing the command "Open Mr. Wang's window" locally involves recognizing entities like "Mr. Wang" and "window," locating the occupant using the seat sensor matrix, and coordinating the window control logic throughout the process (prior cockpits could only differentiate between driver's seat, passenger seat, left rear, right rear, etc.). This requires real-time collaborative reasoning between language understanding, user memory management, and device control, all achieved within a unified model.
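The flow for this command can be sketched as follows. This is a rule-based stand-in, not the unified model the article describes: the seat map, the occupant names other than "Mr. Wang," and every identifier below are hypothetical. It only shows how a named occupant is resolved to a seat before a window action is issued.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical seat map, as it might be filled in by occupant identification.
SEAT_OCCUPANTS: Dict[str, str] = {
    "driver": "Ms. Li",
    "front_passenger": "Mr. Wang",
    "rear_left": "Xiao Ming",
}


@dataclass
class WindowCommand:
    seat: str
    action: str  # "open" or "close"


def resolve_window_command(
    utterance: str, occupants: Dict[str, str]
) -> Optional[WindowCommand]:
    """Map an utterance such as "Open Mr. Wang's window" to a seat-level command."""
    text = utterance.lower()
    if "window" not in text:
        return None
    if "open" in text:
        action = "open"
    elif "close" in text:
        action = "close"
    else:
        return None
    # Entity resolution: find which occupant is named, then use their seat.
    for seat, name in occupants.items():
        if name.lower() in text:
            return WindowCommand(seat=seat, action=action)
    return None


print(resolve_window_command("Open Mr. Wang's window", SEAT_OCCUPANTS))
```

A real system would replace the keyword checks with the model's language understanding and the static dictionary with live sensor and memory data.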

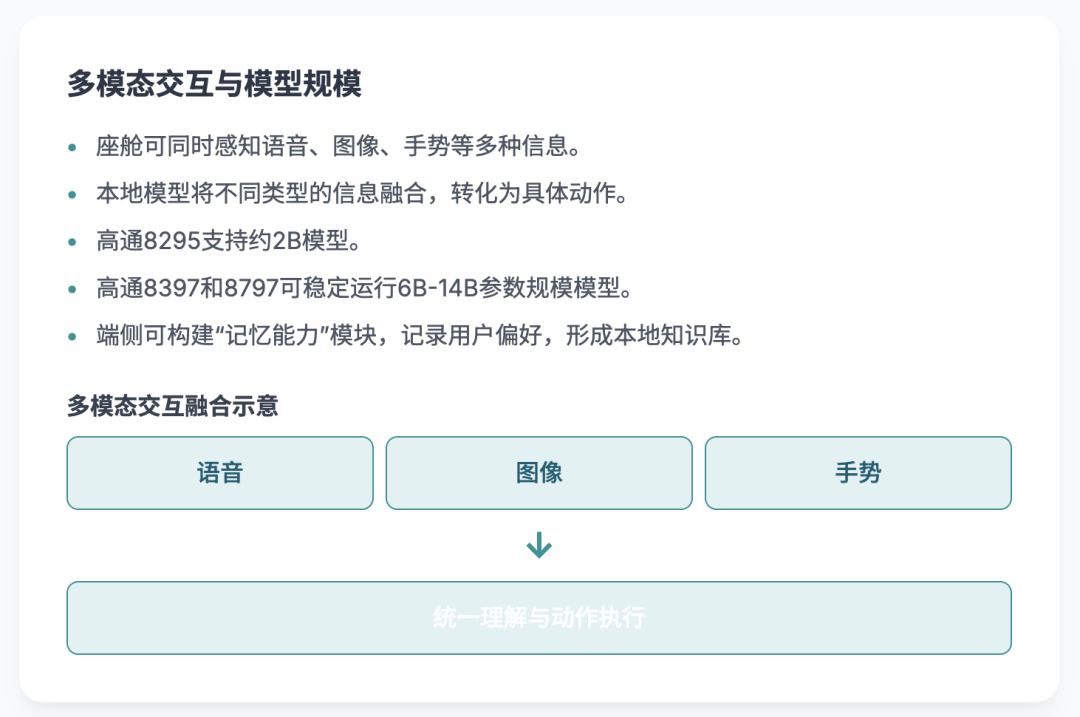

Multimodal interaction has also emerged as a crucial metric for evaluating local model capabilities. Smart cockpits now possess the hardware foundation to simultaneously process voice, image, gestures, and other information. Local models fuse this heterogeneous data into a unified semantic representation and convert it into actionable instructions.

For example, opening the door with an external gesture requires the system to combine visual detection and semantic recognition to judge user intent. Interpreting children's instructions through in-cabin video requires deep integration of image recognition and semantic dialogue capabilities. This integration goes beyond simply "stacking perception channels." It involves constructing semantic consistency at the spatio-temporal level through a unified encoder and cross-attention mechanism, which demands a model that is both lightweight and able to generalize well.
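As a rough illustration of the cross-attention step, the sketch below lets tokens from one modality (the queries, here voice) attend over tokens from another (the keys and values, here image). It is a single-head version with no learned projection matrices, and the token vectors are made up, so it shows only the mechanism rather than any production architecture.

```python
import math
from typing import List

Vector = List[float]


def softmax(xs: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(
    queries: List[Vector], keys: List[Vector], values: List[Vector]
) -> List[Vector]:
    """Scaled dot-product attention of each query over all key/value pairs."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs


# Made-up embeddings: two voice tokens attending over three image tokens.
voice_tokens = [[1.0, 0.0], [0.0, 1.0]]
image_tokens = [[0.9, 0.1], [0.1, 0.9], [0.5, 0.5]]
fused = cross_attention(voice_tokens, image_tokens, image_tokens)
print(fused)
```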

Current Qualcomm 8295 chips can run models with around 2 billion parameters, while the Qualcomm 8397 and 8797 can stably run models ranging from 6 billion to 14 billion parameters, covering tasks like navigation, voice control, and multimedia recommendations. Modules with 'memory capabilities' can also be built on the edge to record user behavior preferences and frequently used command patterns, forming a local private knowledge graph.
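To put these parameter counts in perspective, the arithmetic below estimates the memory needed for model weights alone at different precisions. The article does not state which precision these chips use, so the bit widths are assumptions, and the estimate ignores activations, the KV cache, and runtime overhead.

```python
def weight_memory_gib(num_params: float, bits_per_weight: int) -> float:
    """Memory for the weights only, in GiB: params * bits / 8 bytes / 2**30."""
    return num_params * bits_per_weight / 8 / 2**30


# Parameter counts from the text; bit widths are illustrative assumptions.
for params in (2e9, 6e9, 14e9):
    for bits in (16, 8, 4):
        gib = weight_memory_gib(params, bits)
        print(f"{params / 1e9:.0f}B params at {bits}-bit: {gib:.2f} GiB")
```

Under these assumptions, a 14-billion-parameter model needs roughly 26 GiB for weights at 16-bit precision but roughly 6.5 GiB at 4-bit, which is why reduced weight precision matters so much for in-vehicle deployment.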

These 'in-car memories' are independent of the cloud and can dynamically adapt to the vehicle environment and user status, enabling true active service rather than passive response.
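A much simpler stand-in for such a memory module is sketched below: it counts commands per user on the device and offers a suggestion once a command has been used often enough. The class name, the threshold, and the sample commands are hypothetical, and a real system would use a richer structure (the article mentions a knowledge graph) with persistent storage.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


class LocalPreferenceMemory:
    """Keeps per-user command counts in memory; nothing leaves the device."""

    def __init__(self) -> None:
        self._counts: Dict[str, Counter] = defaultdict(Counter)

    def record(self, user: str, command: str) -> None:
        """Note that a user issued a command."""
        self._counts[user][command] += 1

    def suggest(self, user: str, min_count: int = 3) -> Optional[str]:
        """Return the user's most frequent command if it was used at least min_count times."""
        if user not in self._counts:
            return None
        command, count = self._counts[user].most_common(1)[0]
        return command if count >= min_count else None


memory = LocalPreferenceMemory()
for _ in range(3):
    memory.record("Mr. Wang", "set cabin temperature to 22")
memory.record("Mr. Wang", "play jazz")
print(memory.suggest("Mr. Wang"))
```

The threshold is what separates active service from guesswork: the system only proposes an action after it has seen a consistent pattern.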

02 Privacy, Stability, and Immersion: The Local Model Cockpit

Another key advantage of locally deployed AI models lies in their robust protection of user data.

Images and audio captured by in-cabin cameras and microphones no longer need to be uploaded to the cloud for processing. Instead, local models recognize and respond to this data directly, which reduces data leakage risks and makes it easier to comply with regulations like the Data Security Law and GDPR. This is particularly important for sensitive tasks involving facial recognition, children's images, and occupant behavior analysis.

Due to their minimal dependence on network connections, local models offer superior stability compared to cloud solutions, especially in mountainous areas, tunnels, and low-signal environments.

This ensures that the smart cockpit's response capability is no longer contingent on operator signal quality but is driven by in-vehicle computing power. This significantly enhances continuity and reliability in scenarios requiring immediate feedback, such as voice control, video playback, and navigation planning.

Another direction of technical evolution is a more immersive cockpit UI.

With improved local GPU performance, smart cockpits now support complex 3D modeling and dynamic lighting rendering. Combined with the language-driven capabilities of local large models, vehicle status information (such as tire pressure and battery level) can be visualized in real time, helping the driver take in information more quickly.

For example, when a user says "Set the front left tire pressure to 2.4," the system not only adjusts the setting but also displays the real-time change in tire pressure status through 3D images.
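The first step in handling such a request is turning the utterance into a structured action that both the control logic and the 3D display can consume. The sketch below does this with a regular expression as a stand-in for the local model's language understanding. The data class, the pattern, and the wheel naming are hypothetical, and the unit of the target value is left unspecified because the article does not state it.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class TirePressureRequest:
    wheel: str     # e.g. "front_left"
    target: float  # unit is not stated in the article


# Hypothetical pattern covering only the phrasing used in the example.
_PATTERN = re.compile(
    r"set the (front|rear) (left|right) tire pressure to (\d+(?:\.\d+)?)",
    re.IGNORECASE,
)


def parse_tire_pressure(utterance: str) -> Optional[TirePressureRequest]:
    """Extract the wheel and target value, or return None if the phrasing differs."""
    match = _PATTERN.search(utterance)
    if match is None:
        return None
    axle, side, value = match.groups()
    return TirePressureRequest(wheel=f"{axle.lower()}_{side.lower()}", target=float(value))


print(parse_tire_pressure("Set the front left tire pressure to 2.4"))
```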

As in-car infotainment systems evolve into 'second terminals,' local models have also acquired cross-device control capabilities.

Through collaboration with home IoT devices and mobile phones, local models can control home appliances, synchronize meetings, switch media, and realize a computational closed loop of 'vehicle-home integration.' The core here is not just remote control commands but the model's ability to continuously understand context and take over tasks.

Traditional voice assistants function more like a one-time question-and-answer mechanism, whereas local large models can understand multi-turn contexts. For instance, they can proactively remind users of schedules during travel, coordinate routes with weather changes, and even adjust home air conditioner temperatures.
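The difference between one-shot question answering and multi-turn understanding can be illustrated with a minimal context tracker. This sketch only remembers the most recently mentioned device so that a follow-up such as "make it cooler" can be resolved. The device list and class are hypothetical, and a local large model would track far richer context than this.

```python
from typing import Optional, Tuple

# Hypothetical device names used only for this illustration.
DEVICES = ("air conditioner", "window", "music")


class DialogueContext:
    """Remembers the most recently mentioned device across turns."""

    def __init__(self) -> None:
        self.last_device: Optional[str] = None

    def resolve(self, utterance: str) -> Optional[Tuple[str, str]]:
        """Return (device, request text), reusing the previous device if none is named."""
        text = utterance.lower()
        for device in DEVICES:
            if device in text:
                self.last_device = device
                break
        if self.last_device is None:
            return None  # nothing to attach the request to
        return (self.last_device, text)


ctx = DialogueContext()
print(ctx.resolve("Turn on the air conditioner"))
print(ctx.resolve("Make it two degrees cooler"))
```

A one-shot assistant would fail on the second utterance because it names no device. Carrying state between turns is what lets the follow-up succeed.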

As model complexity increases, the ability to adapt to chip microarchitectures becomes increasingly critical.

From the current industry perspective, local models are driving chip design towards AI-native architectures, such as optimizing the reasoning path of Transformer-type models on NPUs, increasing on-chip cache capacity, and reducing latency paths. The symbiotic relationship between models and chips is emerging as a key infrastructure for the next phase of industrial evolution.

Summary

Local large models are reshaping the operational paradigm of in-vehicle intelligent systems. They are not merely a compromise for operating without the cloud but rather a 'digital brain' with independent reasoning capabilities, demonstrating significant advantages in user privacy, response speed, and interactive immersion.

They have transformed human-vehicle interaction and fostered deep integration among chips, systems, and algorithms. Smart cockpits are gradually transitioning from a 'helper' role to an 'agent' role, heralding a new era of automotive intelligence.