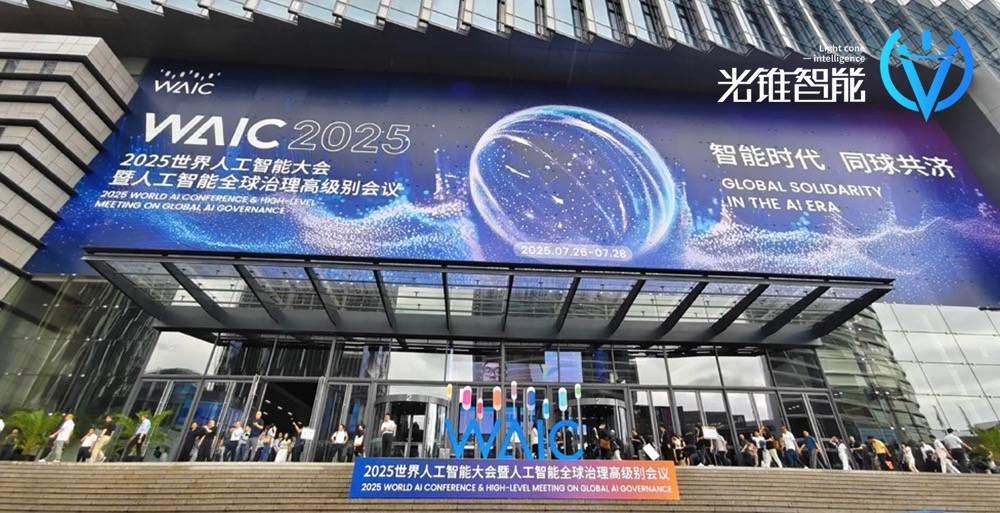

WAIC 2025: Accelerating Progress with Large AI Models

07/28 2025

"The crowd is so dense it feels like the venue will explode, and navigating the exhibition is comparable to attending a temple fair."

In 2025, the World Artificial Intelligence Conference (WAIC) has proven its popularity: tickets were being resold on Xianyu for up to 2,000 yuan each; foreigners were visible everywhere; and every exhibition area was packed with visitors...

The buzz around the venue alone suggested that large AI models have made significant strides in real-world applications this year.

The unprecedented number of exhibits attests to how widely AI is being adopted: a record 800 companies showed more than 3,000 products, including more than 100 debuts, covering over 40 large models, over 50 AI terminal products, and over 60 intelligent robots. The number of products doubled compared to last year, and many visitors joked that they had "walked until their legs gave out."

Across the exhibition areas, vendors in different categories showcased their own approaches: robots replicating human movements 1:1, mixing drinks, and carrying objects; agents writing code, composing essays, and serving as financial assistants; and AI+education exhibits featuring not only AI learning machines but also AI blackboards...

Compared to intangible agent "digital employees," physical intelligent robots have become the "top stars" of the conference.

In Hall H3, the scene of numerous robots "performing" was quite spectacular: Unitree robots sparring with humans, ZHIYUAN robots practicing stacking Pepsi cans, Magic Atom's robotic dogs running around the venue...

Beyond the flood of consumer-facing (C-end) products, AI is also being put to work inside B-end enterprises.

"Within our company, employees need to write a lot of code and run many research experiments every day. Roughly 70% of this code is written by AI, and 90% of data analysis is done by AI," said MiniMax CEO Yan Junjie in a talk, describing how an internal system built on agents is genuinely helping employees.

As large models entered the deep reasoning stage early this year, their performance reached a highly usable level, while training and inference costs fell further. After three years of rapid progress, vendors finally showcased their capabilities in a high-profile way.

Moving from imitation learning to reinforcement learning, large models have entered their 2.0 era.

With OpenAI's o1 model as the watershed, 2025 became the "year of explosion" for reasoning models. Instead of relying on large amounts of supervised data to improve performance, training with RL (reinforcement learning) algorithms has lifted the capabilities of large models to a new stage.

As a result, adding reasoning capabilities to text, vision, and multimodal models became the focus of competition among Chinese large model companies in the first half of the year.

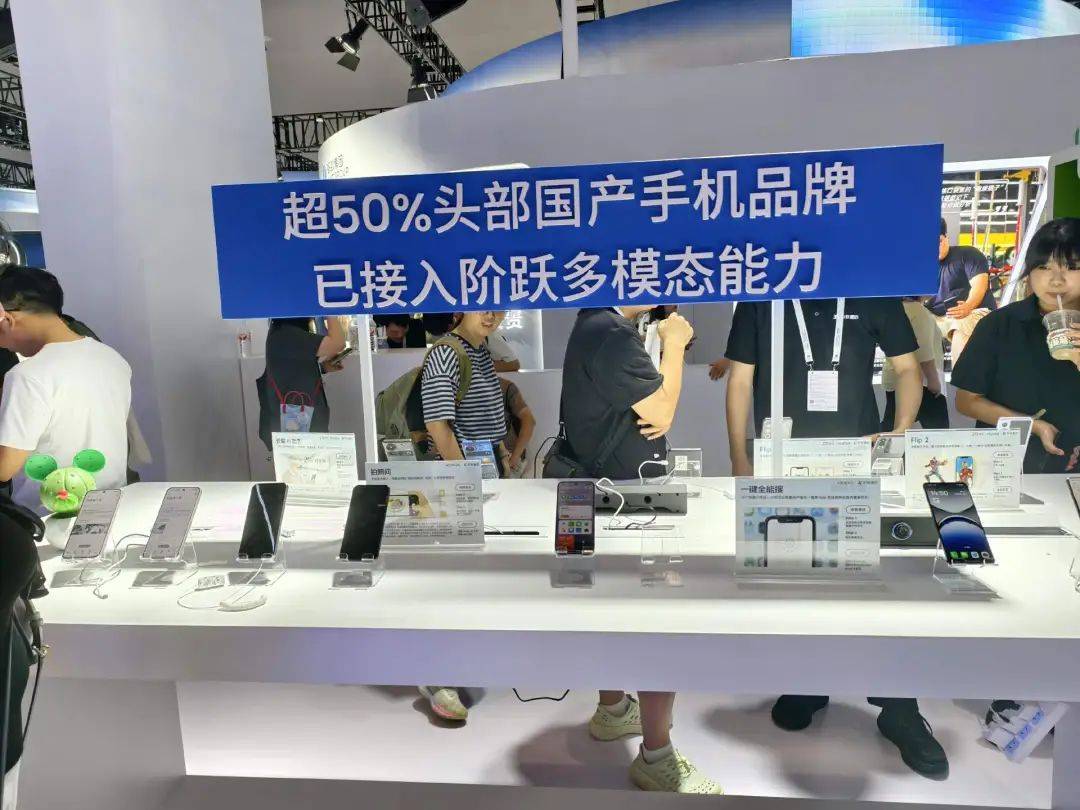

At WAIC, StepLeapStar, one of the "New Top Five" large model companies, released its native multimodal reasoning model Step-3, which achieved SOTA among open-source models. SenseTime also released its SenseNova V6.5 multimodal base model at the forum, emphasizing three major optimizations: stronger reasoning, higher efficiency, and agent capabilities.

With the field shifting toward reinforcement learning, another noticeable trend in the first half of the year was a further drop in training and inference costs. Through new model architectures, better training algorithms, and hardware-software co-optimization that improves computing efficiency, large model vendors have begun competing to offer cheaper training and inference.

Yan Junjie was even more optimistic, predicting that within the next one to two years the inference cost of the best models may fall by another order of magnitude.

On the application side, with commercialization in mind, most models will no longer chase trillion-parameter scale and will instead be shaped by the requirements of their commercialization routes.

Taking video generation as an example, Luo Yihang, CEO of Shengshu Technology, believes that there is still room for further optimization in the entire video generation process, which he summarizes as "fast, good, and cheap."

"First, consistency needs to be improved, then the speed of generation needs to be accelerated, and thirdly, the cost of video generation needs to be significantly reduced," said Luo Yihang.

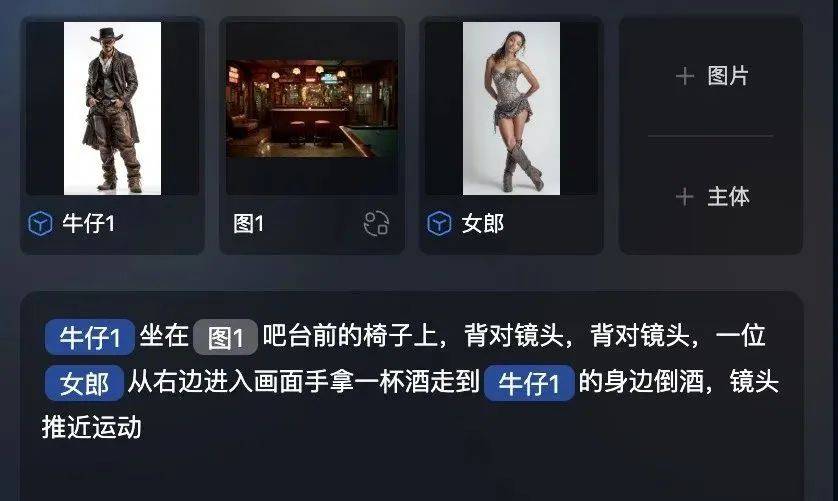

Correspondingly, several video model products have, as if by coincidence, optimized for professional production. Shengshu Technology's Vidu Q1, released in July, introduced a reference-to-video feature that changes both the traditional video production workflow and the AI image-to-video workflow: users simply upload reference images of characters, props, scenes, and so on, and a video is generated directly, eliminating the need for storyboarding. Reference generation supports seven subjects while keeping their appearance consistent and undistorted. Meanwhile, Kuaishou's Kling AI released "Lingdong Canvas" during the conference, bringing AI assistance and multi-person collaboration into a single infinite canvas.

In addition, AI companies have begun building models matched to the two product categories the industry is watching most closely: agents and embodied AI.

On the agent side, several models "tailor-made" for agents emerged in the first half of this year, such as ultra-long-context models that better support contextual understanding and visual reasoning models that sharpen agents' "vision." And as embodied AI gains popularity, the task of refining robots' "vision" and "brains" has fallen to large model companies; robots were performing at the booths of SenseTime, iFLYTEK, StepLeapStar, and others.

When the capabilities of large models reach a certain stage, applications also explode accordingly.

Jiang Daxin, founder and CEO of StepLeapStar, said: "The rapid iteration across two generations of base models, from Step 1 to Step 2, prompted us to think deeply about what kind of model best suits applications. As large models enter the reinforcement learning stage, a new generation of reasoning models has become mainstream. The improvement in model performance is significant, but does it fully equate to model value? Faced with this industry question, we must return to customer needs, ground ourselves in real application scenarios, and explore feasible paths for model innovation and deployment."

At the WAIC venue, agents and embodied AI became the two most popular tracks. The enhancement of multimodal capabilities and reasoning capabilities has equipped agents and embodied AI with "eyes" and "brains."

Agents were present in almost every booth:

For consumer (C-end) users, there are a few general-purpose agents and many vertical agents for specific scenarios. For example, Baidu's GenFlow 2.0 supports collaboration among multiple agents; StepLeapStar's agent comes preinstalled on smartphones, with features such as AI snap-and-ask (asking questions about a photo) and an AI assistant; and MiniMax's agent, which has just launched full-stack capabilities, lets users build a webpage in a few minutes.

On the smart hardware side, agents have also begun to take over parts of everyday life. COLMO, Midea's high-end AI home appliance brand, has launched COLMO AI Butler, billed as the industry's first AI agent adapted to every home appliance and furnishing scenario. Through a loop of "multi-dimensional perception → autonomous learning → reasoning and planning → decision execution," it improves the smart home's ability to understand scenarios.

For software and hardware vendors in the To B business, agents have become a way to help their enterprise customers improve efficiency and grow revenue. Beyond pure software applications, vendors that combine software and hardware are also embedding agent services in their AI platforms. At booths showing DeepSeek all-in-one machines, Guangzhui Intelligence saw agents for government and medical use offered as a new capability of the vendors' software services.

One noticeable trend in the first half of the year is that AI agents have begun to move from a supporting role to autonomously driving tasks to completion.

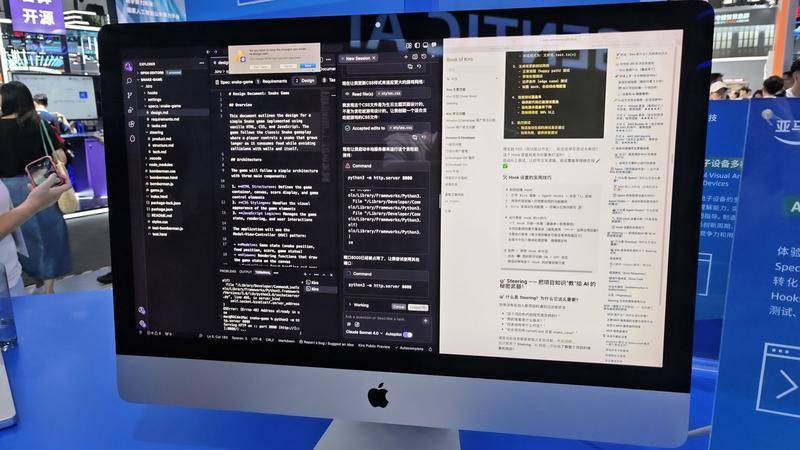

Take Kiro, Amazon Web Services' programming agent, as an example: in its Spec mode, AI assists at every step of an agent task, from requirements through planning to execution. A Kiro technical expert explained that when a user states a one-sentence requirement, the AI expands it into a more detailed PRD (Product Requirements Document), surfacing implicit requirements so that the task can be executed accurately and produce a deliverable product.

Within enterprises, the operation and collaboration of multi-agents have already shown initial results.

Discussing the deployment of large models in factories, Zhang Xiaoyi, Vice President and CDO of Midea Group, told Guangzhui Intelligence that factories are already using large model capabilities to improve operations. In a 3 to 4 month trial, agents have already helped improve product quality and efficiency.

"At Midea's washing machine factory in Jingzhou, we have launched 14 agents, and these 14 agents operate in an interconnected way," said Zhang Xiaoyi. In the past, finding the root cause of a problem in this data required people to communicate and collaborate directly. Now that the work has been handed over to agents, they automatically identify the current parameter conditions, equipment, production lines, and specific product models, and then estimate the likely outcome.

"All relevant data flows back into the quality-control agent, which makes real-time judgments. When it detects quality fluctuations, it in turn triggers the agent responsible for production equipment maintenance and the agent responsible for equipment processes," said Zhang Xiaoyi.

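To make the interconnected setup Zhang Xiaoyi describes a little more concrete, here is a minimal Python sketch of one agent's alert fanning out to others through a publish/subscribe bus. It is purely illustrative: the class names, the single numeric quality metric, the tolerance threshold, and the sample readings are hypothetical assumptions, not details of Midea's actual system, which the source does not describe.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical sketch: agent roles, the metric, the tolerance and the sample
# readings are illustrative assumptions, not Midea's actual system.

Handler = Callable[[dict], None]


@dataclass
class EventBus:
    """Minimal publish/subscribe bus that lets agents notify one another."""
    subscribers: dict = field(default_factory=dict)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self.subscribers.setdefault(topic, []).append(handler)

    def publish(self, topic: str, message: dict) -> None:
        # Deliver the message to every handler registered for this topic.
        for handler in self.subscribers.get(topic, []):
            handler(message)


class QualityAgent:
    """Receives readings and raises an alert when a metric drifts out of tolerance."""

    def __init__(self, bus: EventBus, target: float, tolerance: float) -> None:
        self.bus = bus
        self.target = target
        self.tolerance = tolerance

    def observe(self, reading: dict) -> None:
        deviation = abs(reading["value"] - self.target)
        if deviation > self.tolerance:
            self.bus.publish("quality_fluctuation", {**reading, "deviation": deviation})


class MaintenanceAgent:
    """Turns quality alerts into equipment inspection work orders."""

    def __init__(self, bus: EventBus) -> None:
        self.work_orders: list = []
        bus.subscribe("quality_fluctuation", self.on_alert)

    def on_alert(self, msg: dict) -> None:
        self.work_orders.append(f"inspect {msg['equipment']} on {msg['line']}")


class ProcessAgent:
    """Turns quality alerts into process-parameter reviews for the affected model."""

    def __init__(self, bus: EventBus) -> None:
        self.reviews: list = []
        bus.subscribe("quality_fluctuation", self.on_alert)

    def on_alert(self, msg: dict) -> None:
        self.reviews.append(f"review parameters for {msg['model']}")


bus = EventBus()
quality = QualityAgent(bus, target=1.00, tolerance=0.05)
maintenance = MaintenanceAgent(bus)
process = ProcessAgent(bus)

reading = {"equipment": "press-3", "line": "line-2", "model": "WM-100"}
quality.observe({**reading, "value": 1.02})  # within tolerance: no alert
quality.observe({**reading, "value": 1.12})  # out of tolerance: both agents react
print(maintenance.work_orders)  # ['inspect press-3 on line-2']
print(process.reviews)          # ['review parameters for WM-100']
```

The quality agent never calls the other agents directly; it only publishes an event, so more downstream agents could subscribe without changing it.
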
Overall, after half a year of building out the agent market, several pressing technical needs have surfaced as vendors experiment with enterprises.

A staff member at an agent platform told Guangzhui Intelligence that developers are currently most concerned with long-term memory and the expansion of the MCP (Model Context Protocol) ecosystem. Long-term memory lets agents retain contextual information in scenarios that need it, while in short-lived scenarios information should be cleared promptly to avoid unnecessary cost; expanding the MCP ecosystem gives agents more tools to call.
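
The trade-off between keeping and clearing context can be sketched as a simple retention policy. In this minimal Python example, the `AgentMemory` class, the scenario names, and the rule that decides what persists are hypothetical assumptions; real agent platforms implement memory in their own ways, and the MCP tool side is not modeled here.

```python
from dataclasses import dataclass, field

# Hypothetical sketch: the scenario names and the persist-or-clear rule are
# illustrative assumptions, not any particular platform's design.

# Scenarios assumed to need memory that outlives a single session.
PERSISTENT_SCENARIOS = {"personal_assistant", "customer_account"}


@dataclass
class AgentMemory:
    long_term: list = field(default_factory=list)  # kept across sessions
    session: list = field(default_factory=list)    # discarded when a session ends

    def record(self, scenario: str, note: str) -> None:
        target = self.long_term if scenario in PERSISTENT_SCENARIOS else self.session
        target.append(note)

    def context(self) -> list:
        """Notes that would be passed to the model: long-term first, then session."""
        return self.long_term + self.session

    def end_session(self) -> None:
        # Clearing short-lived notes keeps later prompts smaller, which is the
        # kind of cost saving the platform staff member refers to.
        self.session.clear()


memory = AgentMemory()
memory.record("personal_assistant", "user prefers morning meetings")
memory.record("weather_lookup", "fetched forecast for Shanghai")
print(memory.context())  # ['user prefers morning meetings', 'fetched forecast for Shanghai']
memory.end_session()
print(memory.context())  # ['user prefers morning meetings']
```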

Embodied AI, whose "big brain" and "small brain" are likewise being supplied by large models, was the category with the most exhibits at this conference.

The "brain" of embodied AI consists mainly of two parts: the big brain (cerebrum) and the small brain (cerebellum). In the mainstream hierarchical "big brain and small brain" architecture, a multimodal large model serves as the "big brain," responsible for perception, understanding, and planning, while several small models combined with motion control algorithms form the "cerebellum," responsible for motion control and motion generation.
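
As a rough illustration of that division of labor, the Python sketch below has a "big brain" planner turn an instruction into skill steps and a "cerebellum" of small controllers carry each step out. Everything here is an assumption for illustration: a lookup table stands in for the multimodal large model, and a toy proportional controller stands in for the learned motion-control models and algorithms real robots use.

```python
# Hypothetical sketch of a "big brain / small brain" split. The lookup-table
# planner stands in for a multimodal large model, and the proportional
# controller stands in for real motion-control models and algorithms.

# Which actuator each (assumed) skill drives.
SKILL_ACTUATOR = {"reach": "arm", "lift": "arm", "grasp": "gripper", "release": "gripper"}


def big_brain_plan(instruction: str) -> list:
    """'Big brain': map an instruction to (skill, target position) steps."""
    plans = {
        "stack the can": [("reach", 0.30), ("grasp", 1.0), ("lift", 0.50), ("release", 0.0)],
    }
    return plans.get(instruction, [])


def cerebellum_move(position: float, target: float, gain: float = 0.5,
                    tol: float = 1e-3, max_steps: int = 100) -> float:
    """'Small brain': proportional control loop that closes the gap to the target."""
    for _ in range(max_steps):
        error = target - position
        if abs(error) < tol:
            break
        position += gain * error
    return position


def execute(instruction: str) -> list:
    """Run the plan step by step, tracking each actuator's position."""
    state = {"arm": 0.0, "gripper": 0.0}
    log = []
    for skill, target in big_brain_plan(instruction):
        actuator = SKILL_ACTUATOR[skill]
        state[actuator] = cerebellum_move(state[actuator], target)
        log.append((skill, round(state[actuator], 2)))
    return log


print(execute("stack the can"))
# [('reach', 0.3), ('grasp', 1.0), ('lift', 0.5), ('release', 0.0)]
```

Keeping the planner and the controllers separate means either layer could be swapped, for example replacing the lookup table with a real model, without touching the other.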

However, the embodied AI track, crowded with products and eager for financing, is still in its infancy, and real-world deployment remains a long way off.

One issue that has arisen in the robotics sector is that the gap between software and hardware has widened even more this year.

Cui Guangzhang, Technical Director of Condi Robotics, which works with CloudMinds to deploy security robots, told Guangzhui Intelligence that the generational gap between hardware and software is still widening. Software has moved from validation toward mass production, but hardware has not yet reached that stage and lags behind in functionality, stability, and cost-effectiveness.

For industrial use, robots' software and hardware can already handle certain tasks in specific scenarios. The robotic dog co-developed by Condi Robotics and CloudMinds will be sold in North America for security inspections in warehouses.

Much like large model deployment last year, which dragged on, these two tracks still need more exploration and further advances in model capabilities to support them.

"The development of AI large models began in 2022, the first year of the fourth wave of artificial intelligence, and various players have been racing ahead," said Li Xiang, Vice President of iFLYTEK.

As large model technology keeps expanding, AI and large models are reshaping the ecosystem of industrial intelligence. On the B-end, by using AI to unlock the value of data and the Industrial Internet, large models and agents are gradually taking on more tasks that increase revenue and reduce costs.

"There are many open-source large models on the market, but they are all independent individuals," Kang Jian, General Manager of the Chemical Industry at COSMO+, which focuses on AI+Industrial Internet, told Guangzhui Intelligence. "Whether it's vertical small models or industry models, the value of scattered points is still limited."

Taking the chemical industry large model as an example, COSMO+ did not start by building a model. Instead, it set up an industrial Internet platform to integrate the business and data of all groups and secondary units in three cities, followed by data collection and governance. On this foundation, large models and agents have both the pull of business demand and the support of data.

Kang Jian gave the example of Yanchang Petroleum, where production and operations agents work together with agents that predict market prices for products to adjust production strategy in real time according to conditions across the industrial chain. Weighing the prices and returns of refined oil and chemical products, they fine-tune production plans and the capacity allocated to each, maximizing the value of the group's entire industrial chain.

"Without the seamless integration of business and data, it's impossible to connect the entire industrial and value chains, nor can we bridge users and suppliers," emphasized Kang Jian. "If these connections are lacking, the potential of intelligent agents remains untapped. A single-scenario model might save 100,000 yuan annually, but our large-scale industrial chain model-based intelligent optimization could yield a value of 100 million yuan per year."

Compared with last year's generic and somewhat underwhelming customer service and dialogue assistants, AI took a leap this year through deep integration with industry data, adding industry-specific functions across sectors and showing a more mature and practical application outlook.

In AI+healthcare, large model companies have partnered with hospitals, and after training on their data, several valuable functions are now being deployed. At its booth, Alibaba showcased an AI CT feature: built on its general-purpose multimodal medical large model, the system automatically generates detailed diagnostic reports from patients' medical images to help doctors with their assessments.

A model's capabilities set the upper limit for its applications, and this year was no exception. A major milestone was the release of several reasoning models, epitomized by DeepSeek-R1, which further strengthened AI applications across industries.

Take education as an example: advances in reasoning large models, represented by DeepSeek, have found corresponding applications in AI education.

"Parents often have reservations about AI education, fearing it might encourage copying answers and mislead students," said Li Tong, AI product leader at Xuesi Learning Machine. "However, the emergence of DeepSeek this year has been a major positive. Parents need to experience the large model firsthand and recognize its capabilities before they're willing to let their children try it too."

Li Tong noted that the programming and problem-solving abilities of foundation models have improved significantly over the past period. On purely text-based math problems, large models can reach 92% accuracy even in unfamiliar scenarios. Building on these higher solve rates, AI has made notable breakthroughs in one-on-one problem-solving tutoring.

From mere "showcasing capabilities" to "getting down to practical work," large AI models are breaking new ground in the deep-water territories of various industries. As AI capabilities continue to advance, the hidden value beneath the iceberg will gradually come into full view.