Addressing "Data Scarcity" in Embodied AI: SpatialVerse Paves the Way

07/29 2025

Author|Mao Xinru

This year's World Artificial Intelligence Conference (WAIC) set new records.

Over 40 large models, 50 AI terminal products, 60 intelligent robots, and more than 100 "global first launches" and "China first shows" of major new products were featured. Additionally, over 150 humanoid robots were on display at the venue.

Last year, only 18 humanoid robots were present, most of them reliant on safety ropes. This year's robots boxed, played drums, served tea and water, and sorted packages.

These rope-free robots, collaborating in real-world scenarios, signify that embodied AI is transitioning from "technology demonstration" to a new stage of "task execution." This shift underscores the industry's collective breakthrough in the data-driven paradigm.

At WAIC, companies like SenseTime, Tencent, Zhiyuan, and Kuke Technology unveiled new projects. Each with a unique technical approach, they share a common goal: enabling robots to learn to "efficiently make mistakes" in the physical world.

However, for robots to truly "learn" from mistakes and grow in the complex physical world, they require vast amounts of high-quality, diverse training data—a resource the industry sorely lacks.

Data Becomes a "Choking Point" for Embodied AI

Embodied AI is undoubtedly one of the hottest concepts in current technology.

According to incomplete statistics, there were 130 financing events in the embodied AI field in the first half of 2025, 1.9 times the total for 2024, with a disclosed amount of 9.668 billion yuan, surpassing the 2024 total of 8.933 billion yuan. Including undisclosed transactions, the total scale is conservatively estimated to exceed 23 billion yuan.

Despite this investment fervor, the practical difficulties of commercialization are stark. Orders for humanoid robots are concentrated in education, exhibition, and government projects, with slow penetration into industrial and household scenarios.

In a detailed conversation between Physical Intelligence, a renowned embodied AI company in the US, and Redpoint Ventures, researchers highlighted three major implementation challenges: complex task execution capabilities, environmental generalization capabilities, and high reliability performance.

Among these, "performance" has emerged as the biggest hurdle in transitioning from the lab to commercial use. "They still fail frequently and are currently more 'demo-ready' than 'deployment-ready,'" noted the researchers.

Data scarcity is the "choking point" between technological ideals and commercial reality, manifesting in three key aspects:

1. **Severe Data Scarcity**: Humanoid robot development is relatively new, and the volume of valid data accumulated so far falls far below the petabyte (PB) scale needed for large language models. Even a simple task like grasping a cup requires 5,000 real operation data points, and each new scenario often has to be built from scratch, creating a "reality gap."

2. **High Acquisition Costs**: Debugging every 1,000 robot actions in real-world training can cost hundreds of thousands of yuan, necessitating high-precision motion capture equipment and professional operators. Synchronizing multiple sensors requires millisecond-level precision, further raising the threshold.

3. **Abundance of "Data Silos"**: Companies treat data as a core competitive asset, keeping private datasets closed. Open-source community datasets are limited to simple tasks, with scarce data for complex scenarios and a lack of unified quality standards.

The essence of the data dilemma stems from the complexity of the physical world. Unlike autonomous driving, robots need to actively interact with their environment, exponentially increasing problem difficulty. The industry is currently exploring two breakthrough paths: real-machine data collection and simulation data synthesis.

Zhiyuan Robotics represents the real-machine data faction, having established a million-level real-machine dataset called AgiBot World, covering five real-world scenarios: home, dining, industry, supermarkets, and offices.

The simulation data faction generates synthetic data through algorithms to reduce acquisition costs, presenting a diversified landscape including physical simulation, video transfer, spatial reconstruction, etc.

Breaking down synthetic data, it can be divided into two key parts: scene generation and simulation. The generation of diverse indoor spaces has become a bottleneck in system performance, with two main technical paths available:

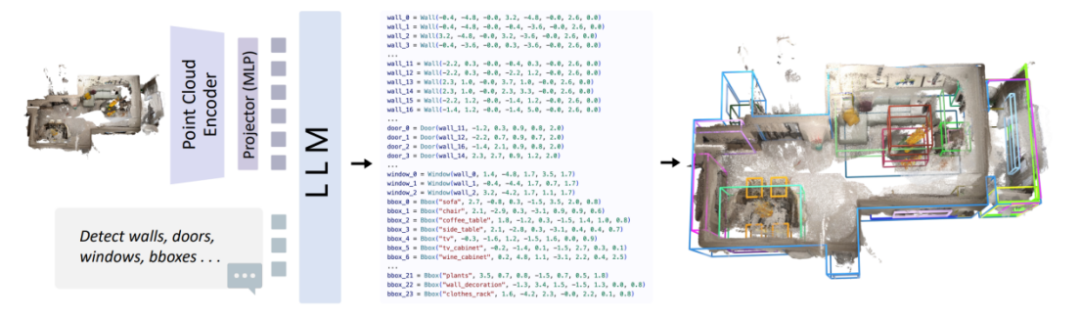

1. **Synthetic Video + 3D Reconstruction**: Driven by pixel streams, this approach first generates video or images, then reconstructs them into unstructured 3D data such as point clouds or meshes, and finally converts these into structured semantic models. Kuke Technology and Fei-Fei Li's World Models are notable examples.

2. **Direct 3D Data Synthesis Using AIGC**: Utilizing methods like graph neural networks, diffusion models, and attention mechanisms, this approach directly synthesizes structured spatial data. ATISS and LEGO-NET are representative in this field.
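To make the difference between unstructured and structured 3D data concrete, the sketch below shows one way a structured scene could be held in memory: a list of labeled objects with position, size, and orientation. The `SceneObject` schema, its field names, and the `footprints_overlap` check are illustrative assumptions, not the format of any dataset or model named above.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class SceneObject:
    """One item in a structured indoor scene (hypothetical schema)."""
    category: str                       # semantic label, e.g. "bed"
    center: Tuple[float, float, float]  # (x, y, z) in metres, z is up
    size: Tuple[float, float, float]    # full extents along x, y, z
    yaw: float = 0.0                    # rotation about z in radians (stored, not used below)


def footprints_overlap(a: SceneObject, b: SceneObject) -> bool:
    """Axis-aligned overlap test on the floor plane; ignores yaw for simplicity."""
    dx = abs(a.center[0] - b.center[0])
    dy = abs(a.center[1] - b.center[1])
    return dx < (a.size[0] + b.size[0]) / 2 and dy < (a.size[1] + b.size[1]) / 2


def colliding_pairs(scene: List[SceneObject]) -> List[Tuple[str, str]]:
    """Return the category pairs whose floor footprints intersect."""
    pairs = []
    for i, a in enumerate(scene):
        for b in scene[i + 1:]:
            if footprints_overlap(a, b):
                pairs.append((a.category, b.category))
    return pairs


# A made-up bedroom layout, used only to exercise the check.
scene = [
    SceneObject("bed", (1.0, 1.0, 0.25), (2.0, 1.6, 0.5)),
    SceneObject("nightstand", (2.3, 1.0, 0.25), (0.5, 0.5, 0.5)),
    SceneObject("wardrobe", (1.5, 1.5, 1.0), (1.0, 0.6, 2.0)),
]
print(colliding_pairs(scene))
```

Because every object carries a label and explicit geometry, a rule check like this takes a few lines. Running the same check on a raw point cloud or mesh would first require segmenting it into objects, which is the conversion step the first path has to perform.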

Currently, training agents to adapt to the complex physical world urgently requires a foundation of vast amounts of real, interactive 3D environmental data.

This is precisely the current bottleneck—traditional simulation environments are costly and inefficient to construct, while real-world data acquisition is extremely difficult.

Agents require high-quality data for training, especially 3D spatial data reflecting complex spatial relationships, physical properties, and task logic.

The 3D community is now exploring new ways to acquire and present data. Among them, 3D Gaussian Splatting technology is a hot topic, capable of rapidly reconstructing high-fidelity, dynamic 3D scenes with basic physical properties from multi-view images. Its efficient data generation capabilities and realistic rendering effects provide a new paradigm for 3D data production.

3D Gaussian Splatting scene data offers a new approach for robot training. This equates to feeding high-quality, low-cost, editable 3D dynamic environmental data generated by cutting-edge graphics technology directly into robot learning algorithms, significantly lowering the threshold for constructing simulation environments and enhancing the richness and realism of training data.

Kuke Technology, one of the "Six Little Dragons of Hangzhou," is exploring this technical route.
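For readers unfamiliar with 3D Gaussian Splatting, the sketch below shows the kind of primitive a scene is built from: a 3D Gaussian with a position, a per-axis scale, a rotation, an opacity, and a color, where the covariance is assembled from scale and rotation as Σ = R S Sᵀ Rᵀ. This follows the commonly described formulation of the technique in simplified form (plain RGB instead of view-dependent color). The `label` field is an assumption about how a semantic tag might be attached, and the class is not the InteriorGS file format.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class SemanticGaussian:
    """A single splat with a hypothetical semantic label attached."""
    mean: Tuple[float, float, float]             # centre of the Gaussian
    scale: Tuple[float, float, float]            # standard deviation along each local axis
    rotation: Tuple[float, float, float, float]  # unit quaternion (w, x, y, z)
    opacity: float
    color: Tuple[float, float, float]            # simplified: plain RGB
    label: str                                   # assumption: per-splat semantic tag


def quat_to_matrix(q: Tuple[float, float, float, float]) -> List[List[float]]:
    """Convert a unit quaternion (w, x, y, z) to a 3x3 rotation matrix."""
    w, x, y, z = q
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def covariance(g: SemanticGaussian) -> List[List[float]]:
    """Sigma = R S S^T R^T, with S = diag(scale)."""
    R = quat_to_matrix(g.rotation)
    s2 = [s * s for s in g.scale]
    return [
        [sum(R[i][k] * s2[k] * R[j][k] for k in range(3)) for j in range(3)]
        for i in range(3)
    ]


# An axis-aligned splat: its covariance is simply diag(scale squared).
splat = SemanticGaussian(
    mean=(0.0, 0.0, 0.0),
    scale=(0.1, 0.2, 0.3),
    rotation=(1.0, 0.0, 0.0, 0.0),
    opacity=0.8,
    color=(0.6, 0.4, 0.2),
    label="table",
)
for row in covariance(splat):
    print(row)
```

Storing scale and rotation separately, rather than a raw covariance matrix, keeps the covariance symmetric and positive semi-definite by construction, so editing a splat never produces an invalid shape.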

3D Gaussian Semantic Dataset Equips Robots with a "Spatial Brain"

On the eve of WAIC, SpatialVerse, Kuke Technology's spatial intelligence platform, released the latest high-quality 3D Gaussian Semantic Dataset InteriorGS, aimed at enhancing the spatial perception capabilities of robots and AI agents.

The InteriorGS dataset contains 1,000 3D Gaussian semantic scenes, covering over 80 indoor environments, giving agents a "spatial brain" to improve their environmental understanding and interaction capabilities. It is the world's first large-scale 3D dataset suitable for the free movement of agents.

In recent years, 3D Gaussian Splatting, with its advantage of "reconstructing scenes with just a scan," has been implemented in fields such as cultural relic preservation and spatial design. The InteriorGS dataset, released this time, introduces this technology into the field of AI spatial training for the first time.

SpatialVerse distinguishes itself from traditional 3D technology vendors through its rare full-link closed-loop capability of "reconstruction-semantics-simulation." Most vendors focus on single-point breakthroughs, either excelling in 3D reconstruction algorithms, producing exquisite but lifeless static models, or specializing in physical simulation engines but lacking high-quality, semantically rich input scenarios.

Currently, there are two fundamental challenges in the development of spatial intelligence and embodied intelligence:

1. **Shortage of High-Quality 3D Scene Data**: There is an extreme shortage of large-scale, interactive 3D scene data that captures the complexity of the real world and supports agents in perception, decision-making, and action validation.

2. **Lack of Physical Attributes in 3D Data**: Whether static or rendered models, if they lack encoding of physical laws such as gravity, collision, material friction, and object motion states, agents cannot learn basic abilities that rely on physical interaction, significantly reducing training value.
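A small worked example illustrates why the second challenge matters. The sketch below uses the textbook kinetic-friction relation, stopping distance = v² / (2μg), to show that the same push produces different outcomes on different floor materials. The friction coefficients are made-up values for illustration, not measurements from any dataset discussed here.

```python
G = 9.81  # gravitational acceleration in m/s^2


def sliding_distance(initial_speed: float, friction_coeff: float) -> float:
    """Distance an object slides on a level floor before kinetic friction stops it."""
    if friction_coeff <= 0:
        raise ValueError("friction_coeff must be positive")
    return initial_speed ** 2 / (2 * friction_coeff * G)


# Hypothetical materials: the geometry is identical, only the friction differs.
floors = {"polished tile": 0.2, "carpet": 0.6}
for name, mu in floors.items():
    distance = sliding_distance(1.0, mu)
    print(f"{name}: a 1.0 m/s push slides {distance:.3f} m")
```

A scene that stores only geometry and appearance cannot distinguish these two cases, so an agent trained in it has no signal from which to learn how hard to push.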

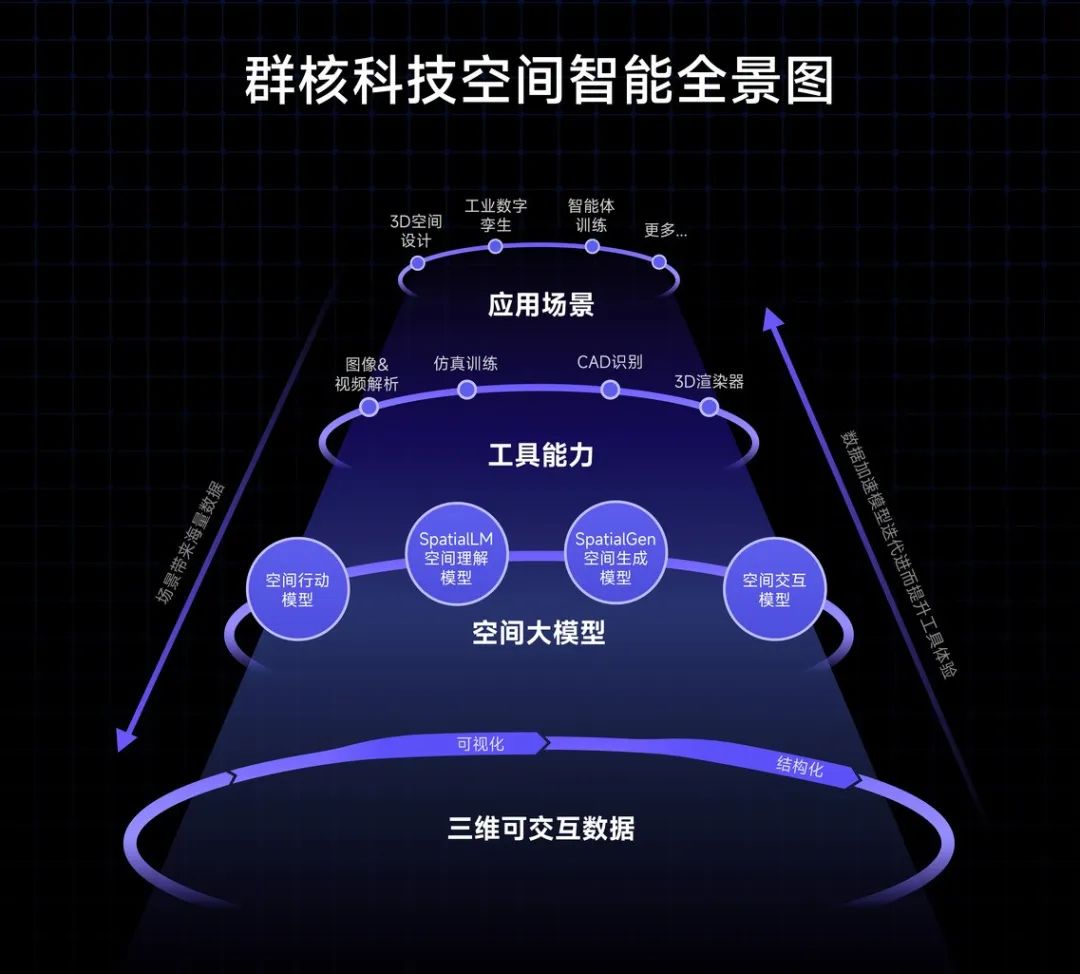

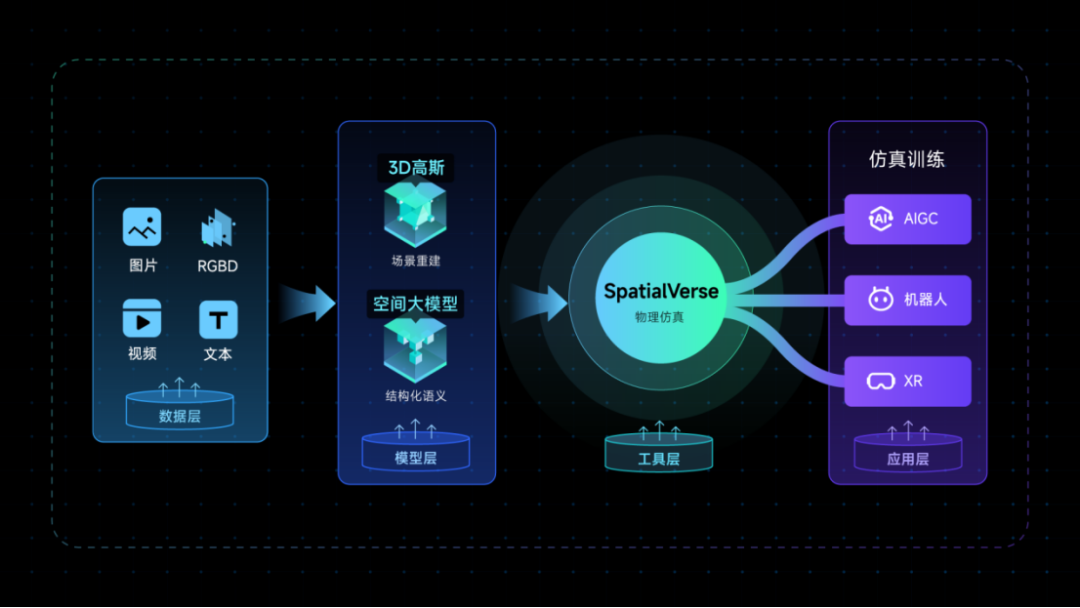

Faced with these industry pain points, Kuke Technology relies on its experience in indoor space digitization to blaze a path with unique advantages through SpatialVerse: starting with irreplaceable scene data accumulation, connecting the full link of "reconstruction-semantics-simulation," building a self-reinforcing "data flywheel," and ultimately creating a spatial intelligence base platform.

Centered on the KuJiale platform, Kuke Technology has constructed InteriorNet, the world's largest and most detailed structured dataset of indoor spaces. This rare dataset of interactive 3D data contains a large number of real floor plans, detailed furniture arrangements, material textures, and preset interactive logic, providing agents with a nearly real virtual training ground.

Previously, in the seminal embodied AI paper "FireAct" by Google and Stanford, explicit thanks were given to SpatialVerse for providing high-quality data.

Additionally, Kuke Technology has formed an efficient and self-reinforcing "data flywheel" system:

1. **Data Layer**: Represented by InteriorNet, providing massive interactive 3D data as initial fuel.

2. **Model Layer**: Represented by SpatialLM, a spatial large model. Pre-trained and fine-tuned on vast amounts of data, SpatialLM has spatial understanding and reasoning capabilities: it can parse structures, identify objects, and understand relationships in complex scenes. Its open-source version once topped the Hugging Face trending chart.

3. **Tool Layer**: Represented by the spatial intelligence platform SpatialVerse, which integrates the understanding capabilities of models like SpatialLM into the simulation platform, enabling it to generate smarter, more physically consistent scenarios or dynamically add richer semantics and interaction possibilities to existing scenarios. The training behavior data of agents in SpatialVerse can also be fed back to the data layer and model layer for optimizing simulation rules and improving model accuracy.

This system forms a mature closed-loop logic: data drives model optimization, models feed back into tool iteration, and tools generate new data.

The ImageNet of the 3D World, Accelerating Sim2Real Evolution

Interestingly, most robot companies exhibiting at WAIC this year have partnered with SpatialVerse, including domestic embodied AI leaders like Zhiyuan Robotics and Galaxy General. Behind the robots' on-site antics lies the hidden presence of SpatialVerse, providing a "simulation dojo" for robots to learn various tasks.

However, it's not just robots that lack data; all AI agents need vast amounts of 3D data to learn about the complex physical world.

Through the release of the 3D Gaussian Semantic Dataset, Kuke is not only providing a new dataset but also presenting a systematic solution to the core challenges of spatial intelligence.

SpatialVerse aims to become the "ImageNet" of the spatial intelligence field—just as ImageNet fueled the explosion of computer vision, it will provide a "digital dojo" for AIGC, XR, embodied intelligence, and other fields.

As a spatial intelligence base, the SpatialVerse platform is rooted in spatial intelligence and physical AI at the level of its underlying technology, which naturally positions it to energize the XR industry and drive innovation in AIGC workflows.

The core of the XR experience lies in constructing immersive and interactive virtual and mixed spaces. SpatialVerse's advantage lies in its ability to construct high-fidelity virtual environments, anchor mixed reality spaces, and enhance the credibility of physical interactions.

For the AIGC field, traditional 3D content creation is highly dependent on professionals and tools, often inefficient and costly. SpatialVerse's massive and high-quality spatial data and structured information can provide training materials for generative AI models.

Combining it with AIGC technology enables automated 3D scene and object generation, physically credible content simulation, and multi-modal content interaction.

Spatial intelligence allows agents to "see" and understand the geometric structure of the world; physical AI enables agents to "understand" the operating rules of the world; embodied intelligence allows agents to leverage their understanding of the world's structure and rules to actively interact, learn, and complete tasks in real environments through a body.

The significance of SpatialVerse lies in accelerating the evolution of Sim2Real and bridging the gap between the "virtual" and "real" worlds:

1. **Massive High-Fidelity Pre-training**: SpatialVerse offers a vast array of high-precision spatial and physical simulation data encompassing diverse scenarios such as homes, commerce, industry, and cities. This enhances the fundamental spatial cognition and physical common sense of embodied intelligence models.

2. **Safe and Efficient Spatial Interaction Training Ground**: Within the virtual environment crafted by SpatialVerse, agents can engage in limitless task attempts and reinforcement learning, rapidly iterating and refining strategies with zero risk and minimal cost.
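The idea of cheap, repeated attempts in simulation can be illustrated with a toy trial-and-error loop. The sketch below is an epsilon-greedy bandit, far simpler than the reinforcement learning used for real robots: an agent tries several hypothetical grasp strategies thousands of times against a simulated success rate and keeps a running estimate of which works best. The strategy names, success rates, and the `train_in_simulation` function are all assumptions made for illustration and have no connection to the SpatialVerse platform's actual interfaces.

```python
import random
from typing import List, Tuple


def train_in_simulation(
    success_rates: List[float],
    trials: int = 5000,
    epsilon: float = 0.1,
    seed: int = 0,
) -> Tuple[List[float], List[int]]:
    """Epsilon-greedy trial and error against simulated success probabilities."""
    rng = random.Random(seed)
    n = len(success_rates)
    counts = [0] * n      # how often each strategy was tried
    values = [0.0] * n    # running mean reward per strategy
    for _ in range(trials):
        if rng.random() < epsilon:
            action = rng.randrange(n)                         # explore
        else:
            action = max(range(n), key=lambda a: values[a])   # exploit
        # A failed attempt costs nothing in simulation; it only updates the estimate.
        reward = 1.0 if rng.random() < success_rates[action] else 0.0
        counts[action] += 1
        values[action] += (reward - values[action]) / counts[action]
    return values, counts


# Hypothetical grasp strategies with made-up simulated success rates.
strategies = ["top-down grasp", "side grasp", "handle grasp"]
values, counts = train_in_simulation([0.2, 0.5, 0.9])
best = max(range(len(strategies)), key=lambda a: values[a])
print("preferred strategy:", strategies[best])
print("attempts per strategy:", dict(zip(strategies, counts)))
```

Most of the thousands of attempts here are failures or suboptimal choices early on, which is exactly the kind of exploration that would be slow and expensive on physical hardware.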

Qunhe Technology's next strategic focus is on establishing an open and thriving spatial intelligence ecosystem, continuously overcoming future challenges.

First, by attracting hardware manufacturers, algorithm developers, and industry application partners, the company aims to collaboratively create standardized data interfaces, toolchains, and solution libraries, fostering an open platform and closer ecosystem collaboration.

Second, it will continue to improve simulation accuracy, explore multi-agent collaborative simulation, and strengthen AI's capacity for proactive exploration and meta-learning within simulation environments, with the goal of making Sim2Real transfer more efficient. Additionally, as it builds a massive spatial database, it will establish rigorous data privacy protection mechanisms and ethical guidelines for the use of spatial data.

The ultimate aspiration is to make "understanding the physical world" an inherent ability for every intelligent agent.

Whether it's a service robot at home, a logistics robotic arm in a factory, a digital human in a virtual realm, or an AR assistant on a mobile device, each can close the loop of "perceiving the environment, understanding rules, creating value." This loop rests on precise perception of spatial structure and a profound understanding of physical rules.

When intelligent agents truly acquire the capability to perceive the physical world, human-machine collaboration will usher in a new era.

In this technological race shaping the future, the entity that pioneers the breakthrough of embodied intelligence's "singularity" will seize the reins of the intelligent age.