On the Scene at WAIC: Large Models Enter the 'Midfield Battle'

08/04 2025

By Xiong Yuge

Edited by Chen Feng

The Shanghai World Expo Exhibition Hall was bustling at the end of July as the 2025 World Artificial Intelligence Conference (WAIC) drew unprecedented crowds.

Behind this technology showcase, three major trends that have emerged in China's large model industry since the start of 2025 are becoming increasingly clear and are reshaping the competitive landscape:

- Reasoning models have emerged as the new technological frontier.

- Applications have moved from concept to practical deployment.

- Domestic computing power has made significant breakthroughs.

From the open-source storm of DeepSeek to the debut of Huawei's Ascend 384 super node, from the differentiated strategies of the 'Six Tigers' to the comprehensive counterattacks of industry giants, large models are bidding farewell to the chaotic 'Hundred Model War' era and entering a more rational and intense 'midfield battle'.

WAIC 2025 venue, image source: Official WeChat public account of the World Artificial Intelligence Conference

At WAIC 2025, the robotics sector, which was relatively low-key last year, surged in popularity: the number of robotics companies exhibiting jumped from 18 in 2024 to 80. They occupied almost the entire H3 hall on the second floor and generated significant buzz. Large model vendors were mainly concentrated in Hall H1 on the first floor.

This year, two of the 'AI Six Tigers', ZeroOne and Baichuan Intelligence, were absent, while the booths of leading vendors such as BAT (Baidu, Alibaba, Tencent) and iFLYTEK no longer focused on comparing model parameters and instead showcased diverse application ecosystems.

Today, competition in large models is no longer a simple technological arms race but an all-round contest spanning industrial ecosystems, business models, and international competitiveness. As large models move from the laboratory to the front lines of industry, reasoning ability has become the new benchmark and localization an irreversible trend, and every participant is reassessing its positioning and strengths.

If the pre-2025 'Hundred Model War' was primarily a contest of basic capabilities, reasoning models represent a qualitative leap from 'being able to answer' to 'being able to think'.

The landmark event for this shift is undoubtedly the emergence of DeepSeek-R1.

According to industry analysis, the training cost of DeepSeek-R1 was only about US$5.6 million (a widely cited figure that DeepSeek originally reported for the final training run of the V3 base model on which R1 is built), far less than the tens or even hundreds of millions of dollars spent by American AI companies. More importantly, this breakthrough paved the way for the 'democratization' of reasoning models, bringing AGI research that was previously out of reach within relatively easy reach.

Leading vendors quickly followed suit, and the proliferation of reasoning models at WAIC illustrates how the rules of large model competition are changing.

By an incomplete count, in the few months since DeepSeek-R1 was released in January 2025, leading vendors and large model startups have launched their own reasoning models one after another:

- In March, Tencent released the official version of Hunyuan T1, Baidu released Wenxin X1, and Alibaba released the QwQ-32B reasoning model.

- In April, ByteDance released Seed-Thinking-v1.5, and Alibaba released the Tongyi Qianwen Qwen3 reasoning models.

- In June, Tencent released Hunyuan-A13B.

- In July, Zhipu released GLM-4.5, StepStar released Step3, iFLYTEK's Spark X1 received its second upgrade, and Dark Side of the Moon released Kimi K2.

Tencent booth at WAIC 2025, image source: Official WeChat public account of Tencent YouTu Lab

Around WAIC, domestic large model vendors showcased their latest reasoning models, revealing clearly differentiated technical strategies, mainly in model architecture, reasoning mechanism, parameter strategy, and cost:

- Architecture selection and innovation: Large model architectures are moving beyond the pure Transformer into an era of hybrid designs. A single architecture can no longer meet the performance demands of reasoning models, and hybrid architectures have become the new technological frontier.

  - The official version of Tencent's Hunyuan T1 abandons the pure Transformer architecture and applies a hybrid Mamba architecture to a large reasoning model, generating faster in practice than DeepSeek-R1.

  - Dark Side of the Moon's Kimi K2, Alibaba's flagship Qwen3 reasoning models, ByteDance's Seed-Thinking-v1.5, Zhipu's GLM-4.5, and StepStar's Step3 all adopt the Mixture-of-Experts (MoE) architecture.

- Reasoning mechanism innovation: mechanisms are being designed to better suit real-world scenarios and Agent development.

  - Baidu's Wenxin X1 uses collaborative training of a 'chain of thought' and a 'chain of action', automatically breaking complex tasks down into more than 20 reasoning steps, and can call more than a dozen toolchains at once to strengthen its Agent capabilities.

  - iFLYTEK's Spark X1 uses a unified 'fast thinking + slow thinking' model architecture, emphasizing both general reasoning and domain-specific reasoning.

  - Dark Side of the Moon's Kimi K2 focuses on coding and agentic tasks and is specifically optimized for software engineering.

  - StepStar's Step3 introduces the MFA (Multi-matrix Factorization Attention) mechanism and the AFD (Attention-FFN Disaggregation) distributed inference system.

  - Zhipu's GLM-4.5 natively fuses reasoning, coding, and Agent capabilities in a single model for the first time, and supports switching between thinking and non-thinking modes.

- Parameter strategy: seeking a balance among efficiency, cost, and speed.

  - Kimi K2 has 32B active parameters out of 1T total, making it the largest open-source model in the industry by total parameter count.

  - Seed-Thinking-v1.5 scales down to 200B total and 20B active parameters, showing a clear trend toward lighter models.

  - Alibaba's QwQ-32B has 32B parameters and keeps its GPU memory requirement to 24GB, significantly lowering the barrier to deployment.

  - Tencent's Hunyuan-A13B has 80B total parameters but only 13B active, and can be deployed on a single mid-to-low-end GPU.
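The parameter figures above can be made concrete with a little arithmetic. The sketch below is a back-of-envelope illustration, not any vendor's sizing method: it computes what fraction of each MoE model's weights is active per token and how much memory QwQ-32B's weights alone occupy at common precisions. The names `MoEModel` and `weight_memory_gb`, the precision list, and treating Kimi K2's "1T" as exactly 1000B are illustrative assumptions; the estimate ignores the KV cache, activations, and framework overhead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MoEModel:
    """Hypothetical record of the parameter counts quoted in the article (billions)."""
    name: str
    total_b: float   # total parameters, in billions
    active_b: float  # parameters activated per token, in billions

    def active_ratio(self) -> float:
        """Fraction of all weights used for each token."""
        return self.active_b / self.total_b


# Assumption: Kimi K2's "1T" total is treated as exactly 1000B.
MOE_MODELS = [
    MoEModel("Kimi K2", 1000, 32),
    MoEModel("Seed-Thinking-v1.5", 200, 20),
    MoEModel("Hunyuan-A13B", 80, 13),
]

# Bytes needed to store one parameter in each (assumed) weight format.
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_b: float, fmt: str) -> float:
    """Memory for the weights alone, in decimal GB (1 GB = 1e9 bytes).

    billions of params * bytes per param = GB. KV cache, activations,
    and runtime overhead are NOT included, so real deployments need more.
    """
    return params_b * BYTES_PER_PARAM[fmt]


if __name__ == "__main__":
    for m in MOE_MODELS:
        print(f"{m.name}: {m.active_ratio():.1%} of weights active per token")
    for fmt in BYTES_PER_PARAM:
        print(f"QwQ-32B weights in {fmt}: {weight_memory_gb(32, fmt):.0f} GB")
```

Under these assumptions, Kimi K2 activates about 3.2% of its weights per token, versus 10% for Seed-Thinking-v1.5 and roughly 16% for Hunyuan-A13B. Per-token compute scales roughly with active parameters, but the memory needed to hold the weights still scales with total parameters. QwQ-32B's weights come to about 64 GB in bf16, 32 GB in int8, and 16 GB in int4, so a 24GB budget implies weights quantized to fewer than 8 bits per parameter.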

Alibaba booth at WAIC 2025, image source: Official WeChat public account of Tongyi Lingma

Competition among reasoning models is no longer limited to parameter scale and compute consumption. The design of a model's reasoning chain, its multi-step logical processing, and its fit with downstream tasks are becoming new evaluation dimensions.

This reasoning model arms race is, in essence, a set of different bets by vendors on the future direction of AI. Open-source or closed-source, general-purpose or specialized, efficiency or effectiveness: each choice will determine a vendor's position in the next phase of competition.

As reasoning model technology matures, the real contest is shifting to application scenarios.

Once reasoning models have solved the problem of 'thinking', application deployment answers the key questions of 'whom to think for' and 'what to think about'. The most noticeable change at WAIC 2025 was undoubtedly the shift of large models from 'technology display' to 'application practice'.

This shift shows first in the differentiated strategies of WAIC exhibitors: Internet giants draw on their platform ecosystems and capabilities to build broad footprints on both the B-end (business) and C-end (consumer) sides, while specialist model vendors dig into vertical tracks and choose the B-end as their breakthrough point.

- Tencent showcased the full 'cloud-to-device' chain of its Hunyuan large model, along with B-end offerings such as financial analysis assistants, intelligent video editing, and media content generation. A particularly eye-catching highlight was social interaction: by adding 'Tencent Yuanbao' as a friend on WeChat, any of WeChat's 1.4 billion users can access an AI assistant as easily as adding a friend.

- Alibaba focused on the convenience AI tools bring to daily life. Built on the Tongyi large model technology base, the spatial intelligence system behind Tmall Genie's whole-home intelligence let visitors experience scenarios such as AI audio and video search and recommendation, AI karaoke, and AI fitness on-site; the Tongyi app combines AI translation, AI dialogue, and AI voice calls to serve as a 'personal partner' for users; and Tongyi Qianwen + DingTalk office intelligence can automatically generate project progress tables, meeting minutes, and daily report summaries while integrating knowledge base management, permission control, and RPA capabilities.

- Baidu highlighted AI infrastructure such as the Baige GPU computing platform and the 'Wanyuan' intelligent computing operating system, which, together with the Wenxin large model technology base, underpin products such as Huibo Xing, Wenxin Kuaima, and the AI-upgraded Baidu Wenku and Baidu Netdisk.

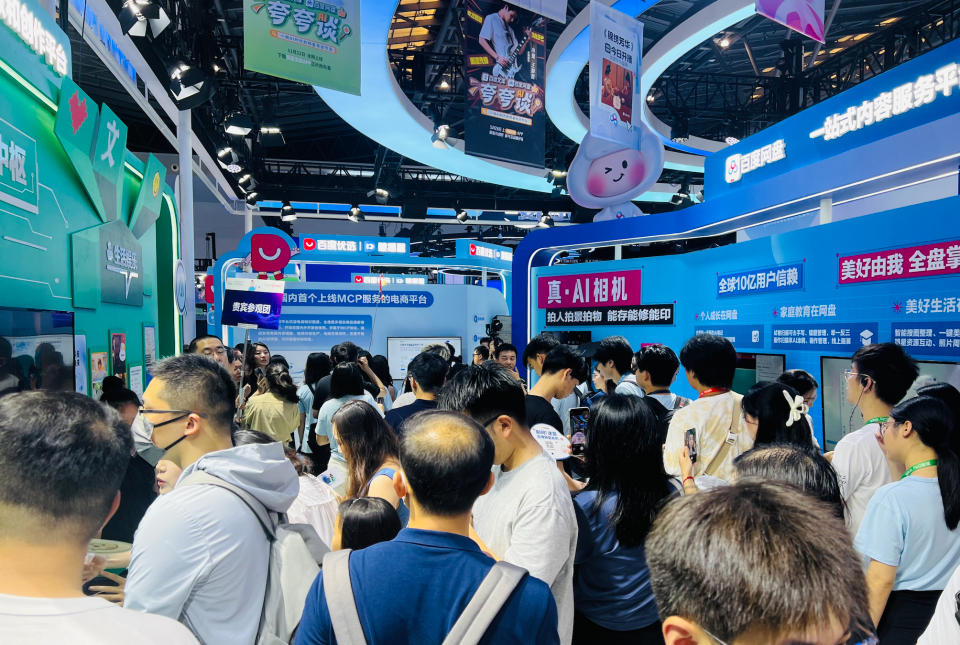

Netdisk and Wenku display at Baidu booth at WAIC 2025, image source: Official WeChat public account of Baidu Netdisk Service

By contrast, vendors such as Kimi and Zhipu AI lack the traffic advantages of the big tech companies, and their displays focused more on B-end capabilities.

- Benchmarking themselves against Claude, Kimi and Zhipu AI emphasize coding and Agent capabilities. Zhipu AI's CogAgent platform supports training personalized conversational agents and quickly packaging them into roles such as intelligent customer service and content assistants, with features including private knowledge bases, multimodal APIs, and process customization. Zhipu has said that GLM-4.5's API price is only one-tenth of Claude's, with speeds of up to 100 tokens per second. Kimi launched Kimi-Researcher, a deep research agent, while the newly released Kimi K2 shows clear strengths in coding and agentic tasks and can also handle an end-to-end workflow combining AI business writing with intelligent table analysis.

- Two of the 'Six Tigers', MiniMax and StepStar, place more emphasis on multimodal capabilities. StepStar's new-generation multimodal large model Step3 covers text, speech, images, video, music, and reasoning. The company says more than half of China's top 10 smartphone makers have integrated its multimodal capabilities, with flagship models from OPPO, Honor, and ZTE already equipped and adapted. Its partners also include Geely Automobile and Zhiyuan Robotics, among others, and its full-year 2025 revenue is expected to approach RMB 1 billion. MiniMax's MiniMax Agent platform can handle long-horizon complex tasks, reportedly doing a week's worth of a professional's work; it goes deep into vertical applications such as web development and in-depth research, and supports integration with office systems such as DingTalk, Feishu, and WeChat Work.

In terms of application form, Agents have clearly become the deployment direction with the most room for imagination.

Given the current environment, however, large model vendors are paying more attention to developing vertical Agent capabilities. Manus, the star C-end general-purpose Agent product, recently moved its headquarters to Singapore, laid off 80 employees in China, and abandoned plans to launch a domestic version.

As for deployment scenarios, the accelerating penetration of large models into vertical industries is also worth noting.

For example, in finance, functions such as risk control, investment advisory, and customer service are actively adopting large model technology; in healthcare, applications such as diagnostic assistance, drug research and development, and medical record management continue to emerge; and in manufacturing, use cases such as intelligent quality inspection, predictive maintenance, and supply chain optimization are gradually being implemented.

Taken together, deploying large models in real applications is a contest of all-round strength. Technical capability is the foundation, but productization, ecosystem integration, and customer service are equally important. Vendors that build differentiated advantages across these dimensions are the most likely to win.

Among all the variables in the large model industry, computing power is undoubtedly the most strategically significant.

Recently, Jensen Huang's visit to China offered a new perspective on the development of domestic computing power. In an interview with CCTV, Huang said it may only be a matter of time before Huawei's AI chips replace NVIDIA's, a remark that has further raised expectations for domestic computing power.

According to public information, average daily token usage at Alibaba and ByteDance in recent months has grown nearly or more than 100-fold compared with a year earlier. This explosive growth poses a huge challenge to the supply of computing power.

At WAIC 2025, domestic computing power displayed unprecedented strength and confidence.

Exhibited for the first time as one of the show's eight 'signature exhibits', Huawei's Ascend 384 super node is built on a super node architecture and uses bus technology to provide high-bandwidth, low-latency interconnection among its 384 NPUs, addressing communication bottlenecks between compute, storage, and other resources within the cluster.

Huawei's Ascend 384 super node at WAIC 2025, image source: Official WeChat public account of the World Artificial Intelligence Conference

The significance of this breakthrough goes beyond raw computing power: it provides a solid foundation for training domestic large models.

Domestic GPU vendors are equally impressive. Suiyuan Technology's latest AI inference accelerator card, the 'Suiyuan S60', is benchmarked against NVIDIA's L20 and offers 48GB of memory and a PCIe 5.0 interface. It supports many mainstream inference frameworks and more than 200 models, and can readily handle inference for models with up to 100 billion parameters.
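The article does not say how many S60 cards a 100-billion-parameter deployment uses. As a rough illustration only, the sketch below estimates the minimum number of 48GB cards needed just to hold a 100B model's weights at common precisions. The helper name `cards_for_weights`, the precision list, and treating 48GB as decimal gigabytes are assumptions for illustration; real deployments also need memory for the KV cache and activations, so these figures are lower bounds.

```python
import math

# Assumption: the S60's 48GB is treated as 48 decimal GB (48e9 bytes).
CARD_MEMORY_GB = 48.0

# Bytes needed to store one parameter in each (assumed) weight format.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def cards_for_weights(params_b: float, fmt: str,
                      card_gb: float = CARD_MEMORY_GB) -> int:
    """Hypothetical helper: fewest cards whose combined memory holds the weights.

    params_b is in billions, so params_b * bytes_per_param gives decimal GB.
    Weights only; KV cache, activations, and runtime overhead are ignored,
    so the result is a lower bound rather than a deployment plan.
    """
    weights_gb = params_b * BYTES_PER_PARAM[fmt]
    return math.ceil(weights_gb / card_gb)


if __name__ == "__main__":
    for fmt in BYTES_PER_PARAM:
        print(f"100B params, {fmt}: at least {cards_for_weights(100, fmt)} card(s)")
```

Under these assumptions, fp16 weights for a 100B model (about 200 GB) need at least five cards, int8 (about 100 GB) at least three, and int4 (about 50 GB) at least two, which suggests that serving 100B-class models on this card involves multi-card setups, quantization, or both.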

In February of this year, Suiyuan Technology partnered with Meitu's Meiyan Camera, bringing nearly 10,000 inference cards online within just four days to meet the surge in demand triggered by the 'AI Costume Change' feature.

Moreover, domestic computing power is moving from isolated advances to ecosystem collaboration. In July, StepStar joined forces with nearly 10 chip and infrastructure vendors, including Huawei Ascend, Moxi, Biren Technology, Suiyuan Technology, and Tianzhi Core, to launch the 'Model-Chip Ecological Innovation Alliance'.

Domestic computing power still faces challenges, including lagging chip performance, immature software ecosystems, and the need to further verify stability and reliability in large-scale deployments. Even so, there are reasons for optimism: technological diversity creates opportunities to catch up, the vast domestic market supports industrialization, and policy support underpins innovation.

Crucially, the rapid evolution of the AI industry on display at WAIC is opening a historic window of opportunity for domestic computing power.

As the excitement of WAIC fades, the 'midfield battle' in the large model industry is only just beginning. It is not merely a technological contest but a comprehensive struggle spanning technical paths, business models, ecosystem building, and international competition.

The hallmarks of this midfield battle are differentiation and focus. Differentiation shows in varied technical paths, specialized application scenarios, and distinct business models; focus shows in tackling core technologies, achieving breakthroughs in key applications, and building ecosystem moats.

This shift suggests that the large model industry is moving from a phase of rapid expansion to one of intensive cultivation.

Looking ahead, three trends will continue to deepen: reasoning models will expand from logical reasoning to multimodal and embodied-intelligence reasoning; applications will evolve from standalone tools to platforms and ecosystems; and domestic computing power will move from catching up to competing head-on, aiming for leadership in niche areas.

Ultimately, the outcome of the midfield battle will be decided by application value and user choice. Once the WAIC fervor has faded, whether the domestic large model industry can stay at the forefront remains to be seen.