Video Generation Models Overview: Who Will Shape the Future of Content Creation?

08/07 2025

Are You Surrounded by AI Videos?

Over the past two months, many people have been captivated by short videos of "animals diving": elephants, piglets, and corgis take turns performing difficult dives from a standard platform, often accompanied by professional commentary and enthusiastic cheers. In their choreography and in the realism of the water entry, these videos rival movie special effects.

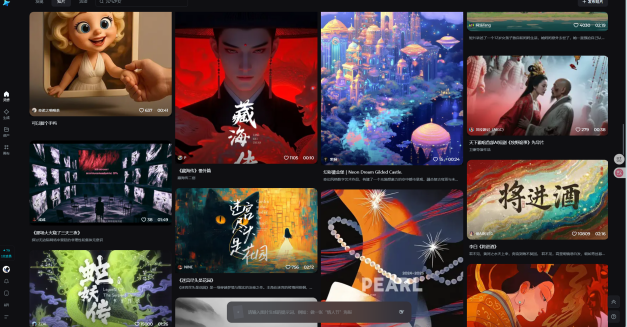

Beyond "animal diving," a diverse array of AI-generated videos, such as anthropomorphic animal scenarios and stress-relieving clips of various materials being cut, has begun to sweep major content platforms like Douyin, Xiaohongshu, and Bilibili.

Such visual effects were once confined to well-produced films and television shows.

Behind these videos lies a series of workflows centered around "script creation - keyframe generation - video production," giving rise to a group of "digital directors" who monetize their AI video creations.

In fact, as early as 2022, a series of AI-generated videos began appearing on the market. However, these videos often suffered from issues like distortion, strangeness, and a poor viewing experience, limiting their spread.

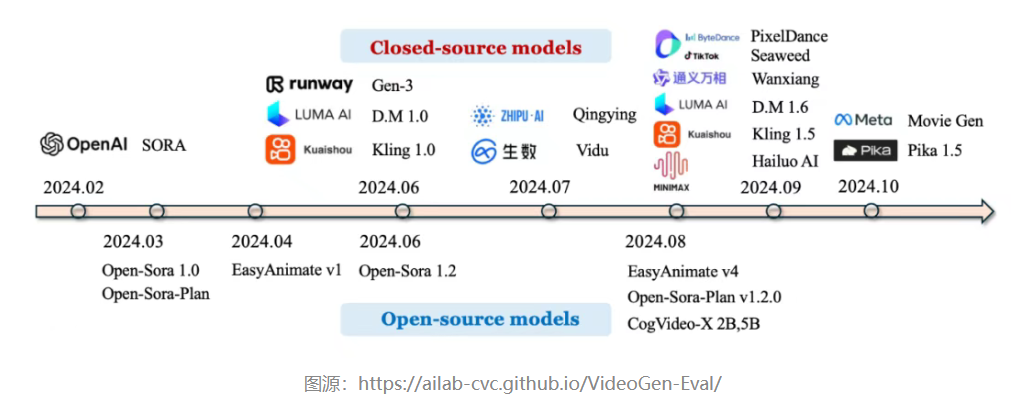

The turning point came in 2024. In February, OpenAI unveiled Sora, powered by the DiT (Diffusion + Transformer) architecture, which broke through bottlenecks in video generation such as duration, clarity, and logical consistency, bringing "AI-generated videos" to the attention of the mass market for the first time.

Subsequently, more vendors adopted DiT or other hybrid architectures and released a succession of closed-source products comparable to Sora, such as Runway Gen-3 and Luma Dream Machine in June 2024, and Kuaishou Keling, fully launched at the end of July.

As we entered the second half of the year, video generation models witnessed explosive growth. Giants like Alibaba and ByteDance invested heavily, with startups like Zhipu and MiniMax quickly following suit. AI videos, once questioned due to technological bottlenecks, have now become one of the most competitive sectors for AI model commercialization.

01 The "Warring States" of Large and Small Companies: How Strong Are Their Hands?

Unlike large language models that have undergone three years of evolution and refinement, AI video generation models are still in the nascent stages of a "warring states" period in terms of both performance and market structure.

Since these models emerged in quick succession in 2024, the field has yet to produce dominant leaders comparable to ChatGPT, Claude, and Gemini in the LLM space, whether in model capabilities, user perception, or concrete deployment scenarios.

No unified evaluation system for video generation models has been established yet; "temporal consistency," "frame quality," "prompt adherence," and "generation stability" are among the commonly used criteria. Judging by the trends across multiple evaluation rankings, model positions still fluctuate significantly, and no lead has solidified.

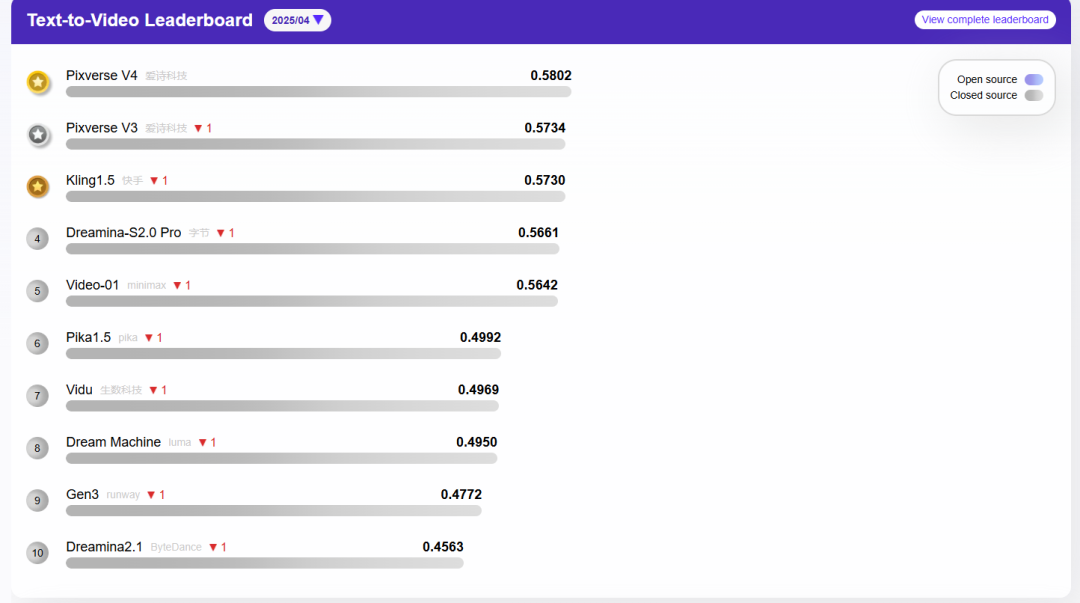

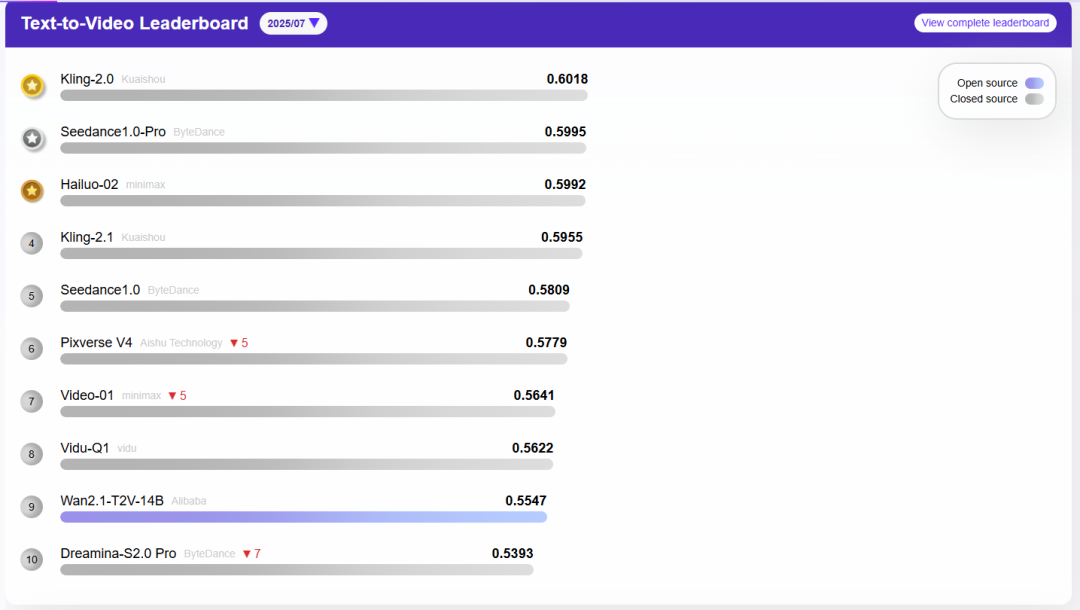

Taking the AGI-Eval rankings (jointly released by multiple top domestic universities and research institutions) in April and July of this year as examples, in just three months, the top ten rankings underwent a significant shuffle, with only Pika 1.5, MiniMax's Video-01, and Aishi Technology's PixVerse V4 maintaining their positions. Other previously listed models were either replaced by their iterative versions or surpassed by newcomers.

It's worth mentioning that, in addition to internet giants like Alibaba and ByteDance, startups such as MiniMax and Aishi Technology also feature prominently in the rankings, with domestic vendors slightly outnumbering foreign ones.

Image source: AGI-Eval official website

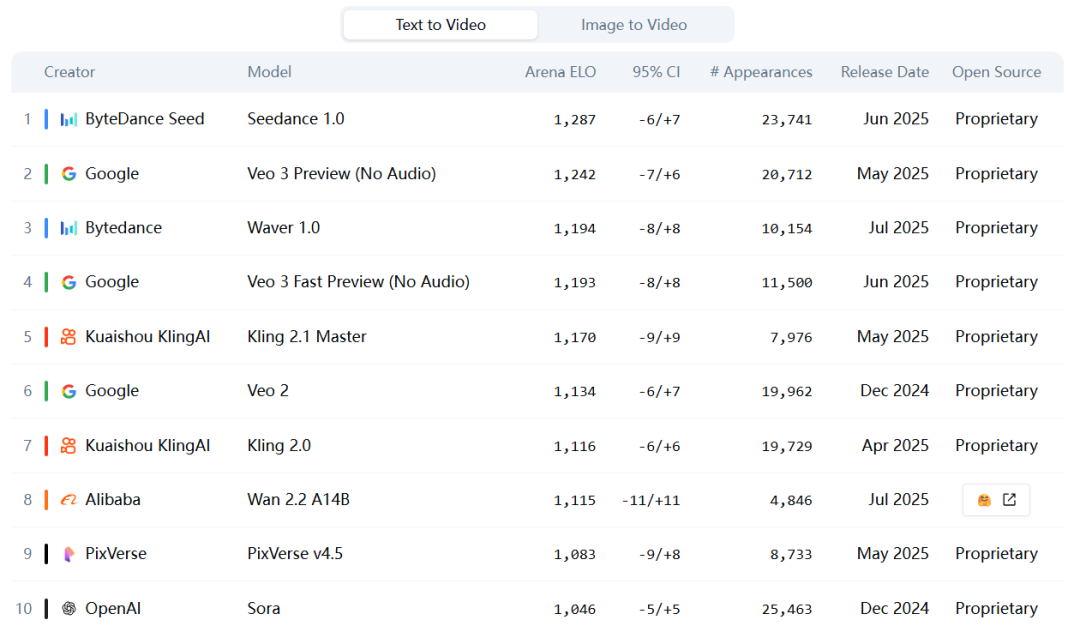

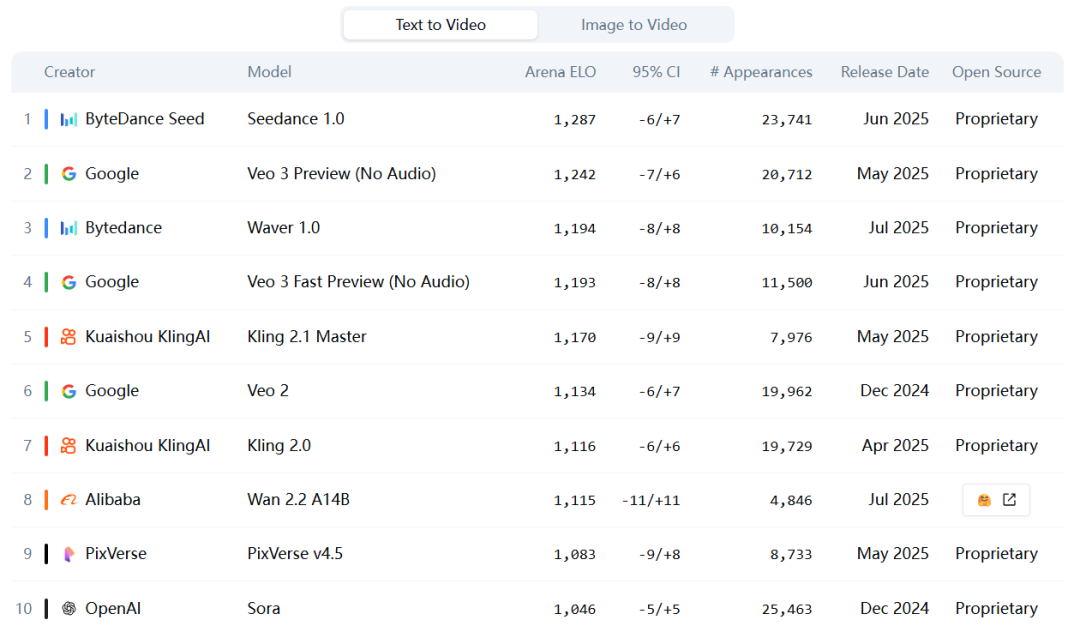

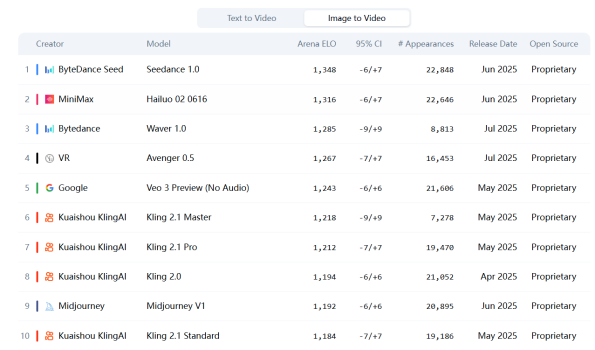

On the other hand, because the generation paths differ, text-to-video and image-to-video models are also evaluated on significantly different dimensions. According to the July 2025 rankings by international evaluation agency Artificial Analysis, only half of the models ranked in the top ten of both categories at once, a further sign that model capabilities are still evolving rapidly and have not yet solidified.

Image source: Artificial Analysis official website (as of August 6, 2025)
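The "only half of the models appear in both top tens" observation is a simple set overlap between two leaderboards. The sketch below illustrates the calculation only: the function name `top_k_overlap` and the model names are hypothetical placeholders, not the actual Artificial Analysis entries.

```python
def top_k_overlap(ranking_a: list[str], ranking_b: list[str], k: int = 10) -> float:
    """Share of the top-k slots of ranking_a filled by models also in ranking_b's top-k."""
    top_a, top_b = set(ranking_a[:k]), set(ranking_b[:k])
    return len(top_a & top_b) / k


# Hypothetical placeholder names, NOT the real leaderboard entries.
text_to_video = [f"model_{i}" for i in range(1, 11)]   # model_1 .. model_10
image_to_video = [f"model_{i}" for i in range(6, 16)]  # model_6 .. model_15

print(top_k_overlap(text_to_video, image_to_video))  # 0.5
```

An overlap of 0.5 corresponds to the situation described above, where half of the top-ten models in one category also make the top ten in the other.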

Many voices now suggest that large language models have entered a "technological plateau": GPT-5 has been delayed, Claude 4 arrived after an 11-month gap between versions, and new DeepSeek products have yet to appear. Video generation models, by contrast, are still iterating explosively as they move from early-stage to mature, with both large enterprises and startups shipping new releases every two to four months.

Take Kuaishou Keling as an example: since its launch in June 2024, it has introduced a new feature every two months on average, and in the first half of 2025 it completed two major version iterations, from 1.6 to 2.1. MiniMax's Conch likewise went through three iterations in the three months after its launch in August last year and introduced a new model, Conch 02, in June this year.

Although the overall landscape is not yet clear, over the past six months domestic vendors such as Kuaishou, ByteDance, MiniMax, and Aishi Technology have consistently held top-10 positions across evaluations, placing them in the first tier.

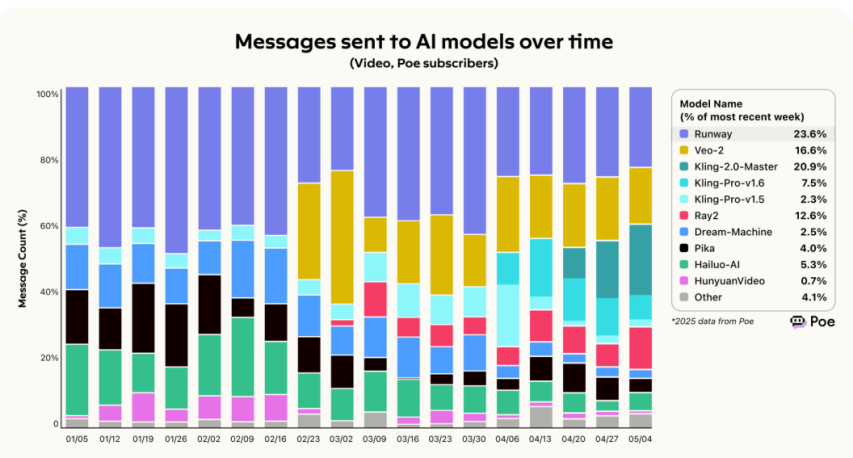

This trend is also reflected in user-side usage rankings. According to the Poe rankings, Kuaishou Keling and MiniMax Conch have long held leading market shares. ByteDance Jimeng does not appear in the May rankings because its new version launched late (June 2025).

Image source: Poe, "Report: 2025 Spring AI Model Usage Trends"

02 The Explosive Rise of AI Video Generation: Content Soil, Cost Revolution, and Platform Competition

To some extent, the explosive growth of AI video generation in the past year is the result of "right time, right place, right people."

In addition to the technological breakthrough brought by Sora, the continuous expansion of video content constitutes the most solid "soil" for the implementation of AI videos. According to QuestMobile data, as of September 2024, the monthly active users of China's mobile video industry have reached 1.136 billion, with significant growth, and video content is becoming the core carrier of traffic.

More crucially, AI significantly reduces the labor costs, time costs, and technical thresholds of video production. Live-action video involves complex processes such as directing, casting, location work, and post-production, while animation requires highly skilled work such as rendering, modeling, and special effects; with AI, comparable footage can be "one-click generated" within seconds.

A typical example: a top-tier animated film from Disney or Pixar costs up to US$2 million per minute to produce, while similar footage generated by AI models can currently bring the unit cost down to around US$300 per minute.
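To put that gap in proportion, the two per-minute figures can be compared directly. The snippet below is only a back-of-the-envelope sketch: it takes the article's two figures at face value, and the function name `cost_ratio` is introduced here purely for illustration.

```python
# Per-minute production costs quoted in the article (US dollars).
TRADITIONAL_COST_PER_MIN = 2_000_000  # top-tier animated film
AI_COST_PER_MIN = 300                 # comparable AI-generated footage


def cost_ratio(traditional: float, ai: float) -> float:
    """Return how many times more expensive the traditional route is."""
    if ai <= 0:
        raise ValueError("AI cost must be positive")
    return traditional / ai


ratio = cost_ratio(TRADITIONAL_COST_PER_MIN, AI_COST_PER_MIN)
saving = 1 - AI_COST_PER_MIN / TRADITIONAL_COST_PER_MIN

print(f"Traditional production costs about {ratio:,.0f}x more per minute")
print(f"Unit cost reduction: {saving:.3%}")
```

On these figures, the traditional route is several thousand times more expensive per minute, which is why even imperfect AI output is attractive for low-budget content.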

Although current video generation models still have obvious shortcomings in terms of effect stability and plot coherence, usually only outputting clips of a few seconds to a few minutes, this happens to match the requirements of light content scenarios such as short videos and short dramas.

Short videos not only have lower requirements for duration but also have fragmented user attention, making the content form itself more tolerant of errors. AI tools have naturally become efficiency enhancement tools for short video editors, MCN agencies, and even ordinary creators.

To capture the creator market quickly, video generation vendors have taken the opposite path from large language models, which moved from closed-source to open-source: they start from open-source and grant users a certain amount of free usage, then, after attracting new users and cultivating usage habits, monetize through subscriptions.

For example, Kuaishou Keling, ByteDance Jimeng, and Tencent Hunyuan steer consumer (C-end) users toward subscriptions by offering points and free uses. Baidu Huixiang launched with a free trial in early July 2025, while Tongyi Wanxiang was open-sourced and distributed on GitHub as model source code.

Relying on the distribution resources and user traffic of content platforms, content giants like ByteDance and Kuaishou have more advantages in occupying domestic C-end users and have begun to build a closed-loop ecosystem of "model generation - content creation - platform distribution," injecting AI capabilities directly into the short video author chain to achieve natural penetration of video models among users.

In contrast, many startups such as Aishi Technology's PixVerse and MiniMax's Conch have targeted overseas markets.

Taking Aishi Technology as an example, previously public data indicated that PixVerse's total user base has reached over 60 million, with over 16 million monthly active users. When this data was publicly released, Aishi had not yet launched domestic products, and in terms of user volume alone, PixVerse has become the AI video generation product with the largest global user base.

In terms of model performance, domestic startups have outperformed overseas competitors in multiple rankings. However, lacking natural traffic entry points and brand advantages in the domestic market, they have found going overseas to be the best way to bypass the strong platforms and find blue oceans.

Additionally, Shengshu Technology's Vidu has chosen to target the enterprise (B-end) market, collaborating with Feishu and Baidu Search in April this year. Before that, it had also collaborated with AR and VR device manufacturers, film and animation websites, and others.

03 Templates or Tools? The Diverging Paths of AI Video Generation

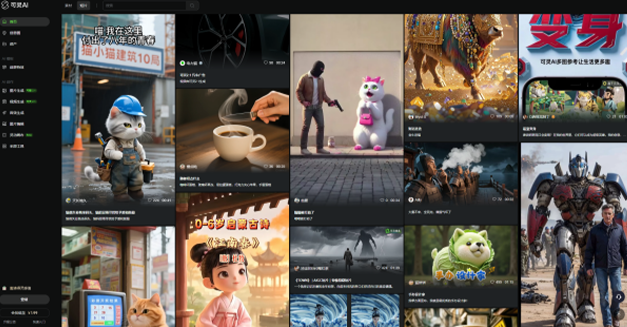

Comments on social media platforms suggest that, when serving short video creation, Kuaishou's and Douyin's tools have different content strengths: Keling excels in Chinese-style and anime content, while Jimeng excels in realistic and artistic content generation.

To some extent, each has chosen its vertical track to match the content characteristics of its own platform. Kuaishou, oriented toward recording real life, is more down-to-earth in its template selection, while Douyin, oriented toward entertainment and trends, stands out more in stylization capabilities.

Source: Keling official website

Source: Jimeng official website

However, whether it's Keling or Jimeng, once AI video generation models are put to work on short video creation, the effectiveness and stability of template effects and of prompt design become unavoidable topics in AI video generation tutorials.

In other words, "quick selection, few modifications, stable output" are the core variables that determine the frequency of tool use.

In fact, Aishi Technology's PixVerse owes its large overseas user base in part to its templated approach to video generation. By providing numerous "template effects" of around 5 seconds, it simplifies the creation path for creators. Similar functions are also found in Conch and Keling.

The advantage of template effects is that they greatly lower the production threshold for creators and yield videos of average quality. Their side effects are just as obvious: they can generate a large volume of content, but they cannot prolong its life cycle. Once users experience aesthetic fatigue, content popularity fades quickly, and ROI is difficult to sustain.

And this is precisely the structural dilemma faced by AI videos on content platforms - high efficiency but difficult to create "classics."

From this perspective, AI videos still need to explore model generation modes for long-duration, high-quality videos.

Runway, founded in 2018 and one of the earliest entrants in video generation, is ahead of the curve here. According to official data, although Runway's website traffic over the past year was less than half that of Keling, its ARR was almost six times that of Keling.
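Taken together, the traffic and ARR comparisons imply a large gap in revenue per visit. The following is a minimal sketch that treats "less than half" as an upper bound of 0.5 and "almost six times" as roughly 6; both constants are approximations of the wording above, not reported figures.

```python
# Ratios of Runway relative to Keling, approximated from the article's wording.
TRAFFIC_RATIO_UPPER = 0.5  # "less than half" the website traffic (upper bound)
ARR_RATIO_APPROX = 6.0     # "almost six times" the ARR (approximation)

# ARR per unit of traffic, Runway relative to Keling.
# A smaller traffic ratio would only push this figure higher.
arr_per_visit_ratio = ARR_RATIO_APPROX / TRAFFIC_RATIO_UPPER

print(f"Runway's ARR per visit is roughly {arr_per_visit_ratio:.0f}x Keling's")
```

Under these assumptions Runway monetizes each visit on the order of twelve times as effectively, which is consistent with a business built on fewer, higher-value customers.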

Runway's business logic is diametrically opposed to the short video model: it produces relatively high-quality film and television content by collaborating with high value-added industries such as film companies.

The difference is that Runway positions video generation as a "creativity efficiency tool" rather than a low-threshold production tool. Its core functions include mid-frame control, replication of character expressions and movements, and video expansion. Efficiency improves, but the core creative output remains under human control.

As more and more AI products come to market, the question facing the industry and the era needs to shift from evaluating how efficiently AI converts effort into output to clarifying the boundaries within which AI tools should be used, so that genuinely new content can grow from them.