This robot show is all-in, not just for show but for real-world applications

08/14 2025

Author | Bai Xue, Mao Xinru

"This is not the spring of robots, but their summer."

This may be the most lively and insightful World Robot Conference in its 10-year history.

For one thing, the number of exhibitors reached an all-time high, with over 200 domestic and foreign robot enterprises and more than 1,500 exhibits.

More importantly, these robots are now in motion.

An exhibition staff member who has attended the conference for six consecutive years mentioned, "There has been a big change from last year to this year. Last year, the robots were all mounted up; this year, they are all in motion."

The World Robot Conference is also a microcosm of the robot industry chain. Hall B, where the robot body makers gathered, was crowded with visitors. Just inside the entrance, companies such as Zhongqing, Zhuji Power, Fourier, Magic Atom, Vita Power, Qianxun Intelligence, and Xinghai Map were lined up. Whenever a booth staged a boxing match or a dance performance, it became impossible to squeeze in.

Hall A gathered star robot companies such as Unitree, UBTech, Wisdom Square, and Crossrobot.

Hall C mainly gathered suppliers providing software and hardware solutions and core components for the robot industry, such as Hesai Technology, RoboSense, Lingxin Qiaoshou, Dexterous Intelligence, and Aoyi Technology.

Behind the hustle and bustle, robots are evolving from remote control and programming to autonomous thinking, and from showy performances to scenario-based applications. Look one step further, though, and humanoid robots, which sit at the top of the food chain, are still far from commercialization. A humanoid robot that costs several million dollars to develop relies either on financing or on the profits from the company's delivery robots to support its engineering team.

Every enterprise is trying very hard to make itself look more attractive.

When you're tired of watching robots shovel popcorn, you'll suddenly see parents pushing children in wheelchairs to learn about lower limb exoskeleton robots. At this point, you'll realize that technology is advancing rapidly, and it hasn't left anyone behind.

Some say there is no consensus at this conference, but that's not true. There are debates about algorithms versus data, real versus simulated data, model capabilities, and robot forms. These debates happen to be the foundation of the era of robot chaos.

The advanced forms of embodied intelligence pursued by all robot companies and their frantic displays are precisely the wonderful aspects of this conference.

This time, we attempt to sort out the initial consensus of the industry from five key areas: robot brains, chips, bodies, eyes, and hands.

Robot Brain: VLA becomes the source of ten thousand models, and the ability to think is the fully evolved form

General Robot = General Brain + General Body, which is the industry's basic understanding of general robots.

Judging from what was on show at WRC, manufacturers' general brains fall into three levels of capability:

Beginner: Robot movements mainly rely on remote control and programming. For example, a mysterious man in black stands behind the robot; he is the human operator controlling it.

Intermediate: Can achieve a certain degree of autonomous thinking in some scenarios, such as autonomously sorting goods in a delivery scenario.

Advanced: Possesses a high degree of cross-scenario generalization ability and can think autonomously in most scenarios. However, products with this capability have not yet emerged, mainly because the vision-language-action (VLA) model is still in the laboratory stage.

Advanced capabilities can be understood as the critical point of robot brains. Wang Xingxing gave an example, stating that the critical point of robots should be that even if a robot comes to an unfamiliar venue and is told to bring a bottle of water to the audience, it can complete the task independently.

To achieve this level of autonomous thinking, the mainstream approach in the industry is to develop towards the VLA model. This model integrates visual perception, language understanding, and physical action, enabling robots to understand human instructions and the current environment and, ultimately, to complete tasks on their own from language instructions.

The most obvious trend at WRC is that robot brains are converging on the VLA model as the source of "ten thousand models", with representative enterprises such as Xingdong Jiyuan, Xinghai Map, Qianxun Intelligence, Yinhe Universal, and Lingchu Intelligence.

In December last year, Xingdong Jiyuan, the only enterprise in which Tsinghua University holds shares, released iRe-VLA, an algorithm framework that uses reinforcement learning to train embodied large models.

With the framework integrated into the embodied large model ERA-42, a single end-to-end VLA model can control dexterous whole-body manipulation on a highly articulated humanoid robot, such as sorting flexible items and scanning codes.

At WRC, Xingdong Jiyuan applied the embodied intelligence large model ERA-42 to the full-size humanoid robot Xingdong L7. In the on-site logistics simulation scenario, multiple Xingdong L7 robots can work collaboratively without relying on programming: one is responsible for intelligent sorting of packages, and the other is responsible for intelligent scanning. Even if the QR code of the package is on the other side, it can autonomously turn it over and recognize the QR code, showing a significant improvement in learning ability.

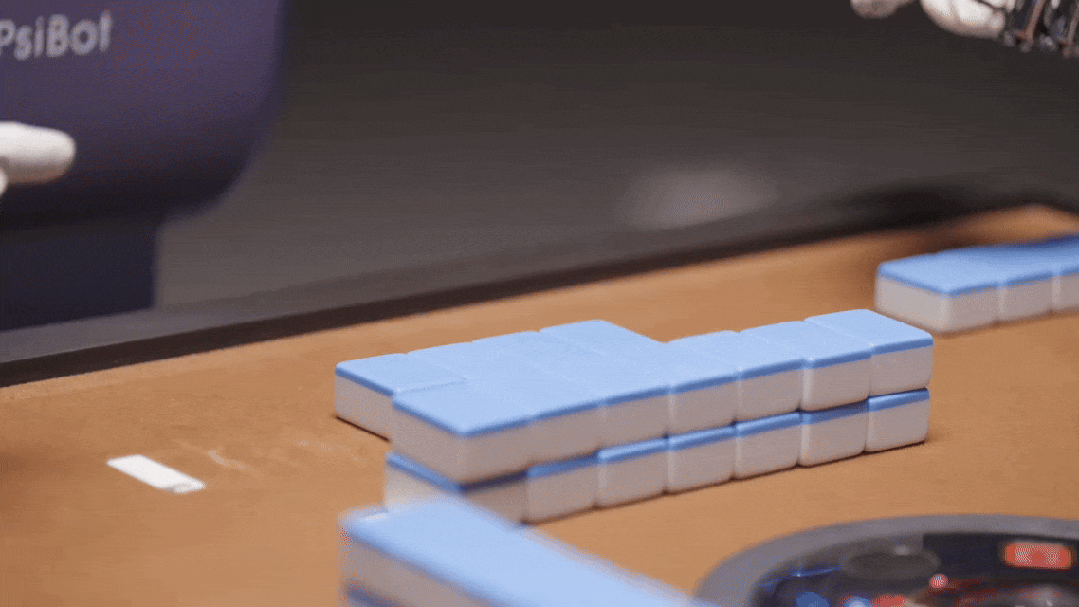

Similarly, Lingchu Intelligence also introduced the end-to-end embodied VLA model Psi-R1 based on reinforcement learning (RL) this year.

The Psi-R1 model proposes a hierarchical architecture with a fast brain and a slow brain. The slow brain, the S2 system, focuses on reasoning: it takes the language and action information from the VLA model as input and handles abstract scene understanding, task planning, and decision-making. The fast brain, S1, focuses on high-precision control.

A significant change is that the Psi-R1 model combines historical actions with the current environmental state to understand the long-term impact of actions, and it can sustain long-horizon CoAT thinking chains of 30 minutes or more.
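The split described above, a slow planner that looks at past actions plus the current state and a fast controller that executes, can be sketched in a few lines. This is only a toy illustration under stated assumptions: `SlowPlanner`, `fast_controller`, and `run_episode` are hypothetical names, and the rule-based planner stands in for a learned model. It is not Lingchu Intelligence's implementation.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class SlowPlanner:
    """Hypothetical stand-in for a slow 'S2' brain: chooses the next subgoal."""
    # Bounded memory of actions already taken (assumed size, for illustration).
    history: deque = field(default_factory=lambda: deque(maxlen=100))

    def next_subgoal(self, state: dict) -> str:
        # Combine the action history with the current state before deciding.
        already_done = set(self.history)
        for step in state["todo"]:
            if step not in already_done:
                return step
        return "idle"

    def record(self, action: str) -> None:
        self.history.append(action)


def fast_controller(subgoal: str) -> str:
    """Hypothetical stand-in for a fast 'S1' brain: turns a subgoal into a command."""
    return f"execute:{subgoal}"


def run_episode(todo: list[str], max_steps: int = 10) -> list[str]:
    planner = SlowPlanner()
    commands = []
    for _ in range(max_steps):
        subgoal = planner.next_subgoal({"todo": todo})
        if subgoal == "idle":
            break
        commands.append(fast_controller(subgoal))
        planner.record(subgoal)
    return commands


if __name__ == "__main__":
    print(run_episode(["pick", "flip", "scan"]))
```

Because the planner consults its history, it never repeats a finished step, which is the property a long task such as a 30-minute game depends on.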

At WRC, Lingchu Intelligence's mahjong robot "showed off" by playing mahjong games of more than 30 minutes with members of the audience. Most impressive was that it made game decisions such as calling pong and kong on its own, demonstrating the VLA model's ability to construct decision chains dynamically.

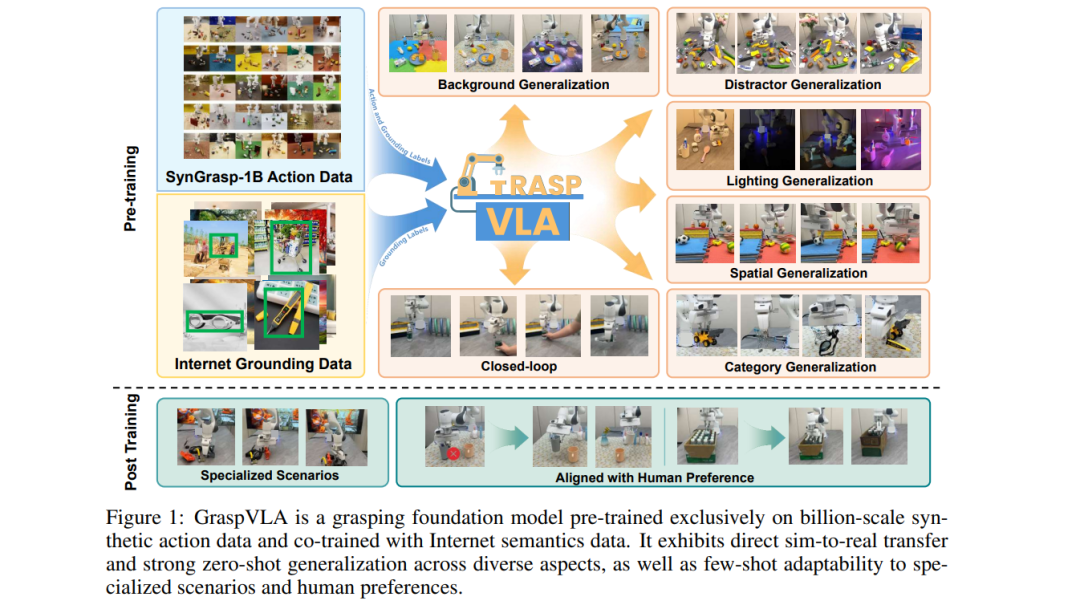

Yinhe Universal also adopted the end-to-end embodied grasping basic large model GraspVLA, making a splash at WRC.

GraspVLA is mainly composed of a VLM backbone network module and an action expert module, where VLM includes a 1.8B large language model, a visual encoder, and a trainable projector.

Ultimately, the VLM module is responsible for visual observation and text instructions, while the action module is responsible for action generation.
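To make the division of labor concrete, here is a minimal structural sketch of a VLM backbone (visual encoder, projector, language model) feeding an action expert. Everything in it is an assumption made for illustration: the class names, the function signatures, and the toy arithmetic inside `build_toy_policy` are hypothetical and do not come from GraspVLA's code.

```python
from dataclasses import dataclass
from typing import Callable, List

Vector = List[float]
Image = List[List[float]]


@dataclass
class VLMBackbone:
    visual_encoder: Callable[[Image], Vector]        # image -> visual features
    projector: Callable[[Vector], Vector]            # features -> language-model input space
    language_model: Callable[[Vector, str], Vector]  # projected features + instruction -> hidden state

    def encode(self, image: Image, instruction: str) -> Vector:
        features = self.visual_encoder(image)
        tokens = self.projector(features)
        return self.language_model(tokens, instruction)


@dataclass
class VLAPolicy:
    vlm: VLMBackbone
    action_expert: Callable[[Vector], Vector]  # hidden state -> action

    def act(self, image: Image, instruction: str) -> Vector:
        return self.action_expert(self.vlm.encode(image, instruction))


def build_toy_policy() -> VLAPolicy:
    """Wire the modules together with toy functions (placeholders, not real networks)."""
    vlm = VLMBackbone(
        visual_encoder=lambda img: [sum(row) / len(row) for row in img],
        projector=lambda feats: [2.0 * f for f in feats],
        language_model=lambda toks, text: toks + [float(len(text.split()))],
    )
    return VLAPolicy(vlm=vlm, action_expert=lambda hidden: [sum(hidden)])


if __name__ == "__main__":
    policy = build_toy_policy()
    print(policy.act([[0.0, 1.0], [1.0, 1.0]], "grasp the cup"))
```

The point of the sketch is the data flow: perception and instruction understanding stay inside the VLM, and only its output reaches the module that generates actions.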

Yinhe Universal emphasizes the advantage of its generalist + specialist approach to model training. The generalist stage uses billions of frames of simulation-rendered data to improve the model's generalization and familiarize it with changes in objects and environments. The specialist stage then trains on real data from specific scenarios.

Yinhe Universal has specifically developed the end-to-end embodied large model GroceryVLA for the retail industry. At the WRC booth, Yinhe Universal set up a small supermarket for its humanoid robot Galbot. Even with different SKUs and product packaging categories, Galbot can still distinguish materials, accurately identify and grab products according to orders, and hand them over to customers.

Xinghai Map also entered the VLA race this time, bringing its "true end-to-end + true full-body control" VLA model G0 to WRC for the first time. Given voice commands, this model can already have a robot make a bed on its own.

Even though the VLA model has become a hot topic for robot brains this year, different companies have different skill points even within the same VLA framework.

Chen Jianyu, the founder of Xingdong Jiyuan, believes that there are three points that will determine the capabilities of robot brains in the future:

The model architecture determines the upper limit of brain capacity, the richness and quality of data determine the degree of completion of actions, and the quality and responsiveness of the body determine the upper limit of execution.

Therefore, model development for VLA is still a long road of further study.

Robot Chips: NVIDIA and Digua Robotics make appearances

This WRC has undoubtedly become a "martial arts field" for various robots. As a key component of the robot's "brain", chips are crucial for determining the robot's perception and decision-making capabilities.

Behind the flexible brains of many robots, there are actually two shovel sellers: one is NVIDIA, and the other is Digua Robotics.

The two shovel sellers demonstrate entirely different computing power routes for robots. NVIDIA represents "high-end general-purpose computing power + simulation/training ecosystem", targeting scenarios requiring large model perception, high concurrency inference on the edge, and complex simulation; Digua Robotics represents "low-cost/customized integrated computing control + developer ecosystem", focusing on large-scale deployment in consumer-grade and structured scenarios.

As two leading companies in domestic embodied intelligence, Unitree Technology and Yinhe Universal have become customers of NVIDIA.

Yinhe Universal's G1 Premium humanoid robot is one of the first humanoid robots equipped with NVIDIA Jetson Thor, demonstrating smoothness and operating speed in complex scenarios such as industrial palletizing, depalletizing, and material box handling.

Unitree Technology has deployed NVIDIA's full-stack robotics technology on its new humanoid robot R1, optimizing motion and control capabilities through the Isaac Sim high-fidelity simulation platform and achieving rapid policy iteration with Isaac Lab.

In addition, the soccer-playing Booster T1 from Booster Robotics uses the NVIDIA Jetson AGX Orin, providing 200 TOPS of AI computing power; the R1 series from Xinghai Map all use the NVIDIA Jetson AGX Orin 32GB; and Zhongqing's SE01 uses the NVIDIA Jetson Orin Nano.

Digua Robotics also showcased the landing applications of five partners this time, covering everything from robotic arms to quadruped robots to humanoid robots.

Vita Power's all-terrain autonomous mobile accompanying robot Vbot is equipped with Digua Robotics' RDK S100P as its AI brain. With 128 TOPS of edge computing power and an autonomous driving-grade sensor system, it can "see, hear, think, and converse".

The myCobot 280 RDK X5 robotic arm from Elephant Robotics uses Digua Robotics' RDK X5 as its AI computing platform, with 10 TOPS of computing power and support for over 100 open-source algorithm models, covering scenarios such as YOLO World, VSLAM, object detection, and semantic interaction.

The Qinglong robot, jointly built by the state and local governments, is equipped with Digua Robotics' RDK S100P intelligent computing platform. With 128 TOPS of edge AI computing power, it achieves a full-link closed loop of "speech-vision-grasping".

The chip applications also show that "cerebellum-cerebrum collaboration" will become the norm.

Real-time control and low-latency decision-making are placed in the local cerebellum, such as an MCU, while complex perception and high-level planning are placed in the high-computing power "cerebrum", such as a GPU, BPU, NPU, etc., thereby forming a system that balances cost and capability.
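A minimal simulation can illustrate why this split balances cost and capability: the cheap control step runs on every tick, while the expensive planning step runs only occasionally and the controller simply reuses its latest target. The function name, tick rates, gain, and waypoints below are all assumptions chosen for illustration; no vendor's control stack is being described.

```python
def run_control_loop(ticks: int, plan_every: int = 100, gain: float = 0.05) -> float:
    """Simulate a fast loop (every tick) that tracks targets set by a slow loop."""
    position = 0.0
    target = 0.0
    waypoints = iter([1.0, 2.0, 3.0])  # hypothetical plan produced by the "cerebrum"
    for tick in range(ticks):
        if tick % plan_every == 0:
            # "Cerebrum": slow, compute-heavy planning updates the target occasionally.
            # If the plan is exhausted, the last target is kept.
            target = next(waypoints, target)
        # "Cerebellum": a cheap proportional step that runs on every tick.
        position += gain * (target - position)
    return position


if __name__ == "__main__":
    # With 1 ms ticks, this would correspond to 1 kHz control and 10 Hz planning.
    print(round(run_control_loop(300), 3))
```

The fast loop never waits for the planner; it always acts on the most recent target, which is what keeps latency low on the microcontroller side.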

Digua Robotics' design philosophy for the RDK S100 advocates this kind of heterogeneous collaboration, while robots built on NVIDIA's full platform push more of the "brain" capabilities to the edge to achieve stronger perception and online generalization.

Robot Body: Emotional Needs Sprout, Full Self-Research of Key Components is Still Premature

Throughout the WRC, the most attention is still on robot body enterprises.

The first and most obvious change is in form, with robots becoming more diverse in size.

Humanoid robots mainly cluster in two size ranges. One is lightweight, small-sized robots such as the Unitree G1, with heights concentrated in the 120-130cm range. For example, Unitree's third humanoid robot, the Unitree R1, stands at 127cm and weighs only 25kg.

In contrast are full-size robots, often towering at over 170cm. A typical example is Tesla's robot Optimus, standing at 172cm and weighing 73kg. Another example is Zhongqing Robotics' newly released T800, resembling a giant with a height of 1.85 meters and a weight of 85kg.

There were many medium and small-sized humanoid robots around 140cm to 160cm at the WRC. Magic Atom's newly launched small humanoid robot, MagicBot Z1, stands at 140cm and weighs 40kg, capable of instantly springing to its feet.

This time, Luming Robotics also exhibited the Lumos LUS2 at the WRC, which can spring to its feet in a second. With a height of 160cm and a weight of 55kg, its appearance is closer to that of humans.

Huang Hao, co-founder of Luming, told Xinghe Frequency that they believe the humanoid robot industry will gradually converge to the form of 160cm robots.

The reasons behind this are related to stability, joint size, and cost.

The core reason given is that the center of gravity of a 160cm robot is about 33% higher than that of a 120cm robot, which is said to lower the threshold for staying stable during dynamic balance and so improve stability.
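The 33% figure follows directly from the height ratio, and one common way to reason about the stability claim is the linear inverted pendulum model, in which a disturbance grows with time constant sqrt(h / g). The sketch below works through that arithmetic. It rests on two assumptions that are not in the article: that the center of mass sits at the same fraction of body height for both robots (`COM_FRACTION` is a placeholder value), and that the pendulum model is a fair approximation. The article does not say this is the reasoning Luming used.

```python
import math

GRAVITY = 9.81       # m/s^2
COM_FRACTION = 0.55  # assumed center-of-mass height as a fraction of body height


def height_increase(tall_cm: float, short_cm: float) -> float:
    """Relative increase in height (and in CoM height, if the fraction is equal)."""
    return tall_cm / short_cm - 1.0


def fall_time_constant(com_height_m: float) -> float:
    """Time constant sqrt(h / g) of a linear inverted pendulum with CoM height h."""
    return math.sqrt(com_height_m / GRAVITY)


if __name__ == "__main__":
    print(f"CoM height increase: {height_increase(160, 120):.1%}")
    tall = fall_time_constant(COM_FRACTION * 1.60)
    short = fall_time_constant(COM_FRACTION * 1.20)
    print(f"time-constant ratio: {tall / short:.3f}")
```

Under these assumptions the taller robot tips over more slowly (the ratio is sqrt(4/3), roughly 1.15), leaving the controller more time to react.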

Even so, Luming Robotics also exhibited its small humanoid robot NIX for the first time at the WRC, with a height comparable to that of a 3-year-old child.

The second major change is that the robot body now has more diverse emotional expressions.

Traditional humanoid robots have two directions. One is simulation-level robots with highly realistic facial features, and the other is robots with a sci-fi appearance, whose bodies and facial features are more superhuman.

Fourier's newly released humanoid robot GR-3 at the WRC created a new appearance.

From the outside, the traditional robot's neck has been transformed into a thick scarf, and a layer of leather has been added to the originally cold engineering plastic. The color scheme has changed from the mainstream black, white, and gray to softer tones, visually weakening the cold feel of traditional robots.

Internally, it focuses on full-sensory interaction, with GR-3 equipped with 31 sensors forming a tactile perception array.

Calling or stroking GR-3 can trigger "fast thinking" feedback, such as quickly turning the head to make eye contact or gently shaking the head in response. Repeated triggering of the same command will activate the "slow thinking" mode.

The large model inference engine understands complex semantics, interaction history, and trigger features to generate more natural and scene-appropriate responses.

This interactive form combining skin touch provides a new idea for the anthropomorphization of humanoid robots.

The third change is that self-research has become the mainstream direction, but full-stack self-research is still premature.

Behind the robot body's expansion is the deep integration of the entire robot industry chain. Across the WRC, many enterprises are attempting to develop core components in-house in order to cut costs and master key technologies.

Currently, Luming Robotics is already independently developing core components such as robot joint modules, tactile grippers, and seven-axis data acquisition robotic arms.

Huang Hao told Xinghe Frequency that joint modules account for about 40% of the overall machine cost, and the parts they choose to self-research are those with high costs and high technical requirements.

But he believes that the entire general-purpose robot industry is actually in a relatively early stage, and it is too early to talk about full-stack self-research.

It is necessary to first establish overall supply chain capabilities before it becomes possible to trend towards full-stack self-research from chips to software and hardware, similar to automotive enterprises.

Dexterous Hands: Transitioning from Single-Point Demonstrations to Scenario-Based, Deployable Solutions

Dexterous hands, as the last centimeter of humanoid robots, determine the upper limit of the robot's operational capabilities. As robot bodies have become more stable and the market has demanded more of robots' operational capabilities, dexterous hands have evolved from the initial "single-point demonstrations" to scenario-based, deployable solutions.

More than 10 dexterous hand manufacturers exhibited at this year's WRC, bringing over 20 dexterous hand products, a significant increase from last year.

In terms of technical approaches, the transmission schemes are diversified, with a noticeable increase in the use of tendon-driven schemes.

Currently, most products on the market still use the linkage scheme, with degrees of freedom ranging from 6 to 11.

The tendon-driven scheme can provide higher degrees of freedom and, in theory, is the most capable of breaking the impossible triangle of dexterous hands. Both new dexterous hand products exhibited this time adopt the tendon-driven scheme.

Cyborg Robotics' Cyborg-H01 achieves a 40% reduction in weight and over 40% cost reduction compared to traditional schemes through the tendon-driven scheme and a single motor driving multiple joints structure.

Xynova Flex 1 from Xynova Future boasts 25 degrees of freedom with a joint position control accuracy of 0.75°, a 25% improvement over international standards.

In addition, vendors like Dexterous Intelligence, which adopts the tendon-driven scheme across its entire product line, also exhibited three-finger to five-finger dexterous hand products.

Among them, DexHand021 Pro, as a high-DOF dexterous hand, will be officially released in the second half of the year.

At the WRC, Linker Robot, which launched the Linker Hand L6 and L20 industrial versions, also exhibited the Linker Hand L30 research version, which adopts the tendon-driven scheme and currently has the highest degrees of freedom.

Secondly, the weight of perception and touch in the "decision-making loop" has increased, with high-density tactile sensors gradually becoming a standard configuration. The dexterity of a hand cannot simply be equated with its number of degrees of freedom. The true measure is the deep integration of tactile sensing, force control, and multimodal vision. In other words, the robot needs to understand "how to grasp, how tightly to grasp, and whether to adjust".

The DH-5-6 dexterous hand from Dahuan Robotics is equipped with an ion-active layer tactile array on the fingertips and palm, which can capture pressure distribution, texture features, and sliding trends in real-time, supporting adaptive grasping and abnormal touch recognition.

Walker S2 from UBTech is equipped with its self-developed dexterous hand, using binocular vision and array tactile sensing to recognize the sliding friction coefficient of different materials, with force fluctuations controlled within ±0.5N when grasping fragile items.
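The "how tightly to grasp, and whether to adjust" part of that loop can be illustrated with a very small rule: tighten the grip only when the tactile array reports slip, and never exceed a force ceiling. This is a deliberately simplified sketch. The function names, the 0.25 N step, and the 5 N ceiling are hypothetical values chosen for illustration, not figures from Dahuan Robotics or UBTech.

```python
from typing import List


def adjust_grip_force(force_n: float, slip_detected: bool,
                      step_n: float = 0.25, max_force_n: float = 5.0) -> float:
    """Raise the grip force by one step when slip is sensed, capped at a ceiling."""
    if slip_detected:
        return min(force_n + step_n, max_force_n)
    return force_n


def grip_trace(initial_force_n: float, slip_signals: List[bool]) -> List[float]:
    """Apply the rule over a sequence of tactile readings and log the force."""
    force = initial_force_n
    trace = []
    for slipping in slip_signals:
        force = adjust_grip_force(force, slipping)
        trace.append(force)
    return trace


if __name__ == "__main__":
    # Slip is reported on the second and third readings only.
    print(grip_trace(1.0, [False, True, True, False]))
```

A real hand would also relax the grip, filter sensor noise, and use vision to choose the grasp pose; the sketch only shows why tactile feedback belongs inside the control loop rather than after it.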

In the past, many dexterous hand manufacturers focused on hardware research and development while neglecting the synergy of software and algorithms. However, for robots to operate accurately in complex scenarios, it is necessary to adopt a "combined hardware and software" approach.

Nowadays, some manufacturers have begun to build an ecosystem of "hardware + algorithms".

At the WRC, ZKSI showcased multiple intelligent dexterous hands and embodied intelligent machines, demonstrating a path: by combining the physical capabilities of robotic arms with large models and multimodal perception algorithms, robots can dynamically adjust grasping strategies according to different scenarios, allowing the same set of "arms + hands" to cover more application scenarios and reducing integration and on-site debugging costs.

The "Dexterous Hand + Data + Scenario" open laboratory jointly created by Aoyi Technology, AIO Intelligence, and NVIDIA made its debut at the WRC. Based on the NVIDIA VSS multimodal vision large model, Aoyi Technology's dexterous hand demonstrated real-time interactions for complex grasping, precision assembly, and rehabilitation assistance at the scene.

In addition, it is also evident that dexterous hands are moving towards modularization and standardization.

Manufacturers are striving to make "hands" pluggable and reusable modules, facilitating quick replacement and integration on robotic arms or complete machines of different brands, thereby shortening the time to market and engineering costs.

Robot Eyes: "Eyes, Brain, Hands" Entering a Higher Dimension of Collaboration

Last year at the WRC, Ma Yang, CEO of Tashan Technology, stated that robots performing complex actions require a unified body to complete the fusion of vision and touch.

This view has become a reality at this year's conference, with multi-sensor fusion evolving from a technical ideal to a core product architecture.

The robot's "eyes" are forming more efficient collaboration with the "brain" and "hands".

In the past, the visual functions of humanoid robots mostly stayed at the level of "showing off skills" or conceptual demonstrations. However, this year, the "productive attributes" of visual technology are more apparent, such as multiple robots collaborating to complete practical tasks like material sorting and cross-regional delivery.

Robots are no longer just "able to see" but are now "able to understand and use vision" in real-world scenarios.

A single sensor can no longer meet the needs of complex scenarios, and the spatial-temporal fusion of multi-source data has become the underlying logic of the vision system.

The Active Camera platform launched by RoboSense adopts an integrated multi-sensor design: a single piece of hardware provides color, depth, and motion-status information and fuses the three in space and time, breaking through the technical bottlenecks of traditional 3D vision, namely "inability to see clearly, inaccurate viewing, and slow response".
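The temporal half of such fusion usually starts with aligning streams that tick at different rates. The sketch below pairs each color frame with the nearest depth and motion samples and drops frames whose partners are too far away in time. It is a generic illustration, not RoboSense's method: the function names, the millisecond timestamps, and the 20 ms skew limit are assumptions, and spatial calibration between sensors is left out entirely.

```python
from bisect import bisect_left
from typing import List, Tuple

Sample = Tuple[int, str]  # (timestamp in ms, payload)


def nearest_index(timestamps: List[int], t: int) -> int:
    """Index of the timestamp closest to t in a sorted, non-empty list."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    before, after = timestamps[i - 1], timestamps[i]
    return i if (after - t) < (t - before) else i - 1


def fuse(color: List[Sample], depth: List[Sample], motion: List[Sample],
         max_skew_ms: int = 20) -> List[Tuple[int, str, str, str]]:
    """Pair each color frame with the nearest depth and motion samples in time."""
    if not depth or not motion:
        return []
    depth_ts = [t for t, _ in depth]
    motion_ts = [t for t, _ in motion]
    fused = []
    for t, c in color:
        d_t, d = depth[nearest_index(depth_ts, t)]
        m_t, m = motion[nearest_index(motion_ts, t)]
        # Keep the frame only if both partners are close enough in time.
        if abs(d_t - t) <= max_skew_ms and abs(m_t - t) <= max_skew_ms:
            fused.append((t, c, d, m))
    return fused


if __name__ == "__main__":
    color = [(0, "c0"), (100, "c1")]
    depth = [(10, "d0"), (110, "d1")]
    motion = [(0, "m0"), (50, "m1"), (200, "m2")]
    print(fuse(color, depth, motion))
```

Integrating the sensors in one device, as the platform described above does, makes this step easier because the streams can share a clock instead of being matched after the fact.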

Orbbec's 3D LiDAR Pulsar ME450 supports the free switching of three scanning modes and is the industry's first "one-machine multi-mode" 3D LiDAR, capable of dynamic switching to adapt to scenarios such as obstacle avoidance and surveying, suitable for complex scenarios such as logistics and outdoor operations.

The essence of this fusion is to upgrade robots from "seeing objects" to "understanding the environment".

At the hardware level, visual devices are developing towards "smaller size and stronger performance".

Hesai Technology's JT series LiDAR is the size of a billiard ball, supporting the industry's widest 360°×189° super-hemispherical field of view and 256-line resolution, with a delivery volume of 100,000 units within five months of its launch.

Its pure solid-state LiDAR, the FTX, is 66% smaller in volume than the previous generation and has a point rate of up to 492,000 points per second. It can be discreetly embedded in service robot bodies to achieve a "non-intrusive" perception upgrade.

In addition, unlike last year's WRC discussion of "perception separation", where vision is processed at the brain end and touch at the edge end, this year shows a clear trend of "end-edge-cloud collaboration". Hardware manufacturers are no longer just selling sensors but building full-stack development ecosystems.

For example, RoboSense's AI-Ready ecosystem provides open-source tools, pre-trained algorithm libraries, and datasets, attracting scenario and algorithm developers to promote product landing applications and, in turn, drive hardware iterations.

Meanwhile, the continuous development of robot vision has made robustness a prerequisite for product landing.

The number of humanoid and companion robots exhibited this year has increased significantly, especially with more frequent demonstrations in scenarios such as catering, retail, and households.

Compared to last year's relatively static displays, this year's robots can maintain stable operations in complex environments such as exhibition halls. For example, Vita Power's Vbot moves "freely" in the venue, and Tiangong robots autonomously "stroll" to their workstations.

This requires the perception system to undergo more stringent engineering verification, which in turn forces manufacturers to keep optimizing algorithmic noise reduction, anti-interference design, and software-hardware collaboration.

This year's WRC is like a prism, reflecting the core trajectory of robot development: The market is no longer satisfied with single-point showmanship, but is looking for "truly useful, implementable" system-level evolution.

Whether it is the dexterous evolution of hands, the perceptual leap of vision, the intelligent empowerment of the brain, or the stable support of the body, the ultimate key lies in the synergy of technologies.

The brain's decision-making requires the eyes to provide accurate environmental perception;

The observation of the eyes requires hands and the body to perform verification;

The dexterous operation of the hands depends on the stable support of the body and the fine control of the brain;

The motion efficiency of the body cannot be separated from the overall planning of the brain and the real-time feedback of the eyes.

Wang Xingxing predicts that in the coming years, the annual shipment volume of humanoid robots across the industry will double with certainty. If there are greater technological breakthroughs, it is even possible that hundreds of thousands or even millions of units will be shipped in a sudden surge within the next 2-3 years.

As technology shifts from single-point explosions to multi-dimensional collaboration, robots will eventually tear off the "Demo" label and enter various industries as true intelligent agents.

After all, the ultimate criterion for judging a robot has never been "how many circles it can turn" or "how many objects it can recognize", but whether it can truly "meet" human needs.