GPT-5 Arrives: Unified Naming, Improved Capabilities, but Breakthroughs Still Await

08/14 2025

After more than two years of anticipation, numerous teasers, and extensive marketing efforts, GPT-5 has finally debuted!

This time, OpenAI has heeded the feedback. Previous model names and suffixes, such as o1, o3, o4, mini, nano, and pro, were more perplexing than a bubble tea menu. Now they are all streamlined under the GPT-5 umbrella, providing a cleaner and more consistent experience.

However, the kind of groundbreaking technological leap that many were eagerly anticipating, akin to ChatGPT or Sora, did not materialize...

This was precisely my concern.

1. Introducing GPT-5

GPT-5 is not a single model but a "hybrid system" that integrates multiple models. It comprises three components:

Daily Response Model: Swift, precise, and economical, addressing most queries.

Deep Reasoning Model: Tailored for solving intricate problems.

Real-time Router: Automatically selects the appropriate model based on the question type, complexity, and tool requirements.

For instance, if you request, "Please reason through this question carefully," it will switch to the reasoning model. Once the usage quota is exhausted, a mini version takes over.

This routing is GPT-5's real strength: it shows mature tool and model invocation capabilities.
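The routing behavior described above can be pictured with a toy sketch. This is not OpenAI's router, whose internals are not public; the model labels, the cue phrases, the word-count threshold, and the `Request` fields are all hypothetical and chosen only to illustrate the idea of picking a model from intent, complexity, tool needs, and remaining quota.

```python
from dataclasses import dataclass

# Hypothetical labels for illustration; these are not official identifiers.
FAST_MODEL = "fast-response"
REASONING_MODEL = "deep-reasoning"
FALLBACK_MODEL = "mini-fallback"

# Assumed cue phrases that signal an explicit request for careful reasoning.
REASONING_CUES = ("reason through", "think carefully", "step by step")


@dataclass
class Request:
    prompt: str
    needs_tools: bool = False
    quota_left: int = 1  # remaining requests on the main models (assumed unit)


def route(req: Request) -> str:
    """Pick a model from quota, explicit intent, tool needs, and prompt size."""
    # Once the quota is used up, everything goes to the smaller fallback.
    if req.quota_left <= 0:
        return FALLBACK_MODEL
    text = req.prompt.lower()
    wants_reasoning = any(cue in text for cue in REASONING_CUES)
    # Crude complexity proxy (an assumption): very long prompts need reasoning.
    is_complex = len(text.split()) > 200
    if wants_reasoning or req.needs_tools or is_complex:
        return REASONING_MODEL
    return FAST_MODEL


print(route(Request("Please reason through this question carefully")))
print(route(Request("What is the capital of France?")))
print(route(Request("Hello", quota_left=0)))
```

A real router would presumably be a learned component rather than a handful of rules; the sketch only shows where the three inputs named above enter the decision.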

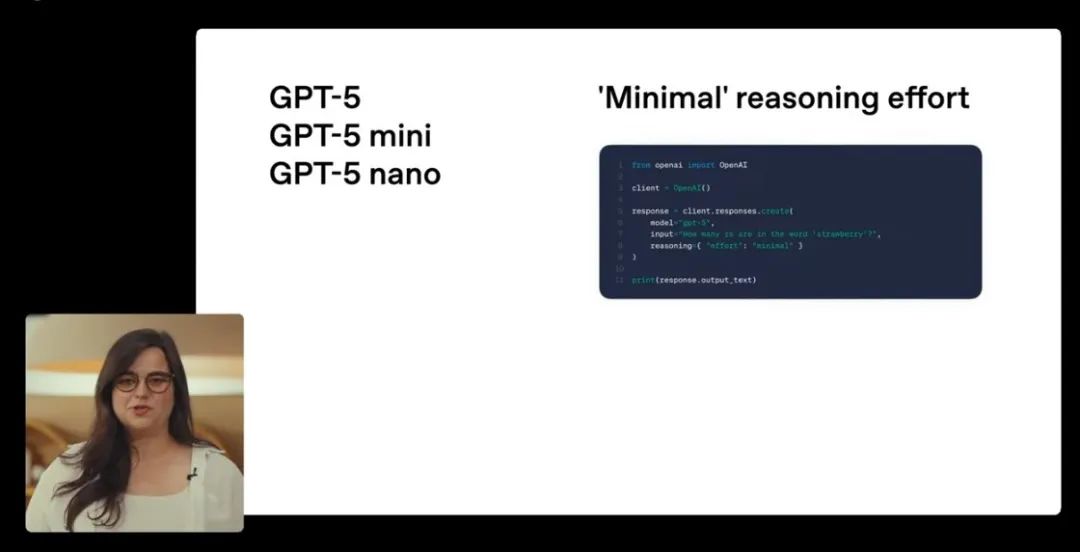

The API is similarly straightforward, divided into three models:

gpt-5 (main)

gpt-5-mini (lightweight)

gpt-5-nano (extremely lightweight)

Each model offers four reasoning effort levels. A notable addition is the "minimal" level, which spends few or no reasoning tokens before answering and therefore responds faster.
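As a rough illustration of how the three models and four reasoning levels combine, the sketch below builds a request payload and validates it locally. The level names (`minimal`, `low`, `medium`, `high`) and the `reasoning.effort` field reflect my understanding of OpenAI's Responses API and are not stated in this article, so check them against the current API reference. `build_request` is a hypothetical helper, and no network call is made.

```python
MODELS = ("gpt-5", "gpt-5-mini", "gpt-5-nano")
# Assumed level names; the article only says there are four levels.
EFFORT_LEVELS = ("minimal", "low", "medium", "high")


def build_request(model: str, prompt: str, effort: str = "medium") -> dict:
    """Return a request payload; raise ValueError on an unknown model or level."""
    if model not in MODELS:
        raise ValueError(f"unknown model: {model!r}")
    if effort not in EFFORT_LEVELS:
        raise ValueError(f"unknown reasoning effort: {effort!r}")
    # Field names are an assumption about the request shape, not a guarantee.
    return {"model": model, "input": prompt, "reasoning": {"effort": effort}}


# A cheap, fast request: lightweight model plus the minimal reasoning level.
payload = build_request("gpt-5-mini", "Summarize this paragraph.", effort="minimal")
print(payload)
```

With the official Python SDK, a payload like this would typically be passed to the client as keyword arguments; that step is left out here because it requires an API key.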

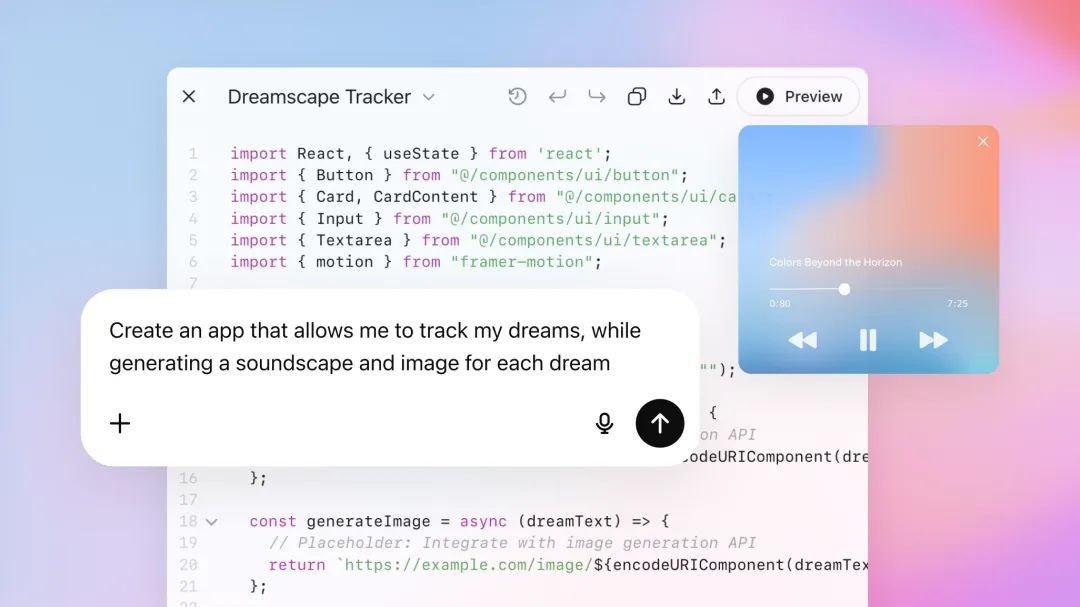

Furthermore, GPT-5 supports ultra-long contexts and dual-mode input:

Input limit: 272,000 tokens

Output limit (including reasoning tokens): 128,000 tokens

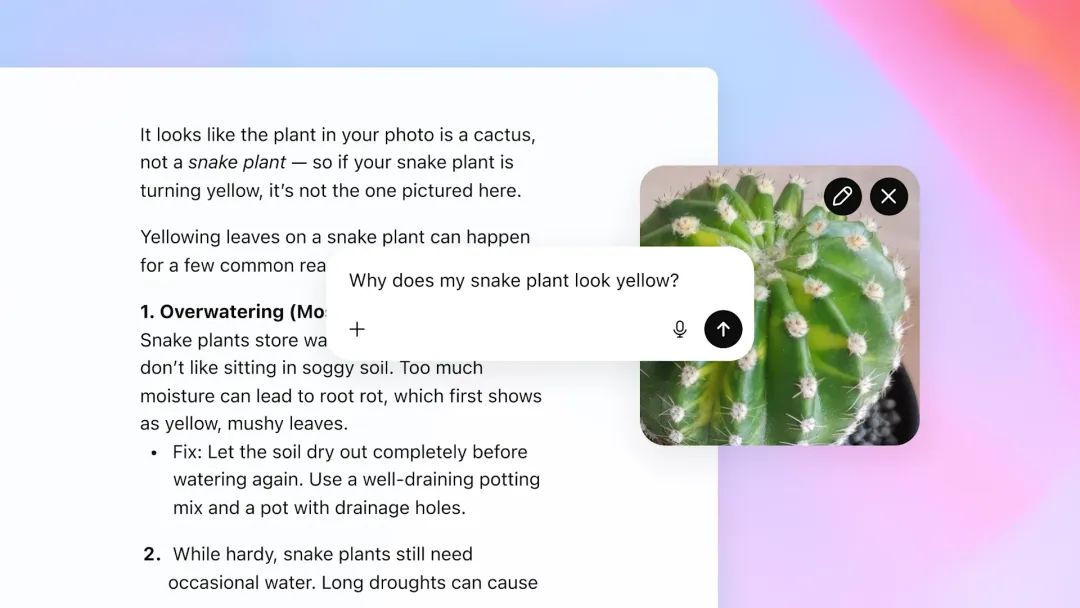

Supports "text + image" input, with text-only output

This means it can process hundreds of pages of documents at once, roughly the length of a full book, and can interpret images while providing detailed text responses.
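To make the limits above concrete, here is a small budget check. The two limits come from the figures quoted in this section; the figure of 500 tokens per page is purely my assumption for a back-of-the-envelope estimate, and real documents vary widely.

```python
MAX_INPUT_TOKENS = 272_000
MAX_OUTPUT_TOKENS = 128_000  # includes reasoning tokens


def fits(input_tokens: int, max_output_tokens: int) -> bool:
    """Check a request against both the input and the output limit."""
    return (0 <= input_tokens <= MAX_INPUT_TOKENS
            and 0 <= max_output_tokens <= MAX_OUTPUT_TOKENS)


def estimate_pages(tokens: int, tokens_per_page: int = 500) -> int:
    """Rough page count; tokens_per_page is an assumed average, not a spec."""
    return tokens // tokens_per_page


print(fits(200_000, 100_000))            # within both limits
print(fits(300_000, 1_000))              # input exceeds the limit
print(estimate_pages(MAX_INPUT_TOKENS))  # 544 pages under the assumption
```

Under that assumed density, the input window holds a little over 500 pages, which is consistent with the "hundreds of pages" claim.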

2. GPT-5 is Indeed Smarter

OpenAI claims GPT-5's reasoning ability has improved, making it more honest with fewer hallucinations, outperforming predecessors in writing, programming, healthcare, and other fields. OpenAI confidently asserts:

"GPT-3 was like talking to a high school student. GPT-4, perhaps like talking to a college student. But GPT-5 feels like conversing with an expert, a Ph.D.-level expert, in any field you need, on demand."

While this sounds promising, early testers' evaluations suggest it's stable and capable but not a qualitative leap.

In writing in particular, some find GPT-4.5's style closer to human prose, while GPT-5 still occasionally produces clichéd, formulaic text.

In programming, however, GPT-5 has earned an impressive reputation.

From generating entire websites to resolving complex dependency conflicts, it is more intuitive than previous models and can invoke multiple tools in parallel, working much like a human programmer.

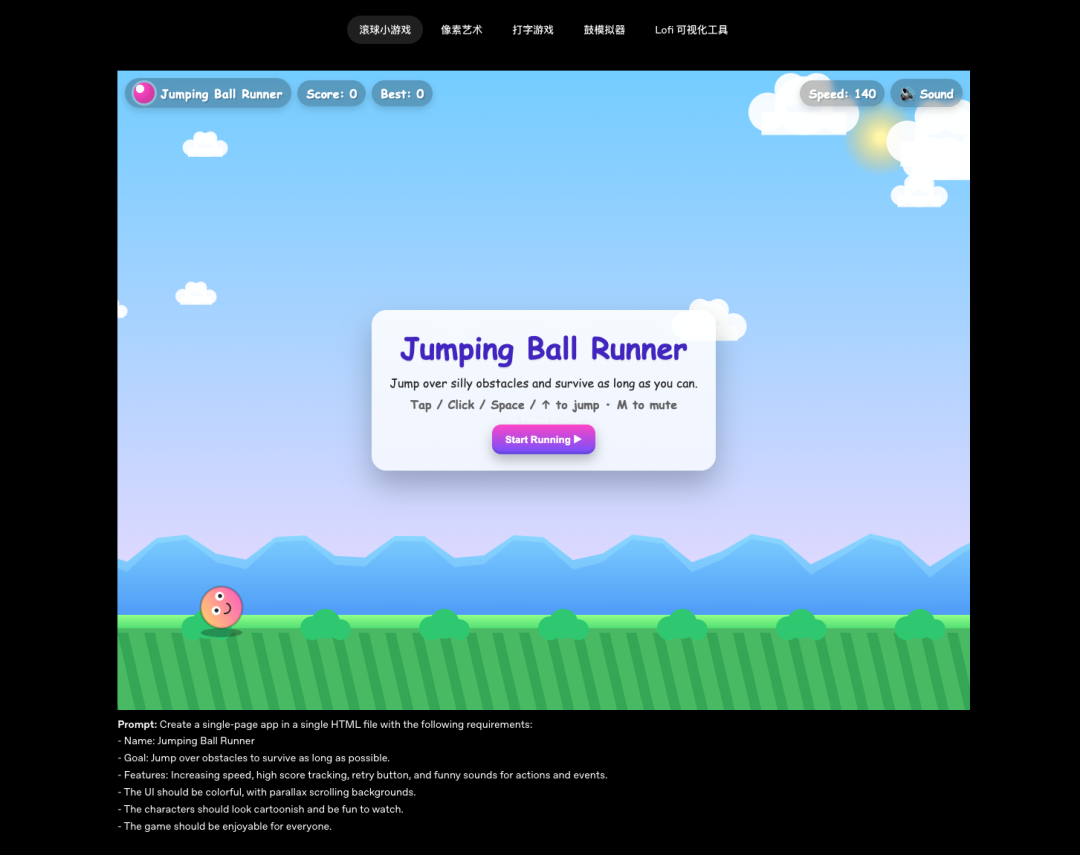

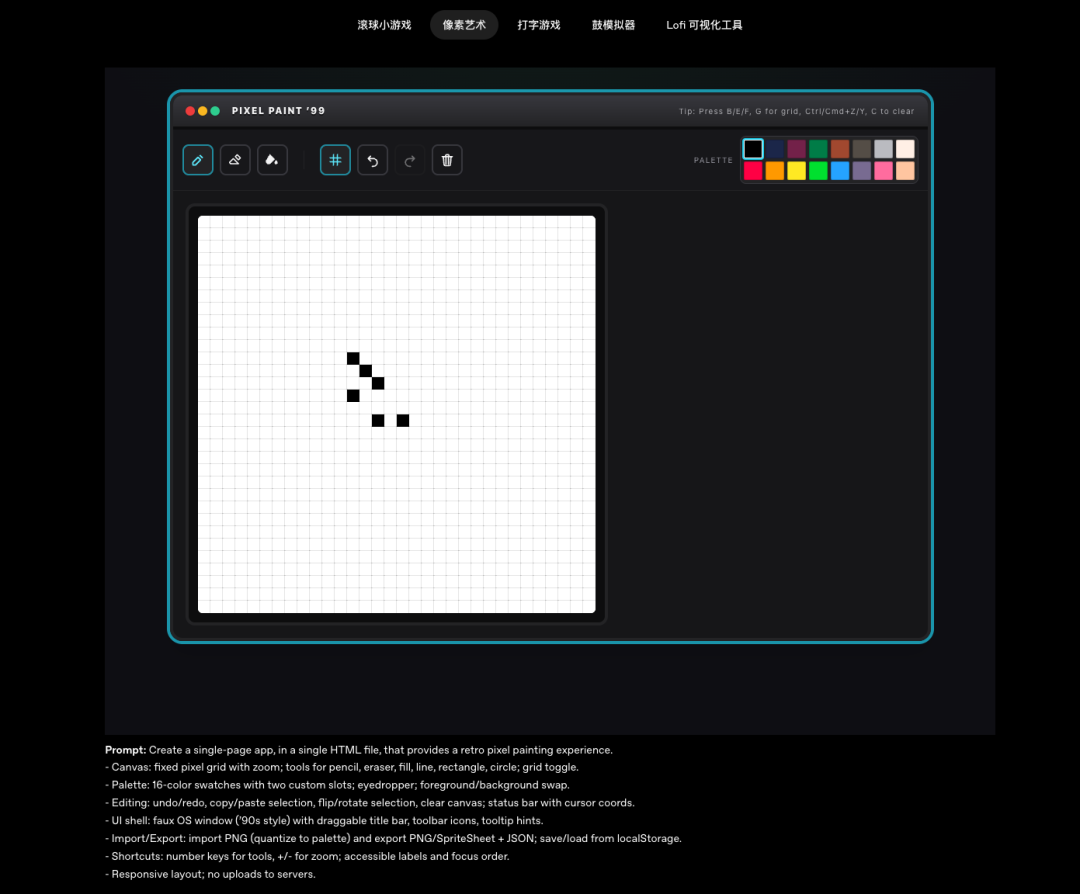

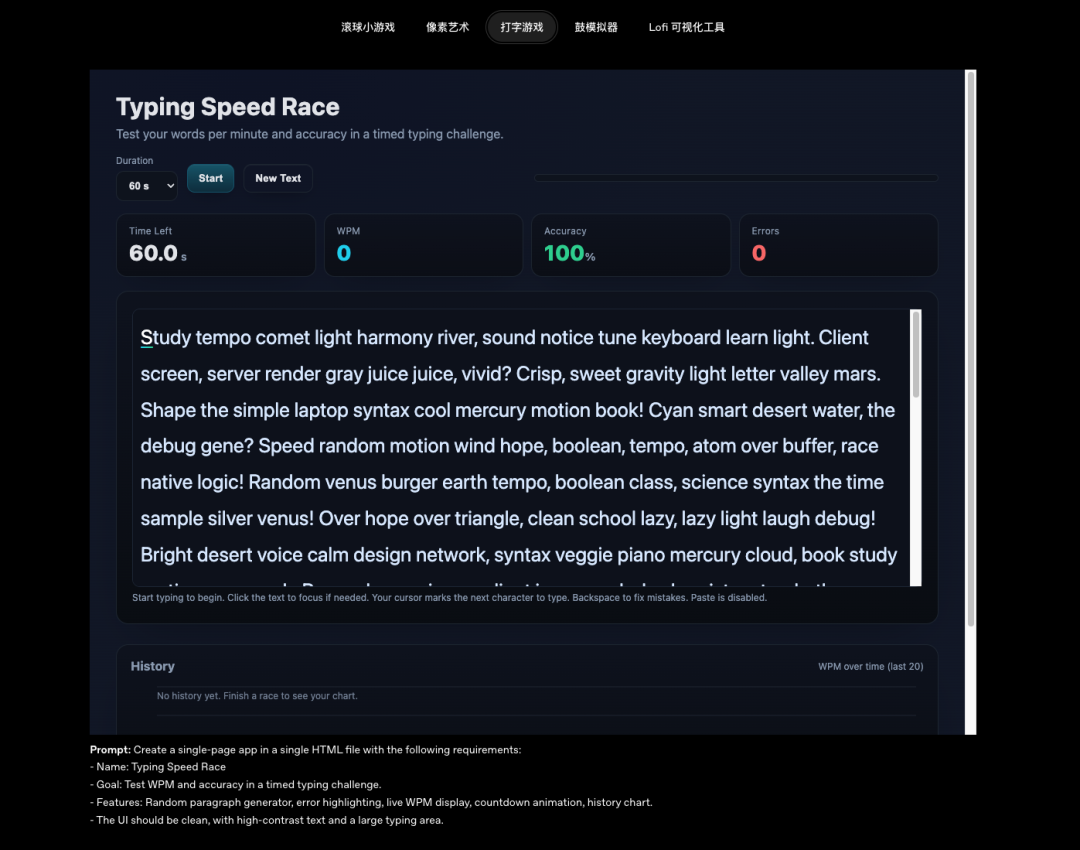

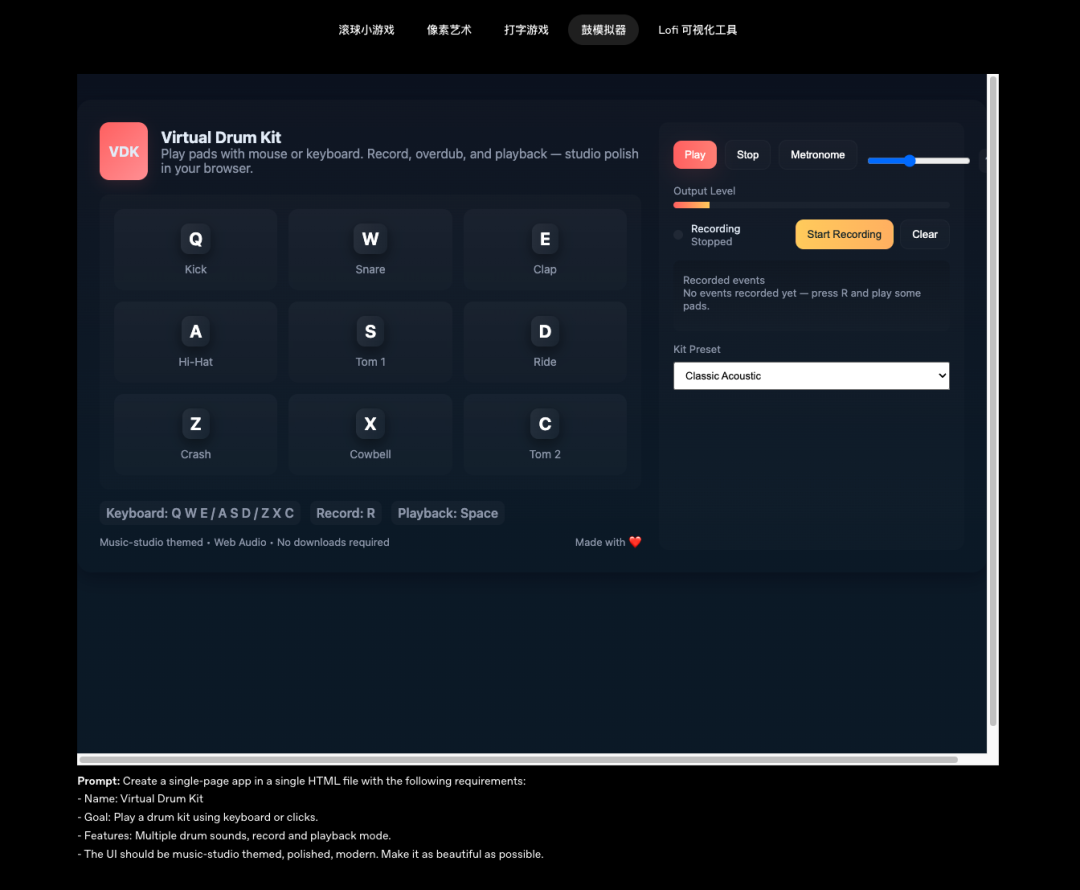

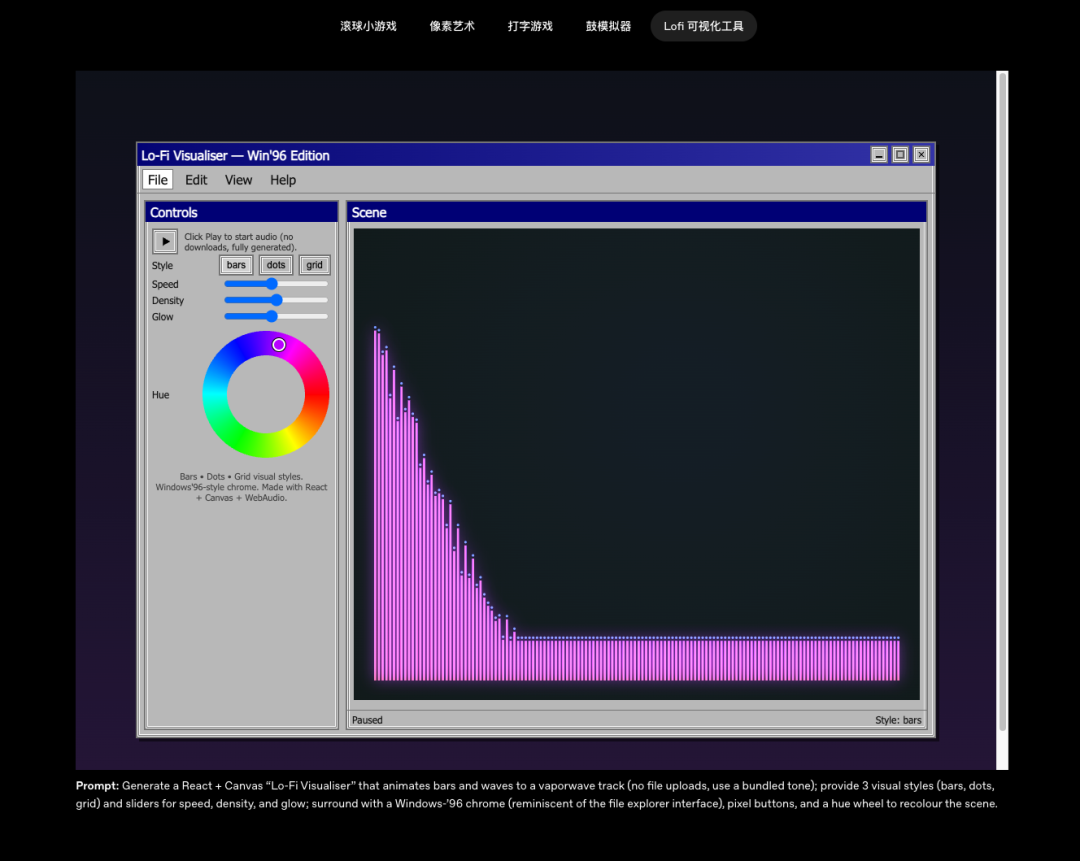

The OpenAI website even showcases a GPT-5-generated game, playable directly, with the corresponding prompt.

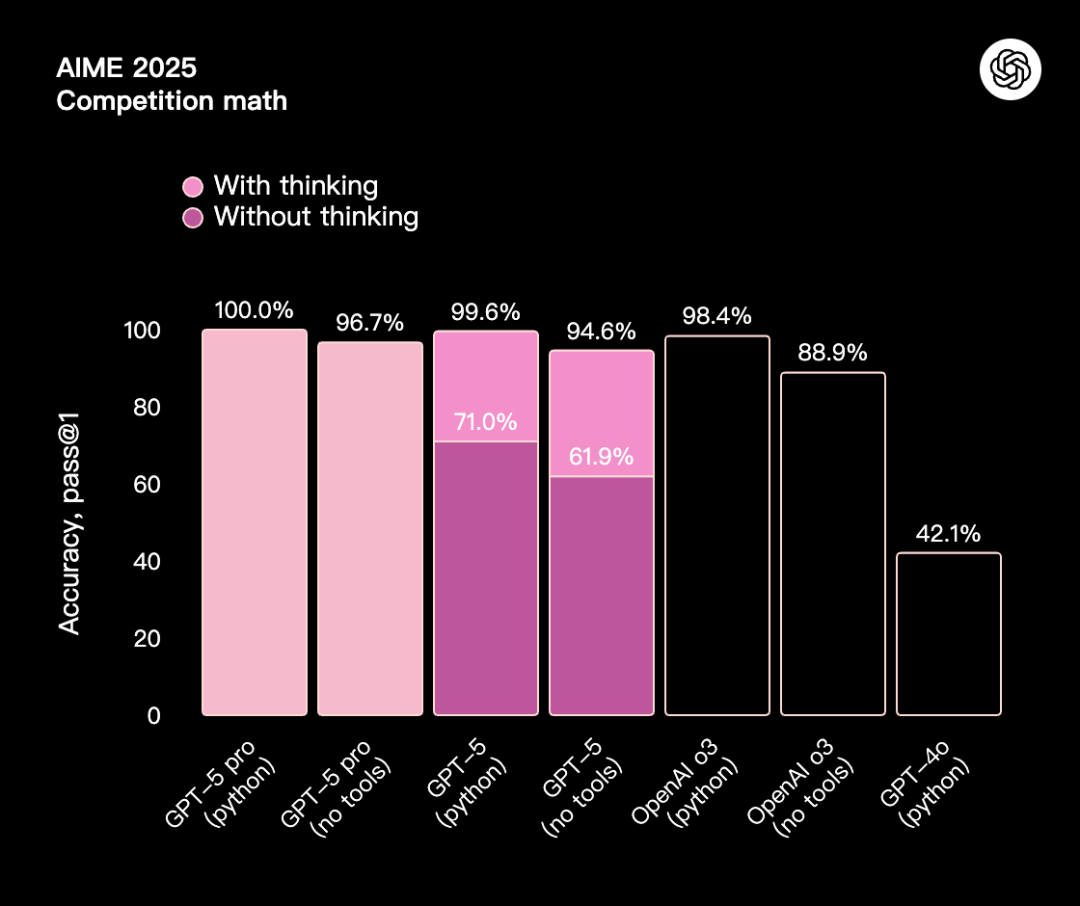

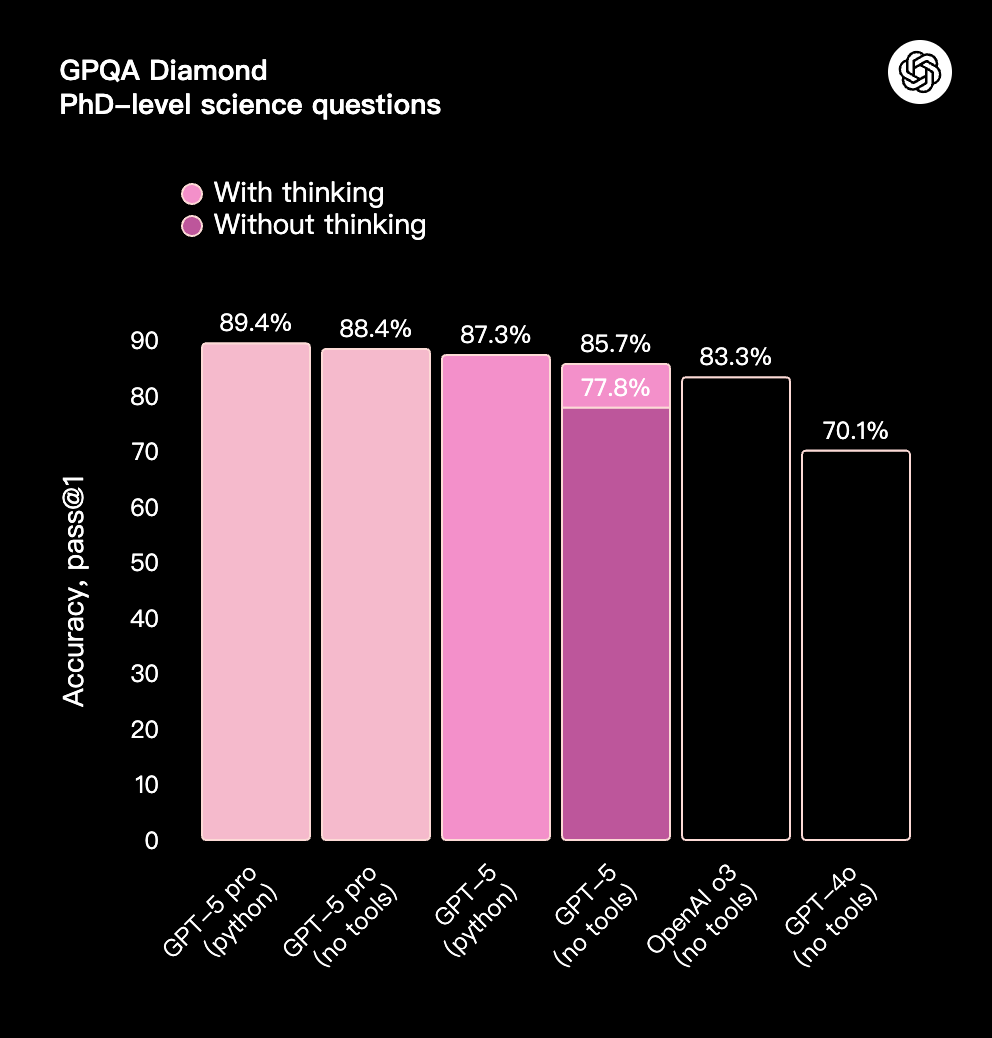

Let's review GPT-5's performance benchmarks:

Mathematics: AIME 2025 score of 94.6% (no tool assistance).

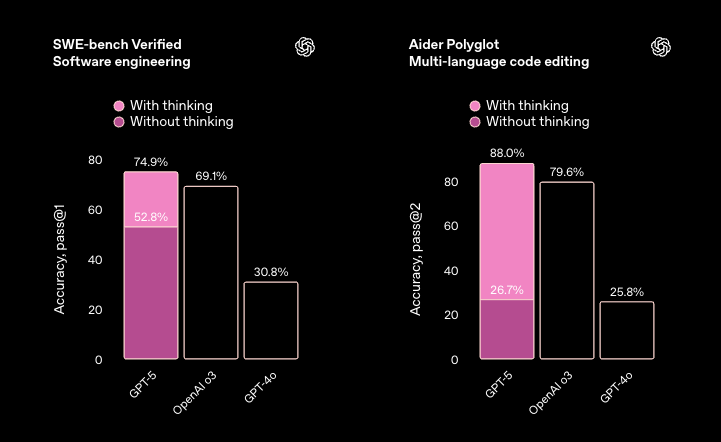

Programming: SWE-bench Verified score of 74.9%, Aider Polyglot score of 88%.

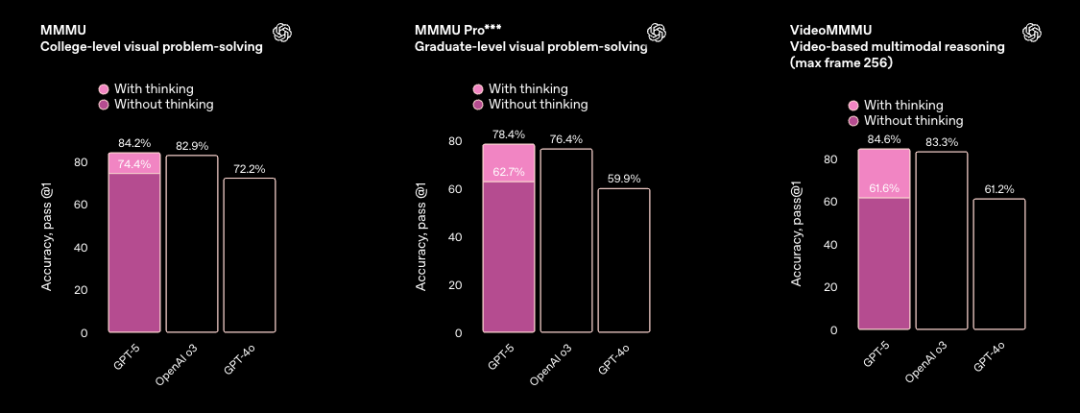

Multimodal Understanding: Achieved 84.2% on MMMU.

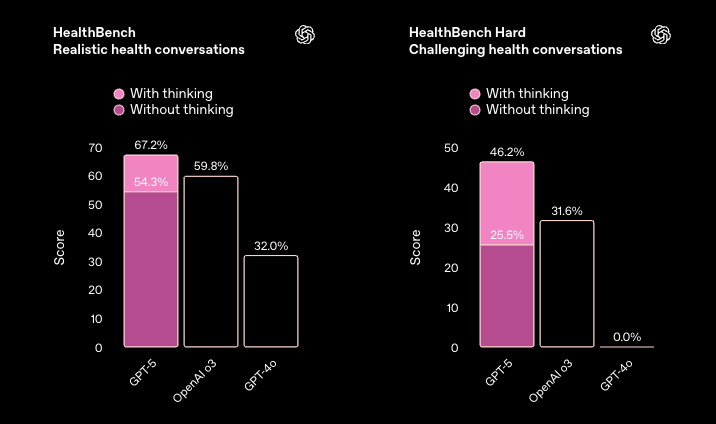

Healthcare: HealthBench Hard score of 46.2%.

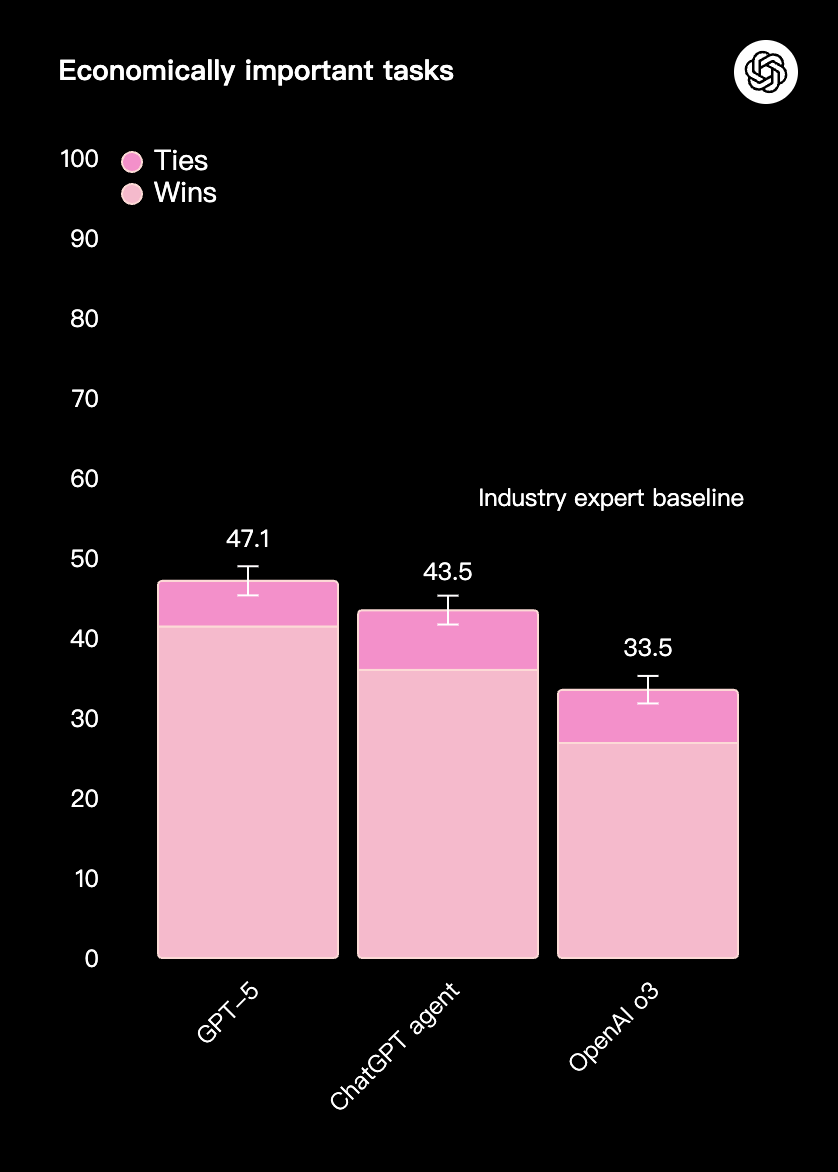

Economically Important Tasks: Outperformed o3 and ChatGPT Agent in tasks spanning over 40 professions, including law, logistics, sales, and engineering.

Reasoning Ability: GPT-5 pro set a new record on GPQA (graduate-level scientific questions), achieving 88.4% without tools.

3. Prices Slashed

This time, the pricing is aggressively competitive:

GPT-5: Input $1.25/million tokens; Output $10/million tokens

GPT-5 Mini: Input $0.25/million tokens; Output $2/million tokens

GPT-5 Nano: Input $0.05/million tokens; Output $0.4/million tokens

GPT-5's input price is half that of GPT-4o, and with a 90% discount on cached input (when the same input is reused shortly afterward), the cost of running AI products can drop significantly.
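The arithmetic behind these prices is easy to sketch. The rates and the 90% cache discount below are taken from the figures quoted above; `cost_usd` and its parameters are hypothetical names, and the assumption that the discount applies only to the cached portion of the input is mine.

```python
# USD per million tokens: (input, output), as quoted in this section.
PRICES = {
    "gpt-5": (1.25, 10.00),
    "gpt-5-mini": (0.25, 2.00),
    "gpt-5-nano": (0.05, 0.40),
}
CACHE_DISCOUNT = 0.90  # assumed to apply to cached input tokens only


def cost_usd(model: str, input_tokens: int, output_tokens: int,
             cached_input_tokens: int = 0) -> float:
    """Estimate the cost of one request in US dollars."""
    if not 0 <= cached_input_tokens <= input_tokens:
        raise ValueError("cached_input_tokens must be between 0 and input_tokens")
    in_price, out_price = PRICES[model]
    fresh_tokens = input_tokens - cached_input_tokens
    cached_price = in_price * (1 - CACHE_DISCOUNT)
    total = (fresh_tokens * in_price
             + cached_input_tokens * cached_price
             + output_tokens * out_price)
    return total / 1_000_000


# 100,000 input tokens and 10,000 output tokens on gpt-5.
print(round(cost_usd("gpt-5", 100_000, 10_000), 4))          # 0.225
# Same request with 80,000 of the input tokens served from cache.
print(round(cost_usd("gpt-5", 100_000, 10_000, 80_000), 4))  # 0.135
```

In this example caching cuts the bill from about $0.225 to about $0.135. Output tokens dominate once most of the input is cached, so the saving is largest for workloads with long, repeated prompts and short answers.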

Compared to competitors like Claude, Gemini, and Grok, the price advantage is evident. OpenAI is clearly aiming to dominate the market.

4. Safer and More Reliable, from "Refusal" to "Safe Answer"

Previously, AI either answered directly or simply said, "Sorry, I can't answer that."

GPT-5 employs a new safe-completions strategy, providing high-level, useful answers within safety policies, rather than refusing.

Other improvements include:

Reduced hallucination rates: Especially fewer factual errors when the model has no web access.

Decreased sycophancy: Reward mechanisms discourage blindly catering to the user.

Acknowledging limitations: Clearly stating when tasks cannot be completed, rather than pretending otherwise.

In external red-team prompt injection tests, GPT-5 had an attack success rate of 56.8% (k=10). That is significantly better than other models, but it also shows the issue remains unresolved: more than half of the attacks still breached its defenses.
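One way to read the k=10 figure is as the chance that at least one of ten attempts succeeds. That reading, and the assumption that attempts are independent with the same success probability, are mine and not confirmed by the source, so treat the sketch below as intuition only.

```python
def asr_at_k(p: float, k: int) -> float:
    """Probability that at least one of k independent attempts succeeds."""
    return 1 - (1 - p) ** k


def implied_single_attempt_rate(asr_k: float, k: int) -> float:
    """Invert asr_at_k: per-attempt rate that would yield asr_k after k tries."""
    return 1 - (1 - asr_k) ** (1 / k)


p = implied_single_attempt_rate(0.568, 10)
print(f"implied per-attempt success rate: {p:.3f}")
print(f"check, success within 10 attempts: {asr_at_k(p, 10):.3f}")
```

Under these assumptions, a 56.8% success rate over ten tries corresponds to roughly an 8% chance per attempt: low for any single try, but an attacker who can retry freely still gets through more often than not.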

5. GPT-5's Release Timing

The timing of this release is very "OpenAI":

Google just released Gemini Pro 1.5, and OpenAI immediately unveiled GPT-5 to steal the spotlight.

Covering users from free to $200/month in one fell swoop.

Simultaneously integrating into Microsoft's entire suite (Copilot, Azure AI).

This is not just about technological iteration and price wars but a battle of ecosystems: closing the loop for all users and developers within the GPT-5 system, leaving no room for competitors.

6. No Breakthrough Technology, AI Entering a Bottleneck Period?

Despite GPT-5's grand launch, price cuts, and impressive performance data, a sobering fact emerges upon closer inspection:

There was no real "qualitative" technological breakthrough at the core.

Its enhanced reasoning ability, larger context, and more flexible tool usage are essentially stacking and optimization within the existing LLM architecture.

This signals a potential industry-wide concern:

Are we nearing the "ceiling" of this generation of AI technology?

Consider that most of the recent breakthroughs in large AI models occurred in 2022-2023: ChatGPT emerged, GPT-4 stunned, Stable Diffusion brought image generation to the masses, and Midjourney's art style was all but deified.

But in 2024-2025, the "wow factor" of new products has diminished, focusing more on price reductions, integrations, and ecosystems, rather than boasting "completely new intelligent paradigms."

Moreover, large-model training costs remain prohibitively high for many practical applications, and performance improvements increasingly rely on incremental tuning, such as data cleaning and reasoning strategy optimization, rather than foundational revolutions.

This may imply:

The next qualitative leap may require a breakthrough in an entirely new architecture, rather than continually refining the Transformer.

Current LLMs may be in a short-term bottleneck period, and it's unclear if this will last one year or five.

In other words, GPT-5 is an excellent iteration, but its significance is closer to "Apple pushing the iPhone 15 to the extreme" than to "Jobs first unveiling the iPhone."

Are you surprised or disappointed by GPT-5?

Share your thoughts in the comments section!