Maintaining GPT-5's Throne: OpenAI's Strategy to Prevail with Price

08/14 2025

From OpenAI CEO Sam Altman's first mention of GPT-5 at the end of 2023 to its official release today, a year and a half has passed.

People still stay up late to watch OpenAI's launch events, but the overnight discussions are now tinged with skepticism rather than awe.

Fortunately, on model performance at least, OpenAI has managed to restore its recently slipping reputation. The GPT-5 shown at the launch event achieves state-of-the-art (SOTA) results on multiple fronts and boldly claims the title of "world's strongest programming model," challenging Claude's lead.

Still, one impression has stuck: OpenAI's lead over its peers is no longer the cliff-like gap it enjoyed in the GPT-3 and GPT-4 era.

Competitors are watching closely, too. Not only did Anthropic officially announce a major version update this week, but Elon Musk spent an entire evening insisting that his model, Grok 4, beats OpenAI's new model on some tests.

How long can OpenAI hold its new "throne"? Altman isn't saying; he is focused instead on a story about deploying large models cheaply.

Aiming for the Strongest Code Model: GPT-5 Comprehensive Upgrade

New benchmark highs, strong cost-effectiveness, targeted reductions in hallucination, and ultra-long context handling: every one of these upgrades is designed to make GPT-5 a model better suited to practical use.

First, architecturally, GPT-5 is a unified system made up of three parts: a base model, GPT-5 thinking (a model with deep reasoning capabilities), and a real-time router.

The router's benefit is that it can quickly decide which model to use based on the type of conversation, the complexity of the question, whether tools are needed, and the user's explicit intent. For example, when a prompt includes a phrase like "help me think deeply about this," the router invokes the deep reasoning model.
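
To make the routing idea concrete, here is a minimal Python sketch of a rule-based router. It is only an illustration: OpenAI has not published its router's logic and says the real router is trained on usage signals rather than hand-written rules. The cue phrases, the 200-word complexity threshold, and the `Request` and `route` names are all hypothetical, and only explicit intent, tool needs, and a crude complexity proxy are modeled.

```python
from dataclasses import dataclass

# Hypothetical cue phrases signalling that the user wants deeper reasoning.
DEEP_CUES = ("think deeply", "think hard", "step by step")
# Hypothetical complexity threshold, measured crudely in words.
MAX_WORDS_FOR_BASE = 200


@dataclass
class Request:
    prompt: str
    needs_tools: bool = False


def route(req: Request) -> str:
    """Return which hypothetical backend should answer: "base" or "thinking"."""
    text = req.prompt.lower()
    # 1. Explicit user intent wins: the prompt asks for deeper reasoning.
    if any(cue in text for cue in DEEP_CUES):
        return "thinking"
    # 2. Tool use or a long prompt is treated as a sign of a harder task.
    if req.needs_tools or len(text.split()) > MAX_WORDS_FOR_BASE:
        return "thinking"
    # 3. Everything else goes to the fast base model.
    return "base"


print(route(Request("Help me think deeply about this proof")))
print(route(Request("What is the capital of France?")))
```

Here the first call returns `"thinking"` and the second `"base"`. The checks run in order so that an explicit request for deep reasoning always overrides the cheaper heuristics.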

Altman, who hyped up GPT-5 the day before the launch event, hinted at its performance advantages with an image on X. At the launch event, he also emphasized his confidence in GPT-5.

"If chatting with GPT-3 was like talking to a high school student and GPT-4 like talking to a college student, then talking to GPT-5 is like talking to a PhD-level expert," Altman said, describing the experience of using GPT-5.

On performance, GPT-5, which Altman pitches as a killer app, does achieve SOTA on several capabilities. The benchmark results show its advantages most clearly in programming, mathematics, multimodal understanding, and health.

Programming came first at the launch, and it is where OpenAI has reclaimed the lead it wanted. In its own words, "GPT-5 is the world's strongest programming model."

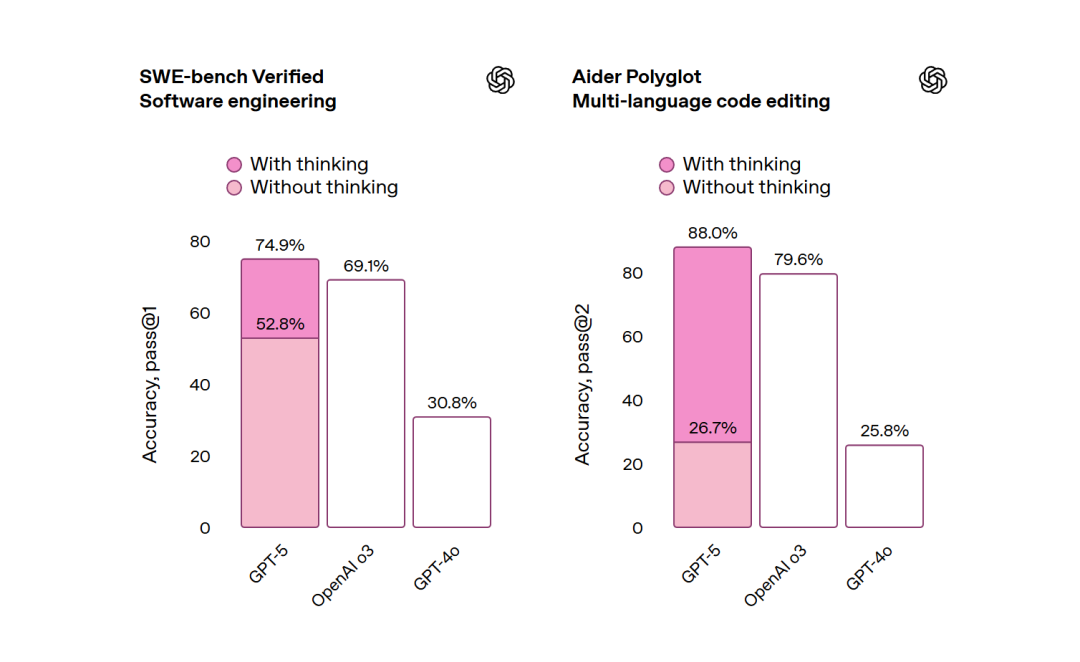

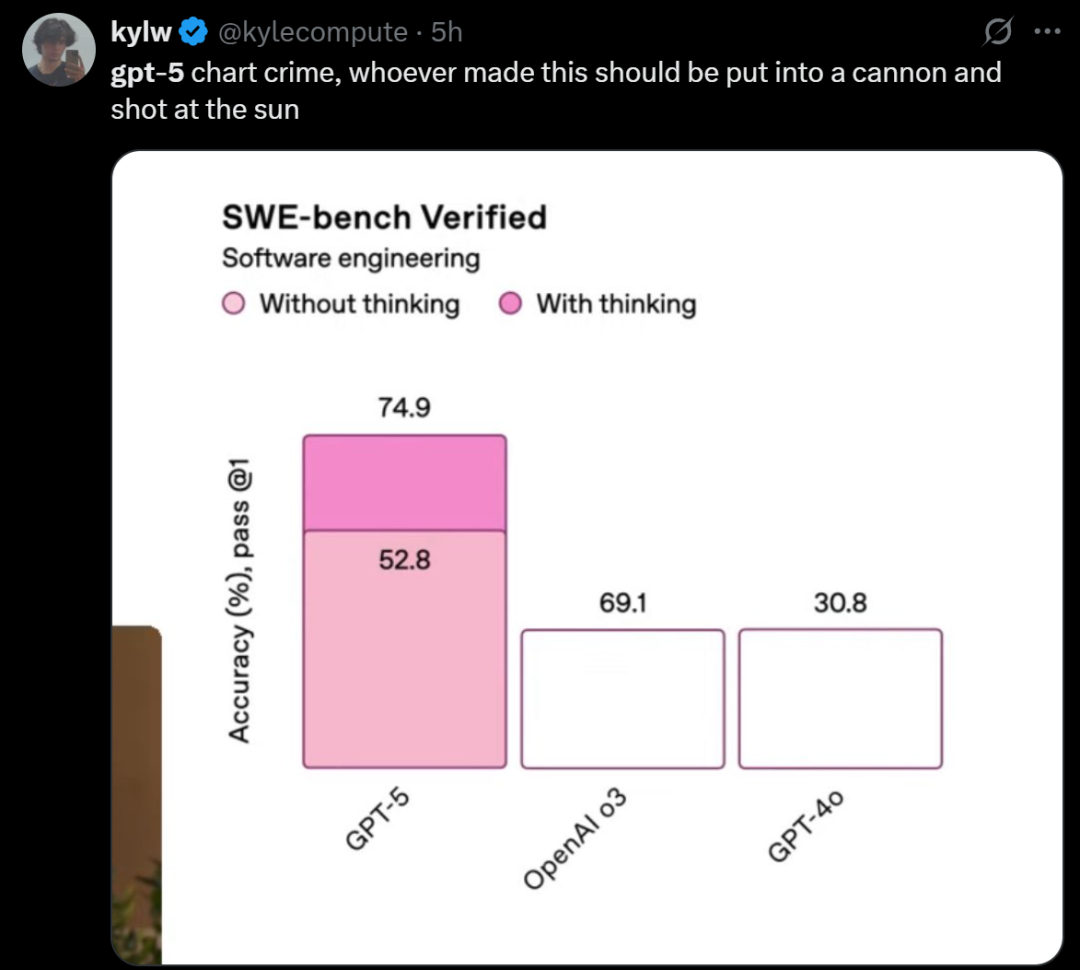

On SWE-bench Verified, which tests whether a model can resolve real GitHub issues in open-source Python repositories, GPT-5 with reasoning scored 74.9%, beating not only OpenAI's own o3 but also Anthropic's newly released Claude Opus 4.1 (74.5%). On this measure, OpenAI has taken the programming crown from the Claude series.

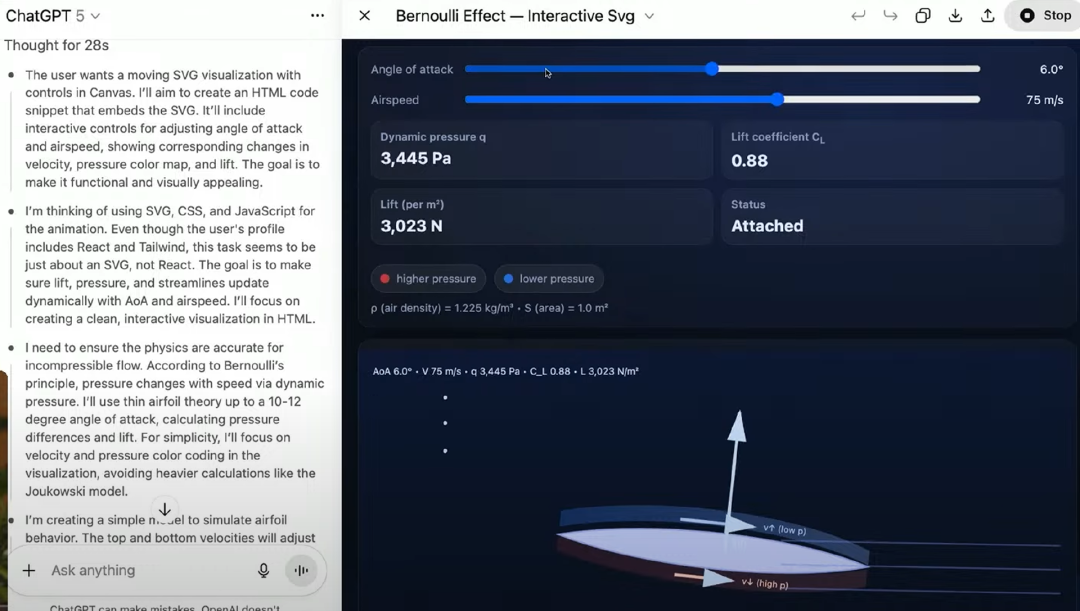

At the launch event, OpenAI demonstrated these programming capabilities with several examples. In one, GPT-5 was asked to build a web page explaining Bernoulli's principle. Within two minutes, it generated 400 lines of code, producing an interactive page with adjustable parameters.

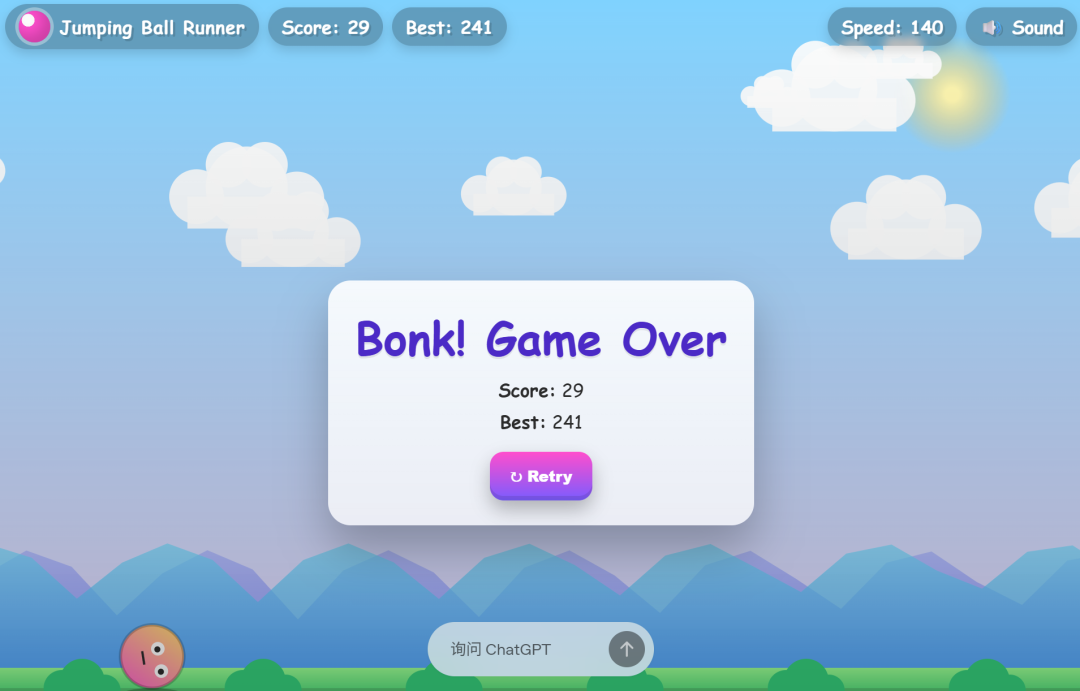

OpenAI also showed the model building web-based mini-games, such as a game where you jump to avoid obstacles, a canvas game where you can draw freely, and a visually enhanced version of Snake. Some current open-source models can build similar things, but OpenAI's versions are a step up in visual polish and completeness.

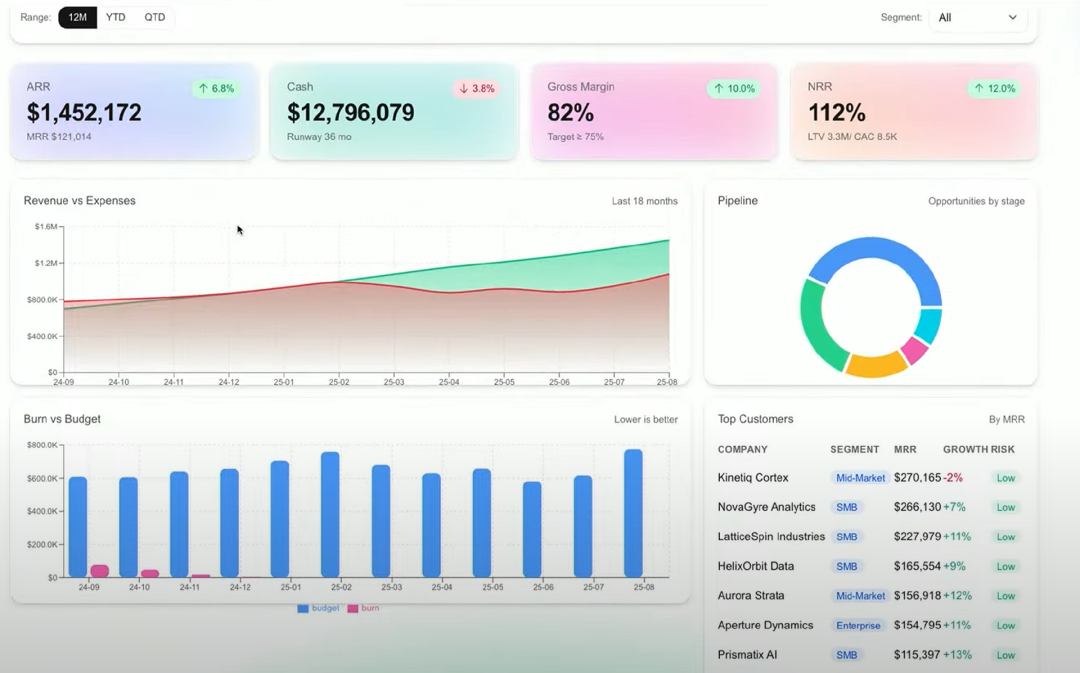

Beyond these consumer-facing examples, OpenAI also showed developers GPT-5's ability to write and deploy code for real business use. GPT-5 no longer just writes code; it now delivers results that work out of the box. In one demo, after writing and optimizing the backend code, GPT-5 built a financial information dashboard to spec, with a polished UI.

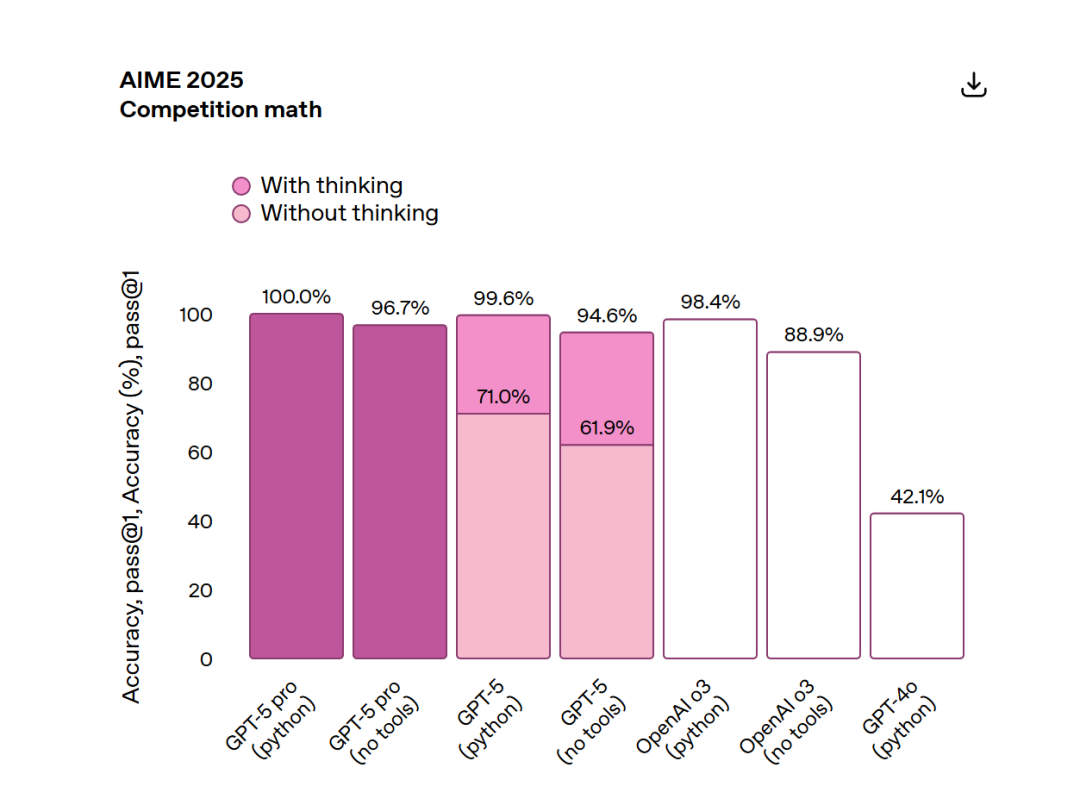

Beyond programming, GPT-5 also sets new highs for OpenAI's models in several other areas, including mathematics (94.6% on AIME 2025 without tools), multimodal understanding (84.2% on MMMU), and health (46.2% on HealthBench Hard).

GPT-5 also lends weight to the idea that "the model is the product": with tools enabled, GPT-5 Pro even scored a perfect 100% on AIME 2025 (the American Invitational Mathematics Examination).

However, it was an OpenAI blunder that became the first viral talking point. During the live demonstration, OpenAI made a glaring, elementary mistake: in one chart shown on stage, the bar heights did not match the numbers. The bars for 69.1 and 30.8 were exactly the same height, and the bar for 52.8 was even taller than the one for 69.1.

In response, Guangzhui Intelligence gave GPT-5 an arbitrary set of numbers and asked it to draw a bar chart. The bars in its output matched the data, which suggests the on-stage problem was a simple charting error rather than a flaw in the model's generation.
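
The rule the on-stage chart broke is simple: with a zero baseline, each bar's height must be proportional to its value. The minimal Python sketch below scales bars correctly and flags a chart whose height-to-value ratios differ. The "as drawn" pixel heights are hypothetical numbers chosen only to mimic the error described above; they are not measurements of OpenAI's slide, and `bar_heights` and `chart_is_consistent` are illustrative helpers.

```python
def bar_heights(values: list[float], max_height_px: float = 300.0) -> list[float]:
    """Scale values linearly from a zero baseline; the largest value gets max_height_px."""
    top = max(values)
    return [v / top * max_height_px for v in values]


def chart_is_consistent(values: list[float], heights: list[float], rel_tol: float = 1e-6) -> bool:
    """True if every bar has (nearly) the same height-to-value ratio, i.e. heights are proportional."""
    ratios = [h / v for v, h in zip(values, heights)]
    return max(ratios) - min(ratios) <= rel_tol * max(ratios)


values = [52.8, 69.1, 30.8]    # scores quoted in the article
drawn = [260.0, 200.0, 200.0]  # hypothetical heights: 52.8 tallest, 69.1 and 30.8 equal

print(chart_is_consistent(values, drawn))                # False
print(chart_is_consistent(values, bar_heights(values)))  # True
```

Comparing ratios rather than raw heights makes the check independent of the chart's overall pixel size.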

GPT-5 also brings improvements in ultra-long context understanding and hallucination, all aimed at making the model easier to put into real use.

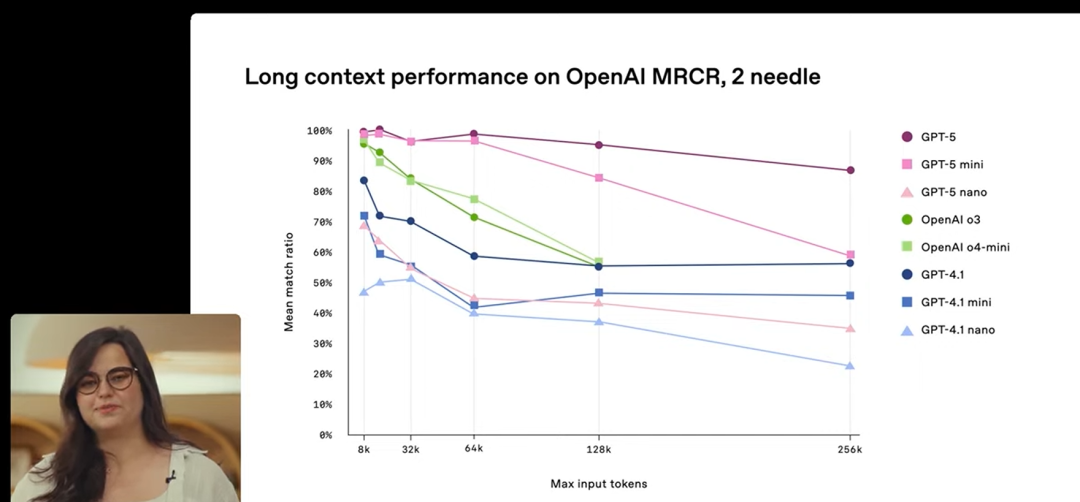

According to OpenAI's data, GPT-5's context understanding has improved overall, and at longer input lengths (for example, 128K to 256K tokens) its performance declines significantly more slowly than other models', as the graph shows. GPT-5 also hallucinates markedly less: OpenAI reports that its responses are about 45% less likely to contain a factual error than GPT-4o's, which matters for precision-critical fields such as law and healthcare.
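
Note that "45% less likely" is a relative reduction, not a drop of 45 percentage points. Writing $r$ for the share of a model's responses that contain a factual error, the claim works out to:

$$
r_{\text{GPT-5}} \approx (1 - 0.45)\, r_{\text{GPT-4o}} = 0.55\, r_{\text{GPT-4o}}
$$

For instance, if GPT-4o's responses contained a factual error 20% of the time (a hypothetical baseline, not an OpenAI figure), GPT-5's would contain one about 11% of the time.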

Judged on performance alone, GPT-5 is a comprehensive upgrade but clearly not the disruptive leap the public expected. On cost-effectiveness, however, its pricing leaves competitors far behind.

Take the standard GPT-5 API as an example: it costs $1.25 per million input tokens and $10 per million output tokens. Against Claude Opus 4.1's $15 per million input tokens and $75 per million output tokens, that is less than one-tenth of Claude's input price and less than one-seventh of its output price.
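
As a rough check on those ratios, the sketch below computes them from list prices and prices a hypothetical monthly workload. GPT-5's $1.25 and $10 per million tokens are OpenAI's launch list prices for the standard API. The sketch ignores cached-input discounts, batch pricing, and the fact that GPT-5's reasoning tokens are billed as output, and the 200M-input/20M-output workload is made up for illustration.

```python
# Per-million-token list prices in USD at GPT-5's launch (may have changed since).
PRICES = {
    "gpt-5": {"input": 1.25, "output": 10.00},
    "claude-opus-4.1": {"input": 15.00, "output": 75.00},
}


def cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost of a workload at list price (no caching, batch discounts, or reasoning-token detail)."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000


gpt5, claude = PRICES["gpt-5"], PRICES["claude-opus-4.1"]
print(f"input price ratio:  {gpt5['input'] / claude['input']:.3f}")    # 0.083, below 1/10
print(f"output price ratio: {gpt5['output'] / claude['output']:.3f}")  # 0.133, below 1/7 (0.143)

# Hypothetical monthly workload: 200M input tokens, 20M output tokens.
for model in PRICES:
    print(f"{model}: ${cost_usd(model, 200_000_000, 20_000_000):,.2f}")
```

Under these assumptions the workload costs $450.00 on GPT-5 versus $4,500.00 on Claude Opus 4.1. Output-heavy workloads narrow the gap toward the 7.5x output-price ratio.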

The low price is also what gives OpenAI the confidence to open GPT-5, with limits, to all users. Free users can try GPT-5 now, but unlike paid users, who get higher or unlimited usage depending on their plan, they are automatically switched to GPT-5 mini once they use up their quota.
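
The free-tier behavior amounts to a quota-based fallback. Here is a minimal Python sketch of the idea; the `FreeTierSession` class, the quota value, and the model labels are hypothetical, OpenAI sets and changes the real limits itself, and quota-window resets are not modeled.

```python
from dataclasses import dataclass


@dataclass
class FreeTierSession:
    quota: int     # hypothetical number of GPT-5 messages allowed per window
    used: int = 0  # messages sent so far in this window

    def pick_model(self) -> str:
        """Serve GPT-5 until the quota is spent, then fall back to the smaller model."""
        model = "gpt-5" if self.used < self.quota else "gpt-5-mini"
        self.used += 1
        return model


session = FreeTierSession(quota=3)
print([session.pick_model() for _ in range(5)])
# ['gpt-5', 'gpt-5', 'gpt-5', 'gpt-5-mini', 'gpt-5-mini']
```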

To meet developers' varying needs, GPT-5's API also adds a verbosity setting that controls how long the model's responses are, with three levels: low, medium, and high.
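
For developers, the verbosity setting is just another field in the request body. The sketch below only builds the JSON payload and makes no network call. The `"text": {"verbosity": ...}` layout for the Responses API and the `medium` default follow our reading of OpenAI's documentation at GPT-5's launch, and `build_responses_request` is a hypothetical helper, so check the current API reference before relying on it.

```python
import json

# The three levels described for GPT-5's API.
VERBOSITY_LEVELS = {"low", "medium", "high"}


def build_responses_request(prompt: str, verbosity: str = "medium") -> dict:
    """Build a Responses API request body with a verbosity hint (payload only, no HTTP call)."""
    if verbosity not in VERBOSITY_LEVELS:
        raise ValueError(f"verbosity must be one of {sorted(VERBOSITY_LEVELS)}")
    # Assumed field layout: verbosity nested under "text", per OpenAI's launch docs.
    return {"model": "gpt-5", "input": prompt, "text": {"verbosity": verbosity}}


body = build_responses_request("Summarize Bernoulli's principle.", verbosity="low")
print(json.dumps(body, indent=2))
```

Validating the level locally catches typos before a request is sent; the payload itself would be POSTed to the Responses endpoint by whatever HTTP client or SDK you use.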

Hard-to-Maintain SOTA, but Price May Win Over All

GPT-5 has set new SOTA records in several capabilities, but its lead is no longer the kind that takes rivals a long time to close.

Take OpenAI's headline claim of the "strongest programming model": its lead over Claude Opus 4.1 is just 0.4 percentage points, and Anthropic may well overtake it this month.
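
How small is 0.4 percentage points? SWE-bench Verified consists of 500 human-validated tasks, so, assuming both scores were computed on that full set (the labs' exact evaluation setups may differ), the gap comes to roughly two tasks. A short Python sketch of the conversion, with `margin_in_tasks` as an illustrative helper:

```python
def margin_in_tasks(score_a_pct: float, score_b_pct: float, n_tasks: int) -> float:
    """Convert a gap between two pass rates (in percent) into an approximate task count."""
    return (score_a_pct - score_b_pct) / 100 * n_tasks


GPT5_THINKING = 74.9   # SWE-bench Verified score reported for GPT-5 with reasoning
CLAUDE_OPUS_41 = 74.5  # SWE-bench Verified score reported for Claude Opus 4.1

gap_pp = GPT5_THINKING - CLAUDE_OPUS_41
print(f"gap: {gap_pp:.1f} percentage points")
print(f"about {margin_in_tasks(GPT5_THINKING, CLAUDE_OPUS_41, 500):.0f} tasks out of 500")
```

A two-task difference is small enough that a different agent scaffold or ordinary run-to-run variation could plausibly reverse it, which is why the lead looks fragile.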

From hiring away OpenAI staff last year to surpassing OpenAI's flagship model on some capabilities, Anthropic now occupies the position OpenAI once held when it sniped at Google's launches, following OpenAI step for step.

This month, on the same day OpenAI released two open-weight models, Anthropic beat it by about a dozen minutes with Claude Opus 4.1, a minor update. With the company also promising a "major model update" this month, a 0.4-point margin leaves little room for optimism about OpenAI's lead.

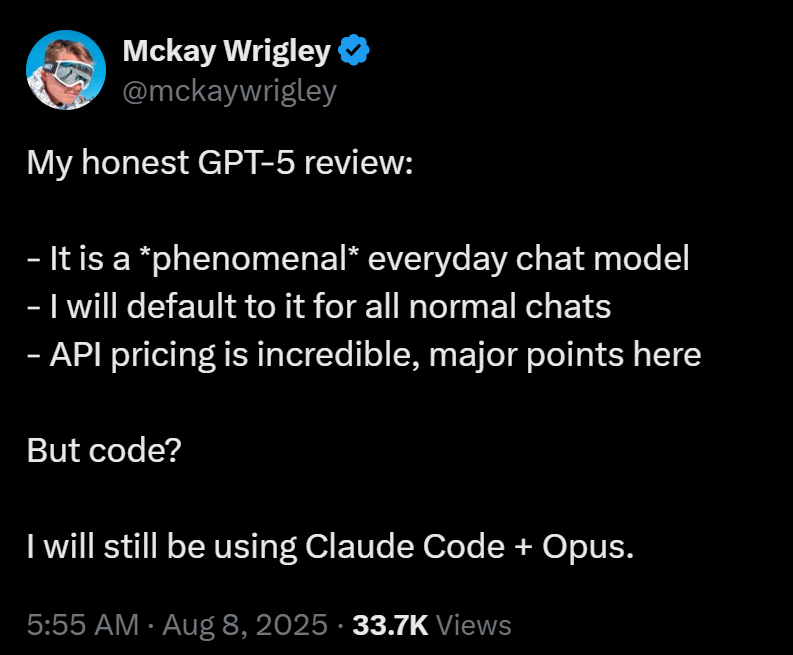

The slim lead has also polarized reviews. OpenAI may claim the best programming model, but it has not yet built an overwhelming advantage in user experience.

Some users say Claude beats GPT-5 in many cases, producing better UI and front-end results; others find the code GPT-5 generates more refined.

GPT-5's broad but slight gains may not even be as striking as Google's world model Genie 3, released earlier the same week. Genie 3 runs at 24 frames per second, enough to make its AI-generated worlds look smooth, and its 720p output is a clear step up in sharpness.

Under pressure from competitors like Anthropic and Google, the AI field's SOTA "throne" is getting harder both to hold and to turn into a lasting impression.

So where does competition among large models go once performance converges? OpenAI's answer is price. When technical leadership no longer forms an absolute barrier, a price war becomes the ultimate weapon for winning the market.

"GPT-5 is our smartest model to date, but what we mainly pushed for is real-world utility and mass accessibility/affordability," Altman said on X. "We can release much smarter models, and we will, but this is something a billion-plus people will benefit from."

Thanks to cost optimization, OpenAI's pricing, though not as low as China's cheap and plentiful open-source models, still beats Claude by a wide margin; Claude can easily cost a programmer thousands of dollars a month. Cutting API input and output prices to under one-tenth and one-seventh of Claude Opus 4.1's, respectively, is a disruptive cost advantage.

This is also why OpenAI devoted the second half of the launch event to a "Developer Session" showcasing the model's practical capabilities to the developer community, with endorsements from the CEO of Cursor and the Chief Scientist of Manus demonstrating its effectiveness for agents and vibe coding.

For consumers, free access to GPT-5 will be a major upgrade for anyone who previously could not afford paid models like o3. For businesses, the inexpensive API will appeal to developers looking for cost-effectiveness.

Over the year and a half GPT-5 spent in development behind closed doors, OpenAI's real barrier has shifted: it no longer rests on ever-shorter-lived SOTA leads, but on price and how well its models work in deployment.

When costs drop drastically to a point more accessible to the general public, the explosion of AI applications will be just around the corner.