AI SSDs: Accelerating Momentum

08/14 2025

The storage industry is currently abuzz with excitement.

On August 5, Micron unveiled three data center SSDs built on its G9 NAND technology, which it says address the diverse demands of AI workloads.

Around the same time, Kioxia launched a 245.76TB NVMe SSD tailored for generative AI, while SanDisk released its latest 256TB SSD dedicated to AI applications.

Storage giants have been unveiling SSD products one after another, each emphasizing its capacity to "empower AI".

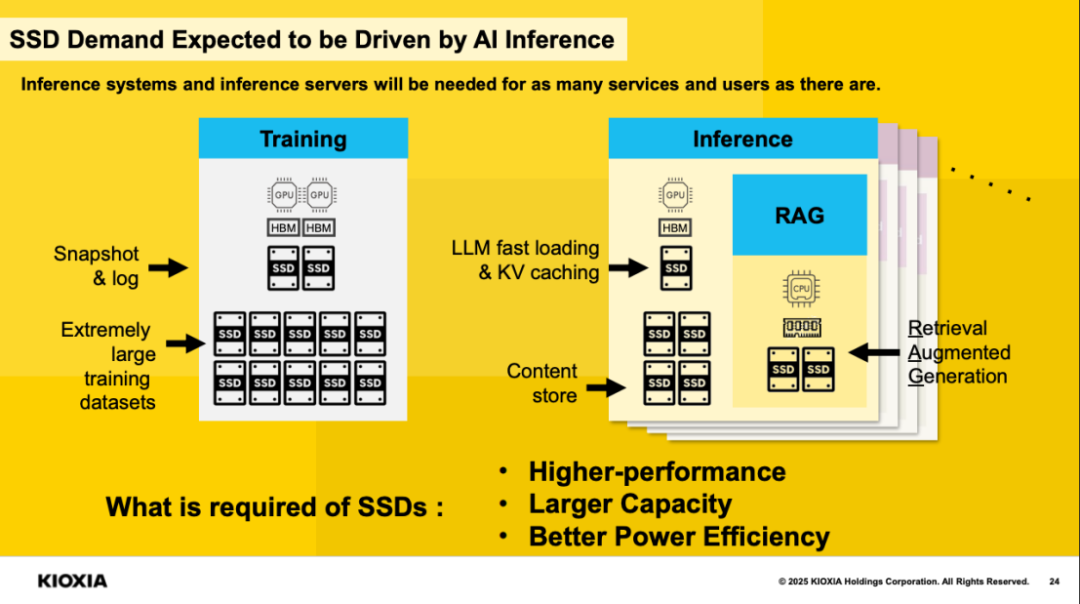

In the evolution of AI, the unique storage requirements of the two core processes—training and inference—have directly fueled the rapid rise of AI SSDs.

Unlike standard solid-state drives, AI SSDs are designed to handle the massive data throughput, low latency, and high IOPS (Input/Output Operations Per Second) demands of artificial intelligence applications such as deep learning, neural network training, and real-time data analysis.

AI Training: Demanding Storage Needs

Large models iterate rapidly, with each upgrade accompanied by an exponential increase in training data volume. PB-level data has become the baseline for AI training. The training process involves components like GPUs, HBM, and SSDs responsible for snapshots and logging, with significantly higher storage requirements than the inference process.

During training, the system needs to repeatedly read and write vast amounts of data, including training corpora, model parameters, log files, and intermediate results. The data flow frequency is extremely high and the load is continuous, with IO density far exceeding that of daily applications.

During AI model training, SSDs store the model parameters, including the constantly updated weights and biases. They also hold checkpoints, which periodically save training progress so that a run can resume from a specific point if it is interrupted. Both functions depend heavily on high transfer speeds and write endurance, which is why customers mainly choose 4TB/8TB TLC SSDs for training.
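The checkpoint-and-resume behavior described above can be illustrated with a minimal sketch. It is not any vendor's or framework's implementation: the function names (`save_checkpoint`, `load_checkpoint`, `train`), the JSON file format, and the toy "weight" update are all assumptions chosen for illustration. The one real technique shown is writing to a temporary file, syncing it to the drive, and renaming it, so an interruption never leaves a half-written checkpoint.

```python
import json
import os
import tempfile
from typing import Optional


def save_checkpoint(state: dict, path: str) -> None:
    """Write state to path atomically so a crash never leaves a partial file."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as f:
            json.dump(state, f)
            f.flush()
            os.fsync(f.fileno())  # push the data to the drive before renaming
        os.replace(tmp_path, path)  # atomic swap of the old checkpoint for the new
    except BaseException:
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise


def load_checkpoint(path: str) -> Optional[dict]:
    """Return the last saved state, or None if no checkpoint exists yet."""
    if not os.path.exists(path):
        return None
    with open(path) as f:
        return json.load(f)


def train(total_steps: int, checkpoint_every: int, path: str) -> dict:
    """Toy loop (hypothetical): resume from the checkpoint, save every N steps."""
    state = load_checkpoint(path) or {"step": 0, "weight": 0.0}
    while state["step"] < total_steps:
        state["weight"] += 0.5  # stand-in for a real gradient update
        state["step"] += 1
        if state["step"] % checkpoint_every == 0:
            save_checkpoint(state, path)
    return state
```

In a real training job the state would be gigabytes to terabytes of tensors rather than a small dictionary, which is where the write bandwidth and endurance of the SSD start to matter.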

AI Inference: The Core Supporting Role of SSDs

In AI inference, SSDs help adjust and optimize AI models, particularly by updating data in real time to fine-tune inference results. Inference services are largely built on Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG), and SSDs store the documents and knowledge bases that these systems reference to generate more informative responses. Currently, large-capacity TLC/QLC SSDs of 16TB and above are the primary products used in AI inference.

AI places three hard requirements on storage: high performance, large capacity, and high energy efficiency. Together they make SSDs the optimal solution in AI scenarios.

TrendForce projected that global procurement of AI-related SSDs would exceed 45EB in 2024. In the coming years, AI servers are expected to drive average annual growth in SSD demand of more than 60%, and the share of AI SSD demand in the overall NAND Flash market is expected to rise from 5% in 2024 to 9% in 2025.
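To get a feel for what 60% annual growth means, the compound-growth arithmetic can be sketched as follows. The 45EB baseline and the 60% rate are the TrendForce figures quoted above, both stated as lower bounds; the three-year horizon and the function name `project_demand` are assumptions for illustration, not a forecast.

```python
from typing import List


def project_demand(base_eb: float, annual_growth: float, years: int) -> List[float]:
    """Compound a baseline demand (in EB) forward by a fixed annual growth rate."""
    return [base_eb * (1 + annual_growth) ** n for n in range(years + 1)]


# Baseline year followed by three hypothetical years of 60% growth.
for offset, demand in enumerate(project_demand(45.0, 0.60, 3)):
    print(f"year +{offset}: {demand:.2f} EB")
```

At that rate demand roughly quadruples in three years (1.6 cubed is about 4.1).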

Kioxia: AI SSDs, Approaching from Two Aspects

Kioxia unveiled its medium- to long-term growth strategy for the AI era this year. The focus is on AI-driven storage technology innovation, SSD business expansion, and capital efficiency optimization to strengthen its competitiveness in the NAND flash market.

For AI SSDs, Kioxia has two product lines:

The first is high-performance SSDs. Kioxia's CM9 series, designed specifically for AI systems, is a PCIe 5.0 drive optimized for data centers and intended to keep GPUs that demand high performance and reliability fully utilized.

The second is capacity-oriented SSDs. Kioxia's LC9 series suits use cases such as the large databases used in inference. It initially offered a capacity of 122.88TB, with larger capacities planned.

A few days ago, Kioxia launched a new 245.76TB capacity. The LC9 series also uses QLC 3D flash memory, but it is distinguished by CBA (CMOS directly Bonded to Array) technology, which enables a capacity of 8TB in a small 154-ball grid array (BGA) package. Kioxia claims this is an industry first.

Source: Kioxia

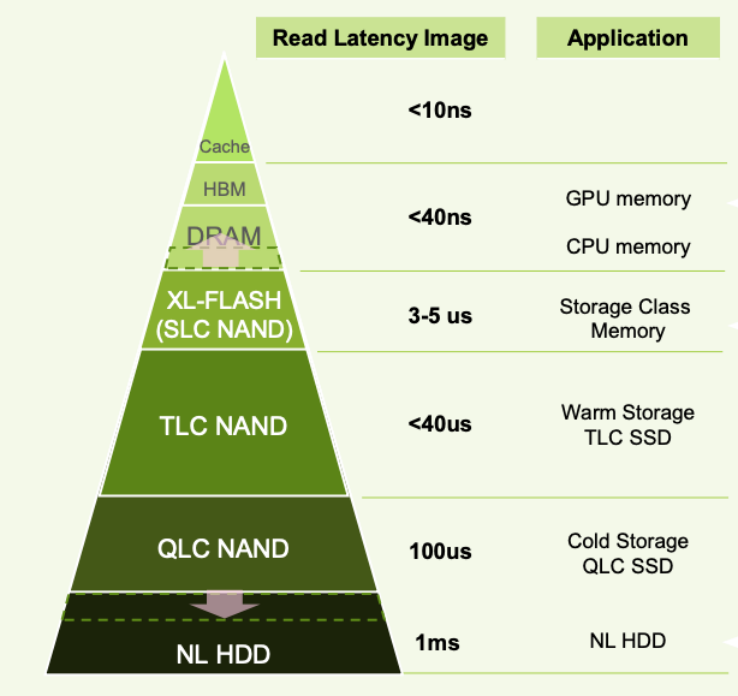

For future AI SSDs, Kioxia has also set out its own vision, with breakthroughs planned on two fronts. The first is higher speed. Current SSDs, mostly based on TLC and QLC, handle 2 to 3 million small reads and writes per second (IOPS). New products will use XL-FLASH SLC flash memory to raise that figure to over 10 million per second, which is particularly suitable for AI scenarios that frequently read fragmented data.

For background, XL-FLASH is a low-latency, high-performance NAND developed by Kioxia to fill the performance gap between volatile memory (such as DRAM) and conventional flash memory. Kioxia initially positioned XL-FLASH as a competitor to Intel's now-discontinued Optane memory. Kioxia's second-generation XL-FLASH uses an MLC (Multi-Level Cell) architecture, doubling the density and increasing chip capacity from 128Gb to 256Gb.
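An operations-per-second figure only translates into bandwidth once a transfer size is fixed. The sketch below shows that conversion. The IOPS values are the ones quoted above; the 512-byte and 4096-byte block sizes are assumptions for illustration, since the text does not say what transfer size the figures refer to.

```python
def throughput_gb_per_s(iops: float, block_bytes: int) -> float:
    """Bandwidth in decimal GB/s implied by an IOPS figure at a given block size."""
    return iops * block_bytes / 1e9


# Block sizes are hypothetical; the source does not specify them.
for iops in (3_000_000, 10_000_000):
    for block_bytes in (512, 4096):
        gbps = throughput_gb_per_s(iops, block_bytes)
        print(f"{iops:>10,} IOPS x {block_bytes:>4} B = {gbps:.2f} GB/s")
```

The same 10 million IOPS is a modest data rate at 512 bytes per operation and a very high one at 4KB, which is why small-block random access is usually quoted in IOPS rather than GB/s.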

The second is greater intelligence. Currently, AI data retrieval relies on memory. In 2026, Kioxia will launch the AiSAQ software, enabling SSDs to handle AI retrieval tasks on their own. This not only reduces the memory burden but also allows AI applications to run more efficiently, particularly suitable for smart terminals and edge computing devices.

Micron: The AI SSD Trio

Micron's latest AI SSD offerings comprise three products.

The first is the Micron 9650 SSD, the world's first PCIe 6.0 SSD, aimed primarily at data centers. It delivers sequential read speeds of up to 28 GB/s. According to Micron's tests, compared to PCIe 5.0 SSDs, the 9650 SSD provides up to 25% and 67% improvements in storage efficiency for random writes and random reads, respectively.

The second is the Micron 6600 ION SSD, with a maximum capacity of 245TB per drive. It targets hyperscale deployments and consolidated server infrastructure in enterprise data centers, where it is used to build large AI data lakes. Compared to competing products, this SSD offers up to 67% higher storage density, with more than 88PB of capacity in a single rack, significantly reducing the total cost of ownership (TCO).
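As a rough sanity check on the rack figure, the number of drives it implies can be worked out directly. This is a back-of-the-envelope sketch, not Micron's rack configuration: it assumes decimal units (1PB = 1000TB), raw capacity with no redundancy or spare drives, and uses a hypothetical helper name `drives_needed`.

```python
import math


def drives_needed(rack_capacity_pb: float, drive_capacity_tb: float) -> int:
    """Smallest number of drives whose combined raw capacity reaches the target."""
    return math.ceil(rack_capacity_pb * 1000 / drive_capacity_tb)


# 88PB per rack and 245TB per drive are the figures quoted in the text.
print(drives_needed(88, 245))
```

Under those assumptions, reaching 88PB takes 360 of the 245TB drives in one rack.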

The third is the Micron 7600 SSD, mainly used for AI inference and mixed workloads. It is said to achieve industry-leading sub-millisecond latency under highly complex RocksDB workloads.

Micron's product launches make its focus clear: speed, capacity, and cost-effectiveness. According to Micron's latest financial report, revenue for its fiscal third quarter, which ended May 29, 2025, was $9.3 billion, a year-over-year increase of 37%; net income was $2.181 billion, a year-over-year increase of 210.7%. NAND revenue was $2.155 billion, accounting for 23% of total revenue and up 16.2% sequentially. NAND bit shipments increased by approximately 25% sequentially. Micron stated that it expects record revenue for fiscal year 2025.

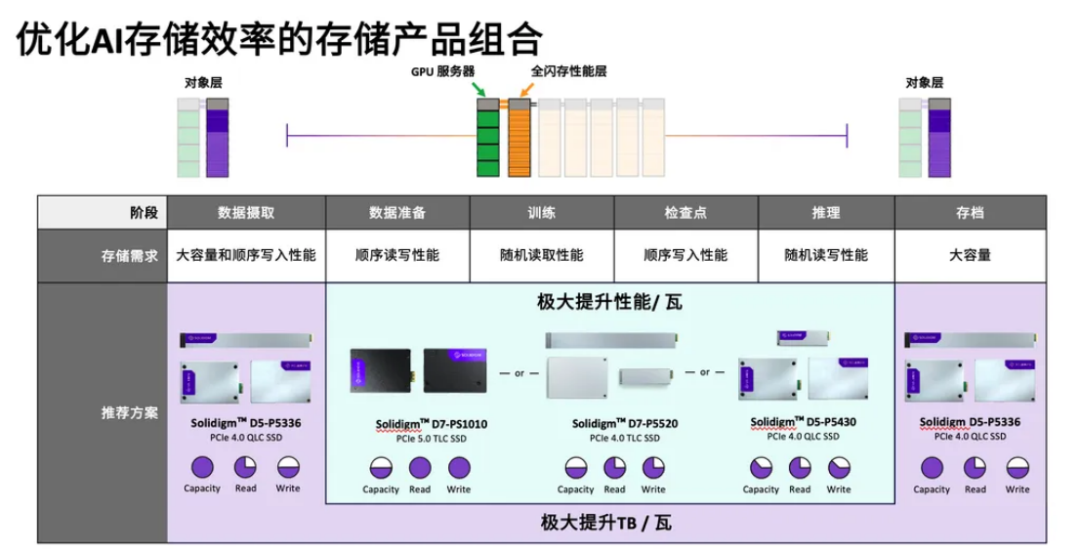

Solidigm: Storage Product Portfolio Optimizing AI Efficiency

Solidigm broadly classifies AI storage solutions into two categories.

One is Direct-Attached Storage (DAS), which focuses on IOPS per unit of power consumption for performance-sensitive scenarios such as training. The other is network storage (including NAS file/object storage), which targets large-capacity scenarios such as data ingestion, archiving, and Retrieval-Augmented Generation (RAG), requiring high read performance while also pursuing the lowest cost for storing massive amounts of data.

Solidigm has built a complete SSD product line covering SLC, TLC, and QLC. From the high-performance D7 series to the high-density D5 series, it aims to offer a suitable product for every stage of the AI pipeline, including data ingestion, preparation, training, checkpointing, inference, and archiving. The lineup includes the D7-PS1010 and D7-PS1030 PCIe 5.0 SSDs and the high-capacity D5-P5336 QLC SSD.

Another highlight of Solidigm in AI SSDs is QLC SSDs. Since launching its first QLC SSD in 2018, Solidigm has shipped over 100EB of QLC and served 70% of the world's leading OEM AI solution providers.

Not only is Solidigm driving the popularization and application of QLC technology, but it is also boldly experimenting with liquid-cooled SSD technology. In March 2025, Solidigm showcased its first cold plate liquid-cooled SSD using the Solidigm D7-PS1010 E1.S 9.5mm form factor, significantly improving heat dissipation efficiency.

Although most AI SSDs are not expected to launch until 2026, the product comparisons above show that the contours of the category are already taking shape.

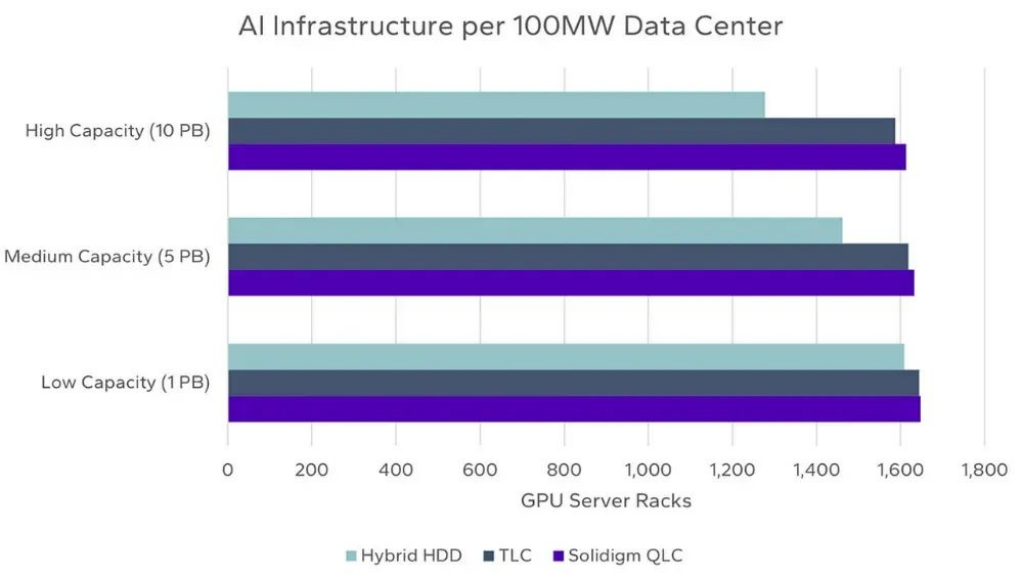

In terms of NAND flash type, AI SSDs are moving towards QLC. Kioxia CEO Toshiaki Yanagi also stated that QLC SSDs are the best choice for the AI industry. Performance per cell drops with each step from SLC to MLC, then to TLC, and finally to QLC, but technology has advanced enough that QLC SSDs in 2025 are already much faster than TLC SSDs from 2017. Today, QLC SSDs offer sequential read and write speeds of around 7000MB/s, enough to meet the data storage and retrieval requirements of large AI models.

Based on AI infrastructure scale for a 100-megawatt data center. Source: Solidigm

Solidigm modeled a new 100-megawatt AI data center to assess the impact of three storage options: QLC SSDs, TLC SSDs, and a hybrid deployment of TLC SSDs and hard disk drives. It found that QLC SSDs offer 19.5% higher energy efficiency than TLC SSDs and 79.5% higher energy efficiency than the hybrid of TLC SSDs and hard disk drives, so more complete sets of AI infrastructure can be deployed within the same data center when using QLC SSDs.

In terms of interfaces and protocols, a PCIe interface with NVMe protocol support will likely become the standard configuration for AI SSDs. PCIe bandwidth has been upgraded continuously, from PCIe 3.0 to the PCIe 5.0 in mainstream use today, and the industry has already moved on to PCIe 7.0, whose specification was released in June 2025.

As noted above, most of the SSDs launched so far support PCIe 5.0, and Micron has already launched a PCIe 6.0 product with sequential read rates of up to 28GB/s. AI SSDs will likely need to adopt PCIe 6.0 by next year to stay competitive.

However, PCIe 6.0 is still relatively expensive at present, with the first batch of PCIe 6.0 SSD products priced at $500-$800 for 1TB, which is 3-5 times the price of ordinary PCIe 4.0 SSDs. These products also need to be paired with CPUs and motherboards that support PCIe 6.0.
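The link between PCIe generation and the 28GB/s figure can be checked with a short calculation. The sketch below uses the per-lane transfer rates of each generation (8, 16, 32, 64, and 128 GT/s for PCIe 3.0 through 7.0) and assumes a four-lane link, which is typical for NVMe SSDs but is not stated in the text. It gives the raw one-direction ceiling only: the 128b/130b line encoding is applied for PCIe 3.0 to 5.0, while the FLIT and error-correction overhead of PCIe 6.0 and 7.0 is deliberately ignored, so real-world throughput is lower.

```python
# Per-lane transfer rate in GT/s for each PCIe generation.
GT_PER_S = {"3.0": 8, "4.0": 16, "5.0": 32, "6.0": 64, "7.0": 128}


def raw_bandwidth_gb_per_s(gen: str, lanes: int = 4) -> float:
    """Raw one-direction link bandwidth in GB/s, before protocol overhead."""
    rate = GT_PER_S[gen]
    # PCIe 3.0-5.0 use 128b/130b encoding; 6.0 and later use PAM4 signaling
    # with FLIT mode, whose overhead is not modeled in this sketch.
    encoding = 128 / 130 if gen in ("3.0", "4.0", "5.0") else 1.0
    return rate * encoding * lanes / 8


for gen in GT_PER_S:
    print(f"PCIe {gen} x4: {raw_bandwidth_gb_per_s(gen):.2f} GB/s")
```

A PCIe 5.0 x4 link tops out just under 16GB/s, so a 28GB/s drive only becomes possible with the roughly 32GB/s that a PCIe 6.0 x4 link provides.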

Furthermore, the NVMe protocol is specifically optimized for flash storage, providing SSDs with extremely high I/O throughput and low latency, which is crucial for reducing data access bottlenecks. The efficient data access mechanism built on top of the PCIe interface significantly reduces latency and enhances IOPS performance, fully leveraging the fast read and write characteristics of flash memory. With technological advancements, the PCIe interface and NVMe protocol will continue to evolve, incorporating emerging technologies such as CXL.

The AI SSD race is becoming increasingly crowded.

From cloud training to edge inference, storage not only needs to ensure basic performance but also needs to be deeply compatible with AI computing processes. Storage vendors are adjusting their technical routes, shifting from purely pursuing performance indicators to optimizing overall system synergy. This shift reflects the industry's deep understanding of the characteristics of AI workloads.

The current focus of technological competition has shifted from comparing hardware parameters to achieving seamless coordination between storage and computation. After all, in the era of AI, the best SSD is not the one with the highest benchmark score but the one that makes AI "forget" that storage even exists.