From "Capable of Driving" to "Able to Replace Humans": What Are the Real Barriers to the Widespread Use of Autonomous Driving?

10/17 2025

Vehicles equipped with Level 2 driver assistance (partial driving automation) are now widely available on the market, while Level 4 Robotaxis are in trial operation in select cities or in limited scenarios with small coverage areas. Yet a substantial gap remains between "what assisted driving can accomplish" and "large-scale commercialization that fully replaces human driving." Despite years of development, autonomous driving has yet to make that qualitative leap.

What exactly is obstructing its progress? Is it inadequate technology, high costs, outdated regulations, or a lack of public trust? The answer cannot be reduced to a single factor. Nevertheless, when we trace these factors back to their origins, we find one core contradiction: we still cannot demonstrate, in a verifiable and quantifiable way, that autonomous vehicles are sufficiently safe.

01 Perception and Long-Tail Scenarios: The "Last Mile" Challenge in Technology

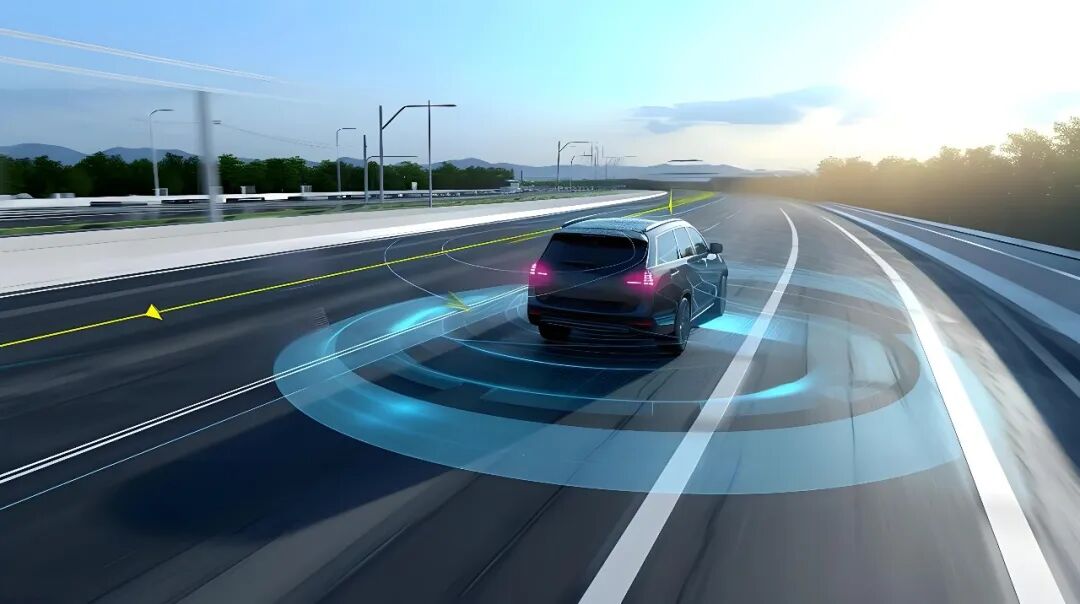

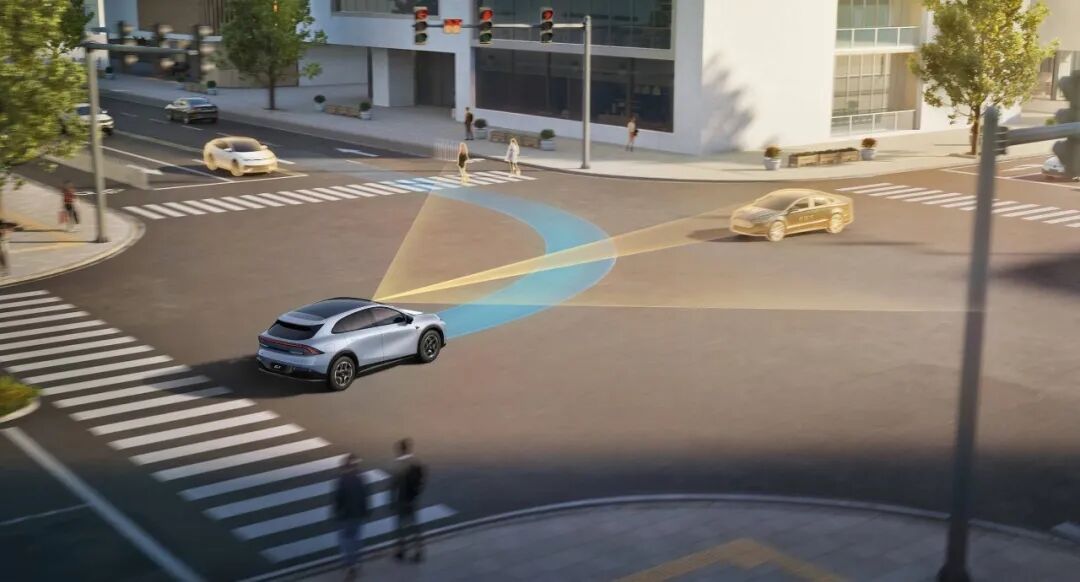

The perception system sits at the heart of autonomous driving and has long been a focal point of system design. Cameras excel at discerning details and colors, millimeter-wave radars perform reliably in poor visibility, and LiDAR provides dense point-cloud depth information. In theory, fusing data from these sensors for object detection, tracking, and scene understanding can offset the shortcomings of each individual sensor. In reality, the problem is far more complex than simply "adding up" three types of information.
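To make the fusion idea concrete, here is a minimal sketch of inverse-variance weighting, one textbook way to combine several distance estimates of the same object. The `RangeEstimate` type, the function name, and all numbers are hypothetical and purely illustrative; production stacks use far richer methods.

```python
from dataclasses import dataclass


@dataclass
class RangeEstimate:
    """One sensor's distance estimate to the same object (hypothetical type)."""
    sensor: str
    distance_m: float  # estimated distance in metres
    variance: float    # assumed measurement variance in m^2 (illustrative)


def fuse_inverse_variance(estimates):
    """Fuse estimates by inverse-variance weighting.

    Assumes the sensor errors are independent and unbiased.
    Returns (fused_distance_m, fused_variance).
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / e.variance for e in estimates]
    total = sum(weights)
    fused = sum(w * e.distance_m for w, e in zip(weights, estimates)) / total
    return fused, 1.0 / total


# Illustrative readings only; not measured data.
readings = [
    RangeEstimate("camera", 42.0, 4.0),
    RangeEstimate("radar", 40.0, 1.0),
    RangeEstimate("lidar", 40.5, 0.25),
]
fused_distance, fused_variance = fuse_inverse_variance(readings)
print(f"fused distance: {fused_distance:.2f} m, variance: {fused_variance:.3f} m^2")
```

The fused variance is lower than that of any single sensor, but only under the independence and zero-bias assumptions. If one sensor reports a biased value while still claiming a small variance, the fused estimate is pulled toward the wrong answer, which is one reason fusion is harder than "adding up" three inputs.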

Adverse weather and complex lighting, such as rain, snow, fog, irregular reflections, intense backlighting, and reflections from water on the road surface, can all introduce systematic deviations in sensor output. Such scenarios typically make up an extremely small share of the training data, so the model's uncertainty is high when it encounters unseen combinations. Then there is the issue of long-tail scenarios. The traffic environment is full of rare yet potentially fatal situations, including sudden pedestrian behaviors, non-standard traffic facilities, temporary construction sites, unusual animals crossing the road, and obscured traffic signs. Training the system to cover these long-tail scenarios demands an enormous volume of data, diverse collection scenarios, and extremely precise labeling. Relying solely on road testing or limited simulations is far from adequate.

Moreover, many existing perception and decision-making modules are based on deep learning, which often lacks reliable confidence metrics and explainable failure modes. As a result, when the system makes an error on a real road, it is hard to pinpoint whether the cause was a perception failure, a prediction error, a planning problem, or an execution abnormality, which in turn makes it difficult to develop effective remediation and certification methods. Many technical solutions propose mitigating these issues through redundant sensing, rule checking, and edge safety controls. However, these approaches increase the cost and complexity of autonomous driving systems without fundamentally eliminating long-tail risks.

02 Institutional Challenges in Proving "Sufficient Safety"

Even if a technology team develops an autonomous driving system that appears flawless, with industry-leading perception, prediction, and planning capabilities, does that guarantee its widespread deployment? Not necessarily. For consumers, what matters is an evidence chain showing that "this system is safer than human driving over the long term within the target Operational Design Domain (ODD)." Building such an evidence chain requires a systematic verification and validation (V&V) framework, massive amounts of real-world vehicle data, strict scenario coverage standards, and statistically convincing confidence intervals.
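To give a sense of what "statistically convincing" can mean, the sketch below computes how many event-free miles a fleet would need to drive before it could claim, at a chosen confidence level, that its event rate is below a target. It assumes events follow a Poisson process with a constant rate, and the benchmark of one fatal event per 100 million miles is an illustrative assumption, not a figure from this article.

```python
import math


def failure_free_miles_needed(target_rate_per_mile, confidence):
    """Miles to drive with zero events to support the claim that the
    true event rate is below target_rate_per_mile.

    Assumes a Poisson process with constant rate: the probability of
    zero events in m miles at rate r is exp(-r * m). Requiring that
    probability to be at most (1 - confidence) and solving for m gives
    the formula below.
    """
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be strictly between 0 and 1")
    if target_rate_per_mile <= 0.0:
        raise ValueError("target rate must be positive")
    return -math.log(1.0 - confidence) / target_rate_per_mile


# Illustrative benchmark only: one fatal event per 100 million miles.
benchmark_rate = 1.0 / 100_000_000
miles = failure_free_miles_needed(benchmark_rate, confidence=0.95)
print(f"event-free miles needed: {miles / 1e6:.0f} million")
```

Under these assumptions the answer is roughly 300 million event-free miles, and that only supports "no worse than the benchmark." Showing a clear margin over human driving, or tolerating some observed events, requires more mileage, which is why road testing alone struggles to supply the evidence.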

This is precisely why "safety certification" has emerged as one of the core obstacles to the commercialization of autonomous driving. Existing safety standards (such as ISO 26262 for functional safety and the ongoing work on SOTIF, ISO 21448) can constrain electronic and software failures and known hazards, but they lack mature, universally accepted testing protocols for "AI uncertainties in the open world." As a result, judicial and regulatory bodies are reluctant to approve widespread deployment, because in the event of an accident the boundaries of liability, the recall and repair mechanisms, and the insurance compensation must all be clearly defined. Many companies choose to start with small-scale trials within defined ODDs (such as limited routes, specific weather conditions, or operation with remote takeover or safety operators). However, this approach also restricts the growth of scalable business models: the serviceable scenarios are too narrow, and marginal revenues are insufficient to cover high research, development, and operational costs.

Techniques such as scenario-based simulation, verification methods based on causal inference, synthetic data augmentation for rare scenarios, formal verification of critical submodules, redundant systems, and continuous online health monitoring can, on the technical side, mitigate the problem of poor explainability. Nevertheless, these methods are either costly or still lack socially accepted, authoritative evidence in certain areas. In other words, technological incompleteness leads directly to institutional hesitation, creating a feedback loop that hinders widespread adoption.

03 Cost and Business Models: Who Bears the Cost of Safety?

Perception hardware (especially high-precision LiDAR), high-performance computing power, redundant actuators, continuous high-definition mapping and cloud updates, and large-scale data labeling and training all represent significant cost investments. Early Robotaxi companies relied primarily on venture capital and subsidies to support an "invest first, scale later" strategy. However, for long-term commercial viability and self-sufficiency, cost control is essential.

To enable the commercial application of autonomous driving, two paths can be taken. The first is cost reduction: lowering hardware costs, computing power consumption, and mapping maintenance costs to make the system economically replicable. The second is limiting ODDs: initially focusing on scenarios that can achieve economies of scale (such as fixed-route campus logistics, enclosed or low-speed urban shuttles, and industrial park autonomous delivery) and using scale and frequency to dilute per-unit costs. Both approaches require corresponding regulatory and insurance mechanisms; otherwise, even if costs can be reduced, large-scale adoption will remain elusive.
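The dilution effect of scale can be sketched with a simple amortization model: per-vehicle fixed costs are spread over the number of trips served, while per-trip variable costs stay constant. The function name and every figure below are hypothetical placeholders, not industry data.

```python
def cost_per_trip(fixed_cost_per_vehicle, variable_cost_per_trip, trips):
    """Average cost of one trip once fixed costs are spread over all trips.

    A deliberately simple model: it ignores financing, depreciation
    schedules, and any costs that grow with fleet size.
    """
    if trips <= 0:
        raise ValueError("trips must be positive")
    return fixed_cost_per_vehicle / trips + variable_cost_per_trip


# Hypothetical figures for illustration only.
fixed_cost = 200_000.0   # sensors, compute, redundant actuators per vehicle
variable_cost = 3.0      # energy, remote support, cleaning per trip
trip_counts = (1_000, 10_000, 100_000)
costs = [cost_per_trip(fixed_cost, variable_cost, n) for n in trip_counts]

for n, c in zip(trip_counts, costs):
    print(f"{n:>7} trips -> {c:.2f} per trip")
```

With these placeholder numbers the average falls from 203.00 to 23.00 to 5.00 per trip as volume grows, which illustrates why high-frequency, fixed-route scenarios are attractive starting points. The model also shows the floor: per-trip cost can never drop below the variable component, so both paths described above matter.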

In addition to hardware costs, operational and maintenance costs, continuous mapping and software updates, legal compliance costs, and post-accident compensation and reputation recovery can account for a substantial proportion of total costs. To bring autonomous driving to the mass market, sustainable business models must be found, such as mileage-based pricing for mobility services, data-driven differentiated pricing, or cost-sharing partnerships with urban transportation infrastructure. However, the implementation of these business models hinges on whether laws can clearly define liability and reduce operational risks.

04 Regulations, Liability, and Public Acceptance: An Interconnected Triangle

For autonomous driving technology, public acceptance is a highly sensitive indicator. A single fatal accident can be enough to turn public opinion and tighten regulatory scrutiny. Whether the public is willing to entrust their lives to algorithms depends on whether they understand the risks, trust regulatory bodies to hold parties accountable after incidents, and see long-term statistical evidence that the system is truly safer. Regulators must strike a delicate balance between protecting public safety and not stifling technological innovation.

Liability allocation is also one of the core challenges hindering the widespread adoption of autonomous driving. When a vehicle operates with a higher degree of automation, who is responsible in the event of an accident: the vehicle manufacturer, software developer, map provider, or operator? The insurance industry is also waiting to see how to price autonomous driving risks. The lack of a clear legal framework discourages investors and operators, delaying large-scale deployment.

05 Final Thoughts

After reading this, you might ask: What is the biggest obstacle? My conclusion is that it cannot be attributed to a single factor. The key challenge to the widespread adoption of autonomous driving is the absence of "provable long-term safety," which tightly binds together technological maturity, cost pressures, regulatory lag, and public trust. The technology cannot yet deliver predictable performance in all long-tail scenarios, so regulators cannot confidently approve widespread deployment. Regulatory hesitation limits scale, which in turn means too little data accumulation and cost dilution to improve the technology further. Without scale and clear regulations, the public has no long-term safety record to judge by, so acceptance is slow to improve. It is a closed loop, and trying to break this systemic bottleneck through a single point of improvement is unlikely to succeed.
