AI Chips: Dual Strategies, One High-Stakes Game

10/17 2025

In the high-stakes chess match of computing power, the clatter of moves echoes through the bustling AI landscape of 2025.

Recently, two chip behemoths have clinched billion-dollar computing power deals with leading AI firms. One is leveraging technological dominance to carve out new growth paths, while the other is forging alliances through equity ties. These two contrasting strategies are sharpening the competitive edge in the global AI chip arena, making the core battle increasingly intense and clear-cut.

01 AI Chip Titans: Two Distinct Strategies

In September, NVIDIA and OpenAI unveiled a strategic partnership: OpenAI will deploy a minimum of 10GW (10 gigawatts, equivalent to 10 million kilowatts) of NVIDIA systems, comprising millions of GPUs. In return, NVIDIA will continuously invest in OpenAI as these computing capabilities are rolled out, with total investments reaching up to $100 billion (approximately RMB 711.47 billion).

Notably, 10 gigawatts of computing power corresponds to roughly 4-5 million GPUs. That is about equal to NVIDIA's entire AI chip output for 2025 and double its shipment volume from the previous year.
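The ratio between the two figures can be checked with quick arithmetic. The sketch below is illustrative only: it assumes the 10GW is spread evenly across all GPUs, so the per-GPU number is an all-in facility budget (cooling, networking, and other overhead included), not a chip power rating. The function and constant names are made up for this example.

```python
# Figures quoted in the article.
TOTAL_POWER_GW = 10
GPU_COUNT_RANGE = (4_000_000, 5_000_000)  # "4-5 million GPUs"


def watts_per_gpu(total_gw: float, gpu_count: int) -> float:
    """All-in power budget per GPU in watts, assuming an even split."""
    return total_gw * 1e9 / gpu_count


def gpus_per_gw(total_gw: float, gpu_count: int) -> float:
    """Number of GPUs implied per gigawatt of deployed capacity."""
    return gpu_count / total_gw


for count in GPU_COUNT_RANGE:
    print(
        f"{count:,} GPUs -> {watts_per_gpu(TOTAL_POWER_GW, count):,.0f} W per GPU, "
        f"{gpus_per_gw(TOTAL_POWER_GW, count):,.0f} GPUs per GW"
    )
```

Under that assumption, each gigawatt corresponds to roughly 400,000-500,000 GPUs, or about 2.0-2.5 kW of facility power per GPU.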

The two companies also announced that the first gigawatt-scale data center, powered by NVIDIA's Vera Rubin platform, will be deployed in the second half of 2026.

This collaboration is pivotal for both NVIDIA and OpenAI. Jensen Huang, NVIDIA's CEO, hailed it as the "largest computing power project in history" and the "largest AI infrastructure project in history."

Sam Altman, OpenAI's CEO, stated that without this partnership, OpenAI would struggle to deliver the services its users demand or to keep developing better models. This infrastructure is the "fuel" that lets OpenAI improve its models and grow revenue.

In early October, OpenAI and AMD struck another significant deal. OpenAI will deploy up to 6GW of AMD Instinct GPUs over the next few years. Under the agreement, the first 1GW of equipment, based on the AMD Instinct MI450 series, is scheduled to come online in 2026, with later deployments extending to subsequent generations.

AMD has granted OpenAI warrants for up to 160 million shares, with exercise terms linked to chip deployment milestones and stock price targets. If OpenAI fully exercises these warrants, it could acquire roughly 10% of AMD's equity based on its current outstanding shares. This equity binding has transformed OpenAI and AMD into a "community of shared interests."

So, what impacts do these partnerships with OpenAI have on NVIDIA and AMD, respectively?

02 The Impact on NVIDIA and AMD

Firstly, the most immediate impact is the direct revenue boost for both companies.

Jensen Huang revealed during an earnings call that the cost of constructing 1GW of computing power is approximately $50-60 billion, with NVIDIA's chips and systems accounting for about $35 billion. Based on this, the investment scale for the latest 10GW data center construction project is estimated to be around $500-600 billion, roughly in line with the previously announced Stargate project scale. NVIDIA is poised to generate approximately $350 billion in revenue from this endeavor.
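The estimate above is a straight multiplication of the per-gigawatt figures. A minimal sketch of that arithmetic follows, assuming costs scale linearly with capacity (a simplification; the names below are hypothetical and used only for illustration):

```python
# Per-gigawatt figures quoted in the article, in billions of US dollars.
COST_PER_GW_USD_BN = (50, 60)   # total build cost per GW (low, high)
NVIDIA_PER_GW_USD_BN = 35       # NVIDIA chips and systems per GW
PROJECT_GW = 10                 # size of the OpenAI deployment


def project_totals(gw, cost_range, nvidia_per_gw):
    """Scale per-GW figures linearly to the whole project (assumption)."""
    low, high = cost_range
    return {
        "total_low": gw * low,
        "total_high": gw * high,
        "nvidia": gw * nvidia_per_gw,
    }


totals = project_totals(PROJECT_GW, COST_PER_GW_USD_BN, NVIDIA_PER_GW_USD_BN)

# NVIDIA's share of total spend: lowest when the build cost is at its high end.
nvidia_share_range = (
    NVIDIA_PER_GW_USD_BN / COST_PER_GW_USD_BN[1],
    NVIDIA_PER_GW_USD_BN / COST_PER_GW_USD_BN[0],
)

print(totals)
print(f"NVIDIA share of spend: {nvidia_share_range[0]:.0%} to {nvidia_share_range[1]:.0%}")
```

On these numbers, NVIDIA's hardware would account for somewhere between about 58% and 70% of total project spending.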

Lisa Su, AMD's CEO, expects the agreement to bring in tens of billions of dollars in additional annual revenue and to spur other customers to follow suit, lifting AMD's total new revenue over the next four years above $100 billion.

Beyond direct revenue, the collaboration between OpenAI and these two AI chip leaders also carries significant potential implications for the entire AI market. Industry insiders have noted:

Firstly, at the pricing level, NVIDIA's bargaining power may be diluted. OpenAI's ability to compare prices through its partnership with AMD could compel NVIDIA to reduce its premiums in subsequent orders.

Secondly, at the technological level, OpenAI will directly participate in the design feedback stages of multiple generations of AMD products, from the Instinct MI300X and MI350X to the MI450. The cooperation between OpenAI and AMD goes beyond a supply-and-demand relationship and establishes a framework for joint hardware and software R&D. Both parties will share product roadmaps and collaborate to optimize GPU architectures, memory bandwidth, AI accelerators, and AMD's ROCm software ecosystem. This could directly weaken NVIDIA's advantage in "hardware-software" synergy.

Finally, at the market level, the core value of tens of billions of dollars in additional annual revenue from OpenAI lies in the leverage of customer endorsement. OEMs like Dell and Lenovo are already ramping up mass production of MI300X systems, and cloud providers like Microsoft and Oracle are following suit in procurement. OpenAI's involvement reinforces this lead-customer effect. Lisa Su recently stated that the AI boom is expected to last a decade, with this year being only its second. With computing power demand set to stay concentrated over the next eight years, AMD's close cooperation with OpenAI secures a crucial market entry point.

03 Market Upheaval: Who Stands to Gain?

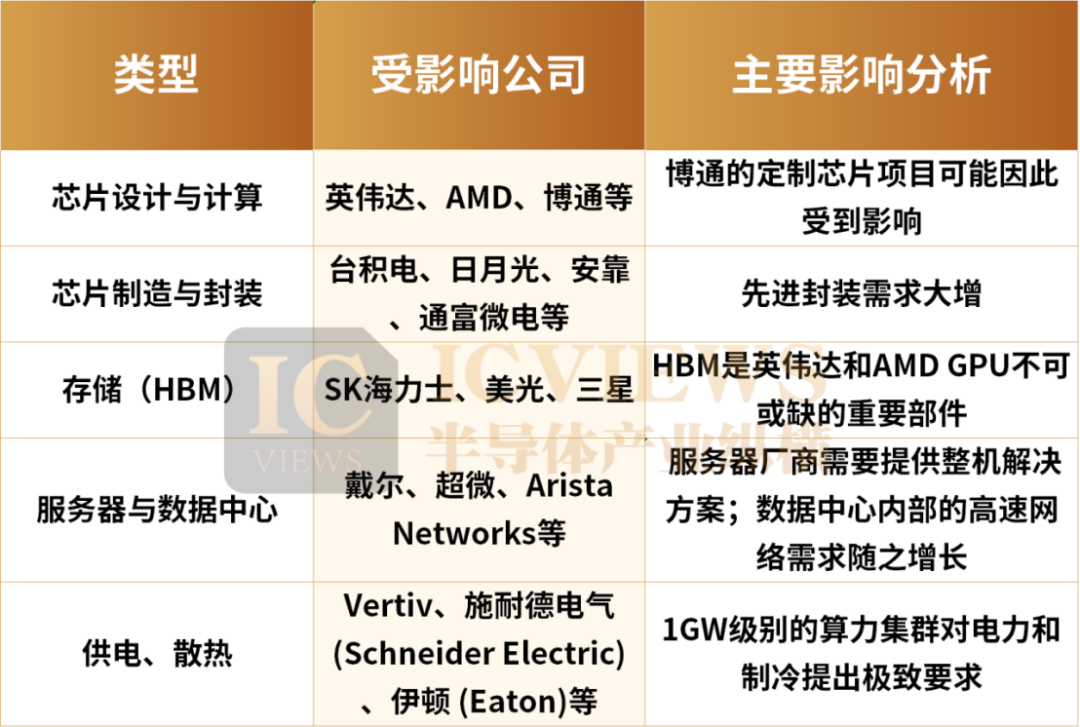

In chip design and computing, OpenAI's custom chip project with Broadcom may be affected to some degree, but Broadcom's switch chip business could still benefit from AI data center construction.

However, this strategic move extends far beyond the chip design realm.

In chip manufacturing and packaging, both AMD and NVIDIA rely on TSMC for foundry services and use advanced packaging technologies such as CoWoS. These large orders will continue to drive up demand for TSMC's advanced process and packaging capacity. Packaging and testing companies like ASE and Amkor will also benefit from the soaring demand for AI chips. Notably, ASE, the world's largest independent OSAT (Outsourced Semiconductor Assembly and Test) provider, is accelerating its advanced packaging buildout in Kaohsiung, upgrading capacity across high-end technologies like CoWoS, SoIC, and FOPLP.

There is also a company that may not be the brightest star but is an indispensable "behind-the-scenes hero" for giants like AMD: Tongfu Microelectronics. Tongfu Microelectronics works with AMD under a "joint venture plus cooperation" model. The two have a close strategic partnership and long-term business agreements under which Tongfu provides packaging and testing services for AMD's AI PC chips and for its AI accelerators used in training and inference. Tongfu is now AMD's largest packaging and testing supplier, and AMD is in turn a major client of Tongfu Microelectronics.

Furthermore, AMD holds a 15% stake in Tongfu Microelectronics' Penang and Suzhou factories, with over 60% of Tongfu Microelectronics' annual order revenue coming from AMD (64% in the first half of 2025).

The announcement of cooperation between OpenAI and AMD will directly benefit Tongfu Microelectronics.

In memory (HBM), SK Hynix, Micron, and Samsung are the main suppliers of high-bandwidth memory, an indispensable component of NVIDIA and AMD GPUs, so all three stand to benefit from the industry boom. Notably, recent market news indicates that NVIDIA has all but confirmed that its latest AI accelerator, the GB300, will use Samsung's fifth-generation high-bandwidth memory, HBM3E, meaning Samsung can finally enter NVIDIA's supply chain after multiple setbacks. The two companies are currently finalizing details such as supply volumes, prices, and delivery schedules.

In the server and data center sectors, server manufacturers like Dell and Supermicro supply complete system solutions, and these deals will drive orders for them. Demand for high-speed networking inside data centers will also grow, and the upgrade to 400/800G Ethernet in AI data centers will benefit network equipment providers like Arista Networks.

In terms of power supply and cooling, 1GW-level computing clusters impose extreme requirements on power and cooling. This will directly benefit data center infrastructure suppliers like Vertiv, Schneider Electric, and Eaton in power distribution and liquid cooling.

04 OpenAI's Third Path

While collaborating with international GPU chip leaders, OpenAI is also exploring a third path: developing its own chips.

OpenAI began collaborating with Broadcom last year to develop its first custom AI inference chip, aimed at handling its large-scale AI workloads, particularly inference tasks.

Broadcom will assist OpenAI in chip design and arrange manufacturing by TSMC, with production expected to commence in 2026. As the world's largest contract chip manufacturer, TSMC has both advanced manufacturing technology and massive production capacity. According to previous reports, the chips developed by OpenAI will be produced first on TSMC's 3nm process and later on its 1.6nm process.

To diversify its chip supply, OpenAI previously planned to establish its own chip foundry. However, due to the high costs and significant time required to build a foundry network, OpenAI has shelved this plan and is focusing on internal chip design instead.

OpenAI's strategy of developing its own chips matters for two main reasons:

Firstly, OpenAI is one of NVIDIA's largest GPU buyers and has previously relied almost entirely on NVIDIA GPUs for training. Since 2020, OpenAI has developed its generative AI technology on a large supercomputer built by Microsoft, which uses over 10,000 NVIDIA GPUs. However, due to chip shortages, supply delays, and high training costs, OpenAI has had to explore alternative solutions.

As early as 2024, OpenAI CEO Sam Altman expressed concerns on social media about the insufficient supply of NVIDIA GPUs, which constrained its AI development progress. This decision to develop its own chips aims to gradually reduce reliance on a single supplier through internal technological iteration and achieve greater autonomy.

Secondly, OpenAI is not alone. Other major players in the AI market, including Meta, Microsoft, and Amazon, have launched their own high-performance AI chips, such as Meta's MTIA series and Microsoft's Azure Maia 100. Amazon's self-developed Trainium chips reportedly offer 30-40% better cost-effectiveness than GPUs from suppliers such as NVIDIA.

However, neither self-developed AI chips nor AMD's accelerated progress is likely to significantly dent NVIDIA's market share in the short term.

According to the latest report from analysts at Susquehanna, NVIDIA's share of the AI GPU market is approaching 80%, reflecting its strength in technological innovation and product portfolio.

Despite increased market competition in the future, Susquehanna predicts that NVIDIA will still hold a 67% market share by 2030. Although this represents a decline from its current position, it still far exceeds other competitors, demonstrating its unshakable industry status.

The data also shows that other vendors are actively seeking breakthroughs in a market dominated by NVIDIA. As the second-largest beneficiary, AMD is expected to capture a market share of approximately 4% by 2030. Although this proportion is relatively low, its annual revenue is expected to grow from the current $6.3 billion to $20 billion, indicating strong growth potential.

Meanwhile, Broadcom has carved out new market space by focusing on the development of custom ASIC chips. Analysts predict that Broadcom will capture a 14% market share by 2030, with revenue reaching $60 billion, a significant increase from this year's $14.5 billion.
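To put the two revenue forecasts on a comparable footing, they can be converted into implied compound annual growth rates. The sketch below assumes the "current" and "this year" figures refer to 2025, giving a five-year horizon to 2030; the article does not state the baseline year explicitly, so treat the results as rough.

```python
# Revenue figures quoted in the article, in billions of US dollars.
FORECASTS = {
    "AMD": (6.3, 20.0),
    "Broadcom": (14.5, 60.0),
}
YEARS = 2030 - 2025  # assumption: the baseline year is 2025


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes `start` to `end` in `years`."""
    return (end / start) ** (1 / years) - 1


for vendor, (start, end) in FORECASTS.items():
    print(f"{vendor}: {end / start:.1f}x growth, about {cagr(start, end, YEARS):.0%} per year")
```

Under that assumption, AMD's forecast implies growth in the mid-20% range per year and Broadcom's in the low-30% range, even though AMD's projected market share is much smaller.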