NVIDIA's "Suppressed" 25 Years

07/02 2024

In the mid-19th century, the western United States saw a booming gold rush, but the people who ultimately got rich were not the prospectors who risked their lives. A merchant named Sam Brannan became the first millionaire in California's history by selling shovels to the gold diggers.

Every time a new trend emerges, some companies want to play the role of "shovel seller." In the waves of the metaverse and artificial intelligence especially, some have even labeled themselves "shovel sellers" outright in their presentations, for fear that outsiders might misunderstand their positioning.

So far, however, only NVIDIA seems to truly deserve the title.

On June 18th, NVIDIA's market capitalization reached 3.34 trillion US dollars, overtaking Microsoft to become the most valuable company in the world. Hans Mosesmann, an analyst at Rosenblatt Securities, went so far as to predict that NVIDIA would keep climbing over the next year, with its market capitalization approaching 5 trillion US dollars.

Looking back at NVIDIA's growth, this "shovel seller," founded in 1993, only saw its market capitalization rise sharply after 2017, yet the core technologies underpinning its valuation today all originated before 2017.

So why did NVIDIA remain so unremarkable for its first 25 years?

01 Choosing the Right Track in Graphics, Nearly Undone by the "Wrong Route"

In early 1993, 30-year-old Jen-Hsun Huang quit his job at LSI Logic and founded his first company, NVIDIA, betting on graphics processors.

At the time, more than 20 companies were already in the market, including large corporations such as IBM, HP, Sony, Fujitsu, and Toshiba, as well as many startups like NVIDIA. Drawing on Huang's connections and experience in the semiconductor industry, NVIDIA still managed to carve out a place.

In May 1995, NVIDIA released its first product, NV1. There was no concept of a graphics card back then, so the product was called a multimedia accelerator. Technically, it integrated 2D and 3D graphics processing and also offered video processing, wavetable audio, game ports, and other functions.

The month after NV1's release, NVIDIA secured its first round of financing from Sequoia Capital and Sierra Ventures. Because NV1 integrated sound and joystick control onto the chip, NVIDIA also caught the attention of the game console maker Sega, winning the contract for the graphics processing in Sega's next-generation console along with a 7 million US dollar development deposit.

NVIDIA seemed poised to advance quickly in gaming and rise to prominence in the graphics processor market, but Microsoft's arrival rewrote its trajectory.

At the time, graphics acceleration was in its infancy and the industry had no unified standard. Many companies adopted "triangle rendering," while NVIDIA chose "quadrilateral rendering." In 1996, Microsoft released the Direct3D standard and announced that graphics software would support only "triangle rendering." NVIDIA, however, was slow to recognize this shift and stuck with the "quadrilateral rendering" route.

Once the industry standard was set, NVIDIA paid for its "mistake": NV1, which had taken two years to develop, soon became obsolete, and NV2, developed for Sega, ended in failure, pushing NVIDIA to the verge of bankruptcy. Meanwhile, 3dfx, which had sided with the prevailing trend, captured 80% of the market within a year with its first product, Voodoo, and snatched Sega's order away from NVIDIA.

After deep reflection, Jen-Hsun Huang realized that "if we want to survive, we must make changes."

On the personnel side, Huang brought in David Kirk, Chief Technology Officer of the console game developer Crystal Dynamics, as NVIDIA's "Chief Scientist." On the R&D side, NVIDIA pivoted to the mainstream market, taking aim at 3dfx, which had sparked the 3D technology revolution. It also adopted an internal six-month product cycle so that a single failed product would not threaten the company's survival, because the next-generation replacement would already be ready.

In April 1997, NVIDIA released its third-generation product, NV3, marketed as RIVA 128. Although its image quality was inferior to that of 3dfx's Voodoo, its pixel fill rate of 100 million pixels per second, OpenGL compatibility, and lower price won over OEM manufacturers. Shipments exceeded 1 million units in less than a year, pulling NVIDIA back from the brink.

Over the following year and a half, NVIDIA launched RIVA TNT and RIVA TNT2 in quick succession, steadily expanding its market share through a combination of strategies. 3dfx, which had briefly dominated the market, retreated step by step. NVIDIA's revenue exceeded 150 million US dollars in 1998, and the company went on to list on NASDAQ.

1999 was a major milestone in NVIDIA's history. Besides its listing, NVIDIA launched its first GeForce-branded product, the GeForce 256, in August of that year, introducing the concept of the GPU for the first time. Dell, Gateway, Compaq, NEC, IBM, and others then announced that they would preinstall NVIDIA GPUs. NVIDIA, which had nearly collapsed midway, became a rising star of Silicon Valley in the eyes of the media.

02 CUDA, the Future "Deity," Brought On a Third Crisis

Interestingly, NVIDIA, once nearly sunk by Microsoft's Direct3D standard, later gave Microsoft a hard time of its own.

The spread of NVIDIA's GPUs accelerated the adoption of the DirectX standard and earned the startup Microsoft's praise. NVIDIA was not only invited to help develop the DirectX standard but also won the largest order in its history: the graphics chip for the original Xbox.

To let NVIDIA focus fully on developing the Xbox GPU, Microsoft paid a 200 million US dollar deposit up front, on a contract worth up to 500 million US dollars. Microsoft later also entrusted NVIDIA with developing the Xbox's Media and Communications Processor (MCP). The relationship between the two sides was in its "honeymoon period."

On November 15, 2001, the Xbox debuted in the United States at 299 US dollars. At the time, NVIDIA's GeForce 3 started at 329 US dollars, and the Xbox's X-Chip was essentially an improved GeForce 3. Analysts estimated that Microsoft lost 125 US dollars on every Xbox sold, so NVIDIA was unavoidable in any effort to cut the cost of Xbox components.

When Microsoft asked NVIDIA for a price cut, Huang refused outright. The conflict quickly escalated and ultimately went to arbitration. After news of the "breakup" spread, NVIDIA's market capitalization shrank from 11 billion US dollars at the start of 2002 to 1 billion US dollars, a steep price to pay.

Under intense pressure from Microsoft, Huang conceded and reached a settlement in February 2003, but NVIDIA never won back Microsoft's favor. The aggrieved Microsoft extended an olive branch to NVIDIA's rival ATI, making it the GPU supplier for the next-generation Xbox 360.

With Microsoft behind ATI, the two companies were locked in battle for years, competing not only on products but also in a tug-of-war over market share. In the third quarter of 2004, for example, ATI held 59% of the discrete graphics card market, while NVIDIA held only 37%.

It was not until July 2006, when AMD acquired ATI for 5.4 billion US dollars, that the balance began to tilt toward NVIDIA. AMD had all but exhausted its cash and taken on 2.5 billion US dollars in debt to buy ATI. Weighed down by that debt, the merger failed to deliver the synergy where 1+1 is greater than 2, and NVIDIA was handed an unexpected windfall.

Until then, NVIDIA had been all but locked into the GPU business, unable to expand into adjacent areas. Afterward, it accelerated its push into new territory, with CUDA as its signature move.

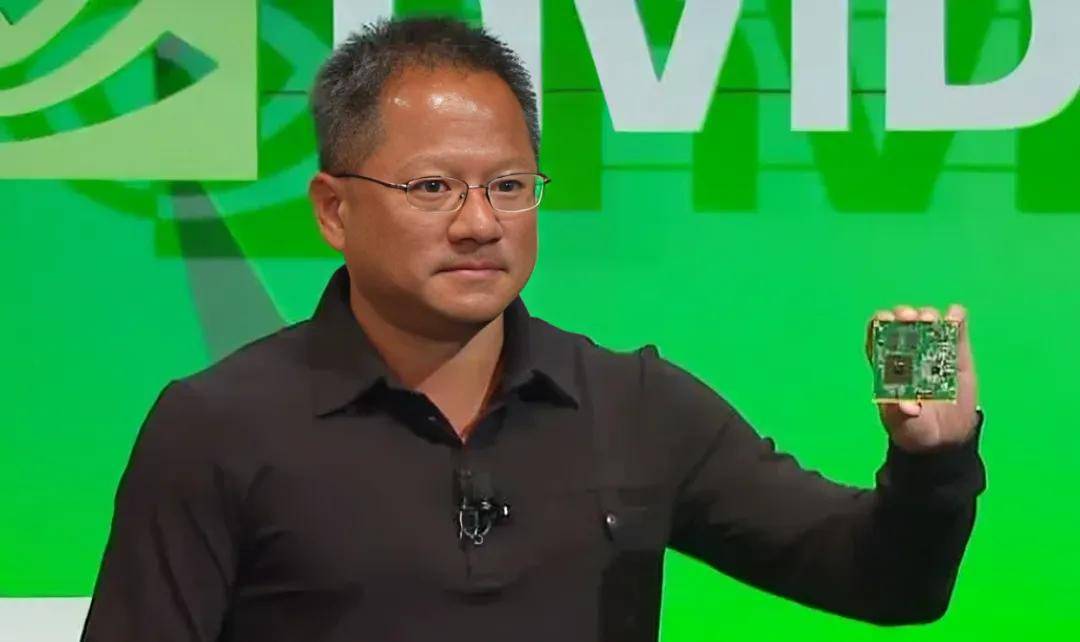

In 2007, NVIDIA held its first CUDA technology conference. CUDA (Compute Unified Device Architecture) is a parallel computing platform and programming model that lets developers write GPU-accelerated applications in languages such as C, C++, and Fortran.

Without CUDA, the GPU was merely a "graphics processing unit" responsible for drawing images on the screen. With CUDA, the GPU could tackle complex computational problems, and customers could program it for many different tasks.

The main use cases for GPUs were no longer limited to gaming.

The rest of the story is familiar. Deep learning, which took off in 2012, and ChatGPT, which exploded in 2022, ultimately elevated CUDA to deity status. GPUs are now widely used for image recognition, face recognition, speech recognition, and other tasks, and their value has come to exceed even that of CPUs.

In 2008, however, things looked very different. NVIDIA was investing roughly 500 million US dollars a year in CUDA R&D, about one-sixth of its total revenue, with almost no commercial return in sight. Its financial statements show that NVIDIA's net profit and gross profit fell significantly after 2008, and the company even posted losses at times.

During this period, Huang repeatedly made the case for CUDA's value, but Wall Street analysts did not buy it, and NVIDIA's market capitalization hovered around 10 billion US dollars for a long time.

03 A Failed Push into Mobile, and Deep Learning "Acclaimed but Unprofitable"

Now that NVIDIA is worth 3 trillion US dollars, every word from Huang is hailed as "far-sighted," and every move can become the stuff of legend. But when NVIDIA was worth 10 billion US dollars, Huang had to find ways to lift the stock price and win back analysts' confidence.

So with CUDA failing to win over the market, NVIDIA had to chase new trends.

The PC market began to decline after 2008, and NVIDIA, whose core business was GPUs, was well aware of it. That same year, NVIDIA launched the Tegra series of chips for the mobile market, based on the ARM architecture with built-in GeForce-based graphics. The logic was simple: as long as there was demand for mobile games, Tegra would have a place in the mobile market.

In terms of timing, NVIDIA's entry was not late.

Qualcomm released the Snapdragon S1 in 2007, but it only entered production in the fourth quarter of 2008; Apple launched its first in-house chip, the A4, in 2010; MediaTek entered the smartphone market in 2011; Samsung's first Exynos chip was also released in 2011. On top of that, NVIDIA already had more than a decade of chip development experience and a close relationship with TSMC.

However, the results were far from what was expected.

Lacking experience in mobile, NVIDIA partnered with Microsoft for its first Tegra chip, which went into the Zune HD, a product that never became a household name like the iPhone. By the Tegra 2 generation, the chips supported Android and won backing from brands such as Motorola and LG.

The Tegra 3 series gained some recognition, appearing in flagship products such as the HTC One X and Nexus 7, but problems also emerged. NVIDIA's in-house mobile GPU delivered powerful graphics performance at the cost of high power consumption and heat, which dragged down the reputation of the products that used it.

This led to a scene familiar to Chinese netizens: at the Xiaomi 3 launch event in 2013, Huang appeared as a special guest, having "stayed up late" learning Chinese, and shouted "Xiaomi is mighty." Xiaomi was just starting out at the time, but it was one of the few end customers for Tegra, using Tegra 4 in the Xiaomi 3.

This time, fortune did not smile on NVIDIA. Tegra had bet on the wrong technical route and got no chance to start over. Under the combined assault of Qualcomm and Samsung, Tegra chips quickly fell out of favor, and the once-confident NVIDIA missed out on the trillion-dollar mobile market.

In 2012, as NVIDIA's mobile effort was faltering, Alex Krizhevsky, a researcher at the University of Toronto, used two GTX 580 graphics cards to train the neural network AlexNet. Training took only about a week, and AlexNet went on to win the ImageNet Challenge by a wide margin.

This unexpected success set off a wave of using GPUs to train deep learning models and opened a new AI world for NVIDIA: deep learning and the brute-force computing of GPUs were a natural match, kicking off a race to accumulate data and hardware.

Starting around GTC 2014, Huang officially made AI NVIDIA's most critical business, proclaiming almost every year that Moore's Law was dying while touting the explosive rise of GPU-accelerated computing. In 2016, NVIDIA launched Pascal-architecture GPUs designed for AI, and Huang personally delivered the world's first DGX-1 supercomputer to a startup called OpenAI.

NVIDIA had high hopes for the future of artificial intelligence, but the market was not interested.

Before 2016, NVIDIA's market capitalization held steady at around 15 billion US dollars, and its revenue for fiscal 2016 was only 5.01 billion US dollars, less than one-tenth of Intel's. The so-called deep learning wave was a textbook case of "all thunder, no rain."

Throughout those long years, Microsoft and Google were the giants of the tech industry, while Intel and Qualcomm led the semiconductor industry. NVIDIA was merely the leader in the niche gaming market, a "small chip company" that rose and fell with gaming.

04 Accelerating the Transition to "Data Centers," but the Market "Didn't Get It"

NVIDIA's story began in 1993, but it only truly entered the public spotlight in 2018, after a wait of 25 years.

As Huang later put it, 2018 was "almost a perfect year, ending in a tumultuous way." In October of that year, NVIDIA's market capitalization hit its highest level since listing, 218.9 billion US dollars. Shortly afterward, however, it was cut in half.

Looking back at that period, many people focus on NVIDIA's turning point in 2017: at GTC 2017, Huang unveiled the AI-oriented Tesla V100 GPU, calling it "the world's most expensive computing power project," with an R&D budget of up to 3 billion US dollars. The launch also marked the data center business being defined as NVIDIA's "second growth curve," and its market capitalization surged to 71.4 billion US dollars that day.

Time once again proved NVIDIA right.