Lei Jun scolded netizens for seven days straight during the National Day holiday! Is AI voice heading in the wrong direction?

10/09 2024

With just 3 seconds of audio, your voice can be convincingly cloned.

Who would have thought that Lei Jun, one of the tech industry's top figures, would set off heated online debate over AI-generated copies of his own voice?

During the National Day holiday, Lei Jun AI voice packs took over Xiao Lei's Douyin feed. The "Lei Jun" in these videos spoke in his familiar accent with a hint of impatience, sarcastically remarking, "If this game is made like this, it's definitely here to cause trouble!" Because the wording and tone so closely matched Lei Jun's own, commenters often asked, "Did Lei Jun really say this?"

(Image source: Douyin)

The voice packs spread quickly on short video platforms because they captured Lei Jun's speech patterns and accent so uncannily. But as the trend swept the internet, it prompted discussion that went well beyond entertainment. AI voice technology is developing rapidly and reshaping daily life, yet its misuse exposes real risks: if Lei Jun's voice can be replicated and parodied this easily, are everyone else's voices at risk too?

Parodying Lei Jun: Is AI voice heading in the wrong direction?

In fact, before Lei Jun's AI voice gained traction, several celebrities had already fallen victim to similar situations. Late last year, an AI voice video of a well-known American singer frequently went viral. In the videos, she spoke fluent Chinese and even made politically charged statements, sparking significant controversy. The singer's team promptly issued a clarification, but many netizens had already been misled.

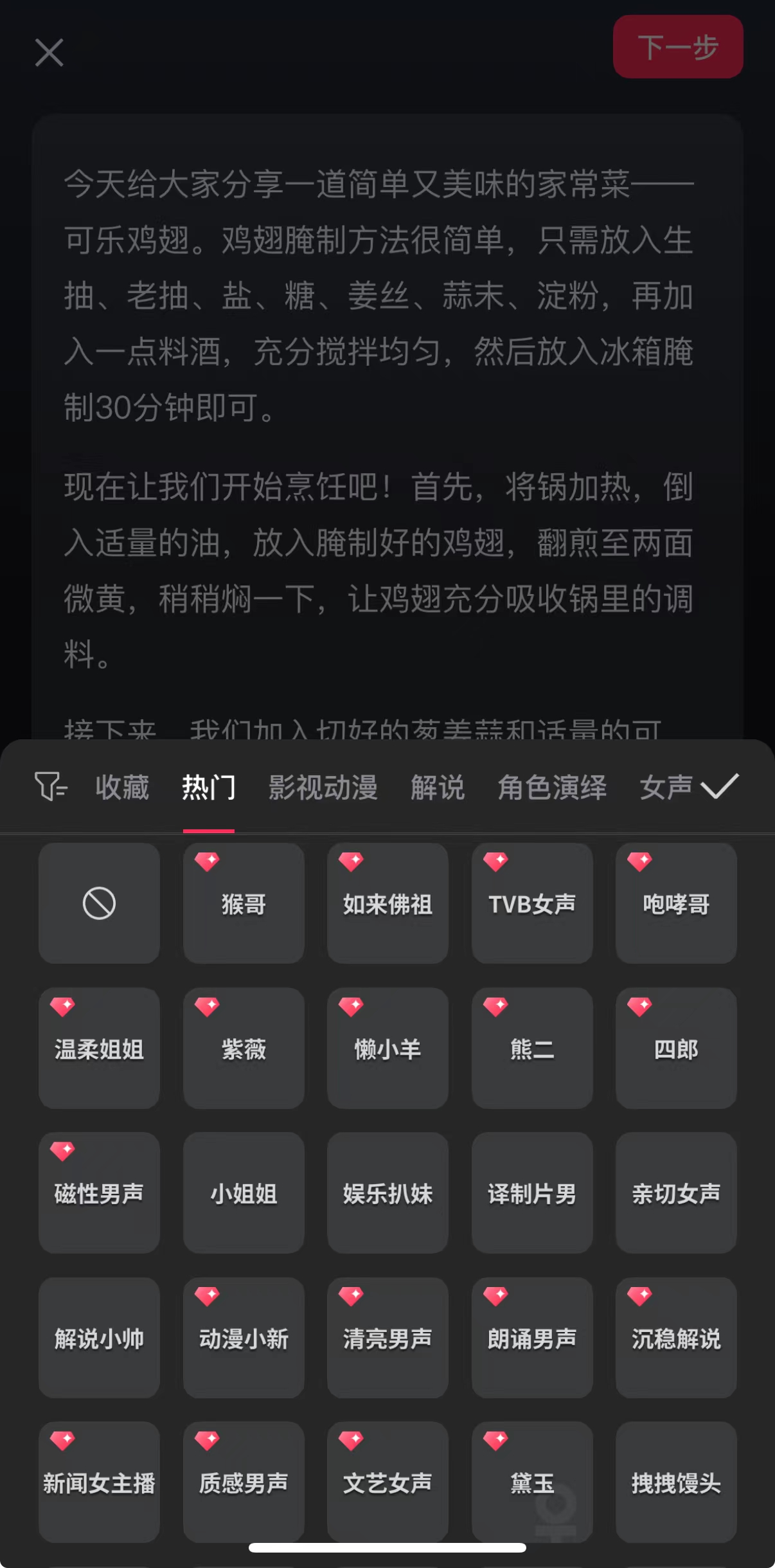

As one of the most widely adopted AI technologies, AI voice is already part of everyday life. Douyin, for example, introduced an AI dubbing feature early on that lets creators enter text and generate voiceovers in a range of accents and personas.

(Image source: CapCut)

AI voice technology mainly spans five areas: speech synthesis, voice cloning, speech recognition, deepfake audio, and natural language processing. Creators who forge celebrity voices usually combine several of them; Lei Jun's AI voice pack, for instance, draws on speech synthesis, voice cloning, deepfake audio, and natural language processing. Together, these technologies produce imitations of Lei Jun's voice and tone realistic enough that listeners can barely tell them from the real thing.

Of course, if AI voice synthesis stopped at parody, security concerns might be less pressing. According to the Federal Trade Commission (FTC), losses from imposter scams totaled $260 million in 2022, with many cases involving AI voice cloning technology. With just a few seconds of audio, scammers can mimic the voices of victims' friends and family and stage "emergency assistance" scams. These scams are common in the US, UK, and India, and they target the elderly and young alike.

AI voice technology has spread worldwide, and been exploited by criminals, so quickly because the technology itself has advanced explosively. Companies like ElevenLabs, which specialize in AI voice research, have developed techniques using convolutional neural networks (CNNs) and recurrent neural networks (RNNs) to recognize and mimic an individual's unique vocal patterns, enabling personalized content such as customized virtual assistant voices.

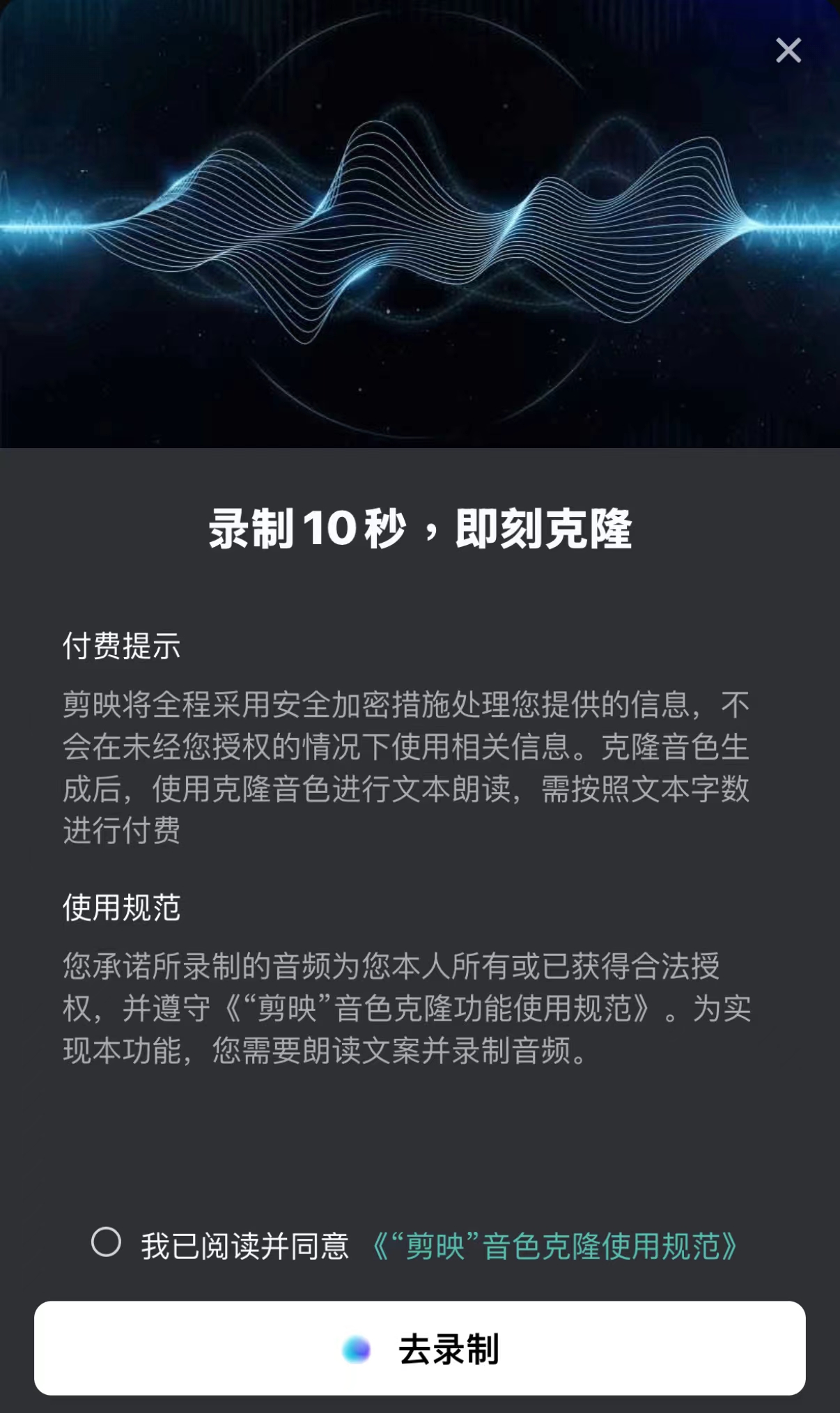

(Image source: CapCut)

Crucially, ElevenLabs pioneered high-fidelity voice cloning, putting ultra-realistic AI voice audio within reach of most creators.

Despite the security concerns it raises, AI voice is a foundational building block for the era of Artificial General Intelligence (AGI), because it enables natural spoken interaction. Voice features are now standard in mainstream AI tools, and OpenAI, Google, and Apple all treat voice as a crucial interaction mode.

Preventing AI Voice Abuse: The Key to Safety

Most AI voice forgeries encountered by ordinary users involve parodying celebrity voices for video dubbing, such as Lei Jun's. These parodies often contain vulgar language and inappropriate remarks, damaging celebrities' reputations and fueling online harassment. The spread of such content on social media misleads public opinion and undermines trust in social media platforms.

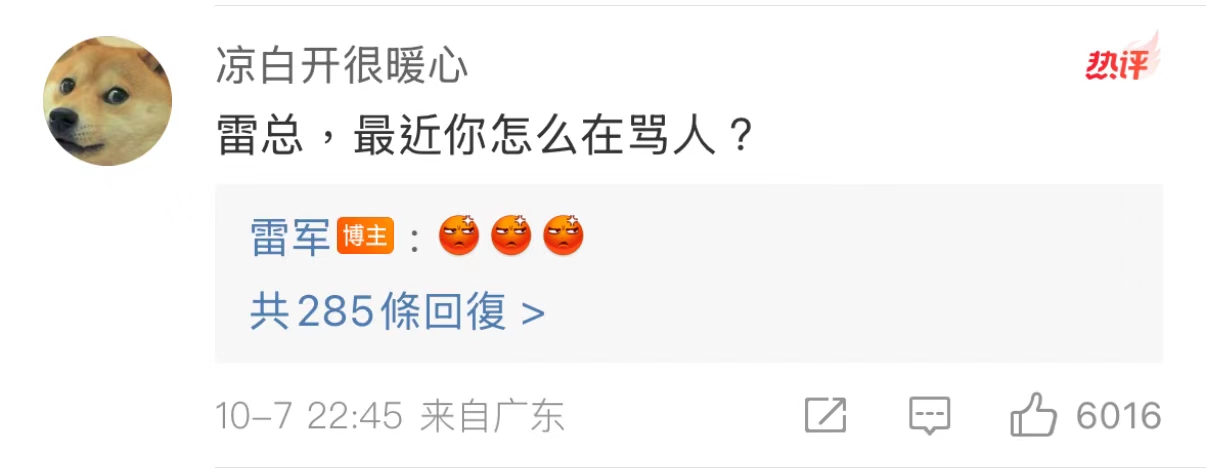

Lei Jun responded to the recent AI voice trend by posting three emoji on his personal social media account, without indicating any legal action. Part of the reason may be that most of the AI voice content featuring him appears on short video platforms like Douyin, which uses AI detection tools to flag risky video content, limiting potential disputes and misunderstandings.

(Image source: Weibo)

Criminals, however, often turn AI voice cloning to financial scams, and because cloned voices are so hard to tell from real ones, the risks are significant.

In response to the risks of AI voice abuse, various measures have been put in place to strengthen security. Banks and financial institutions have prioritized multi-factor authentication; one UK online bank significantly reduced voice scam losses through stronger biometrics and SMS verification. Beyond multi-factor authentication, banks can also use AI to monitor for signs of voice fraud and protect customers' funds.
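To make the layered approach concrete, here is a minimal Python sketch of a step-up rule a bank might apply to a request made over the phone. Everything in it is an assumption for illustration: the `VoiceRequest` fields, the two scores (imagined as coming from a voice-biometric engine and a synthetic-speech detector), and the thresholds are hypothetical, not any bank's actual policy. The design point is that a strong voice match never authorizes a risky action by itself.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    STEP_UP = "step_up"  # require another factor, e.g. an SMS one-time code
    BLOCK = "block"


@dataclass
class VoiceRequest:
    amount: float           # value of the transfer requested over the phone
    voice_match: float      # 0..1 score from a voice-biometric engine (hypothetical)
    synthetic_score: float  # 0..1 "this audio is AI-generated" score (hypothetical detector)
    new_payee: bool         # first payment to this recipient?


# Illustrative thresholds only; a real bank would tune these on its own data.
HIGH_VALUE_AMOUNT = 1000.0
SYNTHETIC_BLOCK = 0.9
SYNTHETIC_REVIEW = 0.5
VOICE_MATCH_MIN = 0.7


def decide(req: VoiceRequest) -> Decision:
    """Layered check: the voice match is one signal, never the whole decision."""
    if req.synthetic_score >= SYNTHETIC_BLOCK:
        return Decision.BLOCK  # detector is confident the voice is synthetic
    risky = (
        req.voice_match < VOICE_MATCH_MIN
        or req.synthetic_score >= SYNTHETIC_REVIEW
        or req.amount >= HIGH_VALUE_AMOUNT
        or req.new_payee
    )
    return Decision.STEP_UP if risky else Decision.ALLOW


# A near-perfect voice match still triggers a second factor for a large transfer.
print(decide(VoiceRequest(amount=5000, voice_match=0.99, synthetic_score=0.1, new_payee=False)))
# Decision.STEP_UP
```

Blocking outright is reserved for audio the detector is confident is synthetic; everything else that looks unusual falls back to a second factor, so a genuine customer with a cold is inconvenienced rather than locked out.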

AI technology providers also recognize their responsibility. Companies like ElevenLabs, upon discovering technology misuse, promptly introduced detection tools and restricted access for unpaid users. This not only helps identify fake audio but also reduces malicious exploitation. AI companies should further develop detection technologies and collaborate with governments and industry organizations to ensure the legitimate use of AI voice technology.

(Image source: ElevenLabs)

Apart from regulatory measures and AI technology providers' efforts, ordinary users must also be vigilant against AI voice scams.

First, security experts recommend agreeing on a "safe word" known only to family members. According to McAfee, AI can produce a voice clone with an 85% match to the original from just three seconds of audio, so a familiar-sounding voice alone proves little. A safe word adds a simple authentication step that a cloned voice cannot supply: it makes impersonation much harder and allows quick identity checks in an emergency.
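The logic behind the safe word can be sketched in Python. Voice-verification systems commonly compare voices as vectors (speaker embeddings) using cosine similarity; McAfee does not say its 85% figure was measured this way, and the three-number vectors, the 0.8 threshold, and the helper names below are hypothetical. The sketch shows that a clone can clear a "sounds similar enough" check and still fail, because it cannot supply a secret it never heard.

```python
import math
from typing import Sequence

# Hypothetical threshold: a verifier that accepts any voice "similar enough".
VOICE_MATCH_THRESHOLD = 0.8


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("vectors must be non-zero")
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (norm_a * norm_b)


def sounds_like(enrolled: Sequence[float], caller: Sequence[float]) -> bool:
    """Similarity check only: a good clone can pass this."""
    return cosine_similarity(enrolled, caller) >= VOICE_MATCH_THRESHOLD


def knows_safe_word(expected: str, spoken: str) -> bool:
    """Compare the agreed family safe word, ignoring case and surrounding spaces."""
    return expected.strip().casefold() == spoken.strip().casefold()


def trust_caller(enrolled, caller, expected_word: str, spoken_word: str) -> bool:
    """Trust the caller only if the voice matches AND the safe word is correct."""
    return sounds_like(enrolled, caller) and knows_safe_word(expected_word, spoken_word)


# Toy embeddings standing in for a real speaker-encoder output (hypothetical values).
enrolled_voice = [0.9, 0.1, 0.4]
cloned_voice = [0.85, 0.15, 0.45]

print(sounds_like(enrolled_voice, cloned_voice))  # True: the clone "sounds like" the relative
print(trust_caller(enrolled_voice, cloned_voice, "blue heron", "uh, what word?"))  # False
```

Here the toy clone scores about 0.996 against the enrolled voice, well above the threshold, yet `trust_caller` still rejects the call because the safe word does not match.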

Regarding privacy, users should be cautious about sharing content on social media. AI scammers often use public audio and video materials to create fake voices. Thus, controlling privacy settings and limiting audio/video uploads reduces the risk of being targeted. A low-profile online presence can deter criminals.

(Image source: ElevenLabs)

For example, avoid using your original voice on short video platforms or use AI tools to process your voice before sharing.

For financial security, enable multi-factor authentication, which requires two or more verification methods. Besides voice verification, banks may request one-time passwords (OTPs) or biometrics like fingerprints to confirm identity, mitigating AI voice cloning risks.
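How a given bank generates its one-time passwords varies. A widely used standard, and the one behind most authenticator apps, is the time-based scheme in RFC 6238 (TOTP), which builds on RFC 4226 (HOTP). The Python sketch below implements that standard scheme with the standard library only, to show why a cloned voice does not help an attacker here: each code is derived from a secret shared between the bank and the user's device and changes every 30 seconds. The function names and the one-step drift window in `verify` are choices made for this example, not any specific bank's implementation.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) with HMAC-SHA1."""
    msg = struct.pack(">Q", counter)                       # 8-byte big-endian counter
    digest = hmac.new(secret, msg, hashlib.sha1).digest()  # 20-byte MAC
    offset = digest[-1] & 0x0F                             # dynamic truncation offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP keyed on the current time window."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify(secret: bytes, submitted: str, at: Optional[float] = None,
           step: int = 30, window: int = 1, digits: int = 6) -> bool:
    """Accept the code for the current window or +/- `window` neighbours (clock drift)."""
    now = time.time() if at is None else at
    for drift in range(-window, window + 1):
        t = now + drift * step
        # Constant-time comparison avoids leaking how many digits matched.
        if t >= 0 and hmac.compare_digest(totp(secret, t, step, digits).encode(),
                                          submitted.encode()):
            return True
    return False


secret = b"12345678901234567890"  # the RFC 4226 / RFC 6238 test secret
print(totp(secret, at=59))  # 287082
```

Because 59 seconds falls in the second 30-second window, `totp(secret, at=59)` equals `hotp(secret, 1)`, the RFC 4226 test value `"287082"`. A scammer who can imitate the account holder's voice still has no way to compute this code without the shared secret.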

Finally, it helps to train your ear for AI voices. Although AI can mimic emotion and tone, subtle distortions or inconsistencies often remain. Listening closely to cloned voices such as the Lei Jun packs can reveal inconsistencies from one word to the next, which helps in judging whether a recording is authentic.

Final Thoughts

The rapid development of AI voice technology brings convenience but also exposes new social risks. Lei Jun's AI voice packs remind us that while entertaining, this technology can be a potent tool for scammers. The misuse of AI voice underscores the double-edged sword of technological advancement.

Preventing AI voice scams is a shared responsibility among individuals, businesses, technology providers, and society. Collaboration is crucial to create a safer and more trustworthy technological environment. Technology should serve society, not undermine trust. Balancing regulation, innovation, and public awareness is vital to realizing technology's true value.

Source: Leitech