Nobel Prize enters the 'AI Era': where should humanity go?

10/11 2024

"Assuming frogs created humans, who do you think would have the upper hand now, humans or frogs?"

This was a question posed by "AI godfather" Geoffrey Hinton at the 2023 Beijing Academy of Artificial Intelligence (BAAI) Conference. A former vice president at Google, he resigned after more than a decade at the company so that he could speak freely about the "dangers of AI."

However, a year later, AI has not brought about the doomsday scenario of "destroying humanity" that he warned of. Instead, it has brought him the honor he dreamed of: the Nobel Prize in Physics. Moreover, it's not just physics; the Nobel Prize in Chemistry was also awarded to three scientists for computational protein design and AI-based protein structure prediction, shocking the academic community.

Awards representing humanity's highest achievements and latest breakthroughs in physics and chemistry are now going to AI research. This is not only recognition for the scientists involved but also an affirmation of the trend of "AI-assisted scientific research."

Meanwhile, on October 9, NVIDIA launched a three-day "AI Summit" in Washington. Unlike previous events, this conference focused more on AI's successes in application rather than new products. According to NVIDIA's Vice President of Enterprise Platforms, Bob Pette, "The world is on the cusp of AI adoption."

What insights can we gain from the Nobel Prizes' encouragement of AI applications and NVIDIA's focus on them?

With consecutive shocks to the physics and chemistry communities, where is the AI story now?

According to the Royal Swedish Academy of Sciences, Geoffrey Hinton received the Nobel Prize in Physics, shared with John Hopfield, for foundational contributions to machine learning with artificial neural networks. His machine learning techniques are widely used in data analysis and model building in physics.

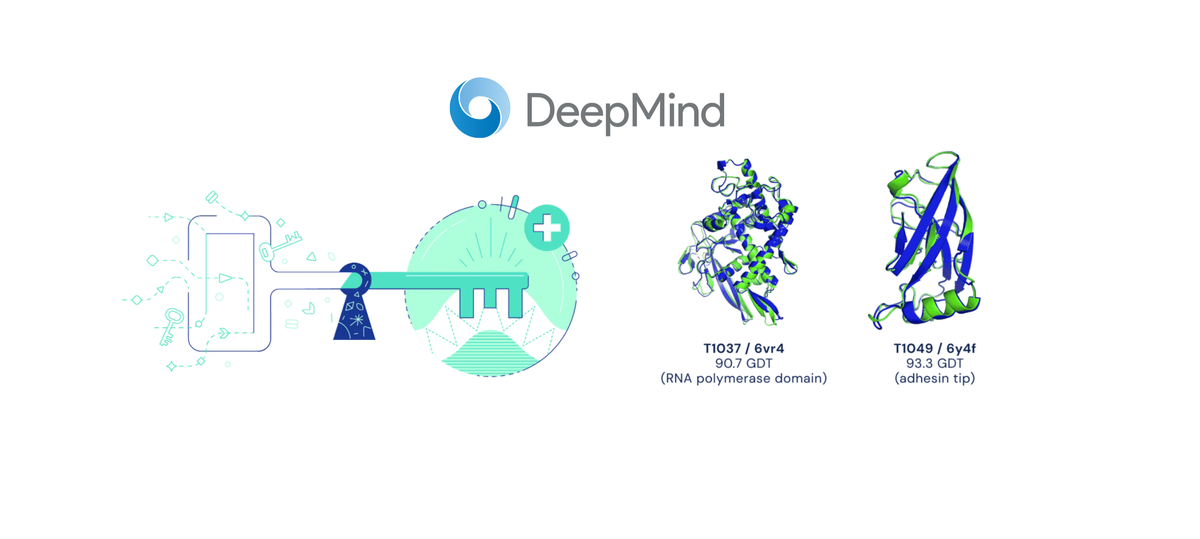

Meanwhile, half of the Nobel Prize in Chemistry was awarded jointly to Google DeepMind scientists Demis Hassabis and John Jumper for developing "AlphaFold2," a model for protein structure prediction. The other half went to David Baker for computational protein design.

It is clear that in these two cases, AI did not win the awards alone. Rather, it won by being applied to specific research fields through interdisciplinary integration.

Similarly, at NVIDIA's AI Summit, Bob Pette emphasized AI's applications in real-world scenarios: "From smart assistants to robotic factories, weather forecasting, cancer treatment, and extraterrestrial exploration, there are over 4,000 AI applications in NVIDIA's CUDA library, helping various industries achieve breakthroughs. AI is expected to generate up to $20 trillion in impact across all industries that leverage this technology."

For example, the National Cancer Institute is using NVIDIA's AI services for medical image analysis and information extraction from large databases, helping pharmaceutical companies and researchers screen for new drug molecules, significantly reducing the time required for drug development.

In fact, NVIDIA is not alone in this pursuit. Meta has launched its first AR glasses to explore AI hardware and recently introduced Meta AI chat software, integrating hardware and software for AI applications. Elon Musk has positioned Full Self-Driving (FSD) as a core selling point for Tesla, claiming that its Robotaxi will revolutionize global transportation, "making history."

It is evident that the focus of AI development has shifted from early-stage computing power and model layers to the final application layer, and AI technological progress is transitioning from "technology-driven" to "application-driven."

Why has this shift occurred?

Looking back at the development of generative AI, the entire ecosystem around it has formed almost overnight: improvements in computing chips and servers, algorithm and model optimizations, and a surge in consumer applications have all arrived within just a few years. During the industrial and internet revolutions, this process took decades or even centuries. So why has it accelerated so rapidly now?

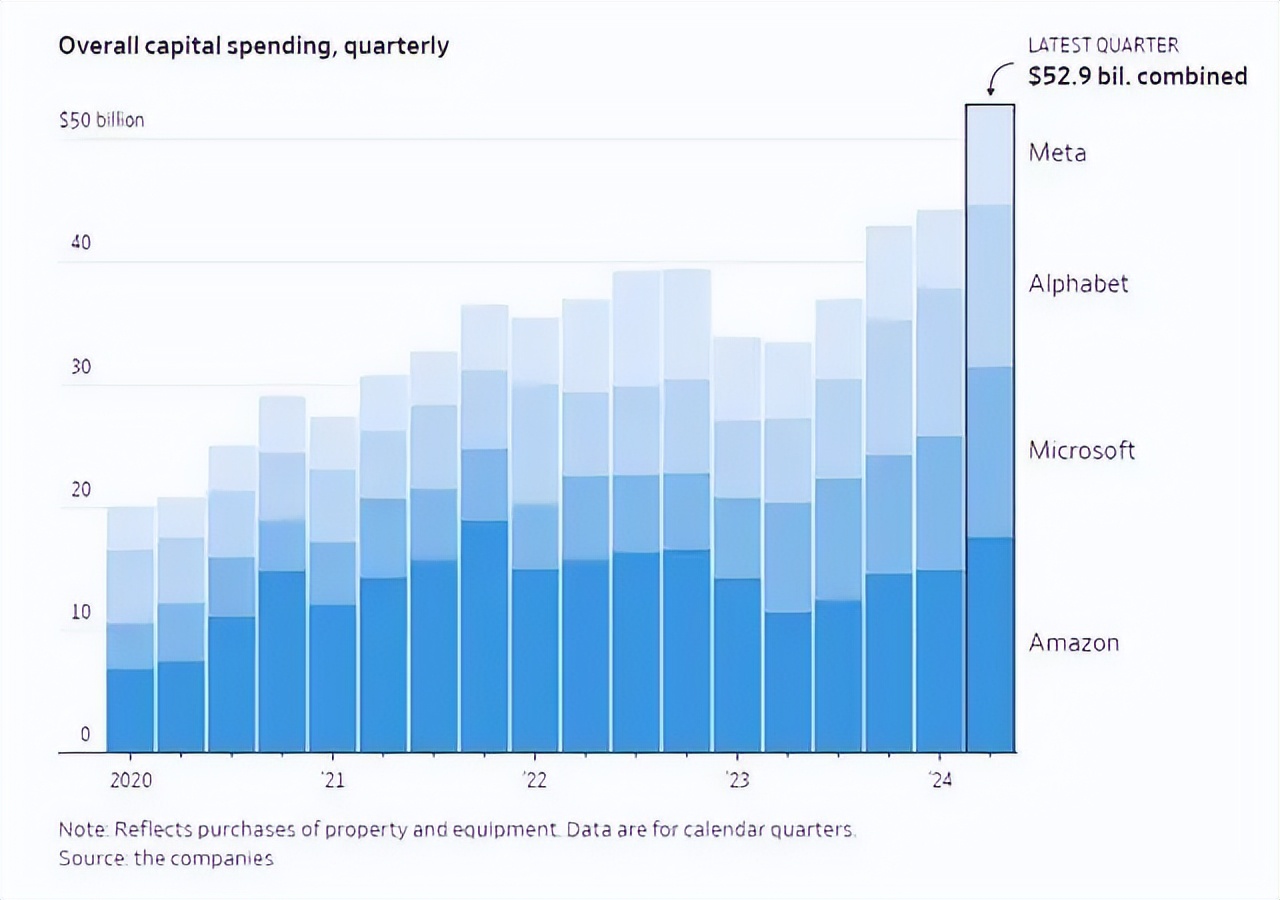

Capital investment is undoubtedly a significant driving force. In recent years, tech giants and investment institutions in China, the US, and Europe have been vying to invest in AI.

Taking Amazon, Microsoft, Alphabet, and Meta's quarterly capital expenditures as examples, the four giants collectively spent over $50 billion on AI investments in the second quarter of this year. Meta CEO Mark Zuckerberg announced that the company would purchase 600,000 GPUs by the end of 2024. Elon Musk also plans to acquire 300,000 GPUs by next summer.

Meanwhile, Chinese internet giants like Alibaba and Tencent are not to be outdone, acquiring several domestic AI startups.

Image source: ZhiDongXi

The influx of hot money has driven up the valuations of AI startups. Based on its most recent funding round, OpenAI's post-money valuation has reached $157 billion, second only to ByteDance and Elon Musk's SpaceX.

While such investments may seem to come without strings in the early stages, they are ultimately made to drive higher revenue and profit growth, and investors expect a return. Currently, apart from upstream players like NVIDIA and TSMC, companies building large AI models are incurring heavy losses. According to OpenAI's projections, the company's annual losses, currently in the billions of dollars, will continue to widen, with expected losses of $14 billion in 2026 and profitability not expected until 2029.

From this perspective, both computing chip companies (like NVIDIA) and tech giants (like Meta and Tesla) need to demonstrate AI's commercial viability and prove its worth to attract more people and capital to sustain the AI narrative. This drives the shift in development focus.

How will the AI industrial revolution evolve?

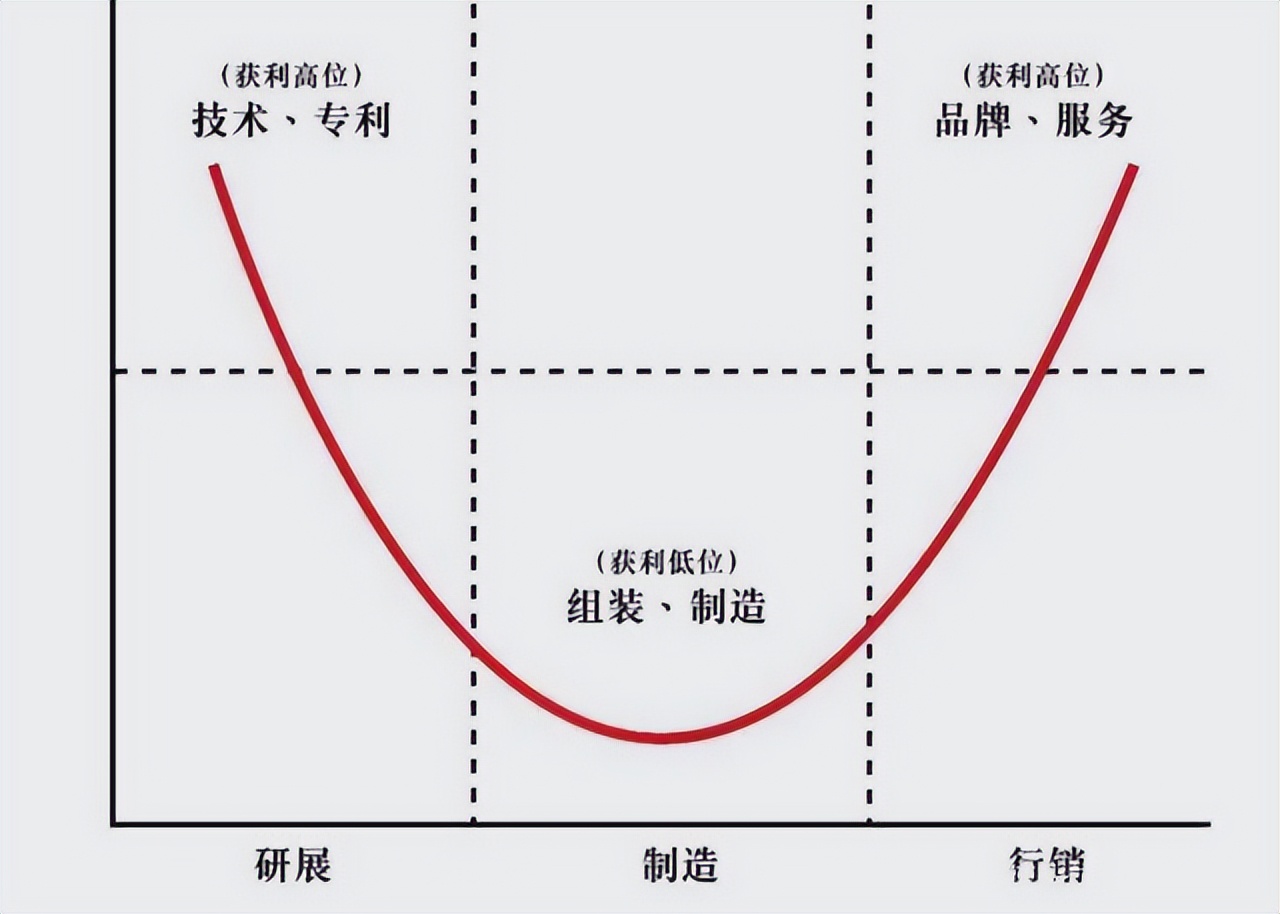

Acer founder Stan Shih once proposed the "Smiling Curve" theory, which aptly explains the current profitability challenges faced by most AI companies.

In his view, high-value-added segments like R&D and marketing lie at the ends of the curve, often yielding higher profits, while the intermediate production and manufacturing stages generate lower profits.

Analogously, the AI industry chain also comprises three main segments: GPU manufacturing/cloud computing, large model development, and AI applications.

Bottom-layer service providers earn substantial profits by selling computing chip hardware and providing cloud server services. AI application companies, positioned closest to the market and monetization, integrate AI into various scenarios like autonomous driving, medical diagnosis, and smart homes, leveraging AI to enhance product capabilities and command premium prices.

In contrast, AI companies focused solely on large models face constraints from upstream infrastructure, competition from AI application vendors, high R&D costs, high technical barriers, and intense iterative competition, leading to low profits and slow monetization. Taking autonomous driving as an example, NVIDIA dominates the industry chain with its high-performance GPUs, while Tesla and Waymo create significant commercial value through autonomous driving applications. However, the underlying large models, which have contributed significantly, generate meager profits and face immense pressure from high costs and fierce competition.

In the past, GPU manufacturers (like NVIDIA and AMD), large model vendors (like OpenAI), and application providers (like Apple, Microsoft, and Tesla) operated and competed independently within their respective industries. Now, they are attempting to "band together" and vertically integrate the industry chain: NVIDIA, an upstream player, has invested in midstream large model company OpenAI, while downstream players like Microsoft and Apple have forged deep ties with OpenAI. Chinese companies such as Huawei, Alibaba, and Tencent, along with automakers NIO, XPeng, and Li Auto, are also gradually integrating the AI industry chain vertically, from chip manufacturing to large model training and practical applications.

In fact, Apple in the mobile internet era and BYD in the new energy vehicle era both significantly enhanced their industry competitiveness through such integration strategies. This successful experience may inspire AI-era companies: "Whoever can first unify chips, computing power, data, models, and applications will be the first to reap the 'low-hanging fruit' in this technological revolution."

Source: US Stock Research