After the "price war," what else will large models compete on?

10/12 2024

Source | Bohu Finance (bohuFN)

At the recently held 2024 Yunqi Conference, Alibaba once again became the focus of attention.

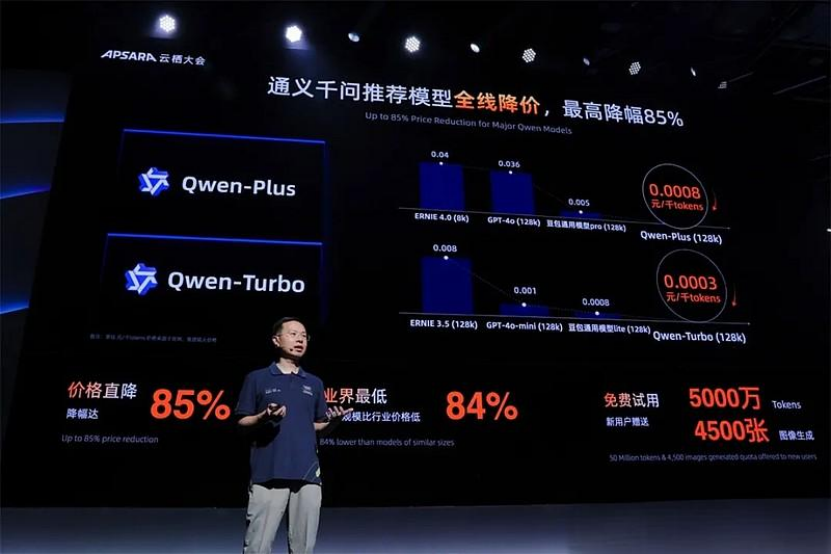

In May of this year, Alibaba Cloud announced significant price reductions for multiple commercial and open-source models under its Tongyi Qianwen series, with the highest reduction reaching 97%. At the Yunqi Conference, prices for three flagship models of Tongyi Qianwen were further reduced, with the highest reduction reaching 85%.

Following Alibaba's lead in May, ByteDance's cloud service Volcano Engine, Baidu Intelligent Cloud, Tencent Cloud, iFLYTEK, and others have officially announced significant price reductions for their large models, with industry-wide price reductions reaching around 90%.

Domestic large model vendors are not the only ones in the price war: OpenAI, the industry benchmark, launched GPT-4o mini in July at a commercial price more than 60% lower than that of GPT-3.5 Turbo.

Now that Alibaba has reignited the "price war," large model prices will foreseeably keep falling and may even enter "negative gross margin" territory. In the history of the Internet industry, "losing money to gain scale" is far from an isolated case, and transforming the business model of an entire industry inevitably comes at a high price.

However, balancing price, quality, and service has become a crucial consideration for large model enterprises in this process. If companies want to "survive," they cannot solely rely on "low-hanging fruit."

01 Scale is more important than profit

Domestic large model pricing has moved from the era of the "fen" (a hundredth of a yuan) to the era of the "li" (a thousandth of a yuan). In May of this year, the API call output price for Alibaba's Tongyi Qianwen large model dropped from 0.02 yuan per thousand tokens to 0.0005 yuan per thousand tokens.
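The size of a cut is simply (old price - new price) / old price. As a quick illustrative check, not a method published by any vendor, the hypothetical helper below applies that formula to the May figures: going from 0.02 to 0.0005 yuan per thousand tokens is a 97.5% reduction, in line with the "up to 97%" headline figure.

```python
def percent_reduction(old_price: float, new_price: float) -> float:
    """Return a price cut as a percentage of the old price."""
    if old_price <= 0:
        raise ValueError("old_price must be positive")
    return (old_price - new_price) / old_price * 100


# Tongyi Qianwen API price quoted above, in yuan per thousand tokens
cut = percent_reduction(0.02, 0.0005)
print(f"{cut:.1f}%")
```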

After another price reduction in September, Alibaba Cloud's Qwen-Turbo (128k), Qwen-Plus (128k), and Qwen-Max models set new lows for the minimum call price per thousand tokens, dropping to 0.0003 yuan, 0.0008 yuan, and 0.02 yuan, respectively.

Regarding the cuts, Alibaba Cloud CTO Zhou Jingren said that each price reduction is a very serious process that weighs many factors, including overall industry development and feedback from developers and enterprise users. The cuts are not about waging a "price war"; rather, large model prices are still too high.

As an industry matures, falling prices are inevitable. Moore's Law in the semiconductor industry, for example, holds that processor performance roughly doubles every two years at constant cost, which amounts to halving the cost of a given level of performance.

Today, however, large model prices are falling far faster than Moore's Law would suggest, with cuts approaching 100%. Against this backdrop, can large model enterprises still turn a profit? For the large model industry, scale may for now matter more than profit.
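To see how far this outpaces Moore's Law, assume, as the simplified version above does, that the cost of a given level of performance halves every two years. The short sketch below (function names are illustrative) computes how much cheaper things get at that pace and how long a 97.5% cut would take.

```python
import math

HALVING_PERIOD_YEARS = 2.0  # assumption: cost of a given performance level halves every two years


def remaining_cost_fraction(years: float) -> float:
    """Fraction of the original cost left after `years` of steady halving."""
    return 0.5 ** (years / HALVING_PERIOD_YEARS)


def years_to_reach(fraction: float) -> float:
    """Years of steady halving needed to bring cost down to `fraction` of the original."""
    return HALVING_PERIOD_YEARS * math.log2(1 / fraction)


print(f"after 1 year:  {1 - remaining_cost_fraction(1):.1%} cheaper")
print(f"after 2 years: {1 - remaining_cost_fraction(2):.1%} cheaper")
# A 97.5% cut leaves 2.5% of the original price
print(f"years needed for a 97.5% cut: {years_to_reach(0.025):.1f}")
```

Under that assumption, costs fall about 29% in a year and 50% in two, and a single cut of the size seen in May would take a little over ten years of steady halving.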

On the one hand, temporarily ceding profits has become a consensus in the large model industry. Industry insiders believe that the large model industry may have already entered the "era of negative gross margin."

According to a report by Caijing magazine, several leaders from Alibaba Cloud, Baidu Intelligent Cloud, and others revealed that before May of this year, gross margins for domestic large model inference computing power were over 60%, roughly in line with international peers. However, after successive price reductions in May, gross margins plummeted into negative territory.

After price reductions for large models, the number of users continues to increase. The more calls made in the short term, the greater the losses incurred by large models, as each model call consumes costly computing power. In other words, large model enterprises must not only reduce selling prices but also face higher cost inputs.
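Why heavier usage deepens losses follows from the definition of gross margin, (price - cost) / price. The sketch below uses made-up normalized numbers chosen to match the "over 60%" margin mentioned above, and assumes the compute cost per call stays fixed while the price falls 90%, roughly the industry-wide cut cited earlier. Under those assumptions the margin swings from +60% to -300%, and total losses grow in direct proportion to the number of calls.

```python
def gross_margin(price: float, unit_cost: float) -> float:
    """Gross margin as a fraction of revenue: (price - cost) / price."""
    return (price - unit_cost) / price


def gross_profit(price: float, unit_cost: float, calls: int) -> float:
    """Total gross profit across `calls` billable units (negative means a loss)."""
    return (price - unit_cost) * calls


# Hypothetical normalized figures: price 1.0 and compute cost 0.4 give a 60% margin
price_before, unit_cost = 1.0, 0.4
price_after = price_before * 0.1  # a 90% price cut; unit cost assumed unchanged

print(f"margin before: {gross_margin(price_before, unit_cost):.0%}")
print(f"margin after:  {gross_margin(price_after, unit_cost):.0%}")
for calls in (1_000, 10_000):
    print(f"{calls} calls: gross profit {gross_profit(price_after, unit_cost, calls):.0f}")
```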

On the other hand, the effects of price reductions for large models are significant. Taking Alibaba Cloud as an example, after price reductions for large models, the number of paying customers on Alibaba Cloud's Bailian platform increased by over 200% compared to the previous quarter. More enterprises have abandoned private deployments, opting to call various AI large models on Bailian, which now serves over 300,000 customers.

Over the past year, Baidu's Wenxin large model has also cut prices by more than 90%. At the same time, Baidu disclosed in its Q2 2024 earnings call that average daily calls to the Wenxin large model exceeded 600 million, up more than tenfold in six months.

Large model enterprises, it seems, are willing to give up profit through price cuts in pursuit of "expectations," trading short-term gains for long-term returns.

Industry insiders estimate that large model enterprises' current revenue from model calls does not exceed 1 billion yuan, a drop in the bucket compared with their total revenue, which runs into the tens of billions of yuan.

Over the next 1-2 years, however, the number of large model calls is expected to grow at least tenfold. In the short term, a larger user base means higher computing costs; in the long term, computing costs in cloud services are expected to fall gradually as customer demand grows, ushering in a period of "returns" for enterprises.

As the industry continues to develop, AI's pull on computing power will become increasingly evident. Alibaba CEO Wu Yongming has stated that more than 50% of new demand in the computing power market is driven by AI, and that large models are accelerating commercialization.

On the one hand, price cuts significantly lower the barriers to entry and the cost of trial and error for enterprise customers, particularly in government and traditional industries such as manufacturing and energy, where businesses are larger and there is more room for growth.

When large models become accessible to everyone like other infrastructure, their market potential can be expected to grow significantly. Before this happens, large model enterprises inevitably need to pass on benefits to enterprises and developers.

On the other hand, while price reductions for large models will decrease existing revenue, incremental revenue will increase. Taking Baidu as an example, large models not only generate direct revenue, such as through calls to products like the Wenxin large model, but also drive indirect revenue streams, such as Baidu's Intelligent Cloud business.

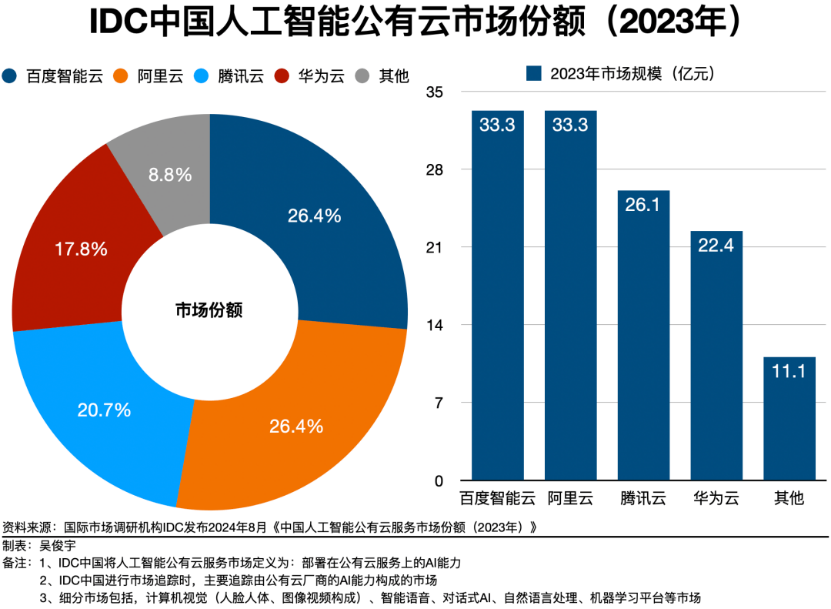

Over the past few years, Baidu Intelligent Cloud, which is not a leader in the public cloud market, has faced questions about its strategy. In the niche market of AI public cloud, however, Baidu has begun to pull ahead of competitors: the share of Baidu Intelligent Cloud revenue coming from large models rose from 4.8% in Q4 2023 to 9% in Q2 2024.

The current consensus in the large model industry, therefore, is that scale matters more than profit, a well-worn playbook from the Internet era, as seen in the "Thousand Group-Buying War," the "Ride-Hailing War," and the "E-commerce War." Large model enterprises cannot sit out the "price war"; they must survive it, hoping to emerge as the ultimate beneficiaries once the elimination rounds are over.

02 Alibaba focuses on "AI Infrastructure"

Alibaba is well aware of this. Alongside its further price cuts, it introduced the concept of "AI Infrastructure." Alibaba Cloud Vice President Zhang Qi said that AI today resembles the Internet around 1996, when expensive access fees held back the development of the mobile Internet; only by bringing fees down can there be talk of a future explosion of applications.

Therefore, besides announcing further price cuts at the 2024 Yunqi Conference, Alibaba Cloud also released a new generation of open-source large models: over 100 models spanning large language models of various sizes, multimodal models, math models, and code models, setting a record for the number of open-source large models released.

Alibaba Cloud CTO Zhou Jingren said that Alibaba Cloud is unwavering in advancing its open-source strategy, hoping to leave the choice to developers. Developers can make trade-offs and choices based on their business scenarios to enhance model capabilities and inference efficiency, while also more effectively serving enterprises.

According to Alibaba's statistics, as of mid-September 2024, Tongyi Qianwen's open-source models had been downloaded more than 40 million times and the Qwen series had spawned over 50,000 derivative models, making Qwen a world-class model family second only to Llama, which leads the open-source large model world with nearly 350 million downloads worldwide.

After the "Hundred Model Wars" ended, many industry leaders agreed that "competing on models is inferior to competing on applications," and large enterprises began to focus on "competing on ecosystems." Baidu Chairman Robin Li once stated, "Without a rich AI-native application ecosystem built on top of foundational models, large models are worthless."

Currently, over 190 large models have been registered with the State Internet Information Office, with over 600 million registered users. However, it remains challenging to address the "last mile" issue for large models. The difficulty lies not only in the scarcity of large model applications but also in their lack of "groundedness." For example, in specialized fields like healthcare and finance, relying solely on "feeding data" for training makes it difficult for large models to be directly applied.

Large enterprises cannot feasibly enter every niche industry to complete the "last mile." However, by creating a complete application ecosystem, downstream enterprises or other developers can independently "forge" model products that meet their needs. This not only optimizes resource allocation but also accumulates more high-quality data during the process, ultimately feeding back into the development of foundational large models.

Alibaba's decision to reduce prices and open source its models is essentially an attempt to lower the barriers to entry for large models, validate their application value through lower prices, and engage more enterprises and creators. Only when large models can truly meet the complex business scenario needs of enterprises can ecosystems develop, and the industry can enter a new phase.

However, the "Hundred Model Wars" may ultimately leave only 3-5 large model enterprises standing. A first tier is already emerging in the industry, and its members may become the foundation of the large model industry in the future.

Therefore, leading large model enterprises are unlikely to voluntarily abandon the price war and cede market share. In addition, many unicorns also hope to carve out a "path to survival" through price wars, with some believing that smaller models may offer better value for money.

In fact, the large model price war in May did not start with Alibaba but with DeepSeek-V2. At a time when prevailing inference prices in the industry were still in the hundreds of yuan per million tokens, the model's API was priced at 1 yuan per million input tokens and 2 yuan per million output tokens, with support for a 32k context.
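Prices in this article are quoted in two units, per thousand and per million tokens, and DeepSeek-V2's list price charges input tokens (1 yuan per million) and output tokens (2 yuan per million) separately. The small sketch below converts between the units and prices a single call at those rates; the request size and function names are hypothetical.

```python
TOKENS_PER_MILLION = 1_000_000


def request_cost(input_tokens: int, output_tokens: int,
                 input_price_per_m: float, output_price_per_m: float) -> float:
    """Cost in yuan of one API request priced per million input and output tokens."""
    return (input_tokens * input_price_per_m
            + output_tokens * output_price_per_m) / TOKENS_PER_MILLION


def per_million_to_per_thousand(price_per_m: float) -> float:
    """Convert a per-million-token price into a per-thousand-token price."""
    return price_per_m / 1_000


# Hypothetical request: 10,000 input tokens and 2,000 output tokens at DeepSeek-V2 list prices
cost = request_cost(10_000, 2_000, 1.0, 2.0)
print(f"{cost:.3f} yuan")
print(per_million_to_per_thousand(1.0), "yuan per thousand input tokens")
```

At 1 yuan per million input tokens, the input price works out to 0.001 yuan per thousand tokens, the same unit used for the Tongyi Qianwen prices earlier in the article.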

The large model elimination race may last another 2-3 years. Few large model enterprises will survive it, and those that want to must pull out all the stops. Yet once the "low-hanging fruit" has been picked, being cheap alone will no longer solve the industry's problems.

03 Model Capability Remains Key

However, opinions on the large model "price war" differ within the industry. Kai-Fu Lee, founder of Sinovation Ventures, has said there is no need for a frenzied price war, because large models must be judged on technology as well as price; if the technology falls short and the business runs at a loss, such pricing cannot serve as a benchmark.

Volcano Engine President Tan Dai likewise noted, when discussing price wars, that the current focus is on application coverage rather than revenue, and that stronger model capabilities are needed to unlock new scenarios, which is where the greater value lies.

At its core, the current "price war" stems from inadequate product capabilities: vendors' models tend to be homogeneous, and none has yet opened up a significant gap. Vendors therefore hope price cuts will make large models more popular and win them market share.

Once the "low-hanging fruit" has been picked, however, new problems will arise: enterprises must weather the next stage of the price war, differentiate their large models from competitors', and make sure they are among the survivors. These questions remain open.

Therefore, while engaging in price wars, large model enterprises are acutely aware of the importance of products, technology, and cash flow. They must withstand pricing pressures, create technological gaps with competitors, and continuously improve model performance and product implementation to form a virtuous business cycle.

On the one hand, large model enterprises do not solely rely on "price wars." Typically, large model inference involves three variables: time, price, and the number of generated tokens. It is insufficient to consider only token prices without factoring in the number of concurrent requests per unit time.

In real business operations, more complex inference workloads often require higher concurrency. At present, however, the discounted large models are generally preset models that do not support increased concurrency, while truly large-scale, high-performance models that support high concurrency have not seen significant price cuts.
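The interaction between token price and concurrency can be illustrated with a toy model. Everything in the sketch below is hypothetical: the two offers, their prices, concurrency caps, and per-stream speeds are invented, and throughput is assumed to scale linearly with the number of concurrent requests. It shows that the cheaper offer wins on token cost but, capped at low concurrency, takes far longer to finish the same job.

```python
from dataclasses import dataclass


@dataclass
class InferenceOffer:
    """Hypothetical API offer: a token price plus a cap on concurrent requests."""
    price_per_m_tokens: float  # yuan per million generated tokens
    max_concurrency: int       # simultaneous requests allowed
    tokens_per_second: float   # generation speed of a single request stream

    def cost(self, total_tokens: int) -> float:
        """Token cost in yuan for generating `total_tokens`."""
        return total_tokens * self.price_per_m_tokens / 1_000_000

    def hours_needed(self, total_tokens: int) -> float:
        """Wall-clock hours to generate `total_tokens` with every allowed stream busy."""
        throughput = self.max_concurrency * self.tokens_per_second  # tokens per second
        return total_tokens / throughput / 3600


# Two made-up offers: a cheap one capped at low concurrency, a pricier one with more headroom
cheap = InferenceOffer(price_per_m_tokens=0.5, max_concurrency=5, tokens_per_second=50)
scaled = InferenceOffer(price_per_m_tokens=2.0, max_concurrency=100, tokens_per_second=50)

job = 100_000_000  # 100 million generated tokens
for name, offer in (("cheap", cheap), ("scaled", scaled)):
    print(f"{name}: {offer.cost(job):.0f} yuan, {offer.hours_needed(job):.1f} hours")
```

With these invented numbers, the cheap offer costs 50 yuan but needs about 111 hours, while the pricier one costs 200 yuan and finishes in under 6 hours.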

On the other hand, enterprises are using technology to drive down inference costs further. Baidu's Baige heterogeneous computing platform, for example, has been specially optimized for the design, scheduling, and fault tolerance of intelligent computing clusters, achieving an effective training duration ratio of over 98.8% on ten-thousand-card clusters, with linear speedup ratio and bandwidth effectiveness both reaching 95%. This helps customers address computing power shortages and high computing costs.
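The cluster metrics cited here are commonly defined as follows: the effective training duration ratio is the share of wall-clock time spent on useful training rather than on failures and restarts, and the linear speedup ratio is the speedup achieved on N accelerators divided by the ideal speedup N. The source does not say exactly how Baidu measures them, so the sketch below uses these general definitions with illustrative numbers only.

```python
def effective_time_ratio(productive_hours: float, total_hours: float) -> float:
    """Share of wall-clock time spent on useful training rather than failures and restarts."""
    return productive_hours / total_hours


def scaling_efficiency(time_one_device: float, time_n_devices: float, n: int) -> float:
    """Linear speedup ratio: achieved speedup divided by the ideal speedup n."""
    speedup = time_one_device / time_n_devices
    return speedup / n


# Illustrative job that would take 1,000,000 hours on a single card, run on 10,000 cards
n_cards = 10_000
hours_one_card = 1_000_000.0
hours_on_cluster = hours_one_card / (n_cards * 0.95)  # assume 95% efficiency
print(f"scaling efficiency: {scaling_efficiency(hours_one_card, hours_on_cluster, n_cards):.0%}")

# A 30-day run in which 98.8% of the time is productive
total_hours = 30 * 24
print(f"effective time ratio: {effective_time_ratio(total_hours * 0.988, total_hours):.1%}")
```

At 95% scaling efficiency, 10,000 cards deliver roughly the throughput of 9,500 perfectly scaled ones, and a 98.8% effective time ratio leaves about 711 productive hours in a 720-hour month.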

Microsoft CEO Satya Nadella has cited GPT-4 as an example: over the past year its performance improved sixfold while its cost fell to one-twelfth of the previous level, a roughly 70-fold improvement in performance-to-cost ratio. Advances in large model technology clearly underpin the industry's sustained price cuts.
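The "70-fold" figure combines the two factors attributed to Nadella: performance per unit of cost improves by the performance multiplier divided by the remaining cost fraction, that is 6 / (1/12) = 72, which rounds to about 70. A minimal check, with a hypothetical function name:

```python
def price_performance_gain(perf_multiplier: float, cost_fraction: float) -> float:
    """Factor by which performance per unit of cost improves."""
    return perf_multiplier / cost_fraction


# Figures quoted above: 6x performance, cost reduced to one-twelfth
gain = price_performance_gain(6, 1 / 12)
print(round(gain))
```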

Finally, it is crucial to create more differentiated products. Low-price strategies can help large model enterprises build ecosystems. However, as the AI field continues to evolve and innovation accelerates, shortening the technology replacement cycle, the ability to consistently provide competitive products and address user pain points in practical applications becomes the core competitiveness of large model enterprises.

Currently, the business logic of the large model industry has evolved from competing on models and costs to a new stage of competing on ecosystems and technology. While low prices remain a vital means of rapidly building ecosystem barriers, reducing costs through technology is the key to propelling large models into the "value creation phase."

Going forward, the new battlefield for large model enterprises will be "cost-effectiveness." On the current pricing foundation, they must further enhance the quality and performance of large models to make them more capable and diverse. While this may not necessarily incubate "super apps," attracting more small and medium-sized enterprises and startups can bring explosive growth opportunities for large model enterprises.