Large Model 2024: Transitioning from 'Burning Money' to 'Making Money'

12/27 2024

2024 Review: Five Key Trends Shaping the Large Model Industry

As 2024 draws to a close, a look back at the first year of large model deployment reveals a bustling market that is quickly becoming more rational. Looking across technology, markets, and business, we have identified five prominent trends in the large model industry.

This year, AI became a new frontier of competition among global powers, with both China and the US stepping up efforts to advance artificial intelligence. In China, the 'AI+' initiative drove large model adoption throughout the year, with central state-owned enterprises leading the way. Across the Pacific, the 'Magnificent Seven' tech giants powered gains in U.S. stocks, and AI hardware provider NVIDIA performed strongly throughout the year.

Deployment accelerated. OpenAI's commercial results began to materialize, with revenue expected to reach $3.7 billion in 2024, fueling growth across a vast ecosystem. In China, central state-owned enterprises and industry leaders spearheaded commercial deployment. In the first 11 months, the number of winning large model bids reached 3.6 times the 2023 total, with Baidu, iFLYTEK, and Zhipu securing the most projects.

OpenAI's dominance waned in 2024 as a multi-player landscape emerged. Chinese vendors made remarkable progress: by 2024, mainstream domestic vendors such as Baidu, Alibaba, Zhipu, and DeepSeek had caught up in model capabilities, a far cry from the frustration and anxiety of early 2023. As capabilities converged, vendors launched price wars, accelerating consolidation in the foundation model market.

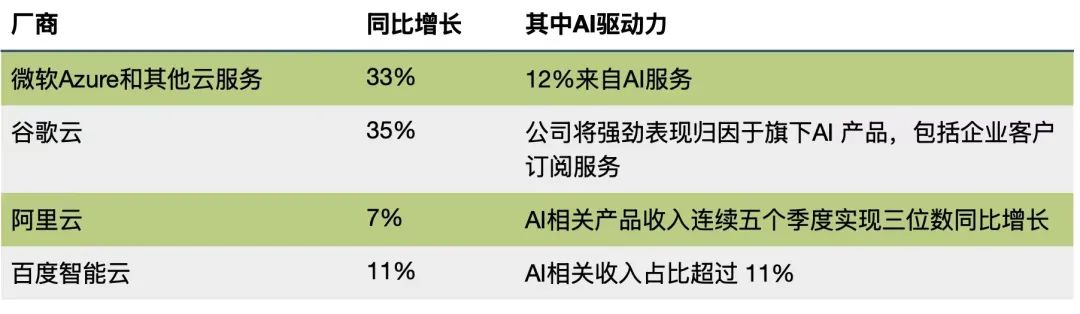

Model training and application fueled a domestic intelligent computing boom, and Internet companies began moving toward 100,000-GPU clusters. This wave introduced new variables into the cloud computing market, and the AI public cloud market continued to expand. Baidu Intelligent Cloud, an early entrant in the large model race, has ranked first in China's AI public cloud market for five consecutive years, with a 26.4% market share.

Although no super app emerged this year, plenty of genuinely useful applications did. In both business (ToB) and consumer (ToC) markets, competition is clearly shifting toward a systemic battle, and building well-rounded capabilities has become the goal of every player.

Amid reflection and debate, the large model industry chain continues to evolve at a remarkable pace. This suggests that in 2025 the large model market will be more diverse and more fiercely competitive.

01

China and the US Accelerate AI Development

In 2024, government incentive policies emerged as a significant driver for the large model industry.

In China, shortly after the Spring Festival holiday, the State-owned Assets Supervision and Administration Commission convened a 'Special Promotion Meeting on Artificial Intelligence for Central Enterprises,' attended by the heads of all central state-owned enterprises, with Huawei and Baidu participating as representatives of AI companies. In March, the government work report proposed an 'AI+' initiative. The same month, the State Council issued a plan for large-scale equipment upgrades, expected to drive more than RMB 3 trillion of investment in advanced equipment over five years and creating a vast market for deploying large models in industry.

In the US, the government's 2024 AI budget hit a record high. The Department of Energy, the Department of Health and Human Services, the Department of Transportation, the Department of Defense, and other agencies collectively invested over $251.1 billion in AI. Including capital market investment, the total reaches trillions of dollars. According to Digital Frontier, from November 2022 to November 2024, NVIDIA, Microsoft, Google, and Meta added a combined $6.1 trillion in market capitalization on the back of the AI wave, and NVIDIA and Apple took turns as the world's most valuable company.

However, as AI became more widespread, disputes intensified over AI ethics and regulation, security and privacy protection, and AI applications and commercialization (see figure below).

Amid these conflicts and controversies, AI governance in 2024 shifted from principles to practice, with security governance going deeper and multiple standards and tests being introduced. For instance, in June the European Union introduced the AI Act, which restricts 'high-risk' AI systems and requires AI developers to be more transparent about their data use; the industry regards it as a benchmark.

In China, 'legislative projects related to cyber governance and the healthy development of AI' were listed as preparatory review items for the National People's Congress in 2024.

02

Accelerating the Implementation Process

Unlike 2023, which was dominated by the battle over foundation models, 2024 saw the industry prioritize deployment alongside technical progress, because the 'burn money on models' approach had proved unsustainable. This made 2024 the first year of large model implementation.

Driven by incentive policies, domestic and foreign markets took different paths to deployment. Overseas, ChatGPT stood out, achieving broad user awareness and adoption across the US. OpenAI expects $3.7 billion in revenue for 2024, with $2.7 billion coming from ChatGPT. In China, central state-owned enterprises and industry leaders forged a distinctly Chinese path that combines general-purpose and industry-specific large models.

The cost of deploying large models fell sharply this year. A price war among major players that broke out in May helped drive inference costs down by a factor of tens or even hundreds within just a year and a half.

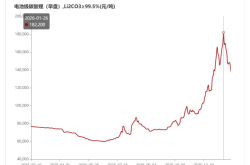

At the same time, lightweight large models and Mixture of Experts (MoE) models emerged as new trends. Baidu unveiled multiple large models of different sizes for different scenarios at its conference early in the year, Alibaba open-sourced the small models in its Qwen series, and major players launched MoE models. All of these aim to match or exceed the performance of larger models at lower cost.

On the front lines of deployment, deals worth tens of millions of yuan became rarer, while more projects were priced at two to three million yuan. Some single-scenario large model applications cost only a few hundred thousand yuan, a price range acceptable to early adopters.

Inference costs are expected to fall further in 2025, which should give deployment another boost.

This year saw an explosion in large model projects. Early in the year, some large model service providers were so busy they 'only slept 5 hours a day,' while others were fielding 'around 10 new requests per week.' After that, the number of large model projects grew month by month.

According to Cloud Headlines, 728 large model projects were awarded through public bidding in the first 11 months of 2024, 3.6 times the total for 2023. Telecommunications, energy, education, government affairs, and finance contributed the most projects. From major players to large model application service providers, everyone competed for projects. Among the 728 winning bids, Baidu ranked first with 40 projects worth a total of 274 million yuan.
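The Cloud Headlines figures in this paragraph support a few derived numbers. The sketch below is back-of-the-envelope arithmetic using only those quoted figures; the variable names are illustrative, and because "3.6 times" is itself a rounded multiple, the implied 2023 total is approximate.

```python
# Back-of-the-envelope arithmetic using only the quoted figures
# (Cloud Headlines, first 11 months of 2024). Variable names are illustrative.
projects_2024 = 728
growth_multiple = 3.6          # "3.6 times the total for 2023" (a rounded figure)
baidu_projects = 40
baidu_amount_yuan = 274_000_000

# Implied 2023 total; approximate because the 3.6x multiple is rounded.
implied_2023_projects = projects_2024 / growth_multiple

# Baidu's share of winning bids by count, and its average award size.
baidu_share = baidu_projects / projects_2024
baidu_avg_award_yuan = baidu_amount_yuan / baidu_projects

print(f"Implied 2023 projects: ~{implied_2023_projects:.0f}")
print(f"Baidu share of projects: {baidu_share:.1%}")
print(f"Baidu average award: {baidu_avg_award_yuan / 1e6:.2f} million yuan")
```

Run as-is, this prints roughly 202 projects for 2023, a 5.5% share of projects for Baidu, and an average of 6.85 million yuan per winning bid. Note that the comparison sets 11 months of 2024 against a full year of 2023, so the growth may be understated.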

Behind the surge in projects lies growing recognition of AI's value. Leading central state-owned enterprises in particular have pushed into deeper scenarios such as power grid dispatching and blast furnace operations at steel plants.

PetroChina, together with Huawei, China Mobile, and iFLYTEK, released Kunlun large models with 33 billion and 70 billion parameters in August and November, respectively. In December, State Grid, together with Baidu and Alibaba, officially announced the Guangming Power Large Model, a trillion-parameter multimodal industry model. The effort also covered planning and exploring core scenarios, work that requires bringing together people who understand both the business and AI.

Mid-tier enterprises followed suit. Digital Frontier learned from sources across the industry chain that many small and medium-sized financial institutions that had stayed on the sidelines in the first half of the year began, in the second half, actively seeking solutions already proven at leading enterprises, hoping to replicate them.

However, deployment also faced challenges in 2024. Early on, large model projects were 'well received but poorly sold': many never made it from proof of concept (PoC) to actual purchase. This prompted companies to rethink their strategies. Some service providers began prioritizing industry customers with high knowledge density and strong profitability, and focused on developing scenario-specific applications, which helped them expand their markets.

In the first half of the year, ROI (return on investment) was a frequent talking point, and helping customers clarify their ROI was crucial to winning deployments. By the second half, customers had become more rational and pragmatic, and procurement became 'results-oriented': customers wanted a PoC to demonstrate clear, timely business value before committing to further purchases.

Ongoing, hands-on support services also grew in importance. One service provider noted that for projects worth around 2 million yuan, annual service fees have risen from the 10-15% of project value typical of traditional IT projects to 25-30%.
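To make the service-fee shift concrete, here is a small worked calculation. Only the roughly 2 million yuan project size and the two percentage ranges come from the text; the helper name `annual_fee_range` is hypothetical and exists only for this illustration.

```python
# Illustrative arithmetic for the service-fee shift described in the text.
# Only the ~2 million yuan project size and the percentage ranges are sourced;
# annual_fee_range is a hypothetical helper for this example.

def annual_fee_range(project_value_yuan, low_pct, high_pct):
    """Return (low, high) annual service fees as a share of project value."""
    return (project_value_yuan * low_pct / 100,
            project_value_yuan * high_pct / 100)

project_value_yuan = 2_000_000
traditional_it = annual_fee_range(project_value_yuan, 10, 15)
large_model = annual_fee_range(project_value_yuan, 25, 30)

print("Traditional IT project:", traditional_it)
print("Large model project:", large_model)
```

In other words, annual service fees on such a project rise from about 200,000-300,000 yuan to about 500,000-600,000 yuan, roughly double or more.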

By year-end, large model deployment had taken another step forward, with intelligent agents becoming the new hotspot. At large model events, nearly every exhibitor featured intelligent agents on its booth, and major players actively promoted agent development. At Baidu World 2024, Baidu announced that the multi-agent collaboration tool 'Miaoda' would launch in January next year, marking the debut of a no-code development tool built entirely from intelligent agents.

03

OpenAI's Dominance Wanes, Mainstream Enterprises Catch Up

While 2024 emphasized deployment, the advance of large model technology did not slow. If generative AI was a star student in 2023, by the end of 2024 it had become something closer to a leading scientist, illustrating the saying that 'one day in AI is like a year in the human world.'

Who has the strongest foundation model? It is no longer solely OpenAI. OpenAI's GPT-5 reportedly ran into training difficulties, while rival models caught up in various areas. In IDC's 'China Large Model Market Mainstream Product Evaluation Report, 2024,' language models from vendors such as Baidu matched or surpassed GPT (as shown below). 'OpenAI still leads in the most powerful complex-reasoning models,' Pan Xin, a former Google Brain member and partner at SparkX Tech, told Digital Frontier. OpenAI has sought to adjust its strategy by introducing the o1 and o3 series to push ahead on complex, high-level reasoning tasks.

The foundation model field also consolidated quickly. In the US, it narrowed to five companies: OpenAI, Anthropic, Meta, Google, and xAI. In China, the 'War of a Hundred Models' was over within a year, with foundation model development converging on companies such as Baidu, Alibaba, Zhipu, ByteDance, iFLYTEK, and Tencent.

In fall 2024, rumors circulated that some of the 'Six Little Tigers' of large models had abandoned pre-training. Since then, several of the tigers have released new models or products. However, as Kai-Fu Lee noted, the 'Six Little Tigers' face the same soul-searching question as the 'Four Little Dragons' of the AI 1.0 era: can they build a sustainable business on a single large model and pass the tests of investment banks and the secondary market, namely revenue, growth, and profitability?

Globally, pre-training faces challenges, and the slowdown in Scaling Law gains is now evident. Ilya Sutskever, OpenAI's former chief scientist, even declared that pre-training as we know it will end because training data is nearly exhausted. However, Geoffrey Hinton, Ilya's doctoral supervisor and often called the father of deep learning, countered: 'Ilya lacks imagination.' On this view, if models can truly learn, data is everywhere, and pre-training, like learning in biological systems, will never end.

Reinforcement learning became the industry's new favorite almost overnight, with OpenAI's o1 and o3 both relying on it. o1 and o3 showed that scaling laws apply not only to pre-training but also to inference. 'The reason OpenAI's o1 caused a sensation in the industry at launch was reinforcement learning,' said Wang Yanpeng, a distinguished system architect at Baidu. 'Essentially, it uses computing power to generate more data, then reinforces it through a reinforcement learning reward model. This has become a main path forward.'
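Wang Yanpeng's description, using computing power to create more data and keeping what a reward model rates highly, can be illustrated with a toy sketch. This is not OpenAI's or Baidu's method, whose details the article does not describe; it is a generic rejection-sampling loop under loudly stated assumptions. `toy_policy` is a hypothetical stand-in for a model that answers a doubling question correctly about 30% of the time, and `reward_model` is a hypothetical verifier that scores correct answers 1.0. Drawing more samples per question (spending more compute) harvests more verified examples that could serve as training data, and `expected_solve_rate` shows the inference-time side as a best-of-N probability, assuming independent samples and a perfect verifier.

```python
import random

def toy_policy(question: int, rng: random.Random) -> int:
    """Hypothetical model: doubles `question` correctly about 30% of the time."""
    correct = question * 2
    if rng.random() < 0.3:
        return correct
    return correct + rng.randint(1, 5)  # a wrong answer, off by 1 to 5

def reward_model(question: int, answer: int) -> float:
    """Hypothetical verifier-style reward: 1.0 if correct, else 0.0."""
    return 1.0 if answer == question * 2 else 0.0

def harvest_training_data(questions, samples_per_question, seed=0):
    """Spend compute sampling answers; keep only high-reward (question, answer) pairs."""
    rng = random.Random(seed)
    kept = []
    for q in questions:
        for _ in range(samples_per_question):
            answer = toy_policy(q, rng)
            if reward_model(q, answer) == 1.0:
                kept.append((q, answer))
                break  # one verified example per question is enough here
    return kept

def expected_solve_rate(p_correct: float, n_samples: int) -> float:
    """Probability that at least one of n independent samples is correct."""
    return 1 - (1 - p_correct) ** n_samples

data = harvest_training_data(range(20), samples_per_question=8)
print(f"Verified examples harvested: {len(data)} of 20")
for n in (1, 4, 8):
    print(f"best-of-{n}: {expected_solve_rate(0.3, n):.2f}")
```

The best-of-N lines print 0.30, 0.76, and 0.94: with a reliable verifier, extra samples steadily raise the chance of finding a correct answer, which is the intuition behind scaling compute at inference time. Real systems face noisy reward models and correlated samples, so these numbers are an idealized upper bound for this toy setup.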

Because pre-training is so expensive, opinions differ in China on whether to keep doing it and how. Some companies argue that China's wealth of industry scenarios can drive pre-training forward once models enter those scenarios. Others are exploring new directions. According to AI Emerging, ByteDance's internal thinking is that the potential of AI chatbot products may be limited and that a paid subscription model may not work in China; in the long run, it sees lower-barrier, more 'multimodal' product forms as key, with Video Editor Jietu taking priority.

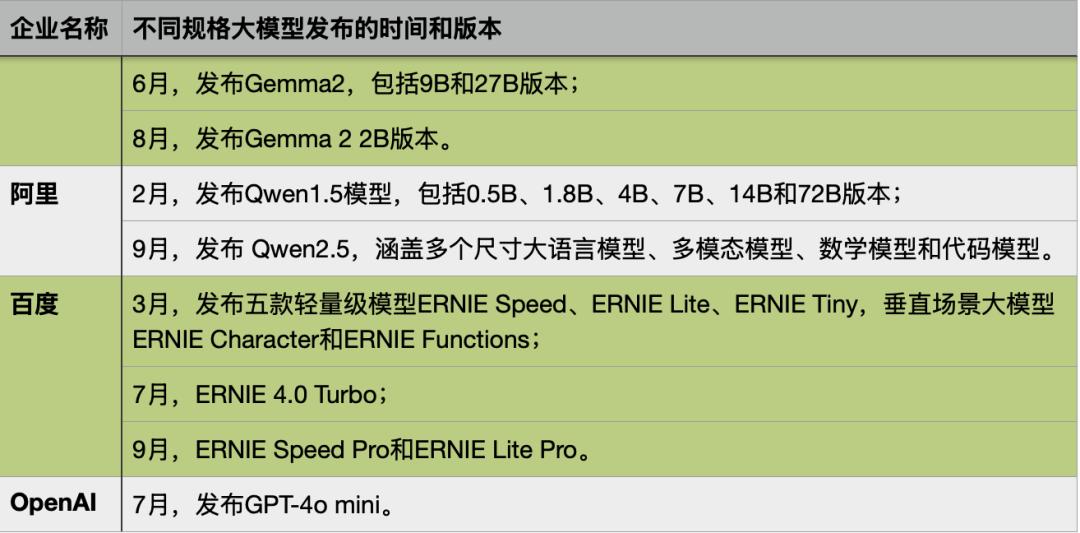

Beyond large model capabilities, global AI giants are also making strides in another area: embodied intelligence. The World Artificial Intelligence Conference in Shanghai became a showcase for humanoid robots. Wang Zhongyuan, Dean of the Beijing Academy of Artificial Intelligence, believes that expectations of humanoid robots being 'everywhere' and 'usable anywhere' within two or three years are unrealistic, and he agrees with the 2050 timeframe proposed by Professor Zhao Mingguo of Tsinghua University.

04

Intelligent Computing Surges, AI Public Cloud Keeps Growing

Driven by large model training and application, 2024 was undoubtedly a pivotal year for intelligent computing.

Internationally, AI giants including Microsoft and OpenAI, xAI, and Meta are racing to build clusters of 100,000 to a million GPUs. Among them, Microsoft's Stargate project, reportedly costing over $100 billion, is planned in five phases over the next six years, with operations expected to begin by 2028. The project would use millions of dedicated AI chips and may require up to 5 gigawatts of power.

In China, it was previously unclear how even 100-petaflop intelligent computing centers would be used. With the rise of large models, however, demand has soared, and new centers now typically start at 1,000 petaflops.

Large model startups, among the primary buyers of intelligent computing power, typically need tens of thousands of GPUs. Leading enterprises in various industries also need substantial computing power, typically 1,000 to 5,000 GPUs, to develop industry- or enterprise-specific large models.

A prime example is the automotive industry. End-to-end intelligent driving built on large models has quickly become the mainstream approach, significantly boosting automakers' appetite for investment. According to a source at a leading automaker, automakers' intelligent computing power will quadruple within the next one to two years.

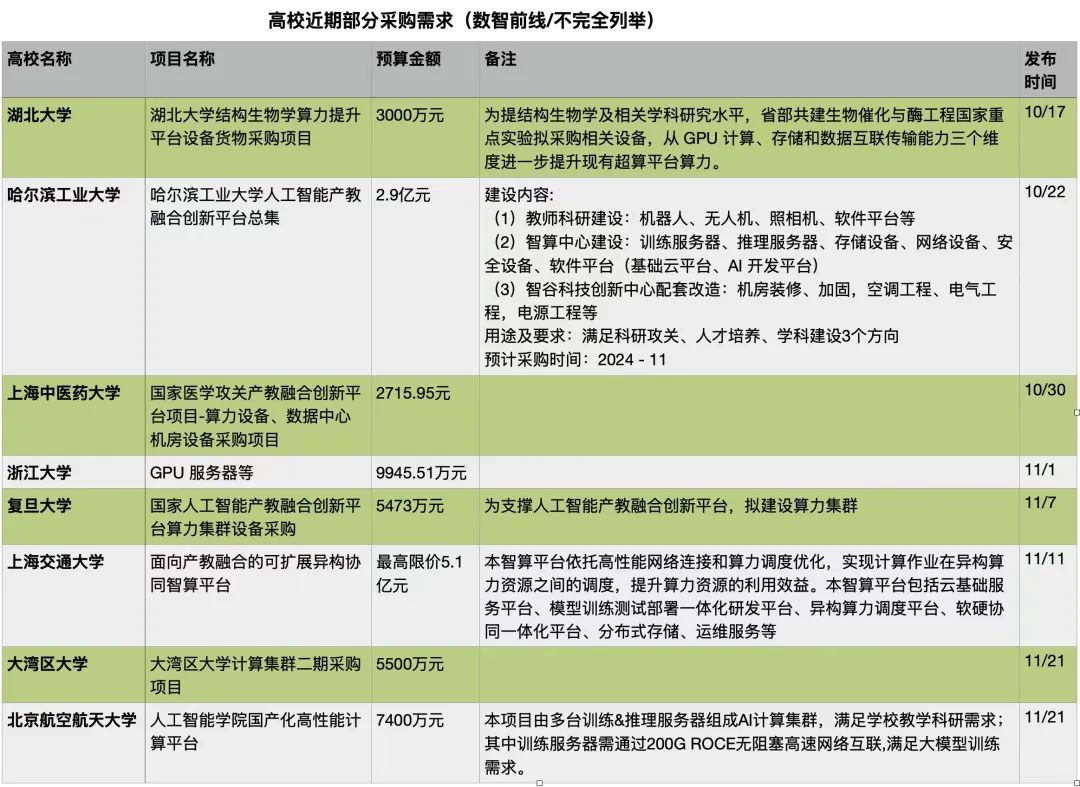

Following this year's Nobel Prize announcements, which recognized AI-related work in physics and chemistry, AI for Science has gained significant traction, and universities' enthusiasm for buying intelligent computing power has soared. Recently, several universities have announced plans to purchase computing power worth tens of millions to hundreds of millions of yuan.

Beyond corporate moves, the Ministry of Industry and Information Technology and five other departments issued the "Action Plan for High-Quality Development of Computing Power Infrastructure," explicitly endorsing intelligent computing. Spurred by policy, intelligent computing centers are being built across the country at a rapid pace. Data show that as of June 2024, China had over 250 intelligent computing centers either under construction or completed.

On clusters of tens of thousands of GPUs, the aggressive buildout by American technology companies suggests they still believe the Scaling Law holds. A few leading Chinese cloud providers have already acted; for instance, Baidu and Alibaba have each announced that they can deploy and manage clusters of tens of thousands of GPUs.

According to IDC, cloud providers are the main players in over 70% of intelligent computing centers amid this wave of intelligent computing. Cloud providers are also actively building AI cloud-native infrastructure. IDC data show that China's AI public cloud services market reached 12.61 billion yuan in 2023, with Baidu Intelligent Cloud ranking first for five consecutive years with a 26.4% market share.

Not everything has gone smoothly, however. Some intelligent computing centers built in recent years have run into trouble, with average utilization below 30%. Amid the current construction boom, the industry worries about repeating these failures, and many argue that building computing power is not enough; it must also be put to effective use.

The market has begun to react. Several listed companies that had announced moves into intelligent computing, including Lianhua Holdings, have since announced that they are suspending or canceling planned intelligent computing center projects.

Since the start of this year, players across the industry chain have come to recognize that "construction must be followed by operation."

Many investors now require project contractors to meet operational KPIs. Some enterprises also survey local demand before building intelligent computing centers and size them accordingly.

Industry professionals at companies such as Beijing Telecom Digital Intelligence also advocate integrating with the local industrial economy from the outset when helping local governments build intelligent computing centers. This covers both construction planning and ecosystem building, so that the AI industry chain genuinely takes root in the local economy and creates a virtuous cycle.

Industry observers expect a large number of intelligent computing centers to be completed in Q4 of this year and Q1 of next year, which will be a litmus test for the entire industry.

05

The Battle for a Comprehensive Large Model Strategy

After the large model training and deployment battles of 2024, the industry expects more AI applications to emerge worldwide in 2025, for both consumers (ToC) and businesses (ToB).

Competition among large models is becoming a comprehensive battle. Take ToB as an example: one large model company summarized that building a large model an enterprise can genuinely use requires a full suite of capabilities, spanning computing power, data governance, model training, scenario implementation, application development, continuous operation, and security compliance. It also requires the ability to build standardized software products such as digital humans, customer service assistants, and code assistants, as well as integrated hardware-software products for specific scenarios.

In essence, the large model race demands a "hexagonal warrior," an all-rounder with no weak spots. The companies that won the most projects this year, such as Baidu and iFLYTEK, have excelled in this comprehensive battle. ByteDance's recent conference suggests that it, too, is rapidly building out its capabilities.

Wang Zhong, co-founder of the large model service provider Zhongshu Xinke, described his company's partnership with Baidu as an example. In that partnership, Baidu's ecosystem covers both foundation models and a MaaS (Model as a Service) platform offering a toolchain built around large models, along with hardware such as all-in-one machines that can be deployed directly in customer data centers. Baidu also supports joint solution development and customized product adaptation.

According to IDC, in the first half of 2024 Baidu Intelligent Cloud led China's MaaS market in revenue and also topped the AI large model solutions market.

This year, model deployments centered on RAG (retrieval-augmented generation) capabilities; next year is expected to be the year agents take off. "Deploying intelligent agents is not as straightforward as people imagine. The main challenge lies in model capabilities, because large models must be able to decompose and plan complex tasks, and most models still lack this ability," Owen ZHU, an AI application architect at Inspur Information, previously told Digital Frontier. Companies such as Baidu and NVIDIA have adopted a toolchain + Agent approach, which makes agents significantly more stable and better suited for deployment.

In the final month of 2024, large model companies kept up the pace. OpenAI rolled out a series of updates over 12 days showcasing its latest advances, including o3. ByteDance updated its Doubao large model, further enriching its ecosystem. And Baidu Intelligent Cloud, Alibaba Cloud, and State Grid jointly released the Guangming large model for the power industry...

Looking back, the large model industry chain evolved rapidly in 2024 amid bets, exploration, and reflection. In 2025, large models will undoubtedly continue to branch out, taking on more complex tasks, spurring numerous innovative applications, and leading people into new frontiers.