The Coexistence of ASIC and GPU: Diversifying the AI Chip Market

12/31 2024

Preface: As artificial intelligence technology rapidly advances, global demand for computing power has surged, propelling the artificial intelligence chip market into a state of heightened activity.

However, with the evolution of large AI models, the competitive landscape within this market is undergoing subtle shifts.

Some believe that ASICs are swiftly gaining ground, posing a challenge to GPUs' dominance in AI computing.

Chip focus shifts as AI models expand

AI computing encompasses both training and inference phases.

Training tasks necessitate substantial computational power, making GPUs the preferred choice for AI training among vendors.

Conversely, inference tasks demand less computational power and do not require extensive parallel processing, rendering GPUs less advantageous in this realm.

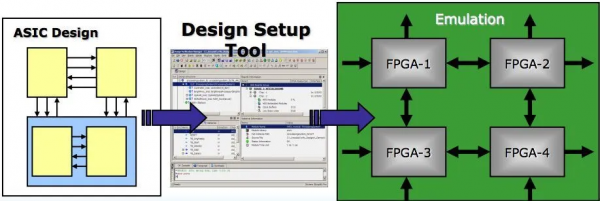

Consequently, numerous companies have adopted lower-cost, energy-efficient FPGAs or ASICs for their computing needs.

This division of labor persists today, and GPUs still command over 70% of the AI chip market.

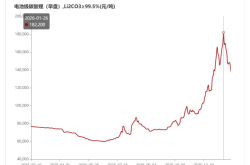

Data, the lifeblood of AI development, is finite, and the data currently utilized for AI pre-training has reached its limits.

Moreover, as model sizes expand, their marginal benefits diminish, while computational costs remain high, prompting reflection on the future trajectory of AI training.

Meanwhile, logical inference, the next phase of large AI models, is gradually emerging as a new research frontier.

Currently, market dissatisfaction with long-term NVIDIA dependency underscores the urgent need for diversified computational power.

Furthermore, as AI models shift their emphasis from training to inference, the burgeoning demand for inference-based AI computing presents opportunities for ASICs.

Thus, fostering the ASIC industry chain and increasing ASICs' share of the AI chip market has become an industry consensus, which helps explain the surge in Broadcom's and Marvell's share prices.

Three primary drivers behind the ASIC demand surge

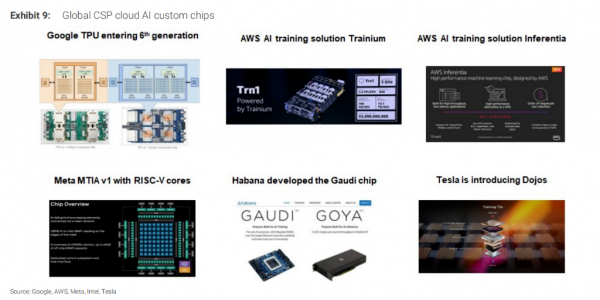

① Large AI models are transitioning from training to inference. Google's TPU, developed in collaboration with Broadcom, exemplifies this shift, with its architecture tailored specifically for machine learning and deep learning, prioritizing inference over training or rendering.

A Barclays research report anticipates that demand for AI inference computing will soar, accounting for over 70% of total AI computing demand and potentially reaching 4.5 times the demand for training.

② Another factor is downstream companies' desire to lessen their reliance on NVIDIA.

Firms requiring custom chips, such as ByteDance, Meta, and Google, harbor concerns about NVIDIA chips' long-term supply stability.

By opting for ASICs, these companies can enhance product control, bolstering their procurement bargaining power.

③ Optimizing internal workloads. By developing bespoke chips, cloud service providers can more effectively meet their AI inference and training demands.
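The two Barclays figures cited under ① describe the same split in two different ways: as a share of total demand and as a ratio to training demand. The short sketch below converts between them. It assumes total AI computing demand consists only of inference plus training, which the source does not state, and the function names are illustrative.

```python
def ratio_from_share(inference_share: float) -> float:
    """Inference-to-training demand ratio implied by an inference share.

    Assumes total demand = inference + training (an assumption, not from the source).
    """
    if not 0 <= inference_share < 1:
        raise ValueError("inference_share must be in [0, 1)")
    return inference_share / (1 - inference_share)


def share_from_ratio(ratio: float) -> float:
    """Inference share of total demand implied by an inference-to-training ratio."""
    if ratio < 0:
        raise ValueError("ratio must be non-negative")
    return ratio / (1 + ratio)


# A 70% share is the lower bound quoted in the report.
ratio_at_70 = ratio_from_share(0.70)
# A 4.5x ratio is the upper figure quoted in the report.
share_at_4_5 = share_from_ratio(4.5)

print(f"70% inference share -> {ratio_at_70:.2f}x training demand")
print(f"4.5x training demand -> {share_at_4_5:.1%} inference share")
```

Under that assumption, a 70% share corresponds to roughly 2.3 times training demand, while a 4.5x ratio corresponds to a share of about 82%. The two figures are therefore compatible, with "over 70%" acting as a floor.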

ASIC's potential amplifies in the AI era

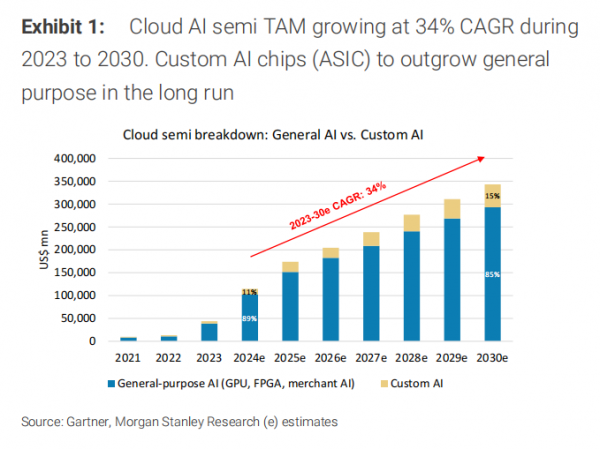

Morgan Stanley's "AI ASIC 2.0: Potential Winners" report, released on the 15th, forecasts that ASICs, due to their targeted optimization and cost-effectiveness, will gradually encroach on NVIDIA GPUs' market share.

Morgan Stanley projects the AI ASIC market to expand from $12 billion in 2024 to $30 billion in 2027, registering a 34% compound annual growth rate.
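The quoted growth rate can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below is only an arithmetic check on the figures in the text. The function names are illustrative and not taken from the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.34 means 34%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached by compounding start_value at `rate` for `years` years."""
    return start_value * (1 + rate) ** years


years = 2027 - 2024  # three compounding periods

# Growth rate implied by the two endpoints quoted in the text ($ billions).
implied_rate = cagr(12.0, 30.0, years)
# Market size reached if the quoted 34% rate is applied to the 2024 figure.
value_at_34 = project(12.0, 0.34, years)

print(f"Implied CAGR, $12B -> $30B over {years} years: {implied_rate:.1%}")
print(f"$12B compounded at 34% for {years} years: ${value_at_34:.1f}B")
```

The endpoints imply a rate of about 35.7%, and compounding $12 billion at 34% gives about $28.9 billion. The small gap from the quoted figures is plausibly due to rounding in the published numbers.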

While NVIDIA is poised to maintain its market leadership in large language model training, firms like Broadcom, Alchip, and Socionext are also favored.

Additionally, Cadence, TSMC, and their supply chain partners (such as ASE and KYEC) stand to benefit from the rapid growth of ASIC design and manufacturing.

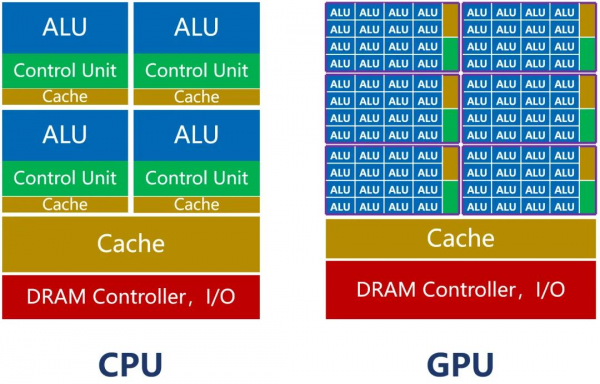

Both ASICs and GPUs are semiconductor chips, collectively termed "AI chips" due to their AI computing applicability.

In the semiconductor industry, chips are broadly categorized into digital and analog types, with digital chips accounting for roughly 70% of the market.

Digital chips are further divided into logic chips, memory chips, and microcontrollers (MCUs), with CPUs, GPUs, FPGAs, and ASICs falling under the logic chip umbrella.

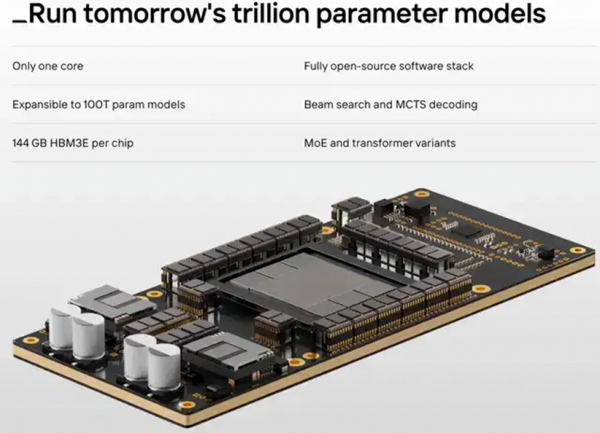

ASICs (application-specific integrated circuits) are chips designed and manufactured to meet the requirements of a particular user or electronic system.

Examples include Google's TPU, the chips in Bitcoin mining machines, Intel's Gaudi 2, IBM's AIU, and AWS's Trainium.

As customized chips, ASICs' computational power and efficiency are intrinsically aligned with specific task algorithms.

Core count, the ratio of logic computing to control units, and cache memory are meticulously customized across the chip architecture.

Consequently, ASICs achieve remarkable compactness and low power consumption, typically outperforming general-purpose chips (like GPUs) in reliability, confidentiality, computational power, and energy efficiency.

For instance, for the same budget, AWS's Trainium 2 outperforms NVIDIA's H100 GPU on inference tasks, with 30-40% better cost-effectiveness.

The forthcoming Trainium 3, anticipated next year, promises doubled computational performance and a 40% improvement in energy efficiency.

Apart from tech giants like Meta, Microsoft, and Amazon unveiling new ASIC products, numerous firms and startups are introducing innovative ASIC offerings, bolstering large AI models' training and inference capabilities.

The competitive and cooperative dynamics between ASIC and GPU

Morgan Stanley emphasizes that ASICs' rise doesn't herald GPUs' decline; rather, both technologies are poised for long-term coexistence, offering optimal solutions tailored to diverse application scenarios.

The growing significance of ASICs in AI chips is evident from tech giants' active investment, and the field is gradually becoming a major competitive arena for leading enterprises.

Training large-scale language models (e.g., GPT-4, Llama 3) necessitates robust computational support, driving the growth of both GPU and ASIC markets.

Although ASICs and GPUs compete in certain aspects, they primarily coexist in a complementary state.

Amid surging demand for AI computing, ASICs and GPUs will each be deployed where their respective strengths count most.

ASICs excel at specific tasks, while GPUs offer flexibility and adaptability to evolving algorithms and applications.

In the foreseeable future, GPUs, owing to their maturity and mass production, will persist as a mainstream technology, whereas ASICs' rise will unfold gradually.

Looking ahead, GPUs and ASICs are anticipated to coexist across various application scenarios, with diversified computational power development emerging as a consensus trend.

However, technological development might be influenced by emerging fields like quantum computing.

For instance, Google's latest quantum chip, 'Willow,' has 105 qubits and can solve in mere minutes certain computational problems that would take a traditional supercomputer longer than the age of the universe, underscoring quantum computing's computational potential.

Conclusion:

While NVIDIA GPUs are currently favored by most cloud service providers, as ASIC design matures, these cloud giants may gain enhanced procurement bargaining power through self-developed ASICs in the coming years.

As AI technology evolves and market demands shift, ASICs and GPUs will continue to coexist and develop, each leveraging unique advantages across diverse applications to collectively propel the AI ecosystem's prosperity.
