Google's AI Large Model Revival Amid Skepticism and Ridicule

12/31 2024

This article is based on publicly available information and is intended solely for informational purposes, not as investment advice.

"I believe 2025 will be crucial. I believe we must recognize the urgency of this moment and need to accelerate our pace as a company. The stakes are high. These are disruptive times. In 2025, we need to relentlessly focus on unleashing the advantages of this technology and solving real user problems." These were the words of Google CEO Sundar Pichai at the company's 2025 strategy meeting, held on December 18, 2024. While the statement conveys a sense of urgency, the reality is more nuanced.

Google has just enjoyed a triumphant December after a difficult stretch. The pivotal force shaping Google's fortunes in 2023 and 2024 has been the rise of large models, a hotly contested frontier in which Google faced skepticism and ridicule. Although Google was an early mover in AI research, arguably the earliest among the so-called "Mag-7," it did not launch its first mature large-model product, Bard, until 2023, after OpenAI's GPT-3.5-powered ChatGPT had already taken off. Bard drew more ridicule than praise, and the stumble weighed on Google's stock price. To this day, Google has the lowest P/E ratio among the Mag-7, an unacceptable position for a company that began applying machine learning in 2001 and dominated the mobile internet era.

01 The Rocky Road of Large Models

【1】Early Starter, Late Bloomer

As a dominant player in the mobile internet era, Google has long been at the forefront of technical research and innovation, and it has led the way in deep learning and neural networks, fields where computing power and algorithms are paramount. In 2001, Google began using machine learning to correct misspelled search queries. It launched Google Translate in 2006 and open-sourced TensorFlow in 2015, making AI more accessible, scalable, and efficient. In 2016, DeepMind's AlphaGo defeated Go world champion Lee Sedol, turning AI from science fiction into reality.

That same year, Google unveiled the TPU (Tensor Processing Unit), a custom chip optimized for TensorFlow to accelerate machine learning workloads. Gemini 2.0, launched in December 2024, was trained on the sixth-generation TPU. In 2017, Google researchers introduced the Transformer architecture, laying the groundwork for generative AI systems. OpenAI's GPT series, from GPT-1 and GPT-2 through GPT-3.5, GPT-4, and o1, is built on the Transformer. Ironically, the first breakout product built on Google's own invention came from OpenAI, not Google.

【2】Premature Launch

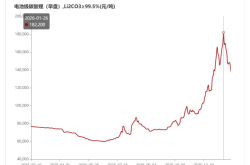

Responding to ChatGPT's sudden popularity in late 2022, Google announced Bard on February 6, 2023, and began an initial public rollout in the US and UK in March. Bard was built on LaMDA, a dialogue-focused large model unveiled in 2021 with 137 billion parameters. Its debut stumbled: a promotional demo contained a factual error and the live-streamed launch event in Paris underwhelmed, sending Google's stock down nearly 8%. Internal teams and the media criticized Bard's capabilities, and in our own tests it performed worse than Apple's Siri.

On April 10, 2023, Bard's underlying model was upgraded to PaLM (Pathways Language Model), offering stronger language understanding and generation. On May 10, Bard moved to PaLM 2, which improved logical reasoning and cut down on embarrassing conversational blunders. Google also began integrating large models into its products, adding generative AI features to Gmail and Workspace. Bard's first major upgrade came only in December 2023, when it switched to Gemini Pro, which Google reported outperformed GPT-3.5 on most benchmarks. Despite continuous iteration, Google's models had yet to reach the top tier and struggled to gain traction outside Google's own ecosystem, while third-party "wrapper" products built on OpenAI's models were already making money.

Google faces stiff competition. OpenAI leads, followed by Anthropic's Claude, which impresses with each iteration and has attracted investment from companies such as Amazon. Meta's unconventional decision to open-source its large models and the rise of Perplexity, an AI search product that reshapes how search results are delivered, pose further threats. Google had not faced such a crisis in years, and it stung all the more because the large-model race tests algorithms, computing power, and infrastructure, precisely the areas where Google is supposed to excel.

【3】The Giant Regains Momentum

On February 8, 2024, Bard was renamed Gemini, marking the start of Google's comeback. Gemini 1.5 Pro, first previewed in February, was rolled out broadly at Google I/O on May 14, 2024, followed by Gemini 2.0 Flash on December 11, 2024. Beyond catching up on core models, Google expanded into applications with NotebookLM, an AI note-taking app that gained its Audio Overview feature in September 2024. NotebookLM can understand and summarize uploaded material and generate conversational audio, which makes it well suited to podcast production. In December, NotebookLM received a significant upgrade and a premium tier, NotebookLM Plus.

We tested two podcast episodes produced with NotebookLM and found that the AI hosts' conversational skills surpassed those of entry-level podcasters. Although their grasp of the content felt noticeably "AI"-like, the tool's efficiency at producing audio is unmatched. It can be used for podcast production and for reading and explaining academic papers, lowering the barrier to complex content. Spotify even used NotebookLM to power the AI podcast in its 2024 Wrapped.

On the multimodal front, Google brought Imagen 2 image generation to Gemini in February 2024 but faced backlash over historically inaccurate images. After significant revision, the model was iterated to Imagen 3, released in August, which offers better detail accuracy, a wider range of styles, and richer textures. In May, Google unveiled Veo, a video generation model meant to compete with OpenAI's Sora. Although Veo delivered strong image quality, its videos had a pronounced "sci-fi" feel and lacked realism.

DeepMind developed GenCast, a weather model that produces forecasts up to 15 days ahead and, in DeepMind's evaluation, outperformed the leading operational forecasting system on most targets, which makes it valuable for early warnings of weather disasters. In October 2024, Google DeepMind's Demis Hassabis and John Jumper shared the Nobel Prize in Chemistry with David Baker, with their half of the prize recognizing the protein structure prediction model AlphaFold. Whether in meteorology or biomedicine, Google AI's reach into scientific research exceeds that of newcomers such as OpenAI.

【4】A Month of Harvest

After a year of struggle and refinement in 2024, Google found its rhythm and reaped the harvest in the final month. With Gemini 2.0, Google stole the spotlight from OpenAI's "12 Days of OpenAI" launch streak, and with the quantum chip Willow it reinforced its technological leadership. Before the December 11 launch of Gemini 2.0, Google quietly released the gemini-exp-1206 model, which quickly rose to the top of multiple LLM leaderboards. The bigger sensation was the December 11 launch of Gemini 2.0 Flash: likely not the complete version, but enough to restore Google's technological leadership.

Gemini 2.0 Flash offers strong reasoning and native multimodal support in a single release, a sharp contrast with OpenAI's incremental rollouts. Google says 2.0 Flash outperforms 1.5 Pro on key benchmarks at twice the speed, and it handles images, video, and audio. It can natively call tools such as Google Search, code execution, and third-party user-defined functions, and on some math and programming benchmarks it is reported to outperform OpenAI's models.

Alongside Gemini 2.0 Flash, Google announced related projects: an update to its multimodal AI assistant Project Astra, the browser assistant Project Mariner, and the coding assistant Jules. Project Astra, first shown in May 2024, now supports multiple languages, accents, and uncommon words, and is better integrated with Google's ecosystem. Project Mariner, an experimental Chrome extension, can understand page elements and complete tasks such as ordering products and filling out forms. Jules, built for developers, helps analyze code and offers guidance, and it integrates into GitHub workflows.

The same month also brought Veo 2 and an updated Imagen 3, the latest generations of Google's video and image models, further cementing Google's comeback in the large-model arena.

Veo 2, the advanced video model, has a better grasp of real-world physics, allowing it to generate high-definition videos with markedly greater detail and realism. Google also launched Deep Research, a research tool aimed at academic exploration that uses advanced reasoning to help users research topics and draft research reports. Based on my observations across social media, not only have students and educators from many disciplines quickly embraced Deep Research, but professionals doing complex technical work in enterprises are also actively testing it as their preferred large model.

These launches signal Google's return to the forefront of artificial intelligence and a decisive win in the current round of large-model competition. More importantly, thanks to its comprehensive product ecosystem, Google is positioned to outpace other vendors in the next phase of the competition, particularly in developing and deploying AI agents. Google's strength in large models extends beyond the performance and multimodal capabilities of its products to a full-stack approach spanning chips, training platforms, and downstream applications. Alongside the 2.0 Flash model, Google made its underlying core hardware, the sixth-generation TPU Trillium, generally available; Trillium powers both Gemini 2.0 training and inference.

Trillium is a cornerstone of Google Cloud's AI Hypercomputer, a supercomputing architecture that integrates performance-optimized hardware, open software, leading ML frameworks, and flexible consumption models. Compared with its predecessor, TPU v5e, Trillium delivers up to 4x faster training for dense LLMs (such as Llama-2-70b and GPT-3-175b) and up to 3.8x faster training for MoE models. Its host DRAM capacity is three times that of v5e, helping maximize performance and throughput. Trillium is now moving into practical use and is generally available to Google Cloud customers for their own large-model products. However, facing fierce competition from NVIDIA, Trillium so far leads mainly on paper specifications and has only one marquee large-model deployment. Its compatibility with upstream and downstream hardware, and its acceptance across the industry, have yet to be proven.

02 Google's Strengths and Concerns

【1】Strengths: Ecosystem and Financial Muscle

Google has always been an innovator, as exemplified by its former "20% time" policy, which let employees spend 20% of their working hours on passion projects. This culture produced numerous projects, some of which grew into lucrative products that still sustain Google's revenue today. The policy's lasting legacy underscores Google's commitment to innovation and has made the company fertile ground for new technologies and products. Beyond its innovation culture, Google's infrastructure, its computing and cloud architecture, and its talent pool are hard for competitors such as Meta and Amazon to match in the short term.

Besides the resources needed to develop large models, Google has an edge in the competition over downstream applications. YouTube, Google's video platform, is a natural application scenario for multimodal content. Google Search has introduced AI Overviews to compete with Perplexity AI. Waymo, Alphabet's self-driving unit, may also integrate voice-model products in the future. This broad product ecosystem lets Google explore large-model applications across AI agents, AI hardware, and robotics.

Crucially, Google's financial strength underpins its ambitions. In Q3 2024, Alphabet reported revenue of $88.3 billion, up 15% year over year (16% in constant currency), and net income of $26.3 billion, up 34%. Google Cloud revenue reached $11.4 billion, up 35%. Alphabet generated $17.6 billion in free cash flow in the quarter and ended it with $93 billion in cash and marketable securities. After two years of large-model competition, Google still holds nearly $100 billion in cash, easing concerns about paying for computing power, chips, and talent. Google has everything large models require in software and hardware, from concept to prototype to industrial-scale deployment. As long as management stays focused, large models contributing meaningfully to Google's revenue and share price is within reach.

【2】Concerns: Antitrust Risks

Google's share price is weighed down by antitrust lawsuits that threaten to break up its business. Its recent loss in the U.S. search antitrust case casts a shadow over the future of its core business. The U.S. Department of Justice (DOJ) has proposed that Google sell its Chrome browser and end default-search-placement agreements with companies such as Apple, and it could also seek a forced sale of the Android operating system. These remedies would hit Google's core search business hard by cutting off key traffic sources. Without these entry points, Google Search's market share, and with it search advertising revenue, would suffer. A forced sale of Android could also undermine the integrity of Google's mobile app ecosystem. In response, Google has proposed narrower remedies, such as making its default-search agreements with browser makers and Android partners non-exclusive and allowing default search settings to be revisited annually, to counter the perception of monopoly.

Recently, Japan's Fair Trade Commission was reported to be preparing to rule that Google's search practices violated Japan's Antimonopoly Act, a sign of mounting global antitrust scrutiny that may prompt other countries to take similar action against Google.

As Google has grown, the very factors that once propelled its rise have become sources of volatility. Facing intense internal and external competition and disruption to its core business, Google urgently needs a stable, capable management team. It is no surprise that Sundar Pichai told employees that the stakes in 2025 are high, marking it as a critical juncture for Google. Even under the antitrust cloud, Google has seized a rare window to establish a foothold in the wave of technological innovation and prepare for the true dawn of the AI era.