In the Era of Edge AI, Integrated Memory and Computing Become the New Norm, Catalyzing a Storage "Chip" Revolution

01/06 2025

AI technology is rapidly being integrated into terminal products, driving innovation across terminal hardware, most notably consumer electronics. In 2024, edge AI products such as AI phones and AI PCs opened new markets, stimulated consumer demand with smarter features, began to change daily life, and accelerated innovation across upstream and downstream industrial chains.

Apple introduced Apple Intelligence to the iPhone in 2024. Although a recent SellCell report found that most users believe Apple Intelligence currently adds little value, this does not change the trajectory of smart devices: less dependence on the cloud and a greater focus on edge AI.

To bolster edge AI competitiveness, robust hardware performance is paramount. Chip requirements emphasize computing power, memory, power consumption, process, area, and heat dissipation, with storage chips playing a pivotal role.

To further enhance the iPhone's edge AI performance, Samsung has, according to South Korean media, begun researching a new packaging method for LPDDR (low-power) DRAM at Apple's request.

Previously, Apple's LPDDR DRAM was stacked directly on the SoC, which keeps the package compact and reduces both power consumption and latency. This is a common packaging method for consumer electronics with limited board space. By packaging the DRAM and the SoC separately, Apple aims to raise data transmission rates and increase the number of parallel data channels, thereby increasing memory bandwidth and enhancing the iPhone's edge AI capabilities.

Reports also suggest that Samsung may apply LPDDR6-PIM (Processing-In-Memory) technology, tailored for device-side AI, to iPhone DRAM.

The application of AI has broadened from the cloud to edge devices, creating new demand in the storage market and driving continuous innovation in edge-side storage chips.

From Cloud to Edge: AI Ignites Storage "Chip" Demand

In the cloud, AI relies on large-scale data centers and efficient storage systems for data storage, transmission, and processing. Edge AI, however, requires real-time, low-power data processing on devices with limited computational and storage resources. This poses new challenges for the capacity, speed, and energy efficiency of storage chips and drives innovation in storage technology.

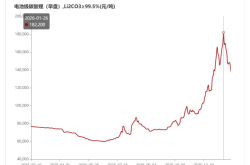

According to TechInsights' "2025 Memory Market Outlook," the memory market, encompassing DRAM and NAND, is projected to witness significant growth in 2025, primarily driven by the accelerated adoption of AI and related technologies. The report further states that the proliferation of edge AI will spur demand for memory solutions tailored to these new functionalities.

Source: TechInsights

Looking at this year's market, CFM flash market data shows a 4% year-on-year increase in storage demand from mobile phones and an 8% increase from PCs. In addition, edge AI is already driving storage chip upgrades in these terminal devices.

For AI phones, 16GB of DRAM is considered the baseline configuration. Similarly, in AI PCs, the need for faster data transmission, larger capacity, and higher bandwidth is driving demand for mainstream LPDDR5X and LPDDR5T products. For instance, Microsoft requires at least 16GB of memory for its AI PCs, and AI PCs equipped with new processors typically step up to 32GB, leaving ample room for AI model deployment and upgrades.
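The link between model size and DRAM capacity can be made concrete with some rough arithmetic. The sketch below is only an illustration: the 7-billion-parameter model, the quantization levels, and the 6GB reserved for the operating system and apps are hypothetical values chosen for the example, not figures from any vendor, and the estimate counts weights only (no activations or KV cache).

```python
def model_footprint_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes).

    Counts weights only; activations and the KV cache are ignored.
    """
    return num_params * bits_per_weight / 8 / 1e9


def fits_in_dram(num_params: float, bits_per_weight: int,
                 dram_gb: float, reserved_gb: float) -> bool:
    """True if the weights fit in the DRAM left after the OS/app reservation."""
    return model_footprint_gb(num_params, bits_per_weight) <= dram_gb - reserved_gb


if __name__ == "__main__":
    PARAMS = 7e9        # hypothetical 7B-parameter model
    DRAM_GB = 16        # baseline capacity mentioned in the article
    RESERVED_GB = 6     # hypothetical share kept for the OS and other apps
    for bits in (16, 8, 4):
        size = model_footprint_gb(PARAMS, bits)
        ok = fits_in_dram(PARAMS, bits, DRAM_GB, RESERVED_GB)
        print(f"{bits:>2}-bit weights: {size:.1f} GB, fits in {DRAM_GB} GB device: {ok}")
```

Under these assumptions the weights take about 14GB at 16-bit, 7GB at 8-bit, and 3.5GB at 4-bit, which suggests why a 16GB baseline leaves room for a quantized model but little headroom for an unquantized one.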

This year also saw the launch of AI smart glasses and AI TWS earphones. With their added functionality, AI TWS earphones need serial NOR Flash to store more firmware and program code. Compared with ordinary TWS earphones, several recently released AI earphones have roughly doubled their NOR Flash capacity to support built-in AI features.

For edge AI devices, computing power and data together enable diverse intelligent features, so capable storage chips are needed to read and write that data efficiently.

Technological innovation in products often has a ripple effect. Delivering a specific feature requires coordinated updates across the entire terminal configuration. Consumers may see only a single, user-friendly feature, but years of continuous iteration in software and hardware lie beneath the surface. The memory advances behind edge AI features illustrate this well: larger capacity, higher read/write speeds, and lower power consumption all reflect the iterative progress of process technology across the semiconductor industry.

Collaborative Evolution of Storage Chips and Edge AI Devices

Storage products are ubiquitous in our daily lives, primarily serving data storage, reading, writing, and erasure. Categorized by storage media, they include Random Access Memory (RAM), Read-Only Memory (ROM), and Flash Memory. Currently, the primary types of storage chips utilized in various smart terminals are as follows:

DRAM

Dynamic Random Access Memory (DRAM) is the most common type of system memory, holding temporary data and data being processed. It represents the largest single product segment of the memory market. In AI smartphones, AI PCs, and other smart terminals, DRAM provides rapid data access and processing capabilities. As AI terminals adopt DRAM with larger capacities and higher read/write speeds, demand for high-performance DRAM continues to rise.

NAND Flash

NAND Flash is a non-volatile memory used for long-term data storage. In AI phones, AI PCs, and AI Pads, NAND Flash serves as internal storage for operating systems, applications, and user data. As edge devices' operating systems increasingly host large models and other AI applications, higher NAND Flash capacities will be required for long-term storage.

NOR Flash

NOR Flash, another non-volatile memory, is commonly used to store boot code and firmware. It is particularly important in devices that require fast boot-up and code execution, with AI TWS earphones being a typical application. Rising demand for NOR Flash capacity across AI hardware is a clear trend, especially for medium- and large-capacity NOR Flash.

UFS

UFS (Universal Flash Storage) is a high-performance flash storage solution used in high-end mobile devices. UFS 4.0 offers a maximum read speed of 4200MB/s and a maximum write speed of 2800MB/s. Edge AI performance depends on efficient data flow, which is precisely what UFS 4.0 supports. As edge AI software continues to be optimized and UFS technology reaches more edge devices, edge AI devices will show stronger learning abilities and faster response speeds. In Kioxia's words on the prospects for UFS, "Modern mobile phones are entering a new era of collaborative development between on-device AI and advanced storage technology."
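To see what a 4200MB/s read speed means for on-device AI, consider how long it takes to bring a model from flash into memory. The sketch below is a best-case estimate only: the 3.5GB model size (for example, a hypothetical 7-billion-parameter model with 4-bit weights) and the `efficiency` factor are assumptions for illustration, and real load times also depend on the file system, controller, and access pattern.

```python
def load_time_s(model_bytes: float, read_mb_per_s: float,
                efficiency: float = 1.0) -> float:
    """Best-case time in seconds to read a model from storage.

    read_mb_per_s is the peak read speed in MB/s (1 MB = 1e6 bytes).
    efficiency is a hypothetical 0..1 factor for the share of peak speed
    actually achieved; 1.0 means the full quoted speed.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return model_bytes / (read_mb_per_s * 1e6 * efficiency)


if __name__ == "__main__":
    MODEL_BYTES = 3.5e9      # hypothetical model size
    UFS4_READ_MB_S = 4200    # maximum read speed quoted in the article
    print(f"at peak speed: {load_time_s(MODEL_BYTES, UFS4_READ_MB_S):.2f} s")
    print(f"at half speed: {load_time_s(MODEL_BYTES, UFS4_READ_MB_S, 0.5):.2f} s")
```

At the quoted peak speed, the hypothetical 3.5GB model would load in roughly 0.83 seconds; at half that speed it would take about 1.67 seconds.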

LPDDR

LPDDR (Low Power Double Data Rate) is a low-power variant of DRAM designed to reduce energy consumption in mobile devices. LPDDR5 and LPDDR5X offer higher data transfer rates and lower power consumption than earlier generations. LPDDR6 is on the way and is designed with AI computing requirements in mind, supporting edge AI devices with higher frequencies and bandwidth.

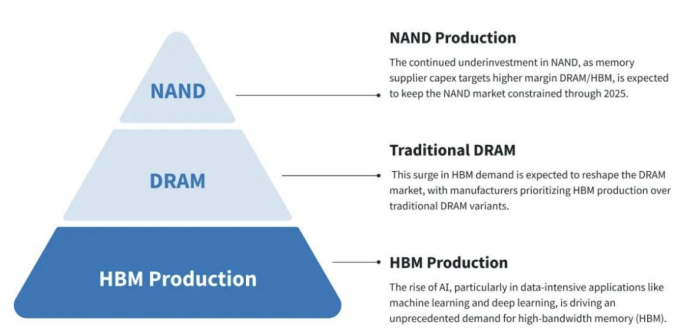
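The relationship between data rate, bus width, and memory bandwidth is simple arithmetic: peak bandwidth equals transfers per second multiplied by bytes per transfer. The sketch below uses the top data rates of LPDDR5 (6400 MT/s) and LPDDR5X (8533 MT/s); the 64-bit and 128-bit bus widths are assumptions for illustration, since the actual width depends on the device.

```python
def peak_bandwidth_gb_s(data_rate_mt_s: float, bus_width_bits: int) -> float:
    """Theoretical peak memory bandwidth in GB/s (1 GB = 1e9 bytes).

    data_rate_mt_s: transfers per second, in millions (MT/s).
    bus_width_bits: total width of the memory interface in bits.
    """
    bytes_per_transfer = bus_width_bits / 8
    return data_rate_mt_s * 1e6 * bytes_per_transfer / 1e9


if __name__ == "__main__":
    # Bus widths here are assumed values, not a statement about any product.
    for name, rate in (("LPDDR5", 6400), ("LPDDR5X", 8533)):
        for width in (64, 128):
            bw = peak_bandwidth_gb_s(rate, width)
            print(f"{name} @ {rate} MT/s, {width}-bit bus: {bw:.1f} GB/s")
```

With a 64-bit bus this gives about 51.2 GB/s for LPDDR5 and 68.3 GB/s for LPDDR5X. Doubling the bus width doubles the peak figure, which is why adding parallel data channels is one route to higher bandwidth.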

HBM

HBM (High Bandwidth Memory) needs little introduction. It is essentially a high-performance 3D-stacked DRAM with extremely high bandwidth and storage density, suited to AI and high-performance computing workloads that process massive amounts of data. It is used primarily in servers and data centers.

Why list it among edge device storage? Because leading memory manufacturers are already bringing HBM to edge devices. Previous reports indicate that SK Hynix is introducing HBM into the automotive sector. For mobile edge devices, Samsung and SK Hynix are also developing edge HBM products, with preliminary estimates pointing to commercialization by 2026.

Driven by the widespread adoption of edge AI devices, storage chips of every size are meeting new market demand and upgrading continuously to satisfy the storage requirements of these devices. So far, storage products have improved mainly in the following areas as they evolve alongside edge AI devices:

Data Processing Speed and Memory Bandwidth

Edge AI applications demand real-time data processing and quick response, and delivering that real-time experience depends on storage chips with very high read/write speeds. With large models now running on the edge, demand for memory bandwidth has also surged: storage chips must complete large volumes of reads and writes quickly and support highly concurrent data access. Higher bandwidth eases the memory wall problem and unlocks the performance of large models on resource-limited edge devices. In addition, optimizing storage structures and algorithms improves storage space utilization and read/write efficiency.
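A back-of-the-envelope calculation shows why bandwidth, rather than raw compute, often limits large models on the edge. The sketch below rests on a common simplifying assumption: when a dense language model generates text, every weight is read from memory once per generated token, so the token rate cannot exceed memory bandwidth divided by model size. The 3.5GB model and the bandwidth figures are hypothetical values for illustration (68.264 GB/s corresponds to 8533 MT/s on an assumed 64-bit bus).

```python
def max_tokens_per_s(bandwidth_gb_s: float, model_gb: float) -> float:
    """Upper bound on tokens generated per second when memory-bound.

    Assumes every weight is read once per generated token and ignores
    caches, the KV cache, and compute time, so real rates will be lower.
    """
    if model_gb <= 0:
        raise ValueError("model_gb must be positive")
    return bandwidth_gb_s / model_gb


if __name__ == "__main__":
    MODEL_GB = 3.5  # hypothetical quantized model size
    for bw in (51.2, 68.264, 136.528):  # assumed peak bandwidths in GB/s
        rate = max_tokens_per_s(bw, MODEL_GB)
        print(f"{bw:7.1f} GB/s -> at most {rate:.1f} tokens/s")
```

Under these assumptions, a 68.264 GB/s memory system caps the hypothetical model at about 19.5 tokens per second no matter how fast the processor is, and doubling the bandwidth doubles the ceiling.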

Energy Efficiency Management and Miniaturization

In the joint optimization of edge AI and storage chips, energy efficiency and chip miniaturization are essential to keeping edge smart devices running efficiently. The storage goal for edge AI devices is to load AI models from storage into memory efficiently, which means achieving the highest possible throughput without raising power consumption. Reducing power consumption and saving space are concerns for every Original Equipment Manufacturer (OEM). For instance, Micron Technology's LPDDR5X for AI PCs reduces power consumption by 58% and saves 64% of space compared with traditional SODIMM products.

Exploring More Possibilities on the Edge for Storage

The rapid development of edge AI devices is driving performance improvements across storage chips, and the development of new storage technologies is accelerating as well.

For example, MRAM (Magnetoresistive Random Access Memory) is a memory type based on the tunneling magnetoresistance effect. It offers very high read/write endurance, fast write speeds, and low power consumption, combining non-volatility with high-speed read/write capabilities. It is well suited to local storage on edge devices that need both rapid response and persistent data.

Because MRAM integrates readily with logic chips, it also aids device miniaturization. As edge devices take on more applications, MRAM is poised to meet the requirements of traditional memory applications and, combined with other memories, to balance performance, capacity, and cost in edge AI device storage.

Another direction gaining attention as AI applications spread is the integration of storage and computing, which is further subdivided, according to where the emphasis lies, into near-memory computing, in-memory processing, and in-memory computing. As demands on data processing speed and storage efficiency rise, traditional architectures that separate storage from computing may no longer meet edge AI's real-time and energy efficiency requirements.

Integrating AI engines like NPUs into memory cores to handle computing and processing functions avoids latency caused by frequent memory-processor data transmission in traditional architectures, enabling faster data access speeds and lower energy consumption. This is crucial for edge applications requiring local real-time responses. High-performance, power-efficient, and real-time mobile edge AI devices, such as AI phones, AI PCs, AI wearable devices, and AI smart homes, may adopt this technological path in the future.
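The benefit of computing inside memory can be illustrated by counting the bytes that must cross the memory bus for one matrix-vector multiplication, the core operation of neural network inference. The sketch below is a deliberately idealized model, not a description of any real PIM product: it assumes that in the conventional case every weight travels to the processor, while in the in-memory case the weights never leave the memory and only the input and output vectors cross the bus. The 4096x4096 matrix and 1-byte elements are hypothetical.

```python
def bus_traffic_bytes(rows: int, cols: int, bytes_per_elem: int,
                      in_memory_compute: bool) -> int:
    """Bytes crossing the memory bus for one matrix-vector multiply.

    Idealized model: command overhead, caching, and partial results
    are ignored.
    """
    vector_in = cols * bytes_per_elem    # input vector
    vector_out = rows * bytes_per_elem   # output vector
    if in_memory_compute:
        # Weights stay inside the memory; only the vectors move.
        return vector_in + vector_out
    # Conventional: every weight is also read across the bus.
    weights = rows * cols * bytes_per_elem
    return weights + vector_in + vector_out


if __name__ == "__main__":
    ROWS, COLS, ELEM = 4096, 4096, 1  # hypothetical layer with 8-bit weights
    conventional = bus_traffic_bytes(ROWS, COLS, ELEM, in_memory_compute=False)
    pim = bus_traffic_bytes(ROWS, COLS, ELEM, in_memory_compute=True)
    print(f"conventional: {conventional} bytes")
    print(f"in-memory:    {pim} bytes")
    print(f"reduction:    {conventional / pim:.0f}x")
```

In this idealized case the bus traffic drops from about 16.8MB to 8KB per multiplication. Real designs will see smaller gains, but the example shows why avoiding weight movement reduces both latency and energy.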

Final Thoughts

Edge AI presents new development opportunities and challenges for the storage chip market. As edge AI functionalities expand and deepen, storage performance and power consumption demands will soar, prompting the entire industry chain to foster technological innovation to maintain competitiveness. Additionally, with emerging new storage technologies and burgeoning storage chip demand in edge AI devices, market competition will intensify. This presents a rising opportunity for domestic storage chip manufacturers.

To return to the article's opening: edge AI devices have yet to achieve true intelligence, but as the saying goes, "People tend to overestimate the short term and underestimate long-term technological capabilities." In the future, products with meaningful device-side AI capabilities will deliver features that exceed expectations. As edge AI device features mature and application scenarios broaden, storage chips will see new prospects and opportunities.