2025 AI Agent Conundrum: Distinguishing Genuine Intelligence from Mere Mimicry

01/17 2025

Editor's Note: Embrace transformation to navigate change, and foresee the unseen for future success. Shidao, Daxiang News, and Daxiang Wealth, in collaboration with Tencent News and Tencent Technology, present the 2024 year-end planning series "Under the Changing Landscape". Looking back on 2024 and ahead to 2025, the series seeks insights that offer some certainty about the future.

Authors: Shidao, Rika

Editors: Zheng Kejun, Hao Boyang from Tencent Technology

In 2023, Stanford University unveiled a groundbreaking AI experiment: "Town Simulation Game", better known as the Generative Agents project set in the virtual town of Smallville. In this virtual town, 25 AI characters autonomously converse, form relationships, and make plans, showing impressive social capabilities. The experiment fueled public anticipation for AI Agents: intelligent assistants capable of acting autonomously and making their own decisions.

A year later, "AI Agent" has become an industry buzzword. Tech giants like Microsoft and Google have taken action, while startups rush to launch all kinds of "Agent" products. On closer inspection, however, many of these so-called "Agent" products reveal an awkward truth: they are far from genuine Agents and more closely resemble chatbots with natural language understanding.

This "form over function" phenomenon also shows up in AI hardware. In October 2024, smart-ring brand Oura launched the Oura Ring 4, which adds AI features. Soon afterward, Oura's valuation rose above 5 billion USD, making it one of the most commercially successful "AI hardware" vendors. Yet the prevailing view is that Oura's success has little to do with AI; its core value lies in basic health tracking. By contrast, hardware built around AI, such as the Humane AI Pin and the Rabbit R1, had disappointing launches.

What exactly is an AI Agent? Is it the Prompt Agent that pops up when you open a large model app? A professional programming Agent such as Cursor? Or perhaps Iron Man's all-purpose assistant Jarvis?

Jon Turow, a partner at the American VC firm Madrona, once noted: "When you speak with enough practitioners, you'll encounter a multitude of different concepts, all called Agents."

If we liken the development of AI Agents to a marathon, where are they headed in 2025?

2024 AI Agent Observation: A Mixed Bag of Promise and Hype

Scene of Excitement: Players from All Sides Enter the Fray

In the first half of 2024, the price war for large models raged on; in the second half, the battle for AI Agents intensified.

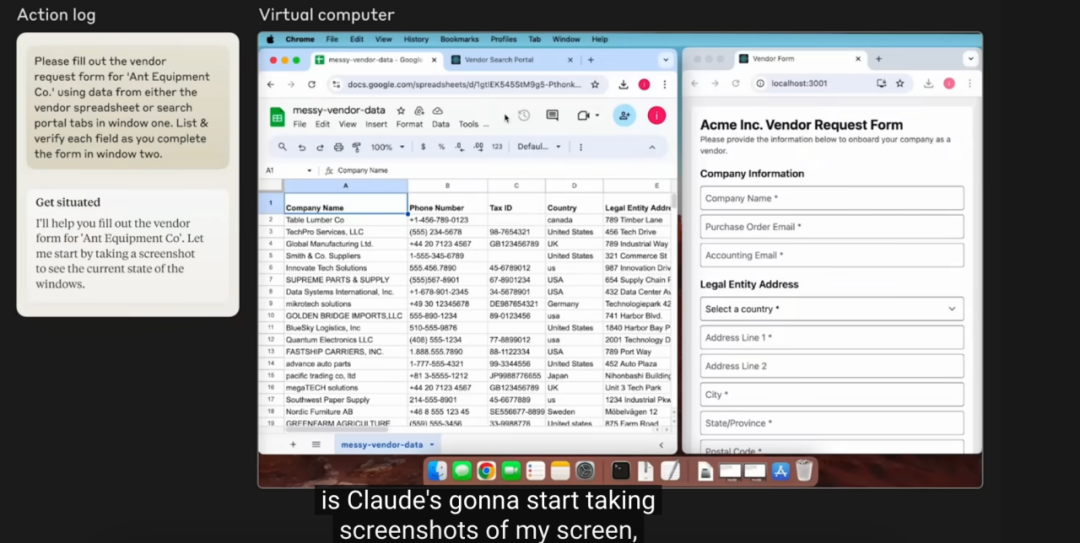

Overseas, tech giants such as OpenAI, Anthropic, Microsoft, and Google announced Agent-related progress, treating their Agent capabilities as key assets. In October, Anthropic launched "Computer Use", an AI Agent capability it says can "operate a computer like a human". This special API lets developers direct Claude to complete computer tasks such as viewing the screen, moving the mouse, clicking buttons, and typing. Developers can use it to turn written instructions into concrete computer commands and automate tasks.

(Image: Anthropic developer demonstrating Computer Use)

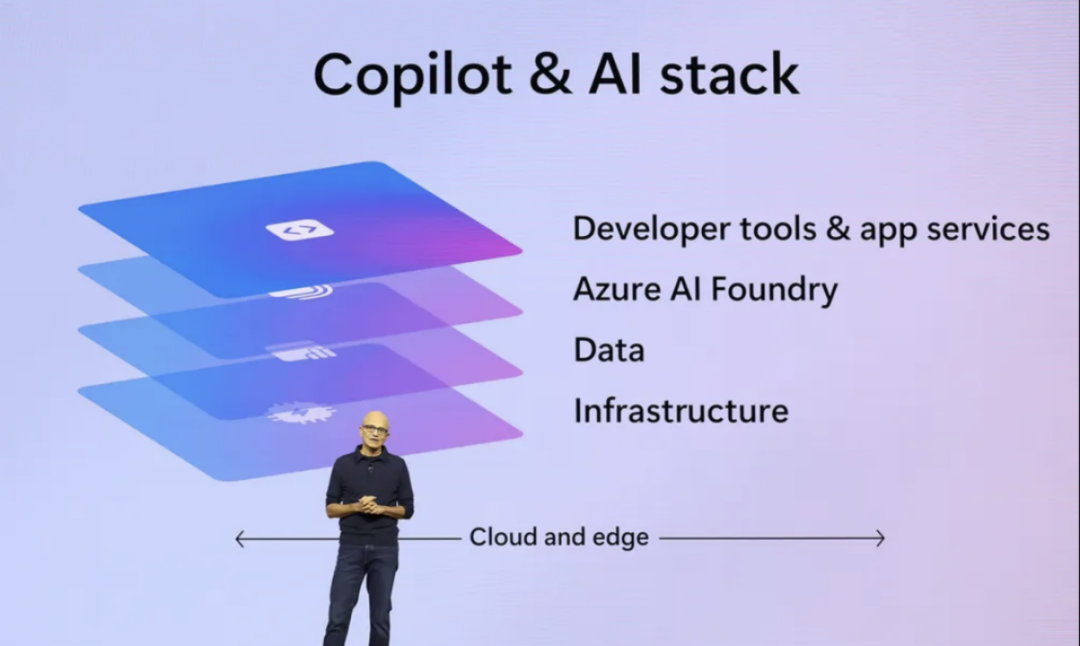

Microsoft is also a pivotal driver of AI Agents. In October 2024, Microsoft announced a significant plan: to develop and deploy 10 AI Agents for the Dynamics 365 business application platform, primarily serving key areas such as sales, accounting, and customer service in enterprises. According to the schedule, these AI Agents will open for public testing by year's end, with the testing phase expected to continue into early 2025.

(Image: Microsoft CEO showcasing Copilot and AI stack)

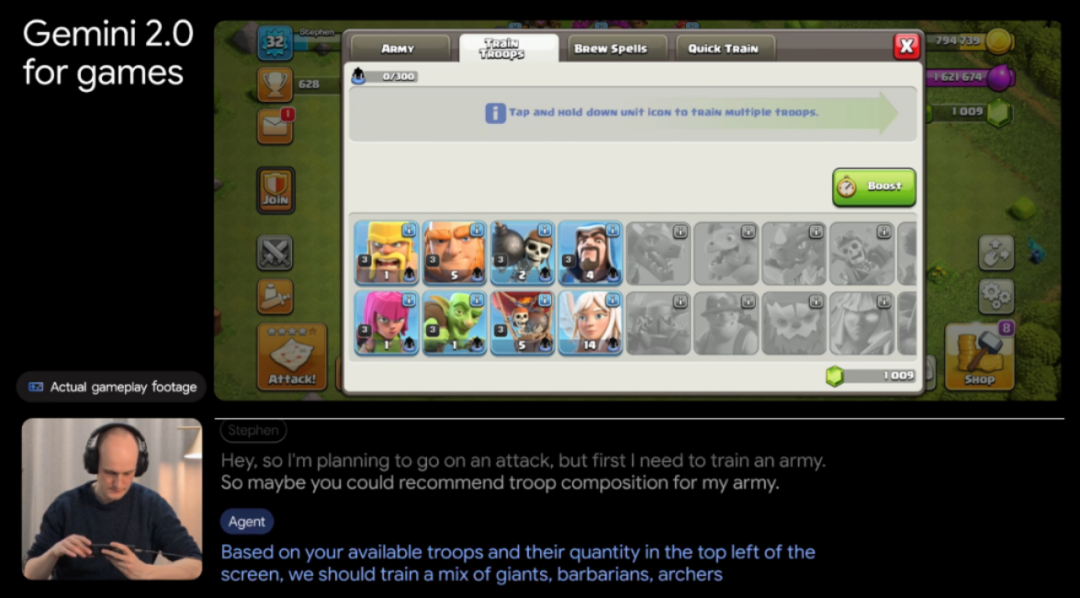

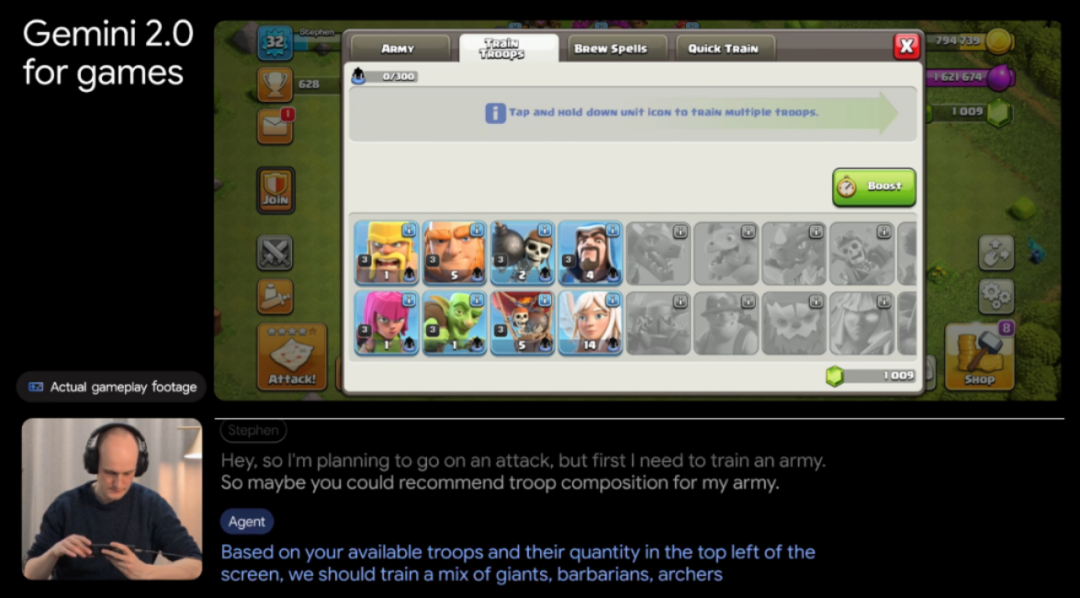

Google's response was slower but caught up by year's end. In December, Google released its new multimodal large model, Gemini 2.0, and built three AI Agents on it: Project Astra, a universal AI assistant; Project Mariner, a browser assistant; and Jules, a programming assistant. Jules can be integrated directly into GitHub workflows as an autonomous agent, analyzing complex codebases, implementing fixes across multiple files, and preparing detailed pull requests without continuous human supervision. In a "Clash of Clans" demonstration, Google's AI Agent not only explained troop characteristics and suggested unit combinations but also pulled information from Reddit to advise on character selection.

(Image: Player interacting with Google AI Agent)

Although OpenAI leads in foundational models, its Agent rollout has been somewhat slower. In July, OpenAI updated its five-level roadmap toward AGI (Chatbots, Reasoners, Agents, Innovators, Organizations), indicating that it was at Level 1 and approaching Level 2; Level 3 corresponds to AI Agents.

(Image: OpenAI's 5 stages of AI development)

OpenAI is expected to launch a new AI Agent, Operator, in January 2025. The system is designed to perform complex tasks automatically, including writing code, booking travel, and shopping online. Reports suggest Operator may build on a Computer Use-style approach, simplifying use and broadening the range of scenarios where AI Agents apply.

In China, major players such as Baidu, Alibaba, Tencent, and Zhipu have also entered the field. On the business (B-end) side, Baidu's Wenxin Agent platform, Tencent Yuanqi, the iFLYTEK Spark Agent Creation Center, Tongyi Agents, and ByteDance's Coze offer Agent-building platforms for enterprise users, and have added AI Agent entry points to their AI assistant interfaces.

On the consumer (C-end) side, Alipay's AI app Zhixiaobao and Zhipu's AutoGLM have sparked consumer interest. In demonstrations, AutoGLM can read and understand on-screen information, plan tasks, and simulate common phone operations: given a simple text or voice command, it operates the phone as a person would, liking posts on WeChat Moments, ordering takeout on Meituan, or booking hotels on Ctrip.

Sober Reality: Defining the True Nature of AI Agents

If you only witness the above exciting developments, you might conclude that 2024 was the pinnacle year for AI Agents. However, in reality, only a handful of AI Agents can truly be relied upon by users.

Consider for a moment: which AI Agents do you actually like to use? If you're a programmer, the answer might be Cursor. But ask which large AI models you like to use, and the answers vary widely: ChatGPT, Gemini, Claude, Kimi, and so on.

At least judging by everyday experience, today's much-touted AI Agents are still largely "empty hype".

The main reasons are "unreliability" and "lack of substance". AI Agents rely on LLM "black boxes", which are inherently unpredictable. Agent workflows chain multiple AI steps together, so an error at any step propagates to everything after it, which is especially damaging for tasks that require precise outputs. As a result, users find it hard to trust that an Agent will consistently give accurate, contextually appropriate responses.
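Why chaining steps hurts reliability can be made concrete with a little arithmetic. The sketch below assumes each step succeeds independently with the same probability; the 95% figure is purely illustrative, not a measured benchmark result.

```python
def chain_success_rate(step_success: float, num_steps: int) -> float:
    """Probability that every step of a chain succeeds, assuming independent steps."""
    if not 0.0 <= step_success <= 1.0:
        raise ValueError("step_success must be a probability in [0, 1]")
    if num_steps < 0:
        raise ValueError("num_steps must be non-negative")
    return step_success ** num_steps


if __name__ == "__main__":
    # Illustrative per-step accuracy of 95%; real agents vary by task and model.
    for steps in (1, 5, 10, 20):
        print(f"{steps:>2} steps -> {chain_success_rate(0.95, steps):.1%}")
```

Under these assumptions a 10-step workflow succeeds only about 60% of the time (0.95 to the 10th power is roughly 0.599), even though each individual step looks dependable.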

LangChain's State of AI Agents report is an important reference. Among more than 1,300 respondents, performance quality (41%) was the top concern, far ahead of cost (18.4%) and security (18.4%). Even among small companies, which are traditionally cost-conscious, 45.8% ranked performance quality first, versus only 22.4% for cost. The report also noted that a major challenge in bringing AI Agents into production is that developers struggle to explain Agents' capabilities and behavior to their teams and stakeholders.

Moreover, while the LLMs underlying AI Agents are good at tool use, they are neither fast nor cheap, especially when loops and automatic retries are involved. The WebArena leaderboard benchmarks LLM agents on realistic web tasks: even the best-performing agent, SteP, achieved a success rate of only 35.8%, and GPT-4 reached just 14.9%.

So, can the AI Agents on the market, which cannot yet "fully take care of themselves", be considered genuine Agents?

Andrew Ng's view makes this easier to understand: AI Agents come in degrees. He proposed the term "Agentic systems", arguing that the adjective "Agentic" better captures the essence of such agents. Just as autonomous vehicles progress from L1 to L4, Agents evolve in stages.

Yohei Nakajima, founder of BabyAGI, also provides valuable insights into the classification of AI Agents:

1. Handcrafted Agents: Chains composed of Prompts and API calls, with some autonomy but many constraints.

Characteristics: Assembly line robots that complete tasks according to fixed steps.

Example: A dedicated ticketing assistant. Tell it your flight requirements and it calls an API to search and complete the booking directly, but it struggles with complex itinerary planning.

2. Professional Agents: Dynamically decide what to do within a set of task types and tools, with fewer constraints than Handcrafted Agents.

Characteristics: Skilled craftsmen who can adeptly use tools in specific fields (like carpentry), not only making furniture according to requirements but also adjusting designs and sourcing materials based on actual needs.

Example: AutoGPT breaks complex problems down using chain-of-thought (CoT) reasoning and dynamically chooses a solution path. Given a market research task, AutoGPT can automatically decompose it into sub-tasks such as "search trends", "organize data", and "generate reports", then complete them.

3. General Agents: The AGI of Agents—currently still a theoretical concept and unrealized.

Characteristics: Versatile assistants, like Iron Man's Jarvis. You can ask it any question, and it can not only understand your needs but also dynamically adapt by combining knowledge and the environment to provide innovative solutions.

Example: No truly realized products yet; related research includes stronger multimodal interaction and long-term memory optimization.
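The difference between the first two tiers can be sketched in Python. Every tool and the `choose_next_tool` policy below are hypothetical stand-ins: in a real Professional Agent, an LLM would make the choice that the keyword rule makes here.

```python
from typing import Callable, Dict, List, Optional


# Hypothetical tools; a real agent would call booking or search APIs here.
def search_flights(query: str) -> str:
    return f"flights matching '{query}'"


def book_flight(flights: str) -> str:
    return f"booked: {flights}"


def search_trends(topic: str) -> str:
    return f"trend data for '{topic}'"


def write_report(data: str) -> str:
    return f"report based on {data}"


def handcrafted_ticket_agent(request: str) -> str:
    """Handcrafted Agent: the same hard-coded steps, in the same order, every time."""
    return book_flight(search_flights(request))


TOOLS: Dict[str, Callable[[str], str]] = {
    "search_flights": search_flights,
    "book_flight": book_flight,
    "search_trends": search_trends,
    "write_report": write_report,
}


def choose_next_tool(task: str, history: List[str]) -> Optional[str]:
    """Stand-in for an LLM policy; a real Professional Agent would ask the model."""
    if "market" in task:
        plan = ["search_trends", "write_report"]
    else:
        plan = ["search_flights", "book_flight"]
    return plan[len(history)] if len(history) < len(plan) else None


def professional_agent(task: str, max_steps: int = 5) -> str:
    """Professional Agent: picks the next tool at run time from a fixed toolbox."""
    history: List[str] = []
    result = task
    for _ in range(max_steps):
        tool = choose_next_tool(task, history)
        if tool is None:
            break
        result = TOOLS[tool](result)
        history.append(tool)
    return result


print(handcrafted_ticket_agent("NYC to SFO"))
print(professional_agent("market research on EVs"))
```

The structural difference is what matters: the handcrafted function hard-codes its call sequence, while the professional loop consults a policy before each step and can take a different path for a different task.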

At this stage, Handcrafted (prompt-based) Agents are the most prevalent, appearing as the "Agents" found in nearly every large model app. Professional Agents in vertical fields are on the verge of a breakout and are highly favored by capital for their practicality. The Agent people truly hope for, an all-purpose assistant like Jarvis, still awaits breakthroughs in key technologies. In the near term, then, we will see continued technological evolution across the intermediate levels, akin to "L1 to L4".

Where Have AI Agent Underlying Technologies Evolved This Year?

According to the formula proposed by Lilian Weng: Agent = LLM + Memory + Planning skills + Tool use

Imagine you're a five-star chef. The LLM is your knowledge base, holding recipes from every cuisine. Memory is your chef's notebook, recording diners' taste preferences and past lessons. Planning is your cooking plan, deciding, based on what is ordered, whether to fry first and then bake or boil first and then fry. Tools are your kitchen utensils: knowing which knife (software) to reach for helps you handle complex tasks.
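Lilian Weng's formula can be read as a class with four parts. The skeleton below is a hypothetical illustration: the LLM is a stub function, and the assumption that the model replies with comma-separated tool names is made only to keep the sketch runnable.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Agent:
    """Agent = LLM + Memory + Planning + Tool use, with every component stubbed out."""
    llm: Callable[[str], str]                          # knowledge: prompt in, text out
    tools: Dict[str, Callable[[str], str]]             # the kitchen utensils
    memory: List[str] = field(default_factory=list)    # the chef's notes

    def plan(self, goal: str) -> List[str]:
        # Planning: assume the LLM replies with comma-separated tool names.
        reply = self.llm(f"Goal: {goal}\nPast notes: {self.memory}\nTools: {list(self.tools)}")
        return [step.strip() for step in reply.split(",") if step.strip() in self.tools]

    def run(self, goal: str) -> str:
        result = goal
        for step in self.plan(goal):
            result = self.tools[step](result)          # Tool use
            self.memory.append(f"{step}: {result}")    # Memory: record each outcome
        return result


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    return "chop, saute"


chef = Agent(llm=fake_llm, tools={"chop": lambda x: f"chopped {x}",
                                  "saute": lambda x: f"sauteed {x}"})
print(chef.run("onions"))   # sauteed chopped onions
```

Because memory is passed back into the planning prompt, later plans can in principle draw on earlier outcomes; with the stub LLM here, the plan is always the same.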

The breakthrough of AI Agents depends on the progress of various technologies.

The first is the LLM. Even before a more powerful "brain" such as GPT-5 arrives, OpenAI has tapped into the power of reasoning engines.

In October 2024, Noam Brown, a senior research scientist at OpenAI known as the father of poker AI, said that in poker, letting an AI model think for 20 seconds delivered the same performance boost as scaling the model up 100,000 times and training it 100,000 times longer.

The idea Brown was pointing to is often described as System 1/System 2 thinking, and it lies behind the "reasoning ability" of OpenAI's o1.

System 1, or "fast thinking", lets you recognize an apple as a fruit without conscious effort. System 2, or "slow thinking", requires you to work through steps, as when solving 17 × 24, to reach an accurate answer.
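Written out, the slow, System 2 route through that example splits the problem into steps that are each easy to check:

```latex
17 \times 24 = 17 \times (20 + 4) = 340 + 68 = 408
```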

Researchers at Google DeepMind have recently applied this idea to AI Agents with the Talker-Reasoner framework. System 1 (the Talker) runs as the default "fast mode", while System 2 (the Reasoner) acts as a standby engine. When System 1 is unsure, it hands the task to System 2. This "dual-engine" design helps with complex, lengthy tasks, departs from how traditional AI Agents execute, and can significantly improve efficiency.
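A minimal sketch of the fast/slow hand-off described above, assuming the fast path reports a confidence score and a fixed threshold decides when to escalate. This is a simplified illustration, not DeepMind's Talker-Reasoner implementation; `fast_lookup` and `slow_multiply` are hypothetical stand-ins for the two systems.

```python
from typing import Callable, Dict, Tuple

FastFn = Callable[[str], Tuple[str, float]]   # returns (answer, confidence in [0, 1])
SlowFn = Callable[[str], str]


def dual_process_answer(query: str, fast: FastFn, slow: SlowFn,
                        threshold: float = 0.8) -> Tuple[str, str]:
    """Try the fast path first; escalate to the slow path when confidence is low."""
    answer, confidence = fast(query)
    if confidence >= threshold:
        return answer, "system1"
    return slow(query), "system2"


# Hypothetical fast path: instant recall, confident only on familiar questions.
CACHE: Dict[str, str] = {"is an apple a fruit?": "yes"}


def fast_lookup(query: str) -> Tuple[str, float]:
    if query in CACHE:
        return CACHE[query], 0.99
    return "not sure", 0.1


# Hypothetical slow path: parses "a*b" and works through it step by step.
def slow_multiply(query: str) -> str:
    a, b = (int(x) for x in query.split("*"))
    tens, ones = divmod(b, 10)
    return str(a * tens * 10 + a * ones)   # e.g. 17*24 -> 340 + 68


print(dual_process_answer("is an apple a fruit?", fast_lookup, slow_multiply))
print(dual_process_answer("17*24", fast_lookup, slow_multiply))
```

The threshold is the design lever: raising it sends more queries down the slower, costlier path in exchange for fewer confident mistakes.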

The second is the memory mechanism. When generative AI starts "talking nonsense" (hallucinating), the cause may be poor memory rather than weak performance. This is where RAG (Retrieval-Augmented Generation) comes in. It acts as an "add-on" for LLMs, drawing on external knowledge bases to supply relevant context so the model does not pretend to know what it doesn't.

However, traditional RAG pipelines consult only a single external knowledge source and cannot call external tools. They produce one-shot answers: context is retrieved once, with no ability to reason about or verify it.
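The single-pass pipeline just described can be sketched in a few lines. To stay self-contained, the sketch ranks documents by simple word overlap instead of vector embeddings, and it stops at building the prompt that would be sent to an LLM; the knowledge base is a toy example.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    """Rank documents by word overlap with the query (stand-in for vector search)."""
    q = tokenize(query)
    return sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)[:k]


def build_prompt(query: str, docs: List[str], k: int = 1) -> str:
    """Traditional RAG: one source, one retrieval, then straight to generation."""
    context = "\n".join(retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


KNOWLEDGE_BASE = [
    "Mem0 is an open-source memory layer for LLM applications.",
    "HippoRAG takes inspiration from the hippocampus and long-term memory.",
    "WebArena benchmarks agents on realistic web tasks.",
]

print(build_prompt("What does WebArena benchmark?", KNOWLEDGE_BASE))
```

Note what is missing: the pipeline never asks whether the retrieved passage actually answers the question, and it has no way to try another source.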

Agentic RAG, which merges RAG with Agent capabilities, has emerged in response. It follows the same overall process as traditional RAG (retrieval, context synthesis, and generation) but adds the Agent's autonomous planning, enabling it to handle more complex queries. This includes deciding whether retrieval is needed at all, choosing which search engine to use and planning how to use it, evaluating the retrieved context and deciding whether to retrieve again, and determining whether external tools are required.

If traditional RAG is akin to sitting in a library to look up specific questions, then Agentic RAG is like holding an iPhone, invoking Google Chrome, email, and other tools to search for answers.
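The extra decisions that Agentic RAG adds can be sketched as a small control loop. The policies here (`needs_retrieval`, `is_sufficient`) are hypothetical keyword rules standing in for LLM judgments, the "sources" are plain lists rather than real search engines, and external tool calls are left out for brevity.

```python
import re
from typing import Dict, List, Optional, Set

SMALL_TALK = {"hi", "hello", "thanks"}


def words(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def needs_retrieval(query: str) -> bool:
    # Hypothetical policy: greetings are answered directly, everything else retrieves.
    tokens = query.lower().split()
    return bool(tokens) and tokens[0] not in SMALL_TALK


def search(source: List[str], query: str) -> List[str]:
    q = words(query)
    return [doc for doc in source if q & words(doc)]


def is_sufficient(context: List[str], query: str) -> bool:
    # Hypothetical check: every query word longer than 3 letters appears in the context.
    key_terms = {w for w in words(query) if len(w) > 3}
    covered = set().union(*(words(doc) for doc in context))
    return key_terms <= covered


def agentic_rag(query: str, sources: Dict[str, List[str]]) -> Optional[List[str]]:
    """Decide whether to retrieve, then try sources in order until the context suffices."""
    if not needs_retrieval(query):
        return None                              # answer directly, skip retrieval
    context: List[str] = []
    for source in sources.values():              # plan: an ordered list of sources
        context += search(source, query)
        if is_sufficient(context, query):         # evaluate before re-retrieving
            break
    return context


SOURCES = {
    "wiki": ["Cursor is an AI code editor."],
    "news": ["Cursor raised new funding in 2024."],
}

print(agentic_rag("Cursor editor funding", SOURCES))
```

Unlike the one-shot pipeline, this loop can decline to retrieve, and it keeps pulling from further sources only while its own check says the context is incomplete.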

In addition, Mem0, an open-source project from YC's 2024 batch, aims to complement RAG by giving AI Agents personalized memory. Mem0 works like the brain's "hippocampus", providing LLMs (Large Language Models) with an intelligent, self-improving memory layer. It can store information hierarchically, turning short-term information into long-term memory, much as a person organizes newly learned knowledge and commits it to memory. It can also build semantic links, using semantic analysis to connect stored knowledge into an association network. For instance, if you tell an AI that you enjoy detective movies, it will not only remember this but may also infer crime documentaries you might appreciate.

Building on this, Mem0 can substantially strengthen AI Agents' personalized memory, dynamically recording user preferences, behaviors, and needs in a kind of "personal notepad". For example, if you tell an AI Agent that your mother's birthday is next week, it can remind you to send birthday wishes, suggest gifts based on the preferences of you and your mother "stored in memory", and even compare prices across platforms to provide shopping links.
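The behavior described for Mem0 (promoting recurring information to long-term memory, then following semantic links to make suggestions) can be illustrated with a toy class. This is a hypothetical design and does not reproduce Mem0's actual API; the promotion threshold is an assumption, and links are supplied by hand rather than discovered through semantic analysis.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Set


class PersonalMemory:
    """Toy memory layer: short-term mention counts, long-term facts, topic links."""

    def __init__(self, promote_after: int = 2) -> None:
        self.promote_after = promote_after
        self.short_term: Counter = Counter()                      # topic -> recent mentions
        self.long_term: Dict[str, List[str]] = defaultdict(list)  # topic -> stored facts
        self.links: Dict[str, Set[str]] = defaultdict(set)        # topic -> related topics

    def remember(self, topic: str, fact: str) -> None:
        """Count a mention; promote the fact to long-term memory once it recurs."""
        self.short_term[topic] += 1
        if self.short_term[topic] >= self.promote_after and fact not in self.long_term[topic]:
            self.long_term[topic].append(fact)

    def link(self, a: str, b: str) -> None:
        """Record a two-way association between topics (hand-supplied here)."""
        self.links[a].add(b)
        self.links[b].add(a)

    def suggest(self, topic: str) -> List[str]:
        """Related topics, offered only for preferences already in long-term memory."""
        return sorted(self.links.get(topic, set())) if topic in self.long_term else []


memory = PersonalMemory(promote_after=2)
memory.link("detective movies", "crime documentaries")
for _ in range(2):   # the preference comes up twice, so it is promoted
    memory.remember("detective movies", "enjoys detective movies")
print(memory.suggest("detective movies"))
```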

Progress in RAG does not stop there. A team of scientists from Ohio State University and Stanford University proposed an intriguing idea: giving AI a "memory brain" modeled on the human hippocampus. Drawing on neuroscience, they designed HippoRAG, which mimics the hippocampus's role in long-term memory to integrate and search knowledge efficiently, much as the human brain does. Experiments show that this "memory brain" substantially improves performance on tasks that require integrating knowledge, such as multi-hop question answering. It may point to a new direction for giving large models "human-like" memory.
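Part of HippoRAG's retrieval, as its paper describes it, runs Personalized PageRank over a knowledge graph, starting from the entities mentioned in a question. The sketch below shows only that graph-ranking step on a hand-built toy graph; LLM-based graph construction and passage scoring are omitted, and the damping and iteration values are common defaults rather than the paper's settings.

```python
from typing import Dict, List


def personalized_pagerank(graph: Dict[str, List[str]], seeds: List[str],
                          damping: float = 0.85, iters: int = 50) -> Dict[str, float]:
    """Personalized PageRank by power iteration: random walks restart at the seeds."""
    restart = {n: (1.0 / len(seeds) if n in seeds else 0.0) for n in graph}
    score = dict(restart)
    for _ in range(iters):
        nxt = {n: (1 - damping) * restart[n] for n in graph}
        for node, neighbors in graph.items():
            if neighbors:
                share = damping * score[node] / len(neighbors)
                for nb in neighbors:
                    nxt[nb] += share
            else:
                # Dangling node: return its probability mass to the seeds.
                for s in seeds:
                    nxt[s] += damping * score[node] / len(seeds)
        score = nxt
    return score


# Toy knowledge graph (undirected, so each edge is listed in both directions).
KG = {
    "Stanford": ["Prof. Thomas", "Prof. Lee"],
    "Alzheimer's": ["Prof. Thomas"],
    "Prof. Thomas": ["Stanford", "Alzheimer's"],
    "Prof. Lee": ["Stanford", "Robotics"],
    "Robotics": ["Prof. Lee"],
}

# Seed the walk with the entities found in a question such as
# "Which Stanford professor works on Alzheimer's?"
scores = personalized_pagerank(KG, seeds=["Stanford", "Alzheimer's"])
print(max(["Prof. Thomas", "Prof. Lee"], key=scores.get))
```

The node linked to both query entities accumulates the most probability, which is how a graph walk can join facts from separate passages in a single retrieval step.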

Progress in Tool use is even more visible. Claude's Computer Use, for example, exposes an API that converts natural language prompts into computer operations, letting developers automate repetitive tasks, run testing and quality assurance, and conduct open-ended research. AI no longer needs a separate, dedicated API "key" for each application; it can operate different software directly, writing documents in Word, processing spreadsheets in Excel, or searching for information in a browser. However, Computer Use is not yet perfect, with issues such as the inability to train on internal data and limits imposed by the context window. The Anthropic team has also said that Claude's computer use is at an early stage, comparable to the "GPT-3 era", with ample room for improvement.
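The observe-decide-act loop behind tools like Computer Use can be sketched without any real API. The action names (`screenshot`, `left_click`, `type`) echo those in Anthropic's computer-use tool, but `FakeScreen` and `scripted_model` are hypothetical stand-ins: nothing here calls Claude or controls a real desktop.

```python
from typing import Dict, List, Optional

Action = Dict[str, object]


class FakeScreen:
    """Stand-in for a desktop: executes actions and records what happened."""

    def __init__(self) -> None:
        self.log: List[str] = []
        self.text = ""

    def execute(self, action: Action) -> str:
        kind = action["action"]
        if kind == "screenshot":
            return f"screen shows: '{self.text}'"
        if kind == "left_click":
            self.log.append(f"click {action['coordinate']}")
            return "clicked"
        if kind == "type":
            self.text += str(action["text"])
            self.log.append(f"type {action['text']!r}")
            return "typed"
        raise ValueError(f"unsupported action: {kind}")


def scripted_model(instruction: str, observation: str, step: int) -> Optional[Action]:
    """Hypothetical policy that turns one instruction into a sequence of GUI actions."""
    plan: List[Action] = [
        {"action": "screenshot"},
        {"action": "left_click", "coordinate": (200, 150)},
        {"action": "type", "text": instruction.removeprefix("type ")},
        {"action": "screenshot"},
    ]
    return plan[step] if step < len(plan) else None


def run_agent(instruction: str, screen: FakeScreen, max_steps: int = 10) -> str:
    """Observe -> decide -> act, stopping when the model returns no further action."""
    observation = ""
    for step in range(max_steps):
        action = scripted_model(instruction, observation, step)
        if action is None:
            break
        observation = screen.execute(action)
    return observation


screen = FakeScreen()
print(run_agent("type hello world", screen))
print(screen.log)
```

In a real system the model would read each observation (typically a screenshot) before choosing the next action; the `max_steps` cap is a simple guard against runaway loops.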

Notably, AI Agents have also made strides in visual capabilities. Zhipu's GLM-PC, for example, brings its general-purpose visual agent model CogAgent to the computer. It simulates human visual perception, taking in information from its environment to support further reasoning and decision-making.

As for planning, it covers task decomposition, which breaks large tasks into smaller ones, and reflection and refinement, in which the Agent critiques its past actions to learn from mistakes and improve subsequent ones.

Recent papers have proposed a finer classification: task decomposition, multi-plan selection, external module-assisted planning, reflection and refinement, and memory-enhanced planning. Multi-plan selection gives an AI Agent a "selection wheel": it generates several candidate plans and executes the best one. External module-assisted planning brings in external planners, which act somewhat like judges in reinforcement learning. Memory-enhanced planning works like a notebook of past experiences that informs future plans. These methods are interconnected and together strengthen AI Agents' planning capabilities.
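Two of these planning styles, multi-plan selection and reflection and refinement, fit in a short sketch. The scoring function and critic below are hypothetical rules for a trip-planning task; in a real Agent, an LLM or an external evaluator would play both roles.

```python
from typing import Callable, List

Plan = List[str]


def select_plan(candidates: List[Plan], score: Callable[[Plan], float]) -> Plan:
    """Multi-plan selection: score every candidate and execute only the best."""
    return max(candidates, key=score)


def reflect_and_refine(plan: Plan, critique: Callable[[Plan], Plan],
                       max_rounds: int = 3) -> Plan:
    """Reflection and refinement: add the steps a critic says are missing, then re-check."""
    for _ in range(max_rounds):
        missing = critique(plan)
        if not missing:
            break
        plan = plan + missing
    return plan


# Hypothetical trip-planning rules standing in for an LLM or external evaluator.
REQUIRED = {"book flight", "book hotel", "check visa"}


def score_plan(plan: Plan) -> float:
    return len(REQUIRED & set(plan)) - 0.1 * len(plan)   # coverage minus a length penalty


def find_missing(plan: Plan) -> Plan:
    return sorted(REQUIRED - set(plan))


CANDIDATES = [
    ["book flight"],
    ["book flight", "book hotel"],
    ["browse deals", "book flight", "book hotel", "buy souvenirs"],
]

best = select_plan(CANDIDATES, score_plan)
print(reflect_and_refine(best, find_missing))
```

Selection picks the most efficient candidate, and reflection then catches the step that no candidate included, which is why the two techniques tend to be combined.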

Over the past year, each of these "under-the-hood" Agent capabilities has advanced: Tool use has reached initial deployment, progress in memory mechanisms is eagerly anticipated, and LLM progress depends largely on how far the tech giants can push their models. Yet getting the most out of Agents is not simply a matter of stacking these technologies together; a breakthrough in any single one could bring about a qualitative change.

In the future, significant challenges for AI Agent evolution include but are not limited to: achieving low-latency, real-time feedback with visual understanding; constructing personalized memory systems; and possessing robust execution capabilities in both virtual and physical environments. Only when AI Agents evolve from "tools" to "tool users" will true Killer Agents emerge.

Capital's Choice - Large Models Cooling Down, AI Agents Rising

Some argue that since large models can no longer compete, the competition should shift to AI Agents.

In 2024, large model companies that once vied to become "China's OpenAI" were forced to retrench. Among the "six little tigers" (Zhipu AI, 01.AI, Baichuan, MiniMax, Moonshot AI, and StepFun), most have already begun adjusting their businesses, and some have even cut staff. Large companies, with their solid foundations, can keep investing in research and development, but more startups have had to face reality and turn to the application layer in search of lower costs and faster returns.

Simultaneously, keen capital has also shifted its focus to the AI application layer.

According to ITjuzi data, in the first nine months of 2024 there were 317 financing deals in China's AI sector, with an average monthly financing amount of 4.2 billion yuan, less than 20% of the previous year's level. The top five companies alone raised more than 21.2 billion yuan, equivalent to 63% of this year's total domestic AI financing.

Notably, large models and AI Agent projects received the highest investor attention—19 financing cases for large models and 18 for AI Agents. The next highest was AI video generation (10%), with the remaining 50% of investment cases spread across 19 directions.

Thus, under the "winner-takes-all" scenario of large models, AI Agents are not only the best direction for AI startups but also a firm choice for domestic and foreign capital.

Jared, a YC partner and seasoned investor, pointed out that vertical AI Agents, as an emerging B2B software, are expected to become a market 10 times larger than SaaS. With significant advantages in replacing manual operations and improving efficiency, this field may spawn technology giants with a market value exceeding 300 billion dollars.

What do investors look for in AI Agents?

The most prominent example is Cursor, the AI programming tool. The reason is simple: coding is one of the easiest skills for LLMs to master. Much of the relevant training data comes from open-source code on GitHub, most of which is high-quality, "valid" data. Cursor used to suggest code based on user needs; now it can create code files and set up the runtime environment for you, so you only need to click the start button to run the code.

Moreover, even though no true Killer Agent emerged in 2024, Agents have flourished in niche areas.

According to the latest sharing from the YC team, most of the currently funded Agent projects are in the toB field.

Survey and Analysis: Outset applies AI Agents to the field of survey and analysis, replacing traditional manual survey and analysis work, such as services provided by companies like Qualtrics.

Software Quality Testing: Mtic uses AI Agents for software quality testing, replacing traditional QA testing teams outright. Unlike earlier QA software-as-a-service companies such as Rainforest QA, which make QA teams more efficient, Mtic aims to replace manual testing entirely.

Government Contract Bidding: Sweet Spot uses AI Agents to automatically search for and fill out government contract bids, replacing manual completion of these tedious tasks.

Customer Support: Powerhelp utilizes AI Agents to automatically answer phone calls, reply to emails, and solve problems. It can also provide personalized solutions based on user questions and history, enhancing user satisfaction.

Talent Recruitment: Priora and Nico use AI Agents for technical screening and initial recruitment, replacing manual completion of these tasks.

To close with Andrew Ng's words: "The path to AGI (Artificial General Intelligence) feels more like a journey than a destination. But I believe that Agent-style workflows can help us take a small step forward on this very long journey." In other words, even without an "omnipotent Agent" for now, the steady emergence of specialized Agents across many vertical fields will increasingly give us an experience akin to having Jarvis.

2025: Expected to Be the First Year of Commercial Explosion for AI Agents

Recently, Ilya Sutskever, OpenAI co-founder and founder of SSI, declared that pre-training as we know it will come to an end: we have only one internet, and the massive data needed to train models is running out. AI will keep advancing only by finding new breakthroughs with the data that already exists.

Sutskever draws an analogy with the human brain: just as human intelligence kept progressing after brain size stopped growing, AI's future development will shift to building AI Agents and tools on top of existing LLMs. He predicts that future breakthroughs will come from Agentic intelligence, synthetic data, and inference-time compute. Notably, like Andrew Ng, Sutskever uses the adjective "Agentic" to describe intelligent agents.

According to Bolt of Linear Capital, the capabilities of Agent applications can be described as having a small, moderate, or high degree of "Agentic" ability. Router-type systems, which use an LLM to route inputs to specific downstream workflows, have a small degree of Agentic ability. State Machine-type systems, which use multiple LLM routing steps and decide after each step whether to continue or finish, have a considerable degree. Autonomous-type systems go further: they can use tools, or even create suitable tools, to drive the system's further decisions, giving them full Agentic ability.
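The first two levels can be contrasted in code. `classify` and `revise` are hypothetical stand-ins for LLM calls, and the autonomous level (tool use and tool creation) is not sketched.

```python
from typing import Callable, Dict, List, Tuple


def router(query: str, classify: Callable[[str], str],
           workflows: Dict[str, Callable[[str], str]]) -> str:
    """Small degree of agency: the model only chooses which fixed workflow runs."""
    return workflows[classify(query)](query)


def state_machine(state: str, step: Callable[[str], Tuple[str, bool]],
                  max_steps: int = 5) -> List[str]:
    """More agency: after every step the model decides whether to continue or finish."""
    trace: List[str] = []
    for _ in range(max_steps):
        state, done = step(state)
        trace.append(state)
        if done:
            break
    return trace


# Hypothetical stand-ins for LLM calls.
def classify(query: str) -> str:
    return "refund" if "refund" in query.lower() else "faq"


WORKFLOWS = {
    "refund": lambda q: f"refund workflow: {q}",
    "faq": lambda q: f"faq workflow: {q}",
}


def revise(draft: str) -> Tuple[str, bool]:
    new_draft = draft + " [revised]"
    return new_draft, new_draft.count("[revised]") >= 2   # "done" after two passes


print(router("I want a refund", classify, WORKFLOWS))
print(state_machine("draft", revise))
```

The router makes one decision and hands off; the state machine keeps control across steps, which is exactly what makes it both more capable and harder to predict.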

Based on this, before emphasizing the Agent attributes of a product, manufacturers may want to first ask, "How Agentic is my system?"

Currently, professional AI Agents in many fields are still immature. Relevant surveys reveal that issues such as inaccurate output, unsatisfactory performance, and user distrust hinder their implementation. But if we change our perspective: the most commercially successful AI Agents in the short term may not be the products that appear the most "Agentic"; rather, they are the products that can balance performance, reliability, and user trust.

Following this logic, the most promising path for professional AI Agents may be to first use AI to enhance existing tools rather than to offer broad, fully autonomous services.

With a human-in-the-loop approach, people can supervise the Agent and handle edge cases, and expectations can be set realistically based on current capabilities and limitations. Combining tightly constrained LLMs, good evaluation data, human oversight, and traditional engineering methods can yield reliable, effective results on complex tasks such as automation.

For example, Rocks, a Sequoia portfolio company, builds human employees into its Agents. Rocks initially developed technology to write and send emails automatically, but found that bringing human salespeople into the process improved performance by 333 times, so it removed the automatic-sending feature.
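A Rocks-style design, where the Agent drafts but a person decides what goes out, reduces to a review checkpoint inside the loop. `draft_email` and `reviewer` are hypothetical; in practice the reviewer would be a salesperson working in an interface, not a function.

```python
from typing import Callable, List, Optional


def draft_email(lead: str) -> str:
    """Hypothetical stand-in for the Agent's LLM drafting step."""
    return f"Hi {lead}, following up on our conversation."


def run_outreach(leads: List[str], review: Callable[[str], Optional[str]]) -> List[str]:
    """The Agent drafts; a human approves, edits, or rejects. Nothing is sent automatically."""
    outbox: List[str] = []
    for lead in leads:
        approved = review(draft_email(lead))   # human-in-the-loop checkpoint
        if approved is not None:
            outbox.append(approved)
    return outbox


def reviewer(draft: str) -> Optional[str]:
    """Hypothetical human decision: reject one lead, lightly edit the rest."""
    if "Bob" in draft:
        return None
    return draft.replace("Hi", "Hello", 1)


print(run_outreach(["Alice", "Bob"], reviewer))
```

Removing automatic sending costs some speed, but every message that leaves the system has passed a human judgment, which is where trust is built.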

Depending on the specific business scenario, some companies can develop Agent technology to complete tasks, such as Expo in the field of cybersecurity; while other companies prefer to use Agents to "enhance" human employees, such as Rocks.

So, what will happen in 2025?

First, beyond programming, more vertical fields will produce "seed candidates." Konstantine Buhler, a Sequoia partner, predicts that "high service cost" fields such as healthcare and education will become the next important battlegrounds for AI technology.

Meanwhile, according to LangChain's report, people want to hand time-consuming tasks to AI Agents: as "knowledge filters" that quickly extract key information so users don't have to sift through massive amounts of data by hand; as "productivity accelerators" that help schedule and manage tasks so people can focus on more important work; and as "customer service assistants" that help businesses handle inquiries and resolve problems faster, significantly improving team response times.

In other words, all time-consuming, labor-intensive, and costly tasks are expected to be replaced by specialized AI Agents in vertical fields.

Second, AI Agent deployment will shift from "single" to "multiple": AI Agents will evolve from working alone to collaborating in groups. In 2025, more Multi-agent setups will emerge, with several Agents playing different roles and cooperating on tasks. For example, ChatDev, an open-source project from Tsinghua University and ModelBest (Mianbi Intelligence), assigns each Agent a role such as CEO, product manager, or programmer, and has them collaborate to complete tasks.
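Role-based collaboration can be sketched as a chain of agents that each transform the previous agent's output. This is a simplification: ChatDev itself organizes agents into pairwise conversations across development phases, and the lambdas here are hypothetical stand-ins for role-prompted LLM calls.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class RoleAgent:
    role: str
    act: Callable[[str], str]   # stand-in for an LLM prompted to play this role


def run_team(task: str, team: List[RoleAgent]) -> List[str]:
    """Pass the work product down a chain of role-playing agents, logging each hand-off."""
    transcript: List[str] = []
    artifact = task
    for agent in team:
        artifact = agent.act(artifact)
        transcript.append(f"{agent.role}: {artifact}")
    return transcript


TEAM = [
    RoleAgent("CEO", lambda t: f"approved '{t}'"),
    RoleAgent("Product manager", lambda t: f"spec for {t}"),
    RoleAgent("Programmer", lambda t: f"code implementing {t}"),
]

for line in run_team("a to-do app", TEAM):
    print(line)
```

Giving each agent a narrow role keeps every prompt focused, and the transcript makes the hand-offs inspectable when something goes wrong.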

Moreover, as models' ability to process images and video improves rapidly, 2025 will usher in an era of comprehensive multimodal interaction, allowing AI to work across multiple sensory channels, including IoT and domain-specific data. Integrating multimodal input and output will make AI interactions richer and more adaptable to a broader range of scenarios, markedly raising the overall quality of AI products.

In this context, the Agent emerges as an intelligent entity that encapsulates perception, analysis, decision-making, and execution capabilities, surpassing existing tools in terms of its proactive and dynamic engagement.

QbitAI's think tank observes that, as both the technology and its supporting infrastructure mature, AI Agents will be widely deployed from 2025 onward, ushering in interaction paradigms, product forms, and business models unique to the AI 2.0 era.

Conclusion

The opening scene of the film "2001: A Space Odyssey" depicts a tribe of herbivorous apes on the brink of starvation. An alpha ape, by chance, wields a bone as a tool, heralding a new era of hunting and carnivorous behavior that ultimately propels them to the apex of the food chain.

Looking ahead, future generations may look back on 2025 as another pivotal moment in human evolution, with AI Agents as the transformative "bones". As Andrej Karpathy puts it, AI Agents represent a future brimming with untamed potential.

Intriguingly, the term "Agent" stems from the Latin "Agere," connoting "to act." How, then, do we embrace this untamed future? Perhaps, all it takes is an "Agent."