Anticipated Directions for AI Applications in Their First Year

01/20 2025

The evolution of large models from myth to reality shows what makes a technology truly compelling: it endures through overhyped expectations and bubbles. To fully grasp their potential, we need to look past the technology itself, or we risk overlooking its core application value, the elephant in the room.

As we look ahead to 2025, China's large models have moved beyond the chaos of the "hundred model war" and into deeper exploration. Who will still be at the forefront a year from now, however, remains uncertain. Baidu, Alibaba, and ByteDance, with their globally leading strengths in computing power, technology, and data, are positioned to compete with GPT-5 in the future.

Meanwhile, emerging players such as the "Six Little Tigers" and DeepSeek are gaining momentum. But the limits of the Scaling Law and the fading of the "AI leader" aura may push these startups to redirect their energy towards commercialization and to seek differentiated strategies among the established players.

So where are AI applications headed in 2025? In this inaugural year of AI applications, which directions are worth watching?

01. Two Primary Directions to Watch

According to Bloomberg, OpenAI has outlined five stages on its path towards artificial general intelligence (AGI): chatbots in Stage 1, reasoners in Stage 2, and agents (systems capable of autonomous action) in Stage 3, with two further stages beyond. In September 2024, OpenAI unveiled the OpenAI o1 series, a set of models showing significant progress in complex reasoning, which marked its entry into Stage 2.

As these models become more accurate, a new industrial trajectory is emerging: agents that carry out specific operations on the user's behalf. This technology will take hold across many kinds of devices, with smart assistants leading the way. Consider Apple Intelligence enabling Siri to execute hundreds of new actions within Apple and third-party apps, handling tasks such as pulling up an article from a reading list or sending photos to friends with a single click. Behind this functionality lies a model with robust planning, precise invocation of third-party apps, and high operational accuracy.
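The plan-then-invoke pattern described above can be sketched in a few lines. This is a toy illustration only: the `Step` type, the `plan` function (keyword rules standing in for a model's planning step), and the `open_article` and `send_photo` actions are all hypothetical names, not Apple's or any vendor's API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    action: str    # name of a registered app action
    argument: str  # single string argument, kept simple for illustration


# Hypothetical app actions; stand-ins for real third-party app integrations.
def open_article(source: str) -> str:
    return f"opened article from {source}"


def send_photo(recipient: str) -> str:
    return f"sent photo to {recipient}"


# Registry mapping action names to callables the agent is allowed to invoke.
ACTIONS: Dict[str, Callable[[str], str]] = {
    "open_article": open_article,
    "send_photo": send_photo,
}


def plan(request: str) -> List[Step]:
    """Toy planner: keyword rules stand in for the model's planning step."""
    text = request.lower()
    steps: List[Step] = []
    if "article" in text:
        steps.append(Step("open_article", "reading list"))
    if "photo" in text:
        steps.append(Step("send_photo", "a friend"))
    return steps


def run_agent(request: str) -> List[str]:
    """Execute each planned step by dispatching to the registered action."""
    results: List[str] = []
    for step in plan(request):
        handler = ACTIONS.get(step.action)
        if handler is None:
            results.append(f"unknown action: {step.action}")
            continue
        results.append(handler(step.argument))
    return results


if __name__ == "__main__":
    print(run_agent("Pull up that article and send the photo"))
```

In a real assistant the planner would be the model itself, and the accuracy of each planned step is what determines whether the whole chain succeeds, which is why model accuracy matters so much for this direction.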

OpenAI and Google DeepMind are also stepping up their work on multi-agent research, which points to growing activity in this field. Both companies have posted job openings for multi-agent research teams, attracting considerable research talent.

Another pivotal industrial direction is the continued acceleration of autonomous driving technology. Tesla is the industry leader, and every iteration of its FSD system reverberates throughout the sector. The anticipated Q1 2025 launch in China and Europe promises improved takeover rates, true smart summon capabilities, and automated parking for the Cybertruck, which should push the industry forward.

In China, Huawei has introduced the ADS 3.0 system, which debuted on the Hongmeng Zhixing Xiangjie S9. With its end-to-end architecture and all-weather intelligent hardware perception, the system brings upgrades such as omnidirectional anti-collision 3.0, which offers comprehensive perception, rapid response, and advanced verification. The Xiangjie S9 also introduces a parking-to-parking intelligent driving function for a seamless, connected driving experience. In terms of sales, Hongmeng Zhixing has consistently led the market for new energy vehicles priced above 300,000 yuan, a sign of both its technical strength and its market acceptance.

Yu Chengdong, Huawei's Executive Director, revealed that Zunjie, the fourth "Jie" brand under Hongmeng Zhixing, has entered vehicle validation, with production planned by year-end and a launch in 2025 H1. This news adds fresh momentum to autonomous driving technology.

Guosheng Securities highlights three key directions for AI applications:

1) Computing Power: Cambricon, Sugon, Hygon, Yunsai Zhilian, Softtong, Zhongji Xuchuang, Newease, Inspur, Foxconn, Digital China, Xiechuang Data, Hongxin Electronics, High-tech Development, etc.

2) Edge AI: Luxshare Precision, DBS, TECNO Mobile, Pegatron, Thundersoft, Edifier.

3) Autonomous Driving: Huawei Smart Car (JAC Motors, Thalys, Changan Automobile, BAIC BluePark, etc.); Domestic Autonomous Driving Chain (Desay SV, Wanma Technology, Thundersoft, HiRain Technologies, HTK, Jinyi Technology, Wanji Technology, Qianfang Technology, Hongquan IoT, etc.); Tesla Chain (Tesla, Shiyun Circuit, Sanhua Intelligent Controls, Top Group, etc.).

02. What Drives the Next Wave of AI Applications?

OpenAI's "Scaling Laws for Neural Language Models" shows that language modeling performance improves as model size, dataset size, and training compute increase. For optimal performance, all three factors must be scaled up together, and performance follows a power-law relationship with each factor when it is not bottlenecked by the other two. This has led large model companies to improve capabilities by scaling up pre-trained models, datasets, and training compute, which is now the mainstream approach.
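As a rough summary of the functional form, the single-factor relationships in that paper can be written as follows, where $N$ is the number of model parameters, $D$ is the dataset size in tokens, and $C_{\min}$ is the training compute under an optimal allocation. The constants $N_c$, $D_c$, $C_c^{\min}$ and the exponents are empirical fits; their values are omitted here, and the notation is a sketch rather than a restatement of the paper's full results.

$$
L(N) = \left(\frac{N_c}{N}\right)^{\alpha_N}, \qquad
L(D) = \left(\frac{D_c}{D}\right)^{\alpha_D}, \qquad
L(C_{\min}) = \left(\frac{C_c^{\min}}{C_{\min}}\right)^{\alpha_C^{\min}}
$$

Each expression holds only when the other two factors are not the bottleneck, which is why the three are scaled together in practice.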

Meta's open-source Llama series exemplifies this trend. Llama 2 was pre-trained on 2T tokens and released in 7B, 13B, and 70B parameter versions. Llama 3 was pre-trained on over 15T tokens, about seven times Llama 2's dataset and with four times as much code. The 8B Llama 3 scores close to the 70B Llama 2 on MMLU, while the 70B Llama 3 achieves 80.9. The 405B Llama 3.1, which keeps the 15T-token dataset, scores 88.6 thanks to its larger parameter count.
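A quick back-of-the-envelope calculation makes the data scaling concrete. The sketch below uses only the figures quoted above and treats "over 15T" as exactly 15T, so the Llama 3 ratios are lower bounds; the `MODELS` table and function name are illustrative.

```python
# (parameters, pre-training tokens) as quoted in the text above.
# "Over 15T" is rounded down to 15T, so Llama 3 ratios are lower bounds.
MODELS = {
    "Llama 2 70B": (70e9, 2e12),
    "Llama 3 8B": (8e9, 15e12),
    "Llama 3 70B": (70e9, 15e12),
    "Llama 3.1 405B": (405e9, 15e12),
}


def tokens_per_parameter(params: float, tokens: float) -> float:
    """Training tokens seen per model parameter."""
    return tokens / params


if __name__ == "__main__":
    for name, (params, tokens) in MODELS.items():
        ratio = tokens_per_parameter(params, tokens)
        print(f"{name}: about {ratio:,.0f} tokens per parameter")

    # Dataset growth from Llama 2 to Llama 3, consistent with "about seven times".
    print(f"dataset growth: {15e12 / 2e12:.1f}x")
```

On these numbers, the 8B Llama 3 sees far more tokens per parameter than the 70B Llama 2 did, which is one way to read its comparable MMLU score.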

However, the power-law relationship implies diminishing returns: in theory, linear improvements in performance require exponential growth in scale. Beyond computational cost, scaling also poses engineering challenges. According to "MegaScale: Scaling Large Language Model Training to More Than 10,000 GPUs," LLM training at this scale faces two main challenges: achieving high training efficiency and keeping training stable so that efficiency is maintained throughout.
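The diminishing returns can be illustrated numerically. The following is a minimal sketch assuming a pure power law in parameter count; the exponent and critical scale are placeholder values roughly in line with the parameter-count fit reported in the scaling-laws paper, and should be treated as assumptions rather than authoritative constants.

```python
import math

# Assumed constants, for illustration only (placeholders roughly matching
# the parameter-count fit reported in the scaling-laws paper).
ALPHA_N = 0.076
N_C = 8.8e13


def power_law_loss(n_params: float, n_c: float = N_C, alpha: float = ALPHA_N) -> float:
    """Loss under a pure power law: L(N) = (N_c / N) ** alpha."""
    return (n_c / n_params) ** alpha


def scale_factor_for_loss_ratio(loss_ratio: float, alpha: float = ALPHA_N) -> float:
    """How many times larger N must become to multiply the loss by loss_ratio."""
    return loss_ratio ** (-1.0 / alpha)


def improvement_per_decade(start: float, decades: int) -> list[float]:
    """Absolute loss reduction gained from each successive 10x increase in N."""
    sizes = [start * 10 ** k for k in range(decades + 1)]
    losses = [power_law_loss(n) for n in sizes]
    return [losses[i] - losses[i + 1] for i in range(decades)]


if __name__ == "__main__":
    gains = improvement_per_decade(1e9, 3)
    print("loss reduction per 10x step:", [round(g, 4) for g in gains])
    factor = scale_factor_for_loss_ratio(0.5)
    print(f"growth in N needed to halve the loss: {factor:.0f}x")
```

Under these assumptions, every tenfold increase in parameters buys a smaller absolute reduction in loss than the one before, and halving the loss requires growing the model by several thousand times.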

Acquiring high-quality training data is another critical constraint. Alibaba Research Institute's "2024 Large Model Training Data White Paper" points out that high-quality data, particularly multi-modal data (videos, images) and domain-specific data, has become a bottleneck. Such data comes from human creation, production, and experience, and it varies in scale, type, and quality.

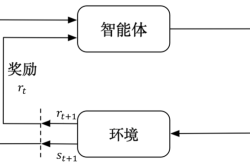

The o1 model's strong reasoning comes from reinforcement learning combined with chain-of-thought reasoning. It "thinks" at length before answering, generating a long internal chain of thought that significantly improves its reasoning. The model also uses reasoning tokens to break down the prompt and consider multiple approaches to generating a response. However, o1 excels mainly in math and programming, and its value in other applications has yet to be explored. Its inference is also relatively slow, which limits applications that need quick responses, and its API cost is high.

Nonetheless, o1's success validates a new direction for improving model capabilities. Other large models may follow it, and it could become a key paradigm as the returns from scaling slow. For now, the Scaling Law in pre-training still holds, with GPT MoE reaching 1.8 trillion parameters, so OpenAI's next-generation model, GPT-5, is eagerly awaited. Model accuracy is crucial for putting AI applications into practice. If GPT-5 significantly improves accuracy across tasks through more parameters and more training, AI application innovation could take off across the board.