AI Inference Engine: Navigating Hardware Choices and Business Models

01/20 2025

Produced by Zhineng Zhixin

Amidst the rapid evolution of artificial intelligence (AI) technology, AI inference engines are increasingly being deployed across diverse industries. The choice of hardware for these engines is pivotal, as it significantly impacts inference speed, power consumption, cost, and other critical factors.

For startups, deciding how to offer an inference engine, whether as a proprietary chip, as licensed intellectual property (IP), or as a chiplet, is a multifaceted decision. It is shaped not only by technology, market demand, and funding but also by long-term development strategy, profit model, and expected technological advances.

In this article, we delve into the hardware platforms and business models for AI inference engines, exploring how different companies manage their core chips and choose strategies to achieve their ultimate AI technology goals.

Part 1

AI Inference Engine: Chip Selection Platforms and Business Models

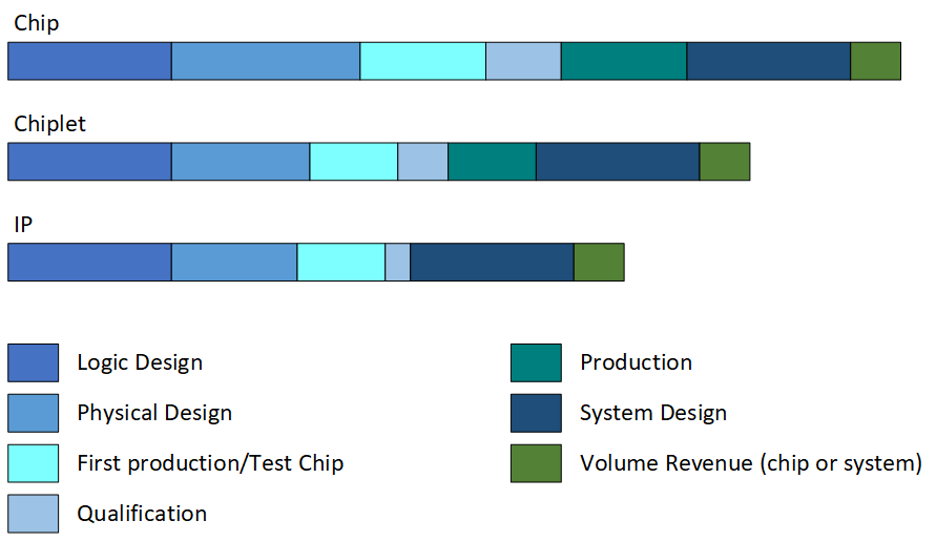

Approaches to selecting hardware for AI inference engines fall broadly into three strategies: developing proprietary chips, licensing IP, and using chiplets.

Each strategy presents its own advantages and challenges, so startups must choose based on their resources, target markets, and technical requirements.

● Developing Proprietary Chips: Developing in-house chips signifies technological independence for startups, enabling customization tailored to specific application needs. Dedicated AI inference chips, particularly those optimized for certain workloads, offer superior performance, lower power consumption, and cost benefits.

Revenue from chips is typically generated through per-chip pricing, allowing chip companies to achieve higher earnings, especially in high-demand markets. For instance, Quadric emphasizes that the economics of niche markets often favor becoming a full-fledged silicon supplier over an IP supplier.

However, chip development requires substantial investment, covering technology development, production, packaging, and certification costs. This poses significant challenges for startups with limited funding. In addition, because chips evolve rapidly, companies must manage the risk of technological obsolescence while keeping their products advanced and competitive.

● Licensing IP: Compared with developing chips, licensing IP is generally considered the more capital-efficient choice. IP providers license their core technology to other companies and earn revenue through license fees and royalties.

IP development costs are typically lower than chip development costs, and there are no production or packaging expenses. In large-scale markets (such as smartphones and data centers), licensing to multiple customers can generate substantial revenue.

While IP profit margins are often higher than chip margins, market expansion can be slower because it depends on each customer's adoption process. License fees and royalties must also be negotiated customer by customer, which demands extensive cooperation.

Many large enterprises, particularly those planning large-scale production, prefer to develop IP in-house to avoid paying high license fees and royalties.

● Chiplets: Chiplets, in which multiple small dies such as dedicated accelerators and general-purpose processing units are combined in one package, are emerging as a viable solution for AI inference engine hardware. This approach offers high flexibility, allowing dies from different vendors to be combined to meet diverse needs.

Compared to traditional single-chip solutions, chiplets can adapt more rapidly to new algorithms and technological changes, mitigating the risk of technological obsolescence.

However, designing and integrating chiplets is challenging and requires a high degree of compatibility and coordination between the different dies. Chiplet revenue, like chip revenue, comes from unit sales, but design cycles are longer and production costs are higher, particularly when advanced packaging technologies and high-bandwidth memory (HBM) are required.
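
The revenue models described above (a per-unit margin for silicon versus license fees plus royalties for IP) can be compared with a toy calculation. The following is a minimal sketch, not a model taken from any of the companies mentioned: the class names, the `crossover_units` helper, and every figure are hypothetical placeholders chosen only to show how up-front cost and per-unit earnings trade off against volume.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ChipBusiness:
    """Silicon supplier: earns a margin on every chip, pays high up-front costs."""
    upfront_cost: float   # development, production setup, packaging, certification
    unit_price: float     # selling price per chip
    unit_cost: float      # manufacturing and packaging cost per chip

    def profit(self, units: int) -> float:
        return units * (self.unit_price - self.unit_cost) - self.upfront_cost


@dataclass
class IpBusiness:
    """IP supplier: earns a license fee per customer plus a royalty per unit shipped."""
    upfront_cost: float       # IP development only; no production or packaging
    license_fee: float        # one-time fee per licensee
    licensees: int
    royalty_per_unit: float   # paid on each customer chip that contains the IP

    def profit(self, units: int) -> float:
        fees = self.licensees * self.license_fee
        return fees + units * self.royalty_per_unit - self.upfront_cost


def crossover_units(chip: ChipBusiness, ip: IpBusiness) -> Optional[float]:
    """Volume above which selling chips earns more than licensing IP.

    Returns None when the per-unit chip margin does not exceed the royalty.
    """
    margin_gap = (chip.unit_price - chip.unit_cost) - ip.royalty_per_unit
    if margin_gap <= 0:
        return None
    # Solve chip.profit(u) == ip.profit(u) for u.
    fixed_gap = chip.upfront_cost - ip.upfront_cost + ip.licensees * ip.license_fee
    return max(0.0, fixed_gap / margin_gap)


if __name__ == "__main__":
    # All figures below are made-up placeholders, not market data.
    chip = ChipBusiness(upfront_cost=50e6, unit_price=40.0, unit_cost=15.0)
    ip = IpBusiness(upfront_cost=10e6, license_fee=2e6, licensees=5,
                    royalty_per_unit=1.0)
    for units in (1_000_000, 3_000_000):
        print(units, chip.profit(units), ip.profit(units))
    print("crossover:", crossover_units(chip, ip))
```

With these placeholder inputs, licensing is ahead at low volume and chip sales overtake it at roughly 2.1 million units. Changing the assumed up-front cost or royalty moves that point considerably, which is why the choice depends so heavily on the size of the target market.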

Part 2

Core Chip Management Strategies and AI Implementation Paths for Companies in Different Industries

Companies across industries adopt diverse strategies for managing their core chips, primarily constrained by industry characteristics, market demand, and technical requirements.

● Technology Companies and Startups: These entities tend to prioritize innovation and differentiation in their core chip management and selection strategies.

Many startups opt to develop proprietary chips or IP, focusing on AI inference tasks in specific domains. For example, companies specializing in image processing or natural language processing often customize chips to their technical needs to optimize performance in specific application scenarios. Startups can also license their designs to other companies, earning license fees and royalties.

The challenges include high risks associated with technology research and development, market promotion, potential funding shortages, and resource scarcity when competing with larger enterprises. Therefore, startups must secure sufficient seed funding in the early stages and rapidly bring their technology to market to avoid missing opportunities due to technological obsolescence.

● Large Enterprises and Industry Giants: These entities, especially in sectors like mobile phones, automobiles, and data centers, often control core chip technology through internal development or acquisitions. In the smartphone sector, for instance, companies such as Qualcomm and Apple increasingly design their own AI inference hardware to raise on-device computing power, lower power consumption, and reduce dependence on external suppliers.

The challenge lies in balancing innovation and risk control. While maintaining control over core technologies, these companies must cooperate with other enterprises in an open ecosystem to increase market share and respond to rapid technological changes to sustain long-term competitiveness.

● Cross-Industry Solution Providers: Providers such as NVIDIA and AMD often choose to offer hardware solutions for AI inference engines and promote their chips as industry standards.

Their core competitiveness stems from efficient chip architectures and powerful computing capabilities, often ensuring that their products meet the needs of diverse fields through continuous technological innovation and optimization.

These companies must strike a balance between proprietary chips and IP licensing. By providing standardized solutions for large-scale applications while supporting specific customer needs through customized IP, they create strong market appeal.

Summary

Selecting a hardware solution for AI inference engines requires weighing a company's funding, technology, and market positioning. Each approach (developing proprietary chips, licensing IP, or adopting chiplets) has its pros and cons, so companies must choose based on their own circumstances.

Regardless of the chosen approach, the goal is to enhance market value and technological influence through innovation and strategic planning. The key to success lies in continually optimizing technology, flexibly responding to market changes, and accurately anticipating future trends.