[Annual Feature] XR Terminals: Will They Benefit the Most from AI Adoption?

01/23 2025

Text / VR Gyroscope, Wan Li

Unsurprisingly, 2024 was yet another year dominated by AI advances.

Throughout the year, AI propelled giants like Microsoft and NVIDIA to the top of the global market capitalization rankings. The Nobel Prizes in both Physics and Chemistry went to AI-related work. According to CB Insights data, nearly one-third of global venture capital in Q3 2024 went to AI startups.

In the realm of XR, AI has permeated both existing and upcoming terminal products, with AI functionalities poised to become a cornerstone of subsequent MR and AR hardware applications. This article will delve into the expansive application and deployment of AI over the past year.

Two Major Events: The Ascendancy of AI Glasses and the Introduction of Two Novel AR Products

While 2023 was fraught with debates regarding the ideal AI hardware form factor, 2024 brought clarity to this discourse.

Last spring, three notable AI terminal products emerged: Ray-Ban Meta (glasses), Humane Ai Pin (brooch), and Rabbit R1 (handheld device). In terms of sales and user reception, Ray-Ban Meta, in the form of glasses, led the pack. Moreover, Ray-Ban Meta surpassed the one-million-unit sales mark last year, signifying initial market acceptance.

Furthermore, the hardware moves and announcements of leading AI giants over the past year reveal interesting trends:

In July, Bill Gates said during an appearance on the podcast "The Next Big Idea" that the ideal hardware form factor for future AI Agents might include headphones and smart glasses.

In September, Meta unveiled its first AR glasses, Orion. Zuckerberg revealed that the team had conceived the idea of building the next-generation computing platform as early as 2014. The glasses offer a holographic display and an AI assistant. "Glasses have a unique advantage; they allow people to see what you see and hear what you hear. They provide subtle yet profound feedback."

In November, Meta's former hardware director, Caitlin Kalinowski, joined OpenAI. Kalinowski was instrumental in projects like Orion and Ray-Ban Stories, fueling speculation that this move signals OpenAI's foray into the AR glasses market.

In December, Google released Gemini 2.0 and its latest AI assistant, Project Astra. On the DeepMind website, smartphones and smart glasses are the only two hardware devices integrated with Project Astra.

Domestically, ByteDance completed its acquisition of the OWS earphone brand Oladance in September 2024, amid rumors that it was developing AI glasses in-house.

In November, Baidu unveiled its AI glasses, while Xiaomi was also rumored to be developing AI glasses with plans for a 2025 release.

It's evident that these leading enterprises are targeting glasses devices as the vessel for future AI Agent experiences. So, what sets glasses devices apart?

In my opinion, the ideal AI hardware must offer all-day companionship, constant insight into user intentions, and private communication. Based on these criteria, glasses, AI headphones, and wearable devices akin to Humane Ai Pin emerge as potential choices. Among them, glasses stand out as the most well-rounded option, combining these traits with good wearability. Additionally, micro-displays can be integrated into glasses to add a visual channel for information.

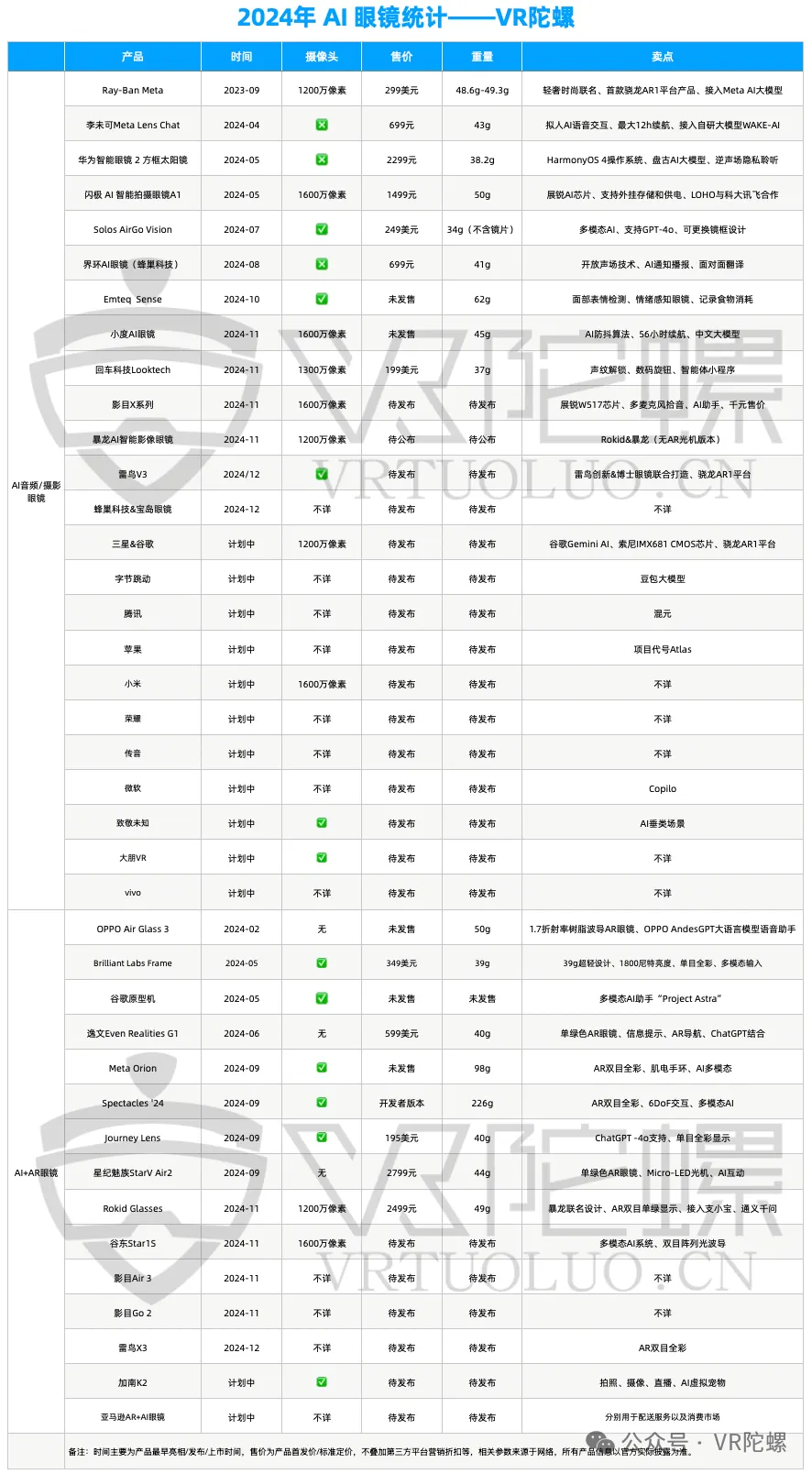

Undoubtedly, glasses emerged as the clear winner in 2024, both in terms of new product launches and market popularity. VR Gyroscope's count showed that as of late November, the number of publicly disclosed AI glasses entrants had reached 36, with the product count expected to exceed 50. Furthermore, CES saw the unveiling of a slew of new AI glasses from companies like Halliday, ThundeRobot Technology, and XPERT.

It's foreseeable that a pivotal focus in the VR/AR industry in the new year will be the "Hundred Glasses Battle," with China witnessing particularly intense competition.

P.S.: 2024 might have been the most bewildering year for naming glasses products. Earlier last year, I used "smart glasses" for screenless products like Ray-Ban Meta, while "AR glasses" referred to devices with optical displays. However, as AI has become a bigger part of these products, multiple labels have emerged in the market, including "smart audio glasses," "camera glasses," "AI glasses," and "AI+AR glasses." One of my biggest challenges over the past year was writing articles without causing ambiguity. (Let me reiterate here: AI glasses are devices equipped with AI functions but no display, whereas AR glasses add a display to AI glasses.)

Currently, whether to integrate optical displays into glasses (thereby turning them into AR glasses) remains an open question. From a purely market performance perspective, glasses without screens seem to be more popular, thanks to their compact size and the lower price made possible by omitting the display module.

However, it's undeniable that in the long run, AR glasses represent the inevitable evolution of AI glasses and will ultimately be their final form. In 2024, overseas companies like Snap and Meta set the precedent for AR glasses products.

Spectacles 5: Although available only to developers, this product is highly polished. It has an all-in-one form factor, runs Snap OS, and supports gesture interaction. Additionally, Snap launched the AR creation platform Lens Studio 5.0 and brought AI capabilities to the glasses through a collaboration with OpenAI.

Spectacles 5, Source: Internet

Meta Orion: Perhaps the most advanced AR glasses shown to date, Orion weighs under 100g, resembles ordinary glasses, and offers a comprehensive set of interaction methods. Optically, Meta has bet on Micro-LED + waveguide technology, expanding the FoV to 70° with silicon carbide waveguides. This product embodies Meta's long-term AR vision, although it will be some time before it reaches the consumer market.

Meta Orion, Source: Internet

AI+AR: Multimodal AI on Board, with "End-to-End" and Memory Capabilities as Key Trends for This Year

If in 2023 many were still pondering the use cases or even killer applications of AR glasses, the answer is now gradually crystallizing: it's AI. Focusing on AI, domestic and foreign AR manufacturers have significantly ramped up their investments over the past year, and reports like the following abound:

Meta is firmly committed to large AI models and is actively expanding its underlying infrastructure, stating that "by the end of 2024, our goal is to have 350,000 NVIDIA H100 GPUs." Recent reports suggest that ByteDance plans to spend up to $7 billion on NVIDIA chips in 2025. Xiaomi Group recently made headlines by offering salaries of up to ten million yuan to poach AI talent.

From an AI application perspective, the current functionalities of AR glasses have gradually converged. For instance, AI assistants have become a standard feature in many glasses products, with their capabilities primarily reliant on the large models they invoke. Other common AI functions include on-screen translation and meeting minutes.

Besides these conventional functional scenarios, here are a few innovative use cases for AI glasses products that emerged last year:

Xingji Meizu's StarV Air2 integrates with the mobile phone system, displaying translated content on both the glasses and the phone at the same time.

Rokid Glasses created an accessibility mode based on multimodal AI for the blind.

INMO Technology's INMO Go 2 achieved offline translation.

Sanag's "PatPat Mirror" A1 introduced an AI memory system capable of remembering various image and voice information of users.

FFalcon Technology's V3 camera glasses developed an AI radio function, recognizing the surrounding environment through the camera and synchronously playing matching music.

Some creative use cases of FFalcon Technology's V3 camera glasses, Source: FFalcon Innovation

At the onset of 2024, I mentioned in my annual review that "multimodal AI" would become standard for AI glasses and predicted that "the camera of AR glasses products will become a crucial module, potentially landing on AR glasses ahead of optical screens."

Looking back, multimodal AI has indeed emerged as a key selling point for AI glasses. For instance, Ray-Ban Meta rolled out real-time AI in December, enabling AI to comprehend real-time video. Similarly, the recently released XREAL One, despite stating it wouldn't blindly follow AI trends, is equipped with a camera accessory for multimodal AI expansion.

So, what new trends will AI glasses exhibit in the new year?

Source: Xingji Meizu

In terms of user experience, the upper limit of AI functionality on glasses undoubtedly hinges on the capabilities of large AI models. Over the past year, large AI models have repeatedly set new benchmarks. We used to describe an excellent large AI model as having "expert-level" proficiency, but with the arrival of models like OpenAI's o3, they seem to have attained "PhD-level" intelligence.

For these emerging large AI models, I've found it increasingly challenging to ascertain their AI capabilities using conventional questions. In this scenario, I believe AR glasses should prioritize AI capabilities such as response speed and personalization in the future, as improvements in these metrics will be more discernible to current users.

End-to-End Dialogue: In the nascent stages of AI glasses, I tested many similar products. Back then, there were several primary pain points: first, the mobile phone app needed to be in the foreground and couldn't be closed when invoking the AI voice function; second, the AI response speed was too slow, often taking several seconds, which easily discouraged users.

The release of GPT-4o last year was undoubtedly a turning point because it revolutionized voice dialogue with an average response time of 320 ms. The dialogue can be interrupted at any time, and the system can pick up on the user's emotional tone, closely mirroring everyday human conversation.

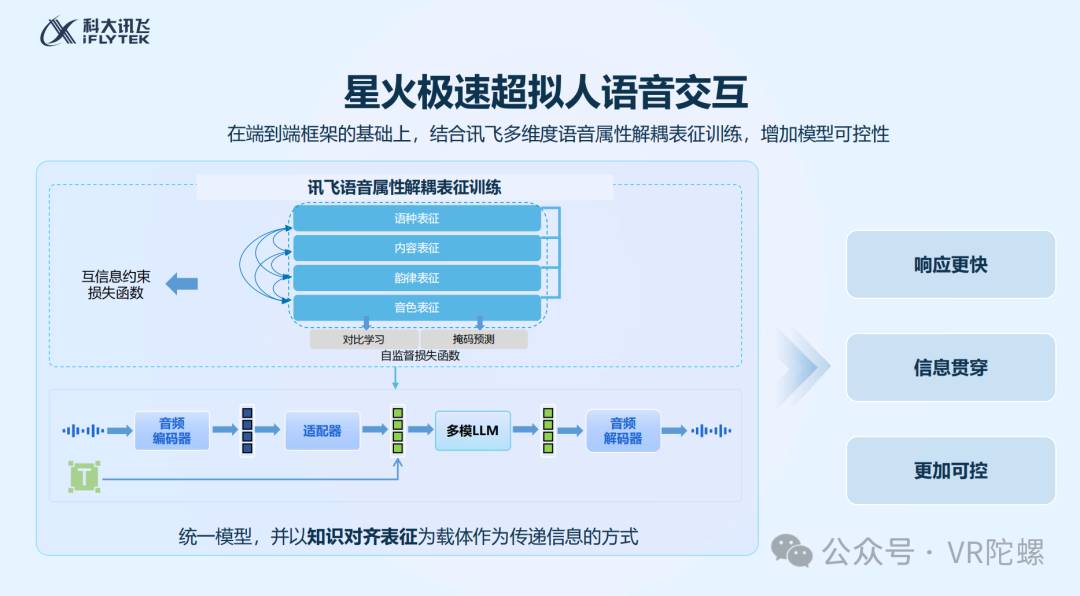

This extremely low latency is a result of the model being trained end to end, with all inputs and outputs processed by the same neural network. Over the past year, many domestic manufacturers have recognized the benefits of end-to-end AI models (especially for voice dialogue) and have begun corresponding research. For instance, iFLYTEK applied an end-to-end unified model framework to its Spark Extreme Superhuman Interaction Technology, released in August last year. Doubao announced at the December Volcano Engine Force Conference that its end-to-end real-time speech model would launch soon. It's anticipated that in the new year, "end-to-end" will become a more frequently used term in the realm of AR glasses.

Source: iFLYTEK

Personalized AI: An AI Agent is the ultimate fantasy for many regarding AI. Unlike terminals like PCs and mobile phones that focus on productivity scenarios, AR glasses should better fulfill our companionship needs. However, based on my experience with AR glasses products, AI still responds somewhat rigidly to user needs, for several reasons.

On one hand, for AI to understand us better, it needs to be more integrated into our lives, such as keeping the camera and microphone in a constant ready state, which poses a significant challenge to device battery life. Of course, this also touches upon privacy concerns.

On the other hand, the "memory capability" of current large AI models remains limited. They can only mechanically retain context up to a fixed number of tokens, so users clearly perceive a "barrier" during interaction, which makes it difficult to build sticky experiences. (If this could be achieved, today's myriad of annual reports would be rendered insignificant.)
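To make the token limit concrete, here is a minimal Python sketch of a rolling context window. It is an illustration under simplifying assumptions, not how any particular model or product manages memory: `RollingContext`, `count_tokens`, and the 12-token budget are hypothetical names and values, and tokens are approximated by whitespace-separated words rather than a real subword tokenizer.

```python
from collections import deque
from typing import Deque, List


def count_tokens(text: str) -> int:
    # Assumption: one whitespace-separated word = one token.
    # Real models use subword tokenizers, so actual counts differ.
    return len(text.split())


class RollingContext:
    """Hypothetical context buffer that keeps only the most recent
    turns fitting inside a fixed token budget."""

    def __init__(self, max_tokens: int) -> None:
        self.max_tokens = max_tokens
        self.turns: Deque[str] = deque()
        self.used = 0

    def add(self, turn: str) -> None:
        self.turns.append(turn)
        self.used += count_tokens(turn)
        # Evict the oldest turns until the budget is respected,
        # always keeping at least the newest turn.
        while self.used > self.max_tokens and len(self.turns) > 1:
            dropped = self.turns.popleft()
            self.used -= count_tokens(dropped)

    def visible(self) -> List[str]:
        # What the model can still "see" when answering.
        return list(self.turns)


ctx = RollingContext(max_tokens=12)
ctx.add("I parked on level three near the elevator")
ctx.add("Remind me to buy milk")
ctx.add("Where did I park my car")
print(ctx.visible())
```

By the time the user asks about the car, the turn that recorded the parking spot has already been evicted, so nothing in the visible context can answer the question. This is the "barrier" described above, and it is why longer context and persistent memory matter for glasses that are meant to accompany the user all day.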

The good news is that there seems to be an imminent breakthrough in the development of AI with superior memory capabilities. Mustafa Suleyman, CEO of Microsoft AI, mentioned in an interview earlier that Microsoft is developing a technology with a "nearly unlimited" memory function. This progress is expected by 2025, enabling AI to retain information indefinitely and thereby transforming user engagement.

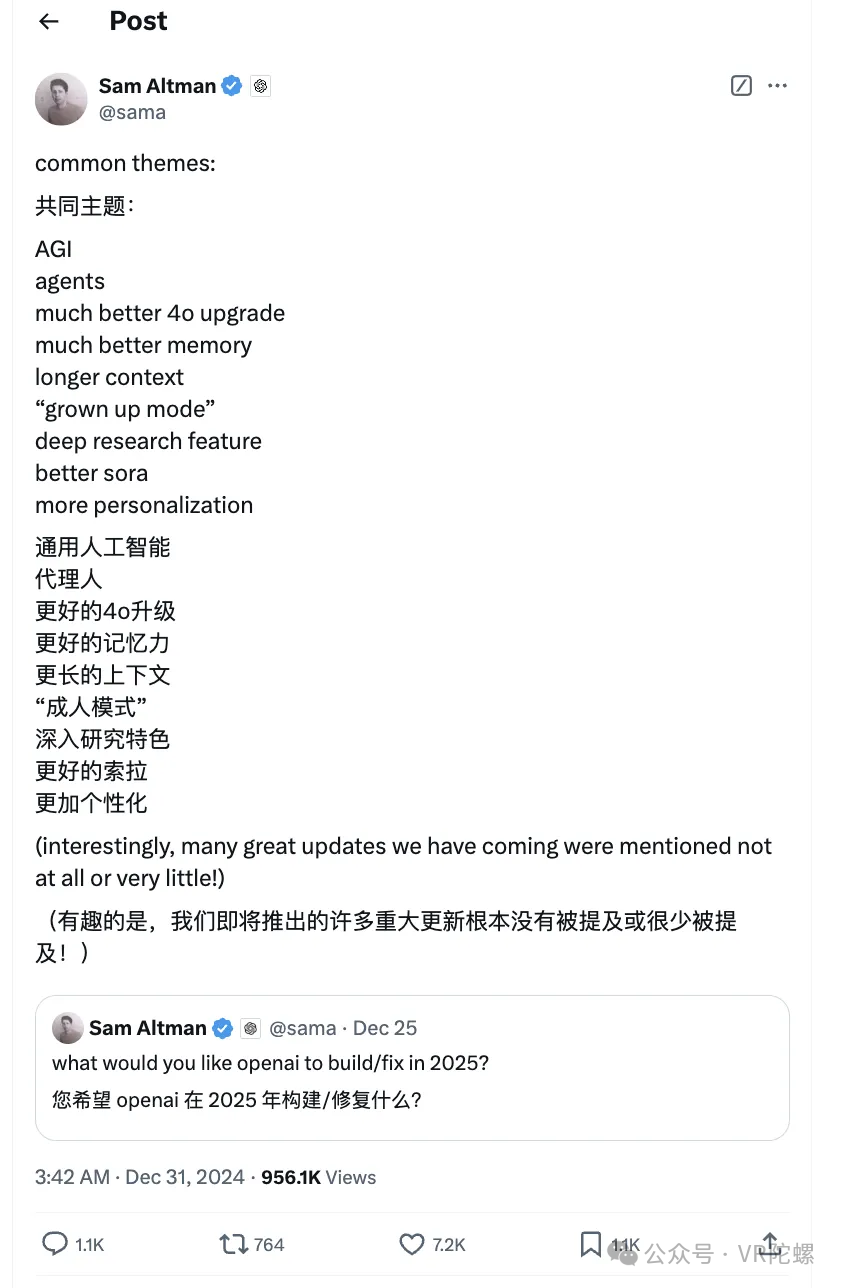

Similarly, Sam Altman, CEO of OpenAI, recently announced the company's new goals, with "better memory and longer context" clearly listed.

Image source: X

In terms of shipped features, both Meta and Google showcased AI capabilities with short-term memory by the close of last year. For instance, Ray-Ban Meta helps users recall where they parked, while Android XR glasses help them remember door codes. Google's recently upgraded AI assistant, Project Astra, also highlights "memory" as a pivotal feature, reportedly capable of retaining background information from prior interactions.

Project Astra, Image source: DeepMind

AI+VR: Expansion of AI Capabilities Bolstered by Soaring Processor Performance

While "AI+glasses" remains the most imaginative application, AI-related use cases for MR products have witnessed a substantial increase.

This trend can be attributed to two main factors. First, AI has become a critical marketing tool. Second, last year's flagship products, including PICO 4 Ultra, Quest 3S, and Project Moohan, adopted the Snapdragon XR2 Gen 2 or XR2+ Gen 2 processor, which offers more AI computing power. Here are some AI features demonstrated by select head-mounted displays:

Quest 3/3S: In July 2024, Meta introduced Meta AI for head-mounted displays, activated by double-clicking the Meta button on the controller. On devices that support full-color video see-through (VST), the AI enables visual search functions, such as identifying plant species in a garden.

PICO 4 Ultra: Features AI-generated desktop backgrounds, AI conversion of 2D Douyin short videos to 3D, and other innovative functionalities.

Project Moohan: Runs the Android XR system, which is deeply integrated with AI, and goes further than the previous two in what it can do. It offers system-level responses and real-time online capabilities, and supports voice interaction and visual search.

I believe that in light office scenarios, Project Moohan's productivity, owing to its large screen and AI features, may rival current PC devices. However, more observation is needed to see what other functions it offers.

Demonstration of the Circle to Search function, Image source: Google

Conclusion: The New Year Promises More Buzz

2025 is poised to be a bustling year for the "AI+XR" sector. For AI glasses, giants like ByteDance and Xiaomi are set to enter the market, with Ray-Ban Meta planning to release an iterative version with a screen. For AR glasses, Samsung, Google, and other manufacturers are expected to unveil new products. Rumors also suggest that VIVO might enter the MR head-mounted display market this year.

With these new entrants, the market will see major shocks in pricing, functional scenarios, and brand influence, leading to fierce competition for survival.

In my view, two areas deserve continued attention this year. First, Google released the XR operating system Android XR late last year, and many brands are expected to adopt it this year. Its introduction should lower the barriers to entry in the XR industry.

Second, I believe that glasses are not the only possible "AI carrier," and the new year may bring innovative new terminal forms.

Let us embark on this journey together in the new year.