AI Milestones: Domestic 10K Chip Cluster and DeepSeek Spark Innovation

02/06 2025

Large AI models are swiftly progressing towards inclusivity.

On February 5, the first working day after the Spring Festival, significant news emerged from the industry: Baidu Intelligent Cloud successfully launched its in-house developed Kunlun 3 10K cluster (a cluster of 10,000 accelerator cards), marking China's first officially introduced self-developed 10K cluster. Beyond addressing its internal computing power needs, this development is anticipated to further reduce the costs associated with large models.

Previously, DeepSeek introduced the V3 and R1 models, which garnered global attention during the Spring Festival due to their competitive performance with OpenAI's leading models and substantial cost reductions.

Behind these continuous advancements, the competition for AI large models has entered a new era—one that transcends mere technological prowess and encompasses a comprehensive competition involving cost, user experience, and ecosystem. The vision of "raising an AI with the cost of a cup of bubble tea every day" is no longer a distant dream, as AI rapidly progresses towards inclusivity.

01

Domestic Self-Developed 10K Cluster Makes Its Debut Following DeepSeek

In fact, following the launch of the new DeepSeek model, the chip industry has been buzzing with activity both domestically and internationally. Companies such as NVIDIA, AMD, and Intel, along with domestic players like Huawei Ascend, Muxi, Tianshuzhixin, Moore Threads, and Haiguang, have all announced support for DeepSeek model deployment and inference services.

On February 5, the first working day after the Spring Festival, Baidu Intelligent Cloud also announced the successful launch of the Kunlun 3 10K cluster. The establishment of this 10K cluster is poised to further drive down the costs of AI models.

Previously, companies like Google, Amazon AWS, and Tesla developed chips aimed at reducing costs and enhancing cost-effectiveness. In China, over the past year, the scarcity of computing power has been a significant factor contributing to the high costs of large models. Through the development of indigenous chips and the construction of large-scale clusters, not only are self-supply issues being resolved, but it is also anticipated that the costs of large models will be further reduced.

Kunlun is an AI chip developed by Baidu, with the first generation launched in 2018.

Over the past two years, there have been few reports on Kunlun outside of Baidu. However, prior to the launch of the 10K cluster, whispers within the industry had already begun to circulate. Speculation suggested that Kunlun 3 chips entered mass production in 2024. Some industry insiders also informed Digital Frontier that in the second half of 2024, they evaluated purchasing servers based on Kunlun 3 chips.

Baidu Chairman Robin Li has repeatedly emphasized that Kunlun is the "cornerstone" of Baidu's AI technology stack, and that self-development capabilities guarantee technological sovereignty in the era of generative AI.

In public presentations in 2024, Baidu stated that Kunlun, working in deep collaboration with the PaddlePaddle deep learning framework and the ERNIE large model, enables end-to-end optimization across the "chip-framework-model-application" stack, thereby enhancing overall performance.

Digital Frontier learned that the previous two generations of Kunlun chips were primarily used for AI deployment and inference services. Kunlun 3 takes a step further, being an AI cloud chip optimized for large models and training.

The newly launched 10K cluster can significantly shorten the training cycle of models with 100 billion parameters while supporting larger models, more complex tasks, and multimodal data, thereby enabling the development of Sora-like applications. The cluster also supports multi-task concurrency: through dynamic resource partitioning, multiple lightweight models can be trained simultaneously on a single cluster. Communication optimization and fault tolerance mechanisms reduce wasted computing power, which in turn sharply lowers training costs.

It is worth noting that the inference market will also be a highlight this year. Digital Frontier learned that domestic and foreign chip companies are vigorously competing for NVIDIA's market share. An AI computing power veteran told Digital Frontier that inference prioritizes the "energy efficiency ratio," comparing computing performance per watt.
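To make the "energy efficiency ratio" concrete, here is a minimal Python sketch that ranks accelerators by inference throughput per watt. The chip names and all figures are hypothetical placeholders chosen for illustration, not measurements of any real product.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Accelerator:
    name: str
    tokens_per_second: float  # hypothetical measured inference throughput
    power_watts: float        # hypothetical average power draw during the run

    def tokens_per_joule(self) -> float:
        # Performance per watt: (tokens/s) / (joules/s) = tokens per joule.
        return self.tokens_per_second / self.power_watts


def rank_by_efficiency(chips: List[Accelerator]) -> List[Accelerator]:
    # Most energy-efficient chip first.
    return sorted(chips, key=lambda c: c.tokens_per_joule(), reverse=True)


# Hypothetical figures: chip_a is faster, chip_b is more efficient.
chips = [
    Accelerator("chip_a", tokens_per_second=2400.0, power_watts=600.0),
    Accelerator("chip_b", tokens_per_second=1500.0, power_watts=300.0),
]

for chip in rank_by_efficiency(chips):
    print(f"{chip.name}: {chip.tokens_per_joule():.1f} tokens per joule")
```

In this made-up comparison the slower chip wins on efficiency, which is the kind of trade-off the veteran's remark points to.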

Baidu's Kunlun chip cluster is expected to join the competition in this market as well. For the inference market, the industry's strategy is to focus on mainstream models and provide excellent adaptation services. In addition to Baidu's own ERNIE Bot, Kunlun has been adapted to a range of models such as DeepSeek.

Baidu's official announcement also mentioned that with the rise of domestic large models, the 10K cluster is gradually transitioning from "single-task computing power consumption" to "maximizing cluster efficiency," with "mixed deployment of training, fine-tuning, and inference tasks" to improve overall cluster utilization and reduce unit computing power costs.

Next, domestic and foreign companies face the challenge of breaking through NVIDIA's CUDA stronghold. Over the past decade, NVIDIA has utilized the CUDA ecosystem to dominate both the training and inference markets. The strength of CUDA lies in its continuous development of application libraries for scenarios such as life sciences, quantitative analysis, and autonomous driving. "If you want to complete an application for drug molecules or autonomous driving, there may already be 100,000 lines of code written on CUDA, and you may only need to write a few hundred more lines to solve the problem," said the aforementioned individual.

Currently, enterprises in China and in countries such as the UK, France, and Canada have shown resilience and tenacity in building the foundations of an AI chip ecosystem. In addition, university laboratories and research institutions in some countries continue to carry out foundational work with government support.

02

"Raising an AI with the Cost of a Cup of Bubble Tea Every Day"

In addition to the latest chip developments, the large model storm ignited by DeepSeek continues, with major cloud computing companies having announced support for DeepSeek model invocation or deployment, sparking a price war to compete for the market.

The enthusiasm of large companies is tied to the immense traffic the DeepSeek model has generated globally. During this Spring Festival holiday, labels such as "mysterious power from the East," "the Pinduoduo of AI," and "raising an AI with the cost of a cup of bubble tea every day" spread widely as the domestic large model DeepSeek drew attention at home and abroad.

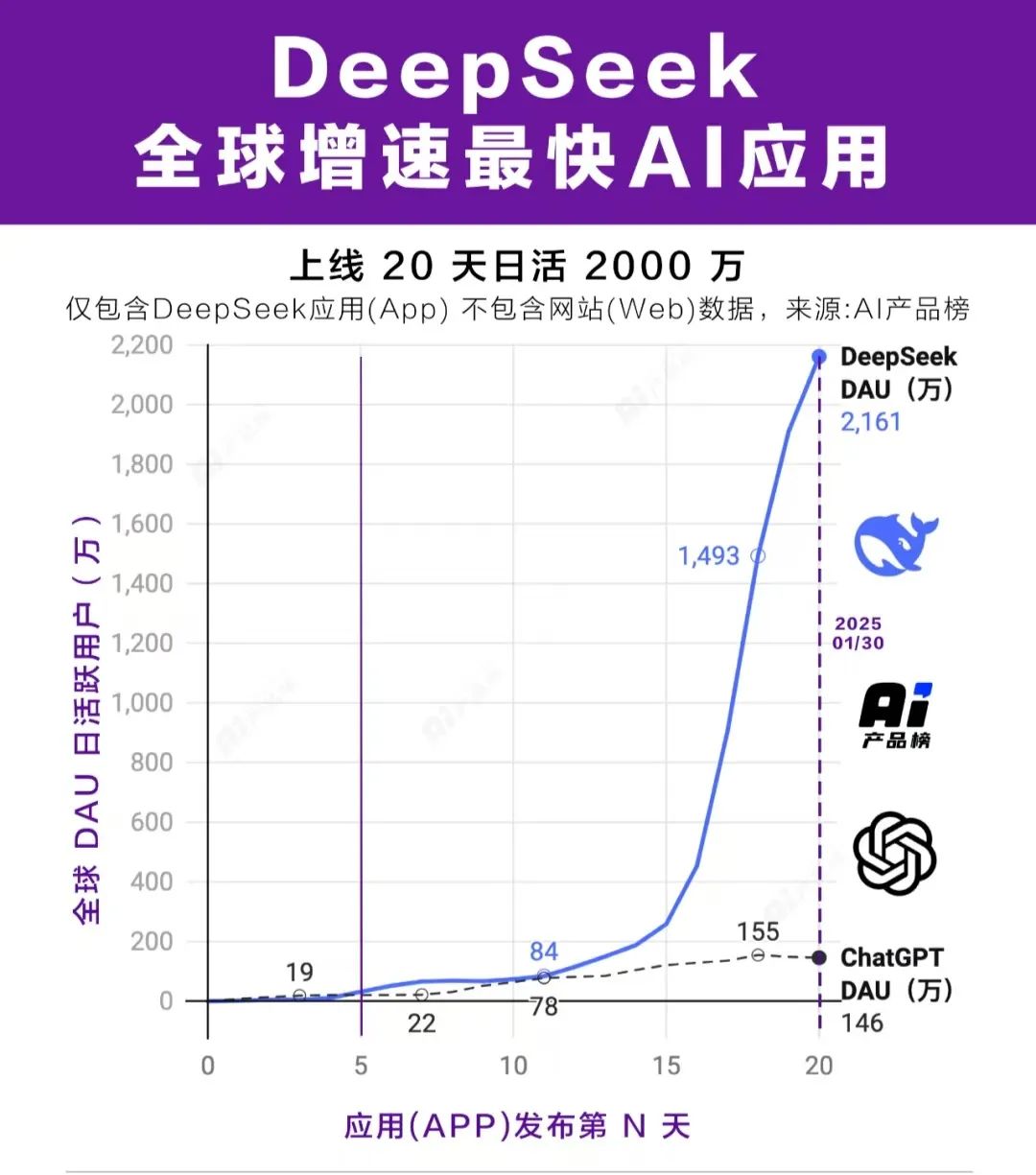

On February 4, the AI Product Ranking released its latest statistics: DeepSeek's app (excluding website data) surpassed 20 million daily active users within 20 days of launch. Within just five days of launch, its daily active users had already exceeded ChatGPT's at the same point after its own launch, making it the fastest-growing AI application globally.

On Weibo, on February 4, "DeepSeek's answer to how to live a good life" once topped the trending topics. On Xiaohongshu, there are already over 490,000 notes related to DeepSeek, with various tutorials and review posts emerging intensively, and some people even engaging in "AI fortune-telling."

"Free use + better results" is the key to attracting ordinary users to try it out.

More critically, DeepSeek dealt a significant blow to OpenAI's pricing system. According to several estimates based on average usage scenarios, the overall cost of DeepSeek-R1 is approximately 1/30 of that of OpenAI's GPT-4 model, allowing people to apply AI at an extremely low cost.

With the explosive popularity of DeepSeek, the price war among large models between technology giants has intensified. Overseas companies such as Microsoft Azure, Amazon AWS, and NVIDIA NIM services have integrated the DeepSeek model, attempting to seize market share through more attractive cost-effectiveness. Domestic operators, including Alibaba Cloud, Baidu Intelligent Cloud, and Volcano Engine, are also unwilling to be outdone, engaging in price competition after integrating the DeepSeek model in various forms.

Some cloud computing companies match DeepSeek's official list prices, while others offer discounts or free quotas on top of them.

Among them, Baidu Intelligent Cloud offered the lowest prices as of February 3: its invocation price for R1 was 50% of DeepSeek's official list price, its invocation price for V3 was 30% of the official list price, and both models were free to use for a limited period of two weeks.
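The discount arithmetic is simple enough to sketch in a few lines of Python. The 50% and 30% ratios come from the report above. The list prices below are hypothetical placeholders, since no actual list prices are quoted here, and the function name is ours.

```python
def discounted_price(list_price: float, fraction_of_list: float) -> float:
    """Price after the platform charges only a fraction of the list price."""
    return list_price * fraction_of_list


# Fractions of the official list price, as reported above.
FRACTION_OF_LIST = {"R1": 0.5, "V3": 0.3}

# Hypothetical list prices per million tokens (placeholder values only).
HYPOTHETICAL_LIST_PRICE = {"R1": 10.0, "V3": 5.0}

platform_price = {
    model: discounted_price(HYPOTHETICAL_LIST_PRICE[model], FRACTION_OF_LIST[model])
    for model in FRACTION_OF_LIST
}

for model, price in platform_price.items():
    saving = HYPOTHETICAL_LIST_PRICE[model] - price
    print(f"{model}: {price:.2f} per million tokens (saves {saving:.2f})")
```

Substituting real list prices for the placeholders gives the actual per-token saving for a given workload.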

The significant drop in the invocation prices of large models has lowered the threshold for using high-quality models, greatly easing enterprises' adoption decisions and quickly igniting the enthusiasm of developers.

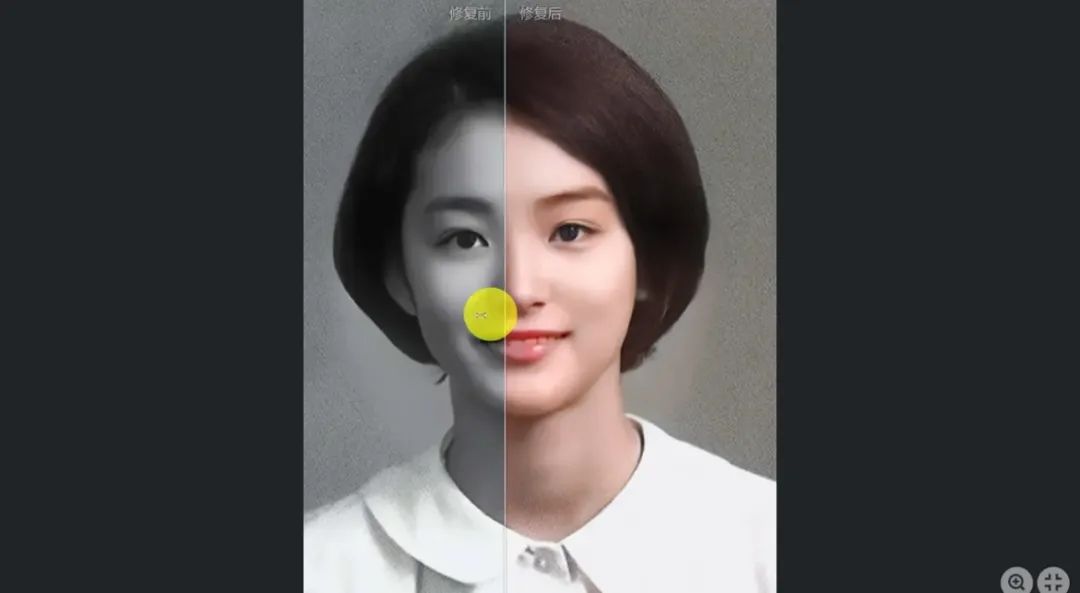

On various technical forums around the world, "DeepSeek" is the hottest topic. On the developer community CSDN, four of the top ten entries on the overall hot list are related to DeepSeek, with related applications rapidly emerging. Some netizens have used DeepSeek to restore old photos to color without writing a single line of code.

In the financial industry, Jiangsu Bank has introduced DeepSeek into its service platform "Wisdom Xiaosu," applying the DeepSeek-VL2 multimodal model and lightweight DeepSeek-R1 inference model to intelligent contract quality inspection and automated valuation reconciliation scenarios, respectively.

A multinational pharmaceutical company has built a drug side effect prediction system based on the DeepSeek-R1 model, combining patient historical data with real-time monitoring to reduce clinical trial risks.

Shanghai Jiao Tong University has begun using DeepSeek-V3 to generate synthetic data for the development of vertical large models.

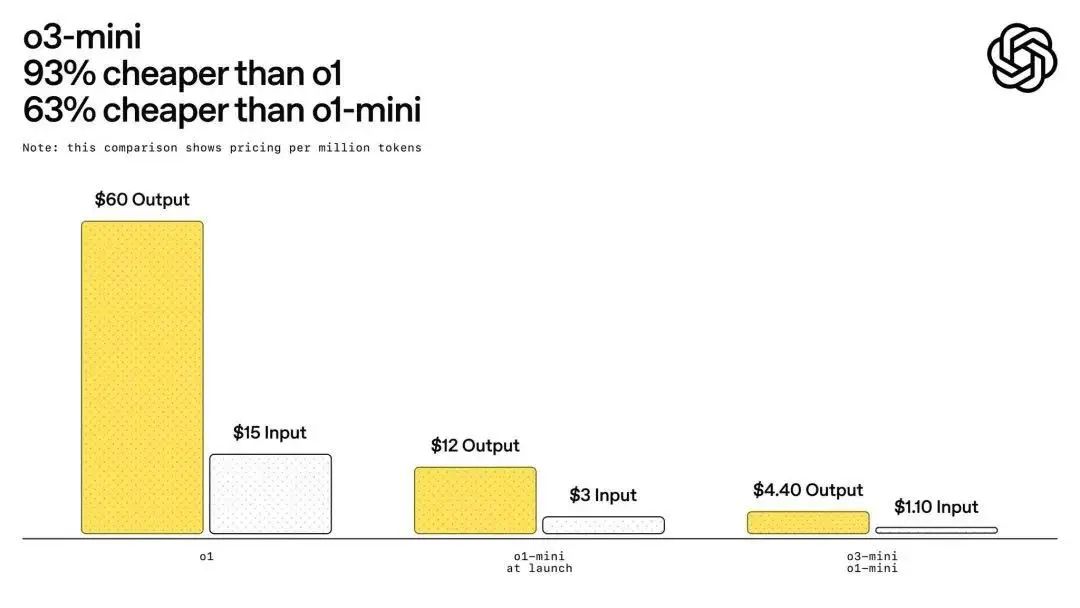

In response to competition from DeepSeek, OpenAI swiftly launched a new model, o3-mini, and reduced its pricing.

Although this price is still higher than DeepSeek's pricing, it represents a significant downward trend.

This round of popularity for DeepSeek also signals that competition among large AI models is no longer limited to technology but has become a comprehensive contest encompassing cost, user experience, and ecosystem.

"Raising an AI with the cost of a cup of bubble tea every day" is no longer a dream. This round of actions in the industry, with their highly competitive price advantages, has not only altered ordinary users' habits of using AI but also sparked a wave of change within the industry, driving the AI industry towards a more inclusive direction.

03

The Popularization Process of Large Models Will Accelerate

Once technology giants and platform forces join in, the process of large model inclusivity triggered by DeepSeek will accelerate.

On February 3, we tried invoking the DeepSeek API on a public cloud, using DeepSeek R1 to explore two use cases:

Use case 1: An AI strategist trial card for Emperor Qin Shi Huang

Use case 2: An old-photo colorizing time machine

Even without any prior technical background, a user can log in to the Baidu Intelligent Cloud website, click on the online experience, complete real-name verification, navigate to the "Model Plaza," and invoke the DeepSeek-R1 and DeepSeek-V3 models with little effort.

Users can also select six models at once from the 67 models provided by Qianfan and let them perform the same task simultaneously, intuitively comparing the effects of the models and ultimately voting with their feet.
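The side-by-side comparison described above amounts to sending one prompt to several models at once and collecting the replies. A minimal Python sketch of that pattern follows. It does not use any real platform API: the "models" are stand-in functions, and `compare_models` is a hypothetical helper name.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


def compare_models(prompt: str, models: Dict[str, Callable[[str], str]]) -> Dict[str, str]:
    """Send the same prompt to every model concurrently and collect the replies."""
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        futures = {name: pool.submit(fn, prompt) for name, fn in models.items()}
        # result() blocks until that model's reply is ready.
        return {name: future.result() for name, future in futures.items()}


# Stand-in "models": plain functions used only for illustration.
stub_models = {
    "model_a": lambda p: p.upper(),
    "model_b": lambda p: p[::-1],
}

results = compare_models("hello", stub_models)
for name, reply in results.items():
    print(f"{name} -> {reply}")
```

In practice each stand-in function would be replaced by a call to the corresponding model endpoint, with the replies shown next to one another for the user to judge.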

This is where the platform's advantage lies: it brings together open-source and closed-source models across modalities, akin to the "Didi Chuxing" of the AI world. It allows users to freely choose the most cost-effective model services by comparing prices and quality, while also enabling intelligent "carpooling" and multimodal collaboration to complement model capabilities and enhance application depth.

In terms of various supporting services, the leading cloud platforms also exhibit an extremely fast response speed in the construction and improvement of capabilities such as one-stop development toolchains, full-lifecycle security mechanisms, and industry solutions.

In terms of toolchains, although large models have been booming for two years, the threshold remains high, which makes user-friendly tools a necessity. For example, we found that on GitHub, sorted by number of stars, the most popular DeepSeek projects include DeepSeek-Tools, a toolset that assists developers in using DeepSeek, and DeepSeek-AutoML, which helps developers automatically select and optimize hyperparameters for DeepSeek models.

Cloud giants have also invested heavily in toolchains. Baidu Intelligent Cloud's Qianfan large model platform, for example, does not directly list a DeepSeek toolkit, but it brings together a range of similar tools for data processing, workflow orchestration, model fine-tuning, model evaluation, and model quantization, among others.

Enterprise users who build applications on the DeepSeek model may worry about training data leakage, generated content that fails to meet regulations, and malicious attacks on the model during inference. Each cloud platform provides security mechanisms to address these concerns.

According to news reports, when Baidu Intelligent Cloud integrated DeepSeek into the Qianfan inference pipeline, it added Baidu's proprietary content security operator to safeguard generated content. Its data security product ensures that the model is used only by the inference program and that training data is used only by the model fine-tuning program. Additionally, the BLS log analysis and BCM alert functions integrated into the Qianfan platform help users with stringent security requirements, such as those in finance or healthcare, develop more secure and reliable intelligent applications.

Furthermore, the extensive industry coverage and tailored solutions accumulated by cloud platforms aid developers in achieving swift replication and scenario adaptation across various industries, enabling DeepSeek to rapidly penetrate these vertical fields.

As enterprises gradually shift their focus from model training and fine-tuning to inference, the support and optimization of the inference process have become paramount. Baidu Intelligent Cloud has undertaken specific optimizations for DeepSeek, including extreme performance enhancements for computations within the model's MLA structure. By effectively overlapping different resource type operators, such as computation, communication, and memory access, and implementing an efficient Prefill/Decode separate inference architecture, Baidu has managed to increase throughput dramatically while maintaining SLA compliance for core latency indicators (TTFT/TPOT), resulting in significantly reduced inference costs.
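The two latency indicators named above can be computed from the timestamps of a streamed response. The Python sketch below uses the common definitions: TTFT is the time from request to first output token, and TPOT is the average time per subsequent output token. It is not Baidu's measurement code, and the timestamps and SLA thresholds are hypothetical.

```python
from typing import List, Tuple


def latency_metrics(request_time: float, token_times: List[float]) -> Tuple[float, float]:
    """Return (TTFT, TPOT) in seconds for one streamed response.

    TTFT: time from sending the request to receiving the first output token.
    TPOT: average gap between consecutive output tokens after the first.
    """
    if not token_times:
        raise ValueError("no tokens were generated")
    ttft = token_times[0] - request_time
    if len(token_times) == 1:
        return ttft, 0.0  # a single token has no inter-token gap
    tpot = (token_times[-1] - token_times[0]) / (len(token_times) - 1)
    return ttft, tpot


def meets_sla(ttft: float, tpot: float, max_ttft: float, max_tpot: float) -> bool:
    """Check both indicators against (hypothetical) SLA thresholds."""
    return ttft <= max_ttft and tpot <= max_tpot


# Hypothetical arrival times (seconds) of five tokens for a request sent at t = 0.
ttft, tpot = latency_metrics(0.0, [0.5, 0.75, 1.0, 1.25, 1.5])
print(f"TTFT = {ttft:.2f} s, TPOT = {tpot:.2f} s")
print("within SLA:", meets_sla(ttft, tpot, max_ttft=1.0, max_tpot=0.3))
```

In a Prefill/Decode separated setup, TTFT is driven mainly by the prefill stage and TPOT by the decode stage, which is why the two are tracked as separate indicators.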

Qianfan supports multiple mainstream inference frameworks, empowering developers to select the most suitable inference engine based on their actual scenarios. For instance, vLLM is known for its high throughput and memory efficiency, making it well suited to large-scale model deployment, while SGLang performs strongly on both latency and throughput relative to other mainstream frameworks. Moreover, Qianfan allows users to import and deploy custom models, providing flexibility for DeepSeek development.

With the involvement of large corporations and platform enterprises, AI inclusivity is poised to be one of the key development themes this year. As large models transition from being "toys for the rich" to becoming "rations for ordinary people," the lowered innovation threshold will foster greater creativity, ultimately enabling humans to transcend the limitations of ability and resources. This includes small shop owners leveraging AI to design trendy packaging, middle school students developing campus assistants using open-source models, and rural doctors utilizing multimodal tools to aid in diagnosis. This intelligent revolution, which welcomes participation from all, allows every ordinary person to stand on the shoulders of AI and grasp a future that was once beyond reach.