Revolutionizing Edge AI: Collaborative Optimization of "Computing Power x Communication x Storage" Unlocks Commercial Value

02/19 2025

This marks my 360th column article.

Craft a personal voice assistant for just 80 yuan, or build a sophisticated robot capable of large model access, gesture recognition, and touch interaction for only 358 yuan... DeepSeek's open-source, lightweight, and cost-effective nature has ushered in an era of AI accessibility for all.

Against this backdrop, there's no excuse for enterprises to shy away from AI-driven innovation.

Furthermore, the generative AI wave sparked by DeepSeek is fundamentally a dividend of edge AI.

DeepSeek's emergence compels us to reevaluate AI's return on investment (ROI), recognizing that edge AI will be a new avenue for maximizing ROI.

Historically, large generative AI models have faced an ROI dilemma between cost and value. While models like DeepSeek continue to enhance capabilities, their training and inference costs remain exorbitant, limiting commercial deployment ROI. Currently, AI investment logic revolves around computing power scale and model capabilities, but the sustainability of this approach is being questioned.

The crux of AI deployment lies in minimizing computing costs.

Traditional cloud-based AI computing's high investment is prohibitive for many enterprises, particularly small and medium-sized ones. If AI models can't operate in a cost-effective, low-power environment, their commercial applications will be limited, hindering ROI improvement.

Large models like DeepSeek offer novel solutions to this challenge.

From a corporate perspective, edge AI R&D was once the domain of large enterprises. However, with DeepSeek's low-cost inference technology, SMEs can now integrate powerful AI features into products like AI toys and glasses, accelerating intelligent edge hardware adoption.

In edge AI applications, lightweight versions of large models like DeepSeek can be deployed locally, reducing computing costs and improving inference efficiency. Paired with dedicated AIoT chips, the inference process is further optimized, lowering cloud computing power consumption and boosting overall ROI.

This model is ideal for smart manufacturing, smart hardware, and autonomous driving scenarios, poised to propel large-scale AI commercial deployment.

This article delves into the edge AI dividend triggered by DeepSeek:

AI ROI is undergoing a reassessment: Have massive AI investments truly boosted productivity? How will next-gen AI models like DeepSeek impact ROI calculations?

From "model capability" to "implementation efficiency": AI investment focus is shifting from "building more powerful models" to "creating a more efficient integrated architecture of sensing, perception, and intelligence".

Large models like DeepSeek necessitate a change in ROI assessment. It should no longer hinge solely on larger-scale computing power investments but prioritize computing architecture optimization.

Edge AI's rise offers new avenues for AI model commercial deployment, poised to significantly enhance AI investments' long-term value.

Both AI innovation and ROI are crucial.

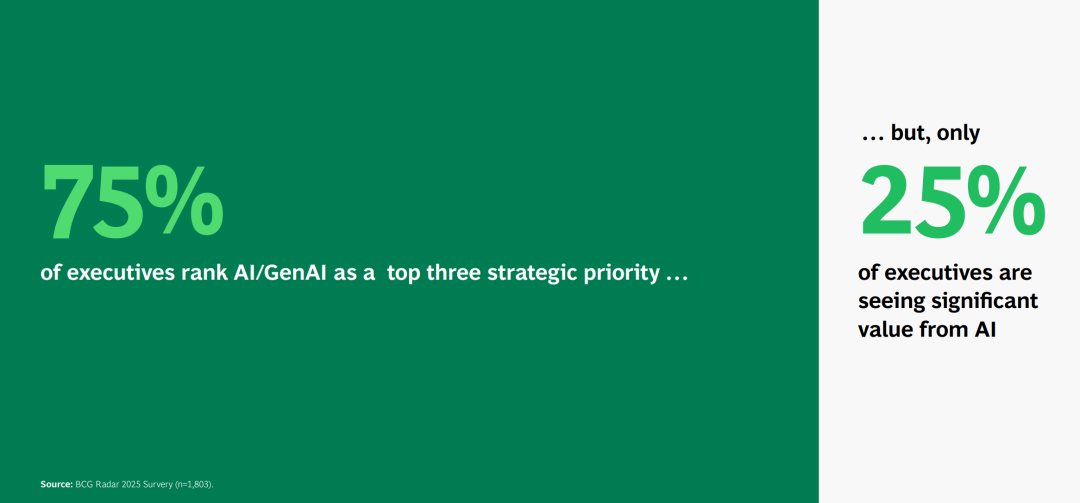

Recently, the capital market has been obsessed with AI investment, yet the ROI of actual commercial deployment is still debated. A Boston Consulting Group (BCG) survey revealed that 75% of enterprises haven't seen a return on their AI investments. The reasons behind this are thought-provoking.

In BCG's 2025 study, 1,803 global corporate executives were surveyed, with one-third planning to invest at least $25 million in technology upgrades this year.

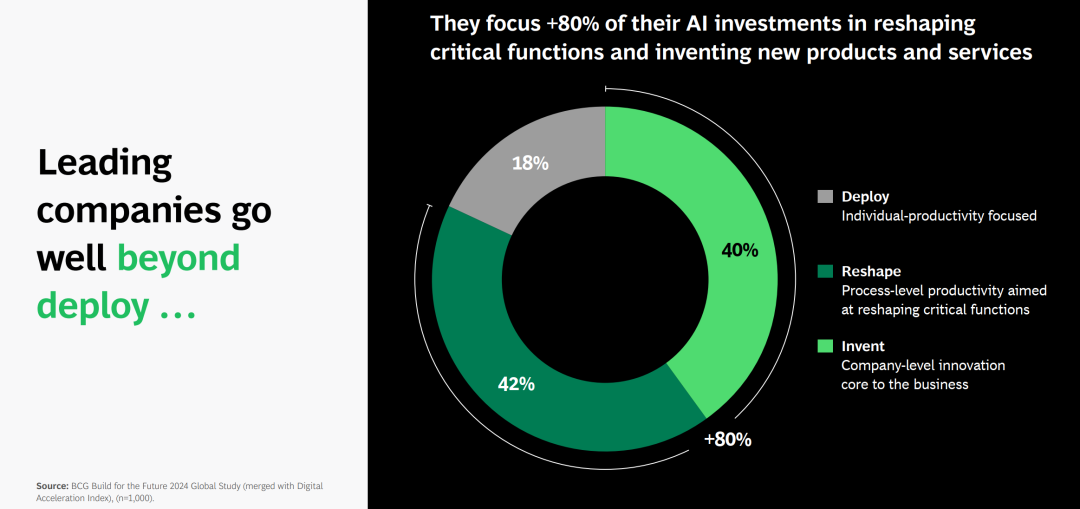

However, only about 25% of companies have seen an ROI. BCG found that companies achieving excellent ROI focus over 80% of their AI investments on reshaping key business functions and developing new products and services.

DeepSeek paves the way for creating new products, services, and business models with reduced trial-and-error costs.

Enterprises or individual developers can download DeepSeek to any laptop, enabling edge-side model operation without specialized hardware, significantly accelerating edge computing development.

Edge devices process data closer to the source, reducing latency and bandwidth usage. This helps IoT enterprises predict customer needs, take action on their behalf, and operate efficiently in localized environments.
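To make the bandwidth point concrete, here is a minimal back-of-the-envelope sketch. It compares a device that uploads every raw camera frame with one that runs inference locally and uploads only short event messages. The function name `daily_upload_bytes` and every figure in it (frame rate, frame size, event count, event size) are hypothetical assumptions chosen for illustration, not measurements.

```python
SECONDS_PER_DAY = 86_400


def daily_upload_bytes(items_per_day: int, bytes_per_item: int) -> int:
    """Total bytes sent upstream in one day."""
    return items_per_day * bytes_per_item


# Assumed cloud-only setup: 10 frames per second, about 100 kB per frame.
raw_frames_per_day = 10 * SECONDS_PER_DAY
raw_bytes = daily_upload_bytes(raw_frames_per_day, 100_000)

# Assumed edge setup: inference runs on the device, which uploads
# 864 small event messages per day (one every 100 seconds), 200 bytes each.
event_bytes = daily_upload_bytes(864, 200)

reduction = 1 - event_bytes / raw_bytes

print(f"raw upload:   {raw_bytes / 1e9:.1f} GB/day")
print(f"event upload: {event_bytes / 1e3:.1f} kB/day")
print(f"bandwidth reduction: {reduction:.4%}")
```

Under these assumed numbers, almost all upstream traffic disappears. Real savings depend on how often events occur and how much raw data still has to be sent to the cloud.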

A closer look at DeepSeek-R1 reveals real-time data processing and analysis capabilities that enable faster, smarter terminal and edge devices.

This capability is invaluable in real-time decision-making scenarios like autonomous vehicles, industrial automation, and smart cities. Leveraging edge LLMs, enterprises achieve faster data processing, higher prediction accuracy, and enhanced user experiences.

DeepSeek is more than "China's ChatGPT"; it signifies a leap in global AI innovation. By reducing the cost, time, and effort of building models, it allows more researchers and developers to experiment, innovate, and try new models.

New models like DeepSeek propel AI's widespread adoption by easing the computing power cost, inference efficiency, and data monopoly issues that have constrained ROI realization. DeepSeek's algorithm-level optimization of inference costs creates more room for domestic computing power chips and computing cluster networks. Reduced software and hardware costs will also stimulate domestic AI applications, creating a positive industrial development cycle.

Edge AI's Triangle Law: Collaborative optimization of "computing power x communication x storage" determines commercial value.

AI investment logic is shifting from "purely pursuing more powerful models" to "how to use AI more efficiently".

As mentioned in "Megabyte/Quectel/Mobilex/Rihai... Collaborate, Deepseek Catalyzes the 'First Year of Edge AI'?", as large AI models shift from the cloud to the edge, an "edge AI revolution" is unfolding. In this revolution, communication modules, as the bridge between the physical and digital worlds, play a vital role, attracting industry attention. In 2025, led by the DeepSeek wave, the AIoT industry may usher in the "first year of edge AI".

Why will communication module and storage hardware enterprises become key players in edge AI's core competition?

Edge AI's computing architecture reshapes AI investment logic: from "stronger" to "more efficient", collaborative optimization of computing power, communication, and storage determines commercial value.

Historically, AI investment focused on "larger parameter scale + stronger generalization ability", but future key indicators will be "lower power consumption + faster inference speed", i.e., achieving efficient AI inference and application deployment with limited computing power.

Future AI ROI assessments will not only focus on model capabilities but also measure the "computing costs vs. commercial benefits" balance. The combination of communication and computing power, or computing power and storage, will distinguish edge AI from cloud AI.
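The "computing costs vs. commercial benefits" balance can be sketched with the classic ROI formula, (benefit - cost) / cost. The example below is only an illustration: the `Deployment` class, its cost categories, and all the figures are hypothetical assumptions, not data from BCG or any vendor.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    """Hypothetical yearly figures for one AI deployment (illustrative only)."""
    name: str
    hardware_cost: float  # device or server spend, amortized per year
    compute_cost: float   # yearly inference cost (cloud fees or device energy)
    network_cost: float   # yearly data-transfer cost
    benefit: float        # yearly commercial benefit attributed to the AI feature

    @property
    def total_cost(self) -> float:
        return self.hardware_cost + self.compute_cost + self.network_cost

    @property
    def roi(self) -> float:
        # Classic ROI: net gain divided by total cost.
        return (self.benefit - self.total_cost) / self.total_cost


# All numbers below are made up for illustration.
cloud = Deployment("cloud", hardware_cost=0.0, compute_cost=80_000.0,
                   network_cost=20_000.0, benefit=120_000.0)
edge = Deployment("edge", hardware_cost=30_000.0, compute_cost=10_000.0,
                  network_cost=10_000.0, benefit=120_000.0)

for d in (cloud, edge):
    print(f"{d.name}: cost={d.total_cost:.0f}, ROI={d.roi:.2f}")
```

With the same assumed benefit, the deployment with the lower combined computing, communication, and hardware cost shows the higher ROI. This is why the cost side of the ratio matters as much as model capability.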

According to Dongwu Securities, edge computing power demand will grow at over double the rate from 2024 to 2027, maintaining a high double-digit growth rate from 2027 to 2030.

Under this trend, edge AI is at the forefront of AI computing architecture innovation. It not only represents the next AI application growth point but is also pivotal in redefining large models' commercial value.

Integrated communication-computing or storage-computing solutions are expected to become mainstream. Compared to multi-chip solutions, an integrated design offers a simpler architecture, lower costs, better power consumption management, and tighter integration. It also minimizes system switching latency, making AI computing smoother, which suits smart homes, wearable devices, and industrial IoT.

This architectural upgrade not only optimizes edge devices' computing capabilities but also furthers edge AI adoption, providing a better AI computing commercialization solution.

Matrix for Reassessing Edge AI's Commercial Value

Traditional AI commercial value assessment models are no longer applicable to edge AI, necessitating new assessment dimensions and indicators.

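Below is a matrix for reassessing edge AI's commercial value, comparing traditional AI with new-generation edge AI across four dimensions:

| Dimension | Traditional AI | New-generation edge AI |
| --- | --- | --- |
| Core indicator | Absolute performance (e.g., accuracy) | Efficiency density (accuracy per watt) |
| Value anchor | Parameter count | Inference energy efficiency ratio |
| Competitive barrier | Data scale | Architectural innovation |
| Business model | Cloud API calls billed by usage | Intelligent hardware sales plus subscription services |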

Let's delve into this commercial value reassessment matrix:

Core Indicators: From "Absolute Performance" to "Efficiency Density"

Traditional AI models prioritize absolute performance like accuracy, but edge AI emphasizes efficiency density, i.e., achieving higher performance under limited power consumption. Accuracy per watt has become a new edge AI commercial value metric.
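As a rough illustration of "accuracy per watt", the sketch below divides a model's task accuracy by its average power draw during inference and ranks candidates by the result. The helper `accuracy_per_watt`, the model names, and the numbers are hypothetical assumptions. The article does not prescribe an exact formula, so treat this as one plausible reading of the metric.

```python
def accuracy_per_watt(accuracy: float, avg_power_watts: float) -> float:
    """Efficiency density: task accuracy divided by average inference power."""
    if avg_power_watts <= 0:
        raise ValueError("avg_power_watts must be positive")
    return accuracy / avg_power_watts


# Hypothetical candidates: (accuracy on some benchmark, average power in watts).
candidates = {
    "large_cloud_model": (0.95, 300.0),
    "small_edge_model": (0.90, 5.0),
}

# Rank by efficiency density, highest first.
ranked = sorted(
    candidates,
    key=lambda name: accuracy_per_watt(*candidates[name]),
    reverse=True,
)

for name in ranked:
    acc, watts = candidates[name]
    print(f"{name}: {accuracy_per_watt(acc, watts):.4f} accuracy per watt")
```

In this made-up comparison, the smaller model loses a few points of absolute accuracy but ranks far ahead once power consumption is taken into account.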

Value Anchor: From "Scale Worship" to "Efficiency Revolution"

Historically, AI model value was anchored on parameter count, on the belief that larger models would yield better performance. However, in the edge AI era, the inference energy efficiency ratio has emerged as a new value anchor, focusing on efficient inference with limited computing power.
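One way to put a number on inference energy efficiency is tokens generated per joule of energy, where energy in joules equals average power in watts multiplied by time in seconds. This particular definition, the function `tokens_per_joule`, and the sample figures are assumptions for illustration. The article itself does not define the ratio.

```python
def tokens_per_joule(tokens: int, avg_power_watts: float, seconds: float) -> float:
    """Tokens generated per joule of energy consumed during inference."""
    energy_joules = avg_power_watts * seconds  # E = P * t
    if energy_joules <= 0:
        raise ValueError("power and duration must both be positive")
    return tokens / energy_joules


# Hypothetical measurements over a 10-second inference run.
edge_device = tokens_per_joule(tokens=200, avg_power_watts=10.0, seconds=10.0)
gpu_server = tokens_per_joule(tokens=2000, avg_power_watts=400.0, seconds=10.0)

print(f"edge device: {edge_device:.2f} tokens/J")
print(f"GPU server:  {gpu_server:.2f} tokens/J")
```

In this assumed scenario the server produces ten times more tokens, yet the edge device does more work per unit of energy. That contrast is what an efficiency-based value anchor is meant to capture.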

Competitive Barrier: From "Data Monopoly" to "Architectural Innovation"

Traditional AI competition centered on data scale, giving data-rich enterprises an advantage. However, in edge AI, architectural innovation is the new competitive barrier. More efficient, intelligent computing architectures will determine enterprises' market positions.

Business Model: From "Software Services" to "Hardware-Software Integration"

Traditional AI business models rely on cloud API calls, with users paying based on usage. In the edge AI era, hardware and service integration has become the new norm. Enterprises achieve sustained revenue through intelligent hardware sales and subscription services.

Edge AI ROI optimization paths include leveraging low-cost edge computing chips to reduce data center resource dependence; optimizing inference efficiency and energy efficiency ratio to lower terminal device AI computing costs; and enhancing data utilization and reducing redundant computations through intelligent storage and communication technologies.

Historically, AI investments focused on improving model capability, pursuing larger parameter scales and more complex neural network architectures. However, the balance between computing costs and commercial benefits is now the core consideration for AI investment.

Future AI investment will shift from "stronger AI" to "more efficient AI" and from "pure software innovation" to "hardware-software integration". AIoT chips, edge devices, and optimization algorithms will redefine large models' commercial value.

Thus, AI's commercial value will no longer be solely determined by model capabilities but by the computing costs vs. commercial benefits balance. Only AI architectures balancing commercial value across computing power, power consumption, storage, and communication dimensions can achieve sustainable growth. Edge AI's rise will steer the industry towards a more pragmatic, sustainable development path.

Final Thoughts

Edge AI is reshaping AI's investment logic and value assessment system. Enterprises must shift from purely pursuing model performance to a more comprehensive, balanced AI efficiency assessment. Collaborative optimization of computing power, communication, and storage will be crucial in determining AI's commercial value.

DeepSeek demonstrates edge AI's huge potential and is expected to significantly lower the threshold for AI applications, enabling more SMEs and innovators to join the AI wave. At the same time, edge AI places new requirements on chips, communication, and storage, driving coordinated upgrades across the industrial chain.


References:

1. Megabyte/Quectel/Mobilex/Rihai... Collaborate, Deepseek Catalyzes the "First Year of Edge AI"? Source: IoT Wisdom

3. "Deploy a Personal Voice Assistant for Just 80 Yuan"! DeepSeek Ushers in the Era of AI Accessibility, and Edge Applications Unleash Boundless Imagination. Source: Securities Daily