Humanoid Robots Versus Autonomous Driving: Who to Follow? (Concept Stocks Attached)

03/11 2025

At the dawn of 2025, the humanoid robot sector stormed the capital market with unprecedented fervor: Changsheng Bearing became the sector's first 'ten-bagger' stock, and the sector index has surged more than 40% since the start of the year. Investors are backing this 'general-purpose revolution' with real money.

However, even as Tesla accelerates its Optimus mass-production plans and consumer-grade robots from Yushu Technology (Unitree) sell out instantly, the industry still faces a stark paradox: why is it so hard to turn laboratory breakthroughs into everyday assistants for ordinary households?

01. Without AGI, Are Robots Merely 'Toys'?

From the current technological standpoint, humanoid robots that lack Artificial General Intelligence (AGI) do struggle to shed the label of 'high-end toys'. Their hardware can complete basic tasks, but true intelligence hinges on breakthroughs in the underlying models. The following analysis covers three facets: technology, data, and commercialization.

The core value of humanoid robots lies in their natural compatibility with the human physical world. All the infrastructure of human society, from stair heights and tool sizes to the interaction logic of operating interfaces, is designed around ergonomics. For instance, in on-site training at automobile factories, the UBTech Walker S series robot can already handle tasks such as car door assembly and parts sorting, because it fits into working environments built for humans.

However, this compatibility advantage depends on general intelligence. Current humanoid robots can perform single tasks under preset programs (such as handling and quality inspection), but when the environment changes suddenly (for example, parts scattering because the floor is slippery) they still fall back on preset algorithm libraries and lack independent judgment. Boston Dynamics' Atlas parkour performance may look astonishing, but in reality each set of movements is meticulously debugged frame by frame by engineers, which essentially makes the robot a 'precision puppet'.

Robot intelligence requires a closed loop of 'perception-decision-execution', in which data quality and model generalization are the core bottlenecks. Take Tesla's Optimus as an example: its technological path relies on multimodal data accumulated from autonomous driving (vision, radar, driving behavior), but that data is still confined to specific scenarios.

True generalization must encompass long-tail scenarios like home care and medical emergencies, placing higher demands on data diversity.
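The 'perception-decision-execution' closed loop mentioned above can be illustrated with a toy sketch. Everything in it is an assumption made for illustration: the names `perceive`, `decide`, `execute`, and `run_loop`, the single distance reading, and the rule-based decision step that stands in for a learned model. It is not how Optimus or any real robot is implemented.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Observation:
    obstacle_distance_m: float  # hypothetical single sensor reading


def perceive(raw_distance_m: float) -> Observation:
    """Perception: turn a raw sensor value into a structured observation."""
    return Observation(obstacle_distance_m=max(0.0, raw_distance_m))


def decide(obs: Observation, stop_threshold_m: float = 0.5) -> str:
    """Decision: a fixed rule standing in for a learned model."""
    return "stop" if obs.obstacle_distance_m < stop_threshold_m else "advance"


def execute(action: str, position_m: float, step_m: float = 0.25) -> float:
    """Execution: apply the chosen action and return the new position."""
    return position_m + step_m if action == "advance" else position_m


def run_loop(obstacle_at_m: float, max_steps: int = 20) -> Tuple[float, List[str]]:
    """Repeat perceive -> decide -> execute until the robot stops."""
    position, actions = 0.0, []
    for _ in range(max_steps):
        obs = perceive(obstacle_at_m - position)
        action = decide(obs)
        actions.append(action)
        if action == "stop":
            break
        position = execute(action, position)
    return position, actions


if __name__ == "__main__":
    final_position, history = run_loop(obstacle_at_m=2.0)
    print(final_position, history)
```

The fixed threshold in `decide` is exactly the kind of preset rule the article criticizes: it works in the one scenario it was written for, and the generalization bottleneck is about replacing it with a model that copes with situations nobody anticipated.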

Currently, leading enterprises are split between two approaches: UBTech accumulates industrial scenario data through on-site factory training, while Physical Intelligence and others attempt to give robots semantic reasoning abilities using vision-language models (VLMs). However, neither approach has moved beyond the 'task-based intelligence' framework, and both remain a generation away from the causal reasoning and abstract generalization that AGI requires.

Cost and market acceptance pose a dual constraint. High-precision components (such as tactile dexterous hands and multi-axis joint motors) push the unit cost to hundreds of thousands of dollars, far beyond what ordinary consumers can afford. Even though Tesla says it will cut Optimus's unit price, the robot's functions are still limited to factory assembly lines and can hardly extend to household scenarios.

The deeper contradiction is this: if robots can replace only 20% of basic human labor, their economic value is insufficient to justify investment in mass production, yet achieving a higher replacement rate requires crossing the critical threshold of AGI technology. This 'chicken and egg' cycle keeps today's humanoid robots largely in the technical validation stage.

Whether humanoid robots can transcend the 'toy' stage depends on the synchronized pace of AGI technological breakthroughs and physical carrier evolution. In the short term, specialized robots for specific scenarios (like industrial inspections and medical assistance) will lead the way; in the long run, only by achieving deep integration of the 'brain' (general model) and the 'cerebellum' (motion control) can humanoid robots truly become 'digital life forms' in human society.

02. Now, More Focus Should Be on Autonomous Driving Players

As a product of the deep integration of artificial intelligence and the automotive industry, autonomous driving technology is graded by the Society of Automotive Engineers (SAE) classification, which spans six levels from L0 (fully manual driving) to L5 (fully autonomous driving). Among them, L2 is defined as 'partial automation': the vehicle controls steering and acceleration/braking at the same time (for example, adaptive cruise control combined with lane keeping), but the driver must constantly monitor the environment and take over when necessary.

The defining feature of this stage is collaborative decision-making between system and driver, and its technical core lies in multi-sensor fusion (cameras, radars, etc.), environmental perception algorithms, and real-time control. Current mainstream systems such as Tesla Autopilot and Toyota Safety Sense sit at this stage. Their underlying logic is to improve driving safety and comfort through algorithm optimization in limited scenarios, but they cannot handle complex traffic environments.
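For reference, the six SAE levels and the supervision rule described above can be written down as a small lookup. This is a minimal sketch: the level names follow the SAE J3016 taxonomy, while `SAELevel`, `LEVEL_NAMES`, and `driver_must_monitor` are hypothetical names introduced only for this example.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    L0 = 0
    L1 = 1
    L2 = 2
    L3 = 3
    L4 = 4
    L5 = 5


# Level names as used in the SAE J3016 taxonomy.
LEVEL_NAMES = {
    SAELevel.L0: "no driving automation",
    SAELevel.L1: "driver assistance",
    SAELevel.L2: "partial automation",
    SAELevel.L3: "conditional automation",
    SAELevel.L4: "high automation",
    SAELevel.L5: "full automation",
}


def driver_must_monitor(level: SAELevel) -> bool:
    """Up to and including L2, the human driver must supervise continuously."""
    return level <= SAELevel.L2


if __name__ == "__main__":
    for level in SAELevel:
        print(level.name, LEVEL_NAMES[level], driver_must_monitor(level))
```

The boundary between L2 and L3 is the one that matters for the comparison with humanoid robots: below it the human is the fallback at all times, above it the system carries the task within its stated conditions.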

Notably, the path of humanoid robots toward intelligence mirrors the technological evolution of autonomous driving. According to public statements by XPeng Motors Chairman He Xiaopeng, the current development level of humanoid robots is akin to the early stage of L2 autonomous driving.

This pattern of 'semi-autonomous operation in limited scenarios' closely matches the 'system assistance + human fallback' model of L2 autonomous driving. The two share the same core bottleneck, namely insufficient generalization in environmental understanding: autonomous driving must conquer long-tail scenarios such as extreme weather and unmarked roads, while humanoid robots must handle challenges such as grasping moving objects and coordinating multiple tasks in unstructured environments.

Following the logic of technological development, enterprises with deep algorithmic expertise will inevitably dominate the humanoid robot field in the future. Their advantage shows up primarily in three dimensions:

First, the cross-scenario migration capability of multimodal perception algorithms. Tesla applied the visual algorithm architecture of Autopilot to the Optimus robot, enabling it to perform flexible operations like folding clothes without prior programming, validating the commercial value of algorithm versatility.

Second, the large-scale implementation of swarm intelligence and cloud collaboration. The 20 Walker S1 robots deployed by UBTech in the Zeekr factory achieve multi-robot task scheduling and collaborative control through the 'BrainNet' architecture. Its cloud inference model's ability to process hundreds of millions of industrial datasets directly determines the robot cluster's working efficiency.

This technical route, which transplants the idea of vehicle-road coordination from autonomous driving to robot swarm operations, reduces the per-unit cost by 30% while increasing overall operational efficiency fourfold. Notably, the reinforcement learning frameworks and distributed computing architectures that such systems rely on are the core technological moats of leading autonomous driving companies.

Third, the closed-loop iteration of simulation platforms and real data. XPeng Motors applies its autonomous driving simulation test platform to humanoid robot training. Through tens of thousands of hours of collision detection and motion trajectory optimization in a virtual environment, the Iron robot's assembly error is controlled within millimeters, significantly enhancing efficiency compared to traditional debugging methods.

The synergistic effect of policies and industrial ecosystems will further amplify leading enterprises' advantages. The 'Humanoid Robot Industry Cluster Action Plan' issued by the Shenzhen Municipal Government in 2025 explicitly stated that enterprises achieving multi-robot collaborative operations will receive a 15% research and development subsidy, prompting companies like UBTech to accelerate their swarm intelligence technology layout in industrial scenarios.

At the same time, the capital market's strong endorsement of the 'algorithm + scenario' dual-drive model has allowed startups like Zhiyuan Robotics, founded only two years ago, to reach a valuation exceeding $2 billion. Its rapidly iterated embodied intelligence model has achieved millimeter-level precision assembly in 3C electronics manufacturing.

It is foreseeable that the 'L3 breakthrough period' of humanoid robots (where the system dominates decision-making and humans intervene only in emergencies) will come first at manufacturers with abundant algorithm reserves.

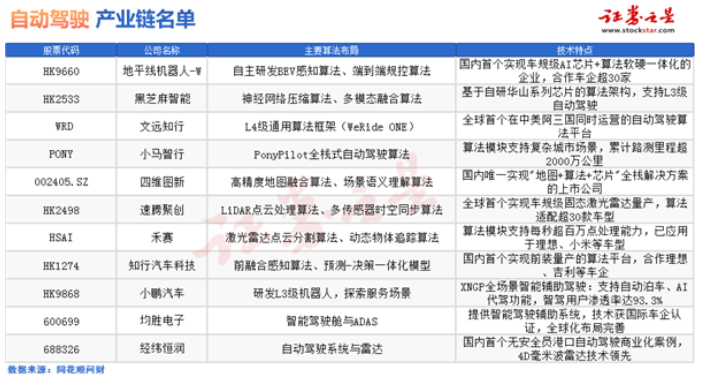

Horizon Robotics (HK9660) is the first domestic enterprise to achieve synergistic optimization of super AI chips and algorithms, with a migration volume of 300,000 on its cooperation platform. Black Sesame Technologies (HK2533) focuses on neural network compression algorithms and multimodal fusion algorithms, supporting L3 autonomous driving technology based on its self-developed Huashan series chip architecture. WeRide (WRD) has developed an L4 general algorithm platform, becoming the world's first autonomous driving algorithm platform operating concurrently in China and the United States.

Pony.ai (PONY) provides full-stack autonomous driving algorithms, and its technical modules can handle complex urban scenarios, with a cumulative road test mileage exceeding 20 million kilometers. NavInfo (002405.SZ) specializes in high-precision map fusion algorithms and semantic understanding algorithms, and is the only listed company in China that integrates a global solution of 'map + algorithm + chip'. RoboSense (HK2498), with LiDAR point cloud processing algorithms and multi-sensor spatial-temporal synchronization algorithms at its core, has pioneered mass production of automotive-grade dynamic LiDAR, compatible with 300,000 point cloud processors.

Hesai Technology (HSAI)'s LiDAR point cloud segmentation and dynamic tracking algorithms support millions of point cloud processing capabilities per second, already applied to models such as Li Auto and Xiaomi. Zhixing Automotive Technology (HK1274) has developed a front-fusion perception algorithm and an integrated model of prediction and decision-making, creating the first domestic pre-installed mass-produced algorithm platform, with cooperating automakers including Li Auto and Geely. Joyson Electronics (600699) specializes in intelligent cockpits and ADAS systems, with internationally certified technology and a global layout. HiRain Technologies (688326) leads in 4D millimeter-wave radar technology, implementing the country's first commercial case of driverless port operation. Additionally, XPeng Motors' (HK9868) XNGP intelligent assisted driving system supports automatic parking and AI valet functions, with a smart driving user penetration rate of 93.3%.

These enterprises can migrate their technology at a lower marginal cost, drawing on the perception and prediction algorithms, high-precision control models, and large-scale data training infrastructure they accumulated during the autonomous driving era. As the industry advances to the L4 stage (highly autonomous operation within defined scenarios), the patent barriers, partner ecosystems, and vertical scenario databases they have established will become insurmountable competitive barriers. This industrial transformation, led by algorithmic advantages, is reshaping the productivity landscape in manufacturing, logistics, home services, and other fields.
