AI "Pretending to Be Grandsons" and Manipulating the Elderly

03/13 2025

A "Cyber Anti-Scam Guide" for the Elderly

"Last National Day holiday, other people got 7 days off, but I got scolded for 8 straight days."

Recently, Lei Jun said during the Two Sessions that last year netizens used AI face-swapping and voice cloning to turn him into a stand-in "mouth" and "face" for all sorts of videos. He wanted to take legal action against these "pirated" versions of himself but found there was no dedicated legislation. He could only sue under laws on privacy, portrait rights, and reputation rights, all of which require the plaintiff to quantify losses, and this caused him considerable trouble.

Source: Douyin Screenshot

AI videos are now commonplace on social platforms, and AI-generated people have become realistic enough to deceive viewers. Among the many "AI scams," Lei Jun is far from the biggest victim: those who fall for the lures of these "AI humans" are almost all unsuspecting middle-aged and elderly people.

01. "AI Grandsons" Manipulating the Elderly

"After my grandmother got hooked on a fake grandson on Douyin, I fell out of favor." Lu Jun's (a pseudonym) grandmother is 79 and has watched short videos of chubby children every day since the Spring Festival. The children in the videos wear little cotton-padded jackets and bellybands, and every time they call out "Grandma," she laughs out loud.

Look closely, though, and the children's skin is unrealistically smooth, the backgrounds are partly blurred, and their fingers are oddly sized and placed. The traces of AI generation are heavy, and to many young people the videos are "obviously fake."

Source: Douyin Screenshot

Middle-aged and elderly viewers, however, are barely able to recognize AI-generated content. Lu Jun has told his grandmother more than once that the videos are "fake" and AI-generated, and some videos even carry an AI-generation notice underneath, but she doesn't care. Lu Jun feels powerless: "The first time I told my grandmother, she was a bit shocked, but she kept watching afterward. She doesn't care whether the kids are real or fake; she just thinks anything that makes her happy is good."

"AI babies" tap into the often-neglected emotional needs of middle-aged and elderly people. Some netizens even treat AI babies as a fertility wishing well, hoping their own families will have a baby like the ones in the videos, and leave comments such as "take the baby."

Source: Douyin Screenshot

As a pastime, this is harmless enough. The worry is that elderly family members believe whatever these AI figures tell them. Liu Ran's (a pseudonym) grandfather often forwards tips from "health experts" to the family group chat. When Liu Ran clicked on one, he found that the so-called expert his grandfather cited was actually an AI-generated digital human.

Liu Ran holds a medical degree and is now pursuing a doctorate in nephrology, yet his grandfather still argues with him about health and disease prevention. The grandfather trusts the digital "experts" in short videos more than his own grandson, even though the "expert" on this account holds no recognized credentials.

Source: Douyin Screenshot

To many young people, their elders' trust in digital humans is as baffling as their earlier devotion to "anti-intellectual marketing accounts." Liu Ran feels powerless, especially since his grandfather also went to university: "So-called 'teachers' and 'experts' online say a few casual words and they take it as the truth, yet they won't listen to the truth from their own relatives."

"Interesting Business Insights" has observed that social platforms are now flooded with AI accounts targeting middle-aged and elderly users. Most of their videos feature AI-generated human figures that win older viewers' attention and trust with scripted patter such as flattery and homespun life philosophy. Some of these accounts have already earned their first pot of gold by turning that "trust" into storefront product sales.

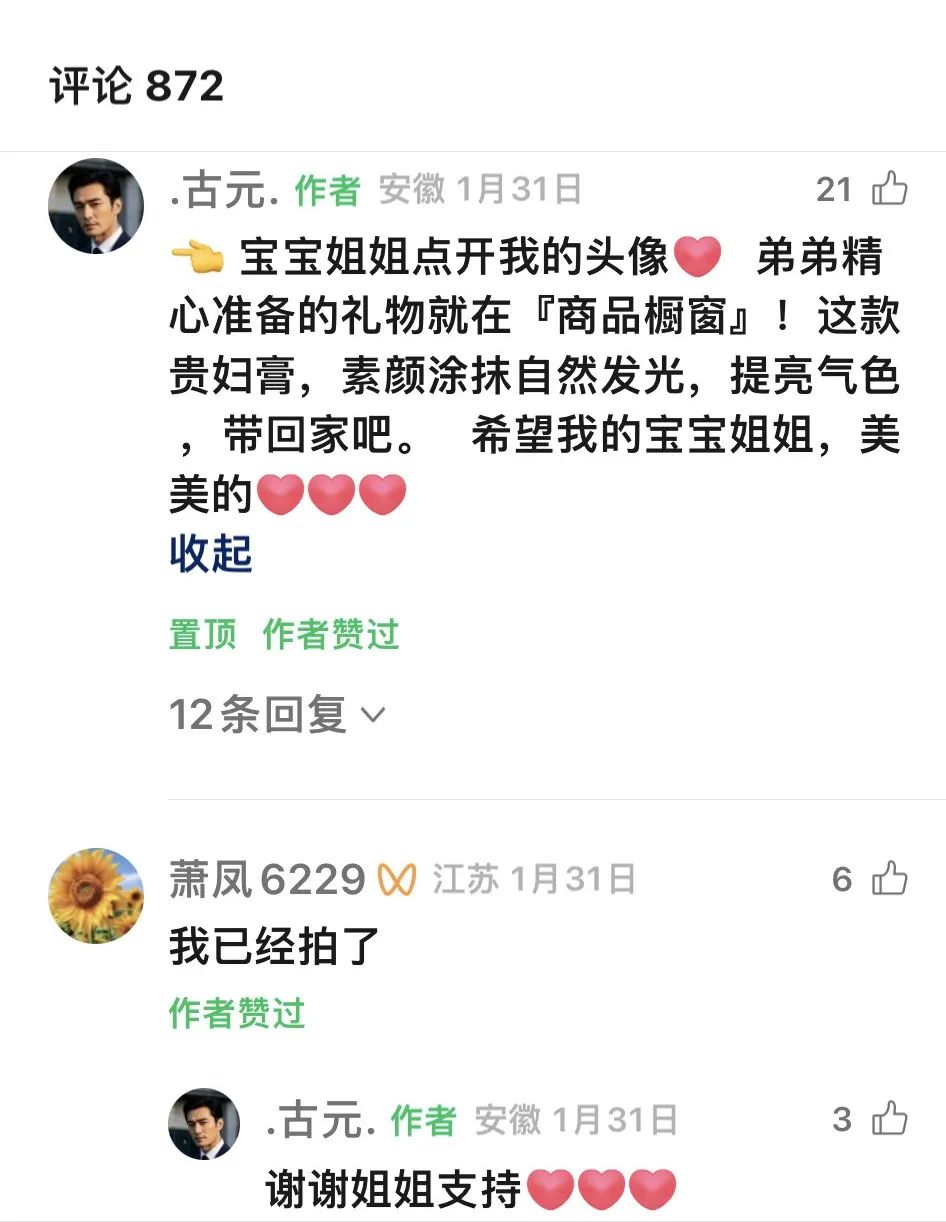

Source: Video Number Screenshot

For example, the Video Number account "·Gu Yuan·" shown above targets elderly women who crave attention and care. Most of its videos open with "My precious sister," and the content revolves around the daily lives of elderly women: "I've noticed my sister looking haggard lately, so your little brother ran all over to find this face cream that's just right for my sister." The videos then direct viewers to the account's homepage to follow it and place an order.

Although the account has not posted many videos, sales of its storefront products have already reached three figures, and most of the comments come from users moved by the gentle words of the "man" in the videos.

Source: Video Number Screenshot

In fact, health and emotional content have long been prime territory for "harvesting" the elderly. Whether someone gets scammed usually has little to do with their level of education; what hooks them is the emotional value the videos provide.

According to an earlier report by "Lanjing News," digital-human videos targeting the elderly have grown into a gray-market industry chain. Outsourcing companies that produce digital humans have appeared, charging 5,000 to 10,000 yuan to build digital-human personas for clients and coach them in running the accounts.

Many AI accounts play on the emotions of the elderly to sell products. More worrying still, as the technology iterates rapidly, AI face-swapping scams aimed at middle-aged and elderly people have become increasingly common.

At the Two Sessions, besides Lei Jun's complaint about being turned into an AI, CPPCC member Jin Dong also spoke about the dangers of AI face-swapping, saying that some fans of his films and TV dramas had been badly deceived by face-swapped videos.

Source: Video Screenshot

According to "Interesting Business Insights," last year an elderly woman in Jiangxi was taken in by face-swapped videos and tried to take out a 2-million-yuan loan to fund her online "boyfriend," "Jin Dong," in making a film.

02. "Two-Pronged Approach" to AI Development and Governance

Public vigilance cannot keep pace with technological progress. Not only the elderly but also younger, reasonably tech-savvy people have fallen victim to AI fraud.

According to a report by "Beijing Business Today," in a case disclosed in February 2024, fraudsters used deepfake technology to fabricate videos of company executives speaking, inducing an employee to transfer a total of HK$200 million to designated accounts in 15 transactions.

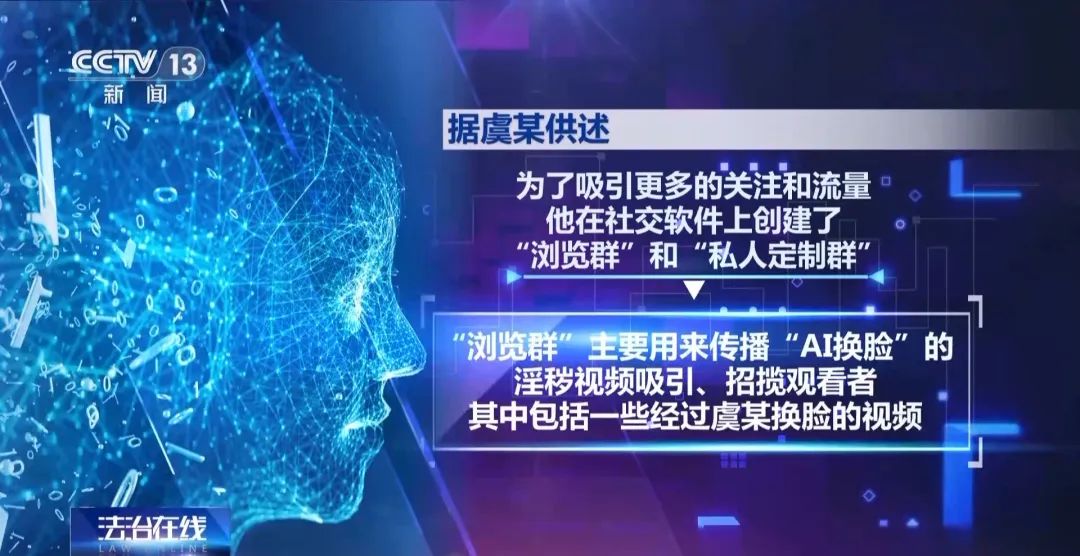

There have also been criminal cases in which AI face-swapping was used to produce pornographic videos and to defraud medical insurance funds.

In one Hangzhou case, offenders used AI face-swapping to fake pornographic videos of female celebrities and even set up group chats to take orders for custom face-swapped videos, charging according to each video's length and difficulty. The Xiaoshan District Procuratorate in Hangzhou eventually seized more than 1,200 obscene videos from the group.

Source: CCTV News Screenshot

AI makes forgery easier and easier. Fraud can be punished according to its consequences, but AI-generated misinformation online is far harder to stamp out.

After the earthquake in Tingri, Tibet, in early January this year, a picture of a "little boy buried in rubble" went viral on social media but was later shown to be AI-generated. The same month, when wildfires broke out in Los Angeles, a photo of the Hollywood sign on fire spread across Chinese and international social platforms; official media likewise debunked it as an AI fake. More worrying, even after these official debunkings, many netizens still take the AI images for real photos.

Source: Weibo Screenshot

Unchecked forgery must come at a price. Zhang Zihang, a lawyer at Beijing Zhoutai Law Firm, said that when someone deliberately distorts the facts of a public event, uses AI or other technical means to create images or videos, and spreads them on social platforms with harmful effects, the producer's liability should be pursued first; the producer may face administrative or criminal liability. Social platforms that publish the false information may also bear legal liability.

"AI security" was also a hot topic at this year's Two Sessions. Qi Xiangdong, a member of the National Committee of the Chinese People's Political Consultative Conference and chairman of QiAnXin Technology Group, proposed tackling AI security from three angles, namely technical safeguards, institutional safeguards, and the application of results, to systematically strengthen security capabilities.

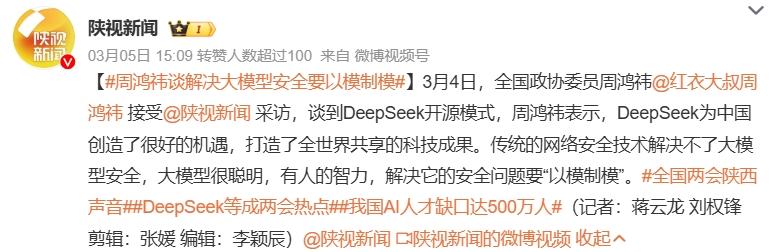

Zhou Hongyi, a member of the National Committee of the Chinese People's Political Consultative Conference and founder of 360 Group, argued that traditional cybersecurity solutions cannot solve AI security problems. The security of AI agents, knowledge data, clients, and base models together makes up a new field of AI security. He suggested that companies versed in both security and AI take the lead in securing large-model applications by building dedicated security large models, using models to safeguard models.

Source: Weibo Screenshot

Platforms are now tightening their governance of AI content across the board: Douyin, Video Numbers, Xiaohongshu, and others have all introduced policies for managing AI-generated content. "Interesting Business Insights" learned that internet companies such as Tencent are already developing third-party AIGC detection tools, which can detect AI-generated text, images, and other content through methods such as similarity search, content detection, and watermark tracing.

Lisa, an AI researcher at a leading internet company, said that as generated content grows ever more realistic, the distinguishing features between real and generated images keep shrinking, which makes it harder for detection models to learn to tell them apart.

Reducing AI deception will take combined efforts: platform supervision, greater user awareness, and better laws and regulations.

On September 14, 2024, the Cyberspace Administration of China (State Internet Information Office) released the "Measures for Labeling AI-Generated and Synthetic Content (Draft for Comment)" for public consultation. The measures aim to standardize the labeling of AI-generated and synthetic content, safeguard national security and the public interest, and protect the lawful rights and interests of citizens, legal persons, and other organizations. The successive rollout of such regulations and policies gives enforceable governance more concrete footholds.

New technology calls for new regulatory systems, which cannot rely entirely on the self-discipline of tech companies and businesses; it takes regulators and platforms working together. AI's applications are broad and hard to define, but the boundaries of law and morality remain clear. Policy oversight and platform review will inevitably tighten, and the resulting changes to the content ecosystem on social platforms deserve continued attention.