How Sensor Fusion Empowers Autonomous Vehicles to 'See' the World with Greater Clarity

04/07 2025

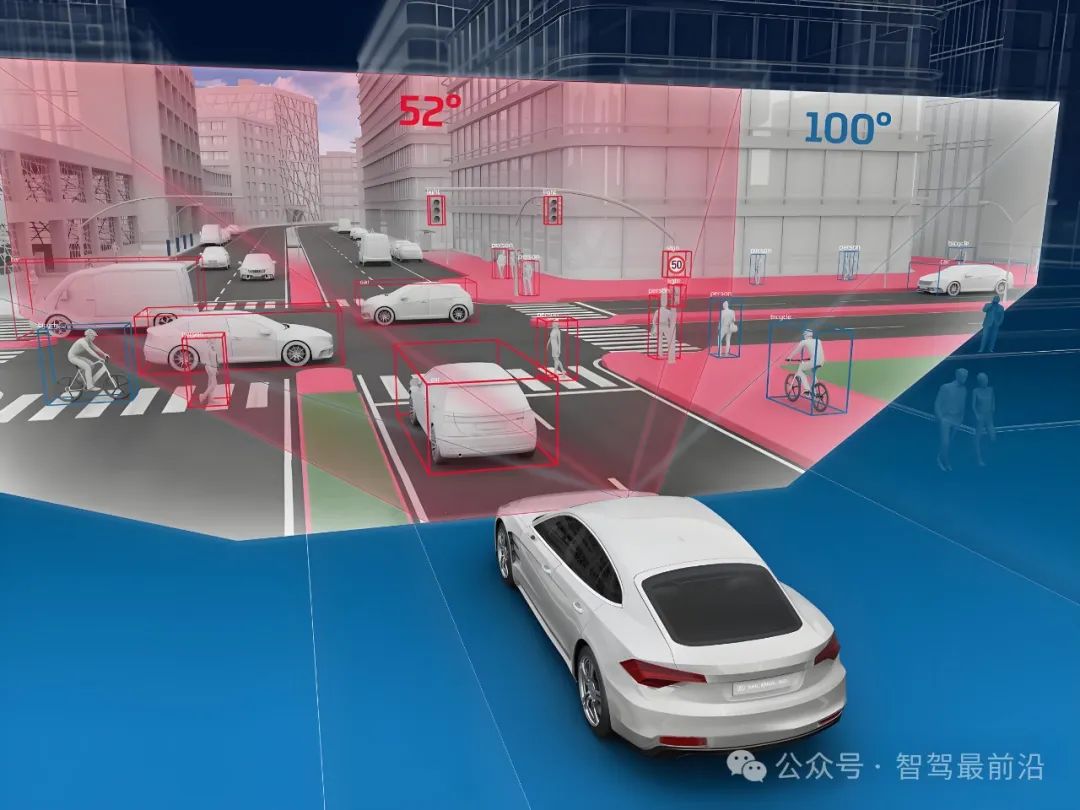

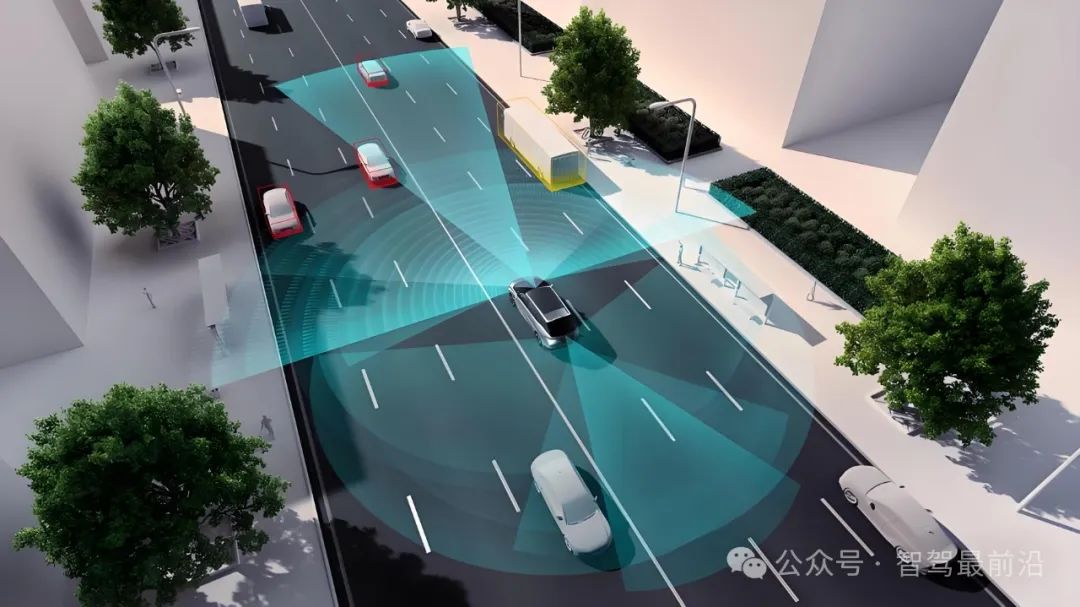

Autonomous driving technology represents a major shift in the future of transportation, aiming to deliver safe, efficient, and convenient travel. Within this intricate system, the environmental perception system is a pivotal component: it functions as the 'eyes' of the autonomous vehicle, letting it comprehend the external world and providing the basis for autonomous decision-making.

The environmental perception system must collect, process, and analyze, in real time, both dynamic and static information surrounding the vehicle, including other vehicles, pedestrians, traffic signs, road conditions, and weather. This information directly affects the precision and safety of path planning and control decisions. Indeed, the capability of the perception system largely determines the vehicle's level of intelligence and its ability to adapt to complex scenarios.

With the rapid evolution of sensor technology, artificial intelligence, and data processing capabilities, the perception system for autonomous driving has undergone significant advancements. Initially reliant on single-sensor data collection, it now achieves comprehensive environmental information perception through multi-modal sensors. Furthermore, the utilization of cooperative perception technology expands the perception range and enhances accuracy, transforming the perception system into a highly complex and technology-intensive research domain.

Overview of the Autonomous Driving Perception System

The perception system for autonomous driving serves as a core component of the vehicle's autonomous navigation and the technological bedrock for achieving high-level autonomous driving functions. Acting as both the 'eyes' and 'ears' of the vehicle, it gathers information about the external environment through various sensors, converting this data into a format that machines can comprehend and analyze. This provides vital support for the path planning and vehicle control of autonomous driving. The crux of this system lies in its capacity to perceive the dynamic environment in real-time and with precision, thereby aiding the vehicle in making safe and effective decisions.

In the realm of autonomous driving perception systems, the primary objective of environmental perception is to comprehensively understand both static and dynamic elements in the surrounding environment, encompassing lane lines, traffic signs, other vehicles, pedestrians, and potential obstacles. By accurately perceiving these elements, the vehicle can construct a clear environmental model in complex traffic scenarios, providing a solid foundation for subsequent driving decisions.

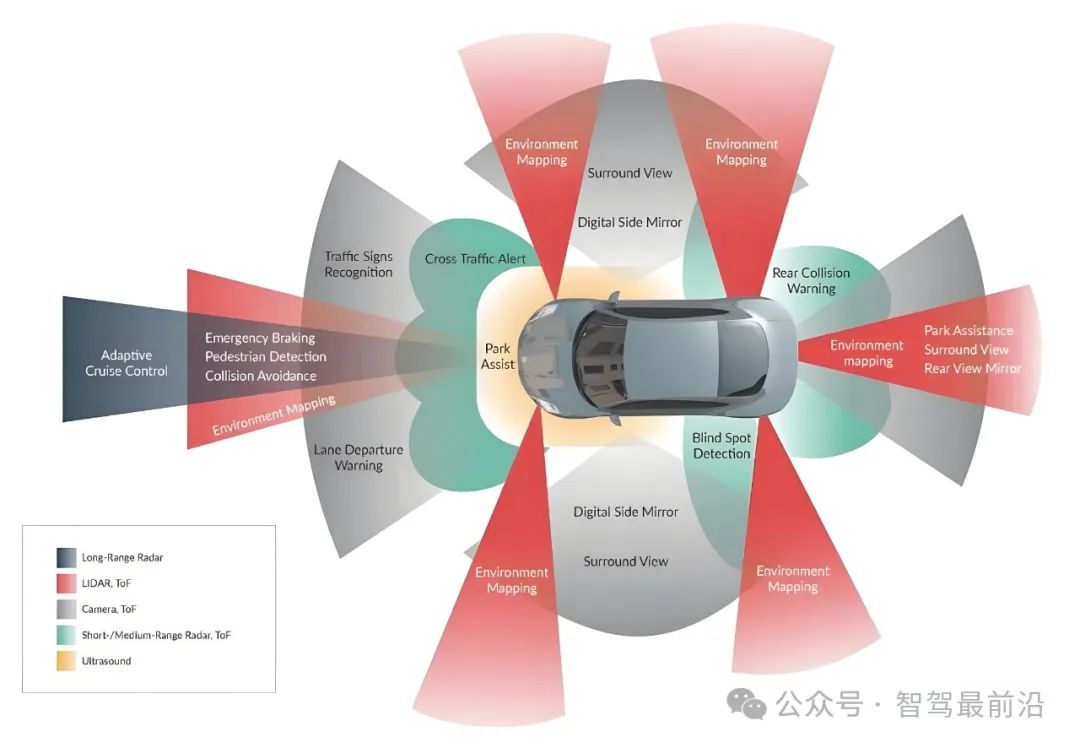

The implementation of the perception system hinges on the collaborative operation of multiple technical modules, including sensor data collection, feature extraction, data fusion, and semantic analysis. Data collection is the starting point. Through the combination of various sensors such as LiDAR, cameras, millimeter-wave radars, and ultrasonic sensors, the perception system can cover perception needs from long range to short range. Feature extraction applies algorithms to pull useful information out of raw data, such as detecting vehicle boundaries, segmenting pedestrian contours, and identifying road signs. Data fusion then integrates information from the different sensors into a unified environmental model, mitigating the shortcomings of individual sensors. For instance, LiDAR offers high-precision 3D point clouds but struggles to differentiate object types, whereas cameras supply visual information that strengthens the system's semantic recognition capabilities.

Moreover, the design of the autonomous driving perception system must meet the criteria of efficiency and reliability. In complex driving scenarios, the system must process vast amounts of data within a brief timeframe and provide accurate recognition and analysis results. Hence, modern perception systems frequently leverage artificial intelligence technology, particularly deep learning algorithms, which have achieved remarkable progress in target recognition and classification. To address the challenges posed by diverse extreme weather and lighting conditions, the perception system has undergone multi-layered optimization in terms of sensor hardware design and algorithm robustness.

The perception system for autonomous driving is not merely a vital tool for vehicles to comprehend the external world but also the cornerstone of achieving safe and intelligent driving. With the unceasing development of technology, the perception system is progressing towards higher precision, lower cost, and enhanced robustness, solidifying its increasingly significant position within the overall technological framework of autonomous driving.

Core Technologies of the Autonomous Driving Perception System

The core technologies of the autonomous driving perception system encompass multi-modal sensors, data fusion technology, and high-precision maps and positioning technology. These technologies synergize to enable vehicles to perceive the surrounding environment comprehensively and accurately, process complex scenarios, and make real-time decisions. With the advancement of hardware performance and the incorporation of artificial intelligence algorithms, these technologies are propelling the perception system to new heights of sophistication.

Multi-modal sensor technology forms the bedrock of the perception system, and each sensor plays a distinct role in different application scenarios. LiDAR is widely used to build geometric models of the surrounding environment because it provides high-precision 3D point cloud data. In complex urban settings especially, its high spatial resolution and ranging capability significantly improve obstacle recognition and mapping accuracy. However, the high cost of LiDAR and its degraded performance in rain and fog remain obstacles to wider adoption. Cameras, which most closely mimic human eyes, capture rich semantic information and are used to recognize lane lines, traffic signs, pedestrians, and vehicle types. They perform well in sunny, well-lit conditions but may struggle with difficult lighting such as glare, deep shadows, and nighttime. Millimeter-wave radars excel at measuring speed and distance and operate reliably in rain, snow, and other low-visibility conditions. However, their spatial resolution is insufficient for accurately identifying static or complex-shaped objects. Ultrasonic sensors (often called ultrasonic radars) are mostly used for short-range obstacle detection, such as during parking, but their limited detection range makes them unsuitable for complex scenarios. Consequently, to overcome the limitations of individual sensors, multi-sensor combinations have become the mainstream solution for autonomous driving.

Data fusion technology serves as a pivotal means of integrating data from multiple sensors into a unified environmental model. This process compensates for the deficiencies of individual sensors by aligning and optimizing information from various sensors in both time and space. For instance, when fusing LiDAR and cameras, LiDAR provides precise spatial position and depth information, while cameras supplement semantic information like color and texture, enabling more thorough target detection and recognition through their combined use.
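The alignment in time and space described above can be sketched in a few lines. This is a minimal illustration, not any vendor's pipeline: it assumes a distortion-free pinhole camera, a known LiDAR-to-camera rotation and translation, and made-up calibration values. The function names `nearest_frame_index` and `project_to_image` are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple


def nearest_frame_index(frame_times: Sequence[float], lidar_time: float) -> int:
    """Temporal alignment: index of the camera frame closest to a LiDAR sweep."""
    return min(range(len(frame_times)), key=lambda i: abs(frame_times[i] - lidar_time))


def project_to_image(
    point_lidar: Sequence[float],
    rotation: List[List[float]],
    translation: Sequence[float],
    fx: float, fy: float, cx: float, cy: float,
) -> Optional[Tuple[float, float, float]]:
    """Spatial alignment: map a LiDAR point to pixel (u, v) plus depth in metres."""
    # Extrinsics: p_cam = R * p_lidar + t (camera frame: x right, y down, z forward).
    x, y, z = (
        sum(rotation[i][j] * point_lidar[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    if z <= 0.0:
        return None  # behind the image plane, not visible to this camera
    # Intrinsics: pinhole projection, lens distortion ignored (assumption).
    return (fx * x / z + cx, fy * y / z + cy, z)


# Illustrative values only: identity extrinsics mean the point is already
# expressed in camera axes; the intrinsics are invented for the example.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
pixel = project_to_image((1.0, 0.5, 10.0), identity, (0.0, 0.0, 0.0),
                         fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
print(pixel)  # (740.0, 410.0, 10.0)
```

Once a point has pixel coordinates, the camera's label for that pixel (for example "pedestrian") can be attached to the LiDAR depth, which is the complementarity the paragraph describes.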

Data fusion is primarily categorized into three levels: sensor-level fusion, feature-level fusion, and decision-level fusion. Sensor-level fusion directly processes raw data, preserving more detailed information at an early stage but demanding higher computational performance. Feature-level fusion integrates features extracted from different sensors, effectively reducing data redundancy while improving the system's real-time performance. Decision-level fusion makes comprehensive decisions based on the outputs of each sensor after independent processing, making it suitable for complex scenarios but placing stringent demands on the reliability of fusion algorithms.
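As a rough illustration of the last of these levels, the sketch below fuses the independent outputs of several sensor pipelines by weighted voting. The article does not prescribe a fusion rule, so the voting scheme, the function name `fuse_decisions`, and the weights are all assumptions chosen for clarity.

```python
from collections import defaultdict
from typing import Dict, Tuple


def fuse_decisions(
    detections: Dict[str, Tuple[str, float]],
    weights: Dict[str, float],
) -> Tuple[str, float]:
    """Decision-level fusion by weighted voting.

    detections maps a sensor name to its (label, confidence) output.
    weights maps a sensor name to a trust weight (default 1.0).
    Returns the winning label and its weighted score in [0, 1].
    """
    scores: Dict[str, float] = defaultdict(float)
    total_weight = 0.0
    for sensor, (label, confidence) in detections.items():
        weight = weights.get(sensor, 1.0)
        scores[label] += weight * confidence
        total_weight += weight
    if total_weight <= 0.0:
        raise ValueError("at least one sensor with positive weight is required")
    best_label = max(scores, key=lambda label: scores[label])
    return best_label, scores[best_label] / total_weight


# Hypothetical outputs for one object seen by three sensor pipelines.
label, score = fuse_decisions(
    {"camera": ("pedestrian", 0.9), "lidar": ("pedestrian", 0.6), "radar": ("vehicle", 0.5)},
    weights={"camera": 2.0, "lidar": 1.0, "radar": 1.0},
)
print(label, round(score, 2))  # pedestrian 0.6
```

Because each pipeline has already reduced its data to a label and a confidence, this level is cheap to compute, but a wrong upstream decision cannot be recovered from the raw data, which is why the reliability of the fusion rule matters so much here.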

High-precision maps and positioning technology also provide indispensable background information for the perception system. By detailing road geometry, lane widths, and the locations of traffic signs and signal lights, high-precision maps give the perception system a static environmental reference. When combined with GNSS (Global Navigation Satellite System) and inertial navigation systems, vehicles can achieve centimeter-level positioning, further strengthening the perception system's understanding of the environment. In urban environments especially, complex road structures and frequent obstructions make it difficult to meet accuracy requirements through sensor positioning alone, and high-precision maps help close this gap.
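One common way to combine satellite fixes with inertial dead reckoning is a Kalman filter. The article does not name the algorithm used, so the following is only a one-dimensional sketch under that assumption. The function name `kalman_step` and all noise values are hypothetical, and the example makes no claim about the accuracy achieved in practice.

```python
from typing import Optional, Tuple


def kalman_step(
    position: float,
    variance: float,
    velocity: float,
    dt: float,
    process_noise: float,
    gnss_position: Optional[float] = None,
    gnss_variance: float = 1.0,
) -> Tuple[float, float]:
    """One predict/update cycle of a 1D position filter.

    Predict: dead-reckon with the inertially derived velocity.
    Update: if a GNSS fix is available, blend it in by its relative certainty.
    """
    # Prediction from the inertial side; uncertainty grows by the process noise.
    predicted = position + velocity * dt
    predicted_var = variance + process_noise
    if gnss_position is None:
        return predicted, predicted_var  # e.g. satellite signal blocked
    # Kalman gain: how much to trust the GNSS fix versus the prediction.
    gain = predicted_var / (predicted_var + gnss_variance)
    updated = predicted + gain * (gnss_position - predicted)
    updated_var = (1.0 - gain) * predicted_var
    return updated, updated_var


# With equal uncertainty on both sides, the estimate lands halfway between
# the dead-reckoned position (1.0 m) and the GNSS fix (2.0 m).
print(kalman_step(0.0, 1.0, velocity=10.0, dt=0.1, process_noise=0.0,
                  gnss_position=2.0, gnss_variance=1.0))  # (1.5, 0.5)
```

When `gnss_position` is `None`, the filter keeps predicting and its variance keeps growing, which mirrors the urban-obstruction problem described above: without an external reference such as a map match or a fresh fix, positioning confidence steadily degrades.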

With the widespread application of deep learning, the perception system is transitioning from traditional rule-based algorithms to data-driven intelligent solutions. Deep learning excels in tasks such as object detection, semantic segmentation, and trajectory prediction, offering reliable means for multi-target recognition and motion prediction in complex scenarios. However, this transition also imposes higher demands on hardware computing power, and striking a balance between perception algorithms and computing resources remains a crucial challenge.

In summary, the core technologies of the autonomous driving perception system have gradually achieved a leap from simple perception to precise understanding and from single tasks to comprehensive decision-making through the complementary nature of multi-modal sensors, the optimization of data fusion technology, and the integration of high-precision maps. With the continued advancement of these technologies, the perception system will exhibit higher robustness, enhanced real-time performance, and broader adaptability, laying a solid foundation for the full commercialization of autonomous driving.

Technical Evolution and Future Directions of Perception Technology

The development of perception technology for autonomous driving has transitioned from being predominantly sensor-driven to being powered by artificial intelligence, with the ultimate goal of enhancing the accuracy and robustness of environmental perception to meet real-time demands in complex traffic environments. The progress of this technology not only hinges on the improvement of hardware performance but is also profoundly influenced by deep learning algorithms and data-driven methodologies. With the rise of end-to-end learning frameworks, perception technology is gradually shifting from a modular design to a more efficient holistic solution.

In traditional perception technology, the modular approach reigns supreme, with the entire perception system being decomposed into multiple independent functional modules such as data collection, object detection, semantic segmentation, and motion prediction. While this method offers a clear structure, it also has notable shortcomings. The modular system is heavily reliant on the performance of each component, and errors in any module can cascade into subsequent tasks, undermining the overall effectiveness of the system. Additionally, the modular approach necessitates fine-tuning of each functional module, leading to high development costs and difficulties in adapting to rapidly evolving traffic scenarios.

The advent of deep learning technology has ushered in new possibilities for perception technology. Algorithms grounded in deep learning can extract features and perform object detection and classification directly from raw sensor data through extensive data training. For example, the application of Convolutional Neural Networks (CNNs) in image processing has made cameras a crucial component of the perception system, significantly boosting the accuracy of traffic sign recognition, lane line detection, and pedestrian recognition. LiDAR point cloud processing has also begun to leverage deep learning models to achieve more efficient 3D environmental modeling and obstacle recognition. These advancements enable the perception system to tackle more complex scenarios but also place higher demands on hardware computing power and data annotation.

In recent years, the emergence of end-to-end learning frameworks has presented a novel approach for the development of perception technology. Unlike traditional methods that rely on multiple independent modules, the end-to-end framework achieves full-process optimization from sensor data input to driving decision output through a unified neural network architecture. This method not only simplifies the system structure but also minimizes the accumulation of perception errors through global optimization. End-to-end perception systems typically input raw data directly into the model, then extract environmental features through deep neural networks, and output results such as target positions, trajectory predictions, and vehicle control commands. Although this approach is still in the exploratory phase, its potential has been demonstrated in several complex scenarios.

However, applying the end-to-end approach across the whole system still faces several challenges. The primary hurdle is the need for high-quality, diverse training data. In particular, inadequate coverage of edge scenarios can compromise the model's reliability in real-world use. Moreover, the inherent 'black box' nature of end-to-end systems limits their interpretability, making it hard to analyze and correct errors in specific contexts. This lack of interpretability could impede the widespread commercialization of end-to-end perception technology in autonomous driving.

Looking ahead, the evolution of perception technology will exhibit several key characteristics. Firstly, cost-effective, high-performance perception hardware will become a focal point of industry research. Pure vision solutions built on cameras are gaining ground as an alternative to costly LiDAR systems. This lightweight approach reduces hardware costs while relying on advanced artificial intelligence algorithms to improve the accuracy and applicability of visual perception. Secondly, the collaborative perception of multi-modal sensors will be further enhanced, particularly with the support of vehicle-road coordination and V2X communication. By sharing data from roadside sensors and other vehicles, autonomous vehicles can expand their perception range and cover the blind spots of single-vehicle perception, significantly improving the overall performance of the perception system.
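To make the blind-spot point concrete, the sketch below merges object positions shared by a roadside unit into the ego vehicle's own detection list. It is a simplified, hypothetical example: positions are 2D, the shared detections are assumed to arrive in a common world frame, and duplicates are removed with a plain distance threshold rather than a real association algorithm. The function names are invented for illustration.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def to_ego_frame(point_world: Point, ego_pose: Tuple[float, float, float]) -> Point:
    """Convert a world-frame point into the ego frame; ego_pose is (x, y, yaw in radians)."""
    dx = point_world[0] - ego_pose[0]
    dy = point_world[1] - ego_pose[1]
    cos_yaw, sin_yaw = math.cos(ego_pose[2]), math.sin(ego_pose[2])
    # Inverse rotation: rotate the offset by -yaw.
    return (cos_yaw * dx + sin_yaw * dy, -sin_yaw * dx + cos_yaw * dy)


def merge_detections(own: List[Point], shared: List[Point], min_separation: float) -> List[Point]:
    """Add shared detections that are not already covered by an existing one."""
    merged = list(own)
    for candidate in shared:
        if all(math.dist(candidate, existing) > min_separation for existing in merged):
            merged.append(candidate)
    return merged


ego_pose = (10.0, 5.0, 0.0)                       # hypothetical ego position and heading
own_detections = [(5.0, 0.0)]                     # already in the ego frame
roadside_world = [(15.2, 5.1), (30.0, 2.0)]       # reported by a roadside unit (world frame)
roadside_ego = [to_ego_frame(p, ego_pose) for p in roadside_world]
print(len(merge_detections(own_detections, roadside_ego, min_separation=1.0)))  # 2
```

The first roadside object coincides with one the vehicle already sees and is dropped. The second is new, for instance an object hidden behind a truck, and is added to the ego vehicle's environmental model.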

As computing power improves and models are optimized, end-to-end learning methods are anticipated to achieve a genuine closed loop from perception to control. Future perception systems will be more intelligent and efficient, capable not only of understanding complex environments comprehensively through end-to-end methods but also of continuously improving through adaptive learning to handle a wider array of traffic scenarios and dynamic demands. This trend will lay a solid foundation for the widespread adoption of autonomous driving technology and propel the entire industry towards a higher level of intelligence.

Application of Perception Technology in Typical Enterprises

In the realm of autonomous driving, major enterprises have developed unique perception technology systems tailored to their technical characteristics and development strategies. Whether it's Waymo's 'heavy perception' approach or Tesla's 'light perception' pure vision solution, these companies' technology choices and implementation paths reflect the diverse development trends and potential future evolution of perception systems.

As a global pioneer in autonomous driving, Waymo's perception technology system revolves around high-precision LiDAR, complemented by multi-modal sensors like cameras and millimeter-wave radars, to achieve high-accuracy perception. Waymo's 64-line LiDAR provides detailed 3D point cloud data, enabling precise modeling and real-time detection of obstacles, pedestrians, and vehicles in complex urban environments. Its cameras capture traffic lights, road signs, and other visual information, which is fused with LiDAR data to construct a more comprehensive environmental understanding. Millimeter-wave radars offer crucial support for long-range detection and all-weather adaptability. Through this multi-modal sensor collaboration, Waymo has significantly enhanced the reliability and robustness of its perception system, particularly excelling in complex urban driving scenarios. However, this solution is constrained by high costs and large equipment size, which currently hinder the large-scale commercialization of high-precision LiDAR.

In contrast to Waymo's 'heavy perception' strategy, Tesla has opted for a distinct technological path: the 'light perception' pure vision solution. Tesla believes cameras offer the most human-like perception, and by integrating multiple cameras, it achieves 360-degree coverage around the vehicle. Coupled with its proprietary deep learning algorithms and substantial computing power, Tesla's visual perception system can identify lane lines, traffic lights, pedestrians, and vehicles without relying on LiDAR. The crux of its technology lies in processing data streams from multi-view cameras through neural networks to accomplish tasks such as object detection, semantic segmentation, and trajectory prediction. This software-driven architecture drastically reduces hardware costs while improving system integration. However, the pure vision solution depends heavily on lighting conditions, and its performance can be significantly degraded in rain, fog, or night driving. To address this, Tesla has incorporated extensive data augmentation and model optimization strategies into its software algorithms to improve the robustness of its perception system in edge scenarios.

Another notable company is Baidu Apollo in China, which employs a multimodal sensor and high-precision map integration approach for its perception technology, aiming to provide a comprehensive solution for L4 and above autonomous driving. Baidu's perception system utilizes LiDAR, cameras, and millimeter-wave radars, while also providing vehicles with static information such as road topology, lane line positions, and traffic rules through high-precision maps. By matching dynamic perception data with high-precision maps, the system further enhances object recognition and positioning accuracy. This technical framework excels in specific scenarios like enclosed parks and fixed routes. Additionally, Baidu Apollo has integrated cooperative perception technology into its system, extending the range and capabilities of single-vehicle perception through vehicle-to-everything (V2X) communication. In high-density traffic environments, this cooperative perception effectively addresses single-vehicle blind spots while bolstering the system's global perception capabilities.

Huawei, also based in China, has demonstrated robust R&D capabilities in the field of perception technology. Huawei's perception system is centered around 'heavy perception and strong computing power,' equipped with high-resolution LiDAR, ultra-high-definition cameras, and millimeter-wave radars. Through its independently developed AI chips and algorithm platforms, it processes and fuses multimodal data in real-time. Especially in complex urban road scenarios, Huawei's perception system can accurately identify non-motorized vehicles and pedestrians while also possessing excellent anti-interference capabilities and real-time performance. This highly integrated perception solution has given Huawei a competitive edge in the autonomous driving market.

Different companies prioritize various aspects in their choice of perception technology, reflecting not only their technological strength and R&D resources but also their strategic positioning in the autonomous driving market. From Waymo's heavy perception solution to Tesla's pure vision path, to Baidu and Huawei's multimodal cooperative perception, corporate technological innovations have fueled the diverse development of perception technology. In the future, with the further reduction in hardware costs, continuous algorithm optimization, and the proliferation of cooperative perception technology, autonomous driving perception technology will become more intelligent and cost-effective, gradually progressing towards large-scale commercial application.

Challenges and Opportunities for Perception Systems

The core function of autonomous driving perception systems is to ensure that vehicles can perceive their surroundings accurately and in real time. However, this technology faces numerous challenges in practical applications, while also presenting immense opportunities. Technological development focuses not only on perception accuracy and reliability but also on cost control, system adaptability, and deep integration with the broader autonomous driving ecosystem.

One significant challenge currently faced is the limited performance of perception systems under extreme weather and complex lighting conditions. Traditional sensors like cameras are highly sensitive to light changes, and their target recognition capabilities can drastically decline in bright light, backlight, or night scenarios. Furthermore, LiDAR can easily experience point cloud data disturbances in severe weather like rain and snow, reducing perception accuracy. Although millimeter-wave radars offer strong anti-interference capabilities, their spatial resolution is insufficient for precise recognition in complex scenarios. Ensuring system reliability across different weather conditions remains a pressing issue for perception technology.

The tension between real-time performance and computing power is another critical technical bottleneck for perception systems. Autonomous driving requires real-time processing and analysis of massive sensor data to respond to rapidly changing traffic scenarios. However, as sensor resolution increases, data volume grows rapidly (doubling a camera's linear resolution quadruples its pixel count), placing greater demands on computing hardware. Current edge computing hardware is also limited in power consumption and processing speed. Optimizing algorithms to process data more efficiently under constrained computing power has become a key research and development direction.
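A back-of-the-envelope calculation shows how quickly raw camera bandwidth scales with resolution. The resolutions, frame rate, and bytes per pixel below are illustrative assumptions, not figures from any specific vehicle, and the helper name `camera_data_rate_mb_s` is hypothetical.

```python
def camera_data_rate_mb_s(width: int, height: int, bytes_per_pixel: int, fps: int) -> float:
    """Uncompressed data rate of one camera in megabytes per second."""
    return width * height * bytes_per_pixel * fps / 1e6


# Assumed example: 3 bytes per pixel (8-bit RGB) at 30 frames per second.
full_hd = camera_data_rate_mb_s(1920, 1080, bytes_per_pixel=3, fps=30)
ultra_hd = camera_data_rate_mb_s(3840, 2160, bytes_per_pixel=3, fps=30)

print(f"{full_hd:.1f} MB/s")            # 186.6 MB/s
print(f"{ultra_hd:.1f} MB/s")           # 746.5 MB/s
print(f"ratio: {ultra_hd / full_hd:.1f}")  # ratio: 4.0
```

Doubling the width and height quadruples the raw stream of a single camera, and a vehicle typically carries several cameras alongside other sensors, so the load on the onboard computer multiplies accordingly.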

Simultaneously, multi-object perception and motion prediction in complex traffic scenarios impose stringent demands on perception systems. Autonomous vehicles must identify and track multiple targets, including pedestrians, other vehicles, bicycles, and potential obstacles, in a highly dynamic environment. Especially in urban settings, where targets are dense and behavioral patterns are diverse, perception systems must not only detect targets but also accurately predict their future motion trajectories. Current prediction algorithms still experience accuracy declines in high-density target environments, yet these scenarios are crucial for the practical deployment of autonomous driving.
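The simplest baseline for predicting a tracked target's future trajectory is to assume it keeps its current velocity. The sketch below shows that constant-velocity model. It is only a baseline under that assumption, not the learned predictors the article alludes to, and the `Track` fields and function name are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Track:
    """State of one tracked target in the ego frame (metres, metres per second)."""
    track_id: int
    x: float
    y: float
    vx: float
    vy: float


def predict_trajectory(track: Track, horizon_s: float, step_s: float) -> List[Tuple[float, float]]:
    """Future (x, y) positions at each time step, assuming constant velocity."""
    steps = int(round(horizon_s / step_s))
    return [
        (track.x + track.vx * k * step_s, track.y + track.vy * k * step_s)
        for k in range(1, steps + 1)
    ]


# A hypothetical pedestrian moving at 2 m/s along x and -1 m/s along y.
pedestrian = Track(track_id=1, x=0.0, y=0.0, vx=2.0, vy=-1.0)
print(predict_trajectory(pedestrian, horizon_s=1.0, step_s=0.5))
# [(1.0, -0.5), (2.0, -1.0)]
```

This model breaks down exactly where the paragraph says prediction is hardest: in dense urban scenes, pedestrians and cyclists change speed and direction in response to each other, which a straight-line extrapolation cannot capture.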

Despite the challenges, the development of perception systems is accompanied by immense technological opportunities. With the steady decline in sensor hardware costs, LiDAR and high-resolution cameras are gradually being commercialized, laying the groundwork for the widespread adoption of perception technology. Pure vision-based solutions in particular, supported by deep learning, can not only reduce hardware dependence but also improve the adaptability of the algorithms. Integrated hardware and software solutions will further push perception systems towards lightweight, modular designs.

As end-to-end deep learning methods mature, perception systems are expected to achieve comprehensive optimization from data input to environmental modeling and decision support. Traditional modular perception architectures are beginning to give way to more holistic solutions, which both improve operational efficiency and reduce the complexity of data processing. Additionally, the application of artificial intelligence in perception systems will gradually expand from traditional object detection and recognition to more advanced functions such as abnormal behavior prediction and dynamic scene understanding.

Summary

Autonomous driving perception systems will play an increasingly pivotal role in future transportation transformations. They are not only the cornerstone technology for realizing vehicle intelligence but also a significant driver in promoting the development of smart transportation and smart cities. With continuous technological advancements, perception systems will provide robust technical support for the commercialization of the autonomous driving industry, ultimately ushering humanity into a new era of safer, more efficient, and more convenient intelligent transportation.
