Understanding the Differences Between Level 2 and Level 3 Autonomous Driving: Why the Hesitation?

05/29 2025

As autonomous driving technology matures, it has moved from the laboratory into consumers' everyday lives. Many automakers now sell models with intelligent driving features, and many owners rely on them for daily travel. However, you may have noticed a shift in how these features are promoted, particularly since China's Ministry of Industry and Information Technology (MIIT) tightened its regulations. Apart from Huawei's recent announcement of a commercial Level 3 solution for highway driving, most car companies now describe their intelligent driving systems as combined driving assistance, or Level 2 autonomous driving. Why are companies hesitant to promote Level 3? What are the key differences between Level 2 and Level 3?

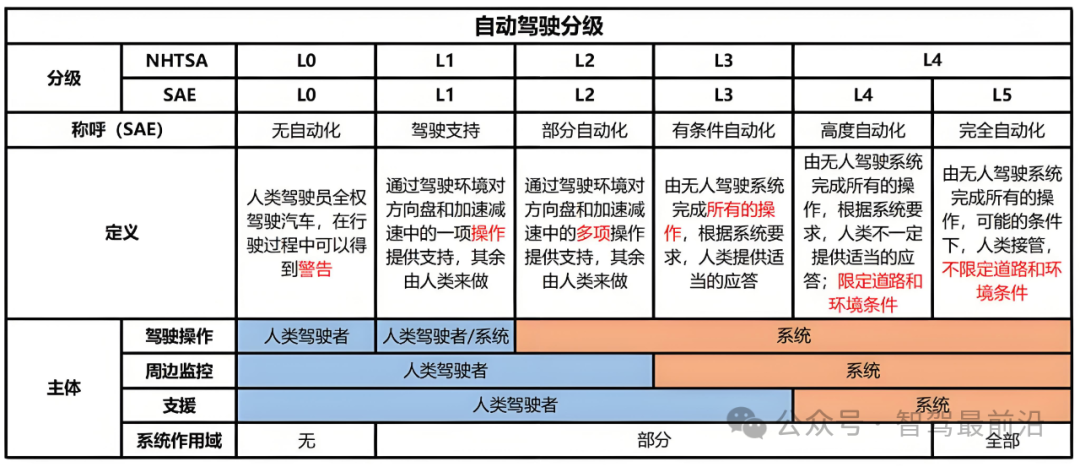

Defining Level 2 and Level 3

According to the Society of Automotive Engineers (SAE) classification:

- Level 2 (partial automation): In certain scenarios, the system can control both steering and acceleration/braking, but the human must monitor the surroundings continuously and be ready to take control at any time. The driver remains the primary operator, with the system providing assistance.

- Level 3 (conditional automation): In specific scenarios, the system performs the entire driving task, including monitoring the environment. The human does not need to supervise continuously but must respond when the system requests a takeover. The driver's role is reduced to that of a fallback.

Differences in Perception Systems

Perception is the foundation of autonomous driving systems:

- Level 2 (L2): Relies primarily on cameras and millimeter-wave radars. These are effective in most scenarios, but their precision drops in low light, in direct sunlight, or when a previously hidden object suddenly appears.

- Level 3 (L3): Incorporates LiDAR as a standard feature, providing real-time 3D point cloud data at the million-point level. This, combined with multiple sensors, enables high-precision recognition of the surroundings even in adverse conditions like heavy rain or fog.
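To make the multi-sensor idea concrete, here is a minimal Python sketch of one textbook fusion rule, inverse-variance weighting, applied to distance estimates for the same object. It is not taken from any production stack: the `RangeReading` type, the `fuse_ranges` function, and every noise figure below are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class RangeReading:
    sensor: str        # e.g. "camera", "radar", "lidar"
    distance_m: float  # estimated distance to the object, in meters
    sigma_m: float     # assumed 1-sigma measurement noise, in meters


def fuse_ranges(readings):
    """Inverse-variance weighted average of independent range estimates.

    Returns (fused_distance_m, fused_sigma_m).
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / (r.sigma_m ** 2) for r in readings]
    total = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total
    fused_sigma = (1.0 / total) ** 0.5
    return fused, fused_sigma


# Hypothetical readings: the low-noise LiDAR estimate dominates the result.
readings = [
    RangeReading("camera", 52.0, 2.0),
    RangeReading("radar", 50.5, 0.5),
    RangeReading("lidar", 50.0, 0.1),
]
fused_distance, fused_sigma = fuse_ranges(readings)
```

Under these assumed noise levels the fused estimate sits closest to the LiDAR reading, and its uncertainty is smaller than that of any single sensor, which is the basic argument for adding a high-precision sensor to an L2 camera and radar suite.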

Differences in Computing Platforms and Algorithm Architectures

The computing power requirements differ significantly:

- L2: Uses SoCs in the 30-200 TOPS range for real-time processing of camera and radar data, executing basic path planning and target tracking algorithms.

- L3: Requires high-performance computing platforms delivering 200 to 1000+ TOPS, capable of processing LiDAR point clouds, running multi-model fusion algorithms, and supporting functional safety through redundancy.

Differences in System Redundancy and Functional Safety

Safety is paramount:

- L2: Redundancy is mostly limited to backup sensors or single paths. High-end models may have redundant sensors but lack dual-path redundancy in critical systems.

- L3: Features full-stack redundancy with independent sensors, dual computing platforms, and redundant systems for power, communication, braking, and steering, meeting ASIL-D, the most stringent integrity level defined by the ISO 26262 functional safety standard.
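The dual computing platform idea can be illustrated with a small heartbeat-based failover sketch in Python. This is a simplified assumption about how such a selector might look, not a description of any vendor's design; the `Source` enum, the `select_channel` function, and the 0.1 s timeout are all hypothetical.

```python
from enum import Enum


class Source(Enum):
    PRIMARY = "primary"
    BACKUP = "backup"
    NONE = "none"  # both channels lost: the vehicle should fall back to a safe stop


def select_channel(primary_age_s, backup_age_s, timeout_s=0.1):
    """Pick the compute channel whose last heartbeat is recent enough.

    primary_age_s / backup_age_s: seconds since each platform last reported.
    timeout_s: assumed maximum heartbeat age before a platform counts as failed.
    """
    if primary_age_s <= timeout_s:
        return Source.PRIMARY
    if backup_age_s <= timeout_s:
        return Source.BACKUP
    return Source.NONE


# Hypothetical case: the primary platform has gone silent, so the backup takes over.
active = select_channel(primary_age_s=0.5, backup_age_s=0.02)
```

An L2 system with a single compute path has no equivalent of the `BACKUP` branch: when its only platform fails, control returns to the driver immediately.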

Differences in Software Architecture and Function Switching

Control and mode switching also differ:

- L2: Activation and deactivation rely heavily on driver input. The system monitors driver engagement and disengages if the driver's hands leave the wheel or their attention drifts from the road.

- L3: Achieves closed-loop autonomous driving within its designated operational domain. It monitors conditions, requests a takeover when necessary, and executes a minimal risk maneuver, such as a controlled stop, if the driver fails to respond.
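The L3 takeover logic described above can be sketched as a small state machine. The Python below is a minimal illustration under stated assumptions: the `Mode` names, the `step` function, and the 10-second takeover budget are hypothetical and do not reflect any specific regulation or product.

```python
from enum import Enum, auto


class Mode(Enum):
    MANUAL = auto()              # driver is in control
    ENGAGED = auto()             # L3 system is driving inside its operational domain
    TAKEOVER_REQUESTED = auto()  # system has asked the driver to take over
    MINIMUM_RISK = auto()        # driver did not respond: e.g. controlled stop


TAKEOVER_BUDGET_S = 10.0  # assumed time the driver is given to respond


def step(mode, waited_s, in_domain, driver_took_over, dt_s):
    """Advance the mode logic by one tick of dt_s seconds.

    Returns (next_mode, seconds_waited_for_driver).
    """
    if mode is Mode.ENGAGED:
        if not in_domain:
            return Mode.TAKEOVER_REQUESTED, 0.0
        return Mode.ENGAGED, 0.0
    if mode is Mode.TAKEOVER_REQUESTED:
        if driver_took_over:
            return Mode.MANUAL, 0.0
        waited_s += dt_s
        if waited_s >= TAKEOVER_BUDGET_S:
            return Mode.MINIMUM_RISK, waited_s
        return Mode.TAKEOVER_REQUESTED, waited_s
    # MANUAL and MINIMUM_RISK are left unchanged in this sketch.
    return mode, waited_s


# Hypothetical scenario: the vehicle leaves its operational domain
# and the driver never responds to the takeover request.
mode, waited = step(Mode.ENGAGED, 0.0, in_domain=False, driver_took_over=False, dt_s=1.0)
while mode is Mode.TAKEOVER_REQUESTED:
    mode, waited = step(mode, waited, in_domain=False, driver_took_over=False, dt_s=1.0)
```

The key contrast with L2 is the last transition: an L2 system simply exits and hands control back, while an L3 system remains responsible for reaching a safe state when the driver stays silent.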

Differences in Safety Strategies and Risk Management

Risk management approaches differ:

- L2: Relies on driver proficiency and system warnings. If the driver does not respond promptly, the system has few fallback safeguards.

- L3: Designed with "risk distribution" in mind. It assesses external risks, monitors system health, and adjusts functional availability to minimize potential accidents.
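As a rough illustration of adjusting functional availability, the Python sketch below gates the L3 function on two scores. Everything here is an assumption made for the example: the `l3_availability` function, the normalized 0-to-1 scores, and both thresholds are hypothetical, and a real system would derive such inputs from far richer diagnostics.

```python
def l3_availability(external_risk, system_health, risk_limit=0.3, health_floor=0.9):
    """Decide whether the L3 function may be offered to the driver.

    external_risk: assumed score in [0, 1]; higher means worse weather, traffic, or road.
    system_health: assumed score in [0, 1]; higher means healthier sensors and compute.
    Returns "available", "degraded", or "unavailable".
    """
    if not (0.0 <= external_risk <= 1.0 and 0.0 <= system_health <= 1.0):
        raise ValueError("scores must lie in [0, 1]")
    if system_health < health_floor:
        return "unavailable"  # a compromised system should not offer L3 at all
    if external_risk > risk_limit:
        return "degraded"     # e.g. offer driver assistance only
    return "available"


# Hypothetical case: healthy system, but heavy rain raises the external risk.
status = l3_availability(external_risk=0.6, system_health=0.97)
```

The point of the sketch is the ordering: system health is checked first, so an unhealthy system never offers L3 regardless of how benign the environment looks.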

Challenges Facing Level 3

Level 3 faces two main hurdles, cost and liability:

- Cost: LiDAR alone can add tens of thousands of RMB to an L3 system. Together with high-end computing chips and redundant hardware, it raises the overall vehicle cost by RMB 50,000 to RMB 100,000.

- Liability: When an accident occurs, the manufacturer bears primary responsibility, so companies remain cautious about promoting Level 3 until the technology's safety has been thoroughly proven.

Final Thoughts

With declining costs of high-precision sensors and improving regulations, conditionally autonomous Level 3 driving is expected to become more prevalent. Advances in V2X, high-precision maps, multi-sensor fusion algorithms, and simulation technology will further enhance system safety and robustness. While the boundaries between L2 and L3 continue to blur, technology-driven layered design remains crucial for the industry's healthy development.

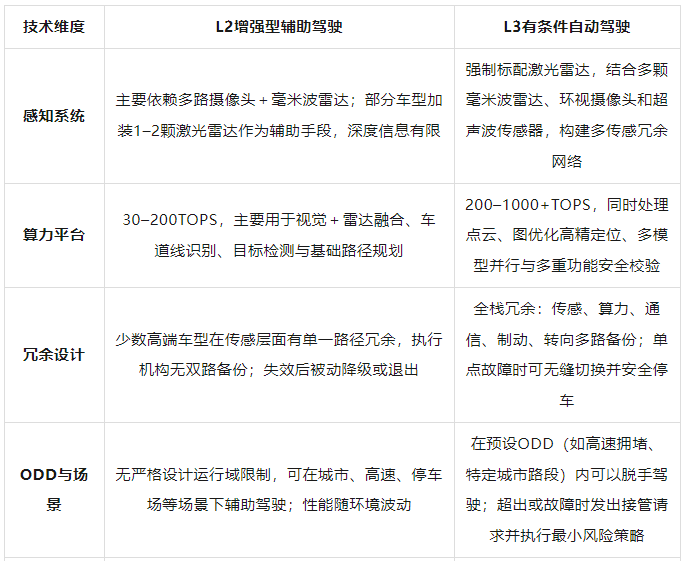

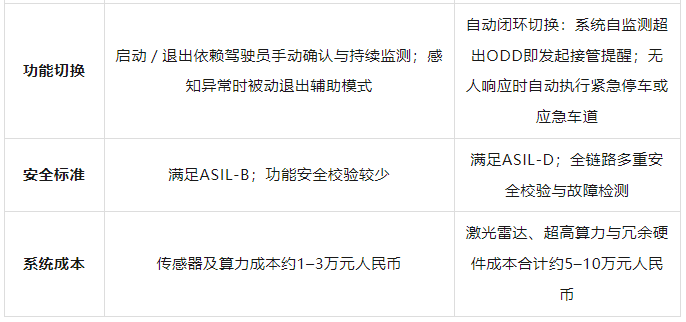

Below is a summary table for an intuitive understanding of the differences between L2 and L3:
