The Next Leap of Edge AI: Embracing the "Agent Operating System"

06/25 2025

This marks my 376th column article.

We are on the brink of a revolutionary scenario. Imagine a night when an unmanned inspection drone hums quietly in the sky, its camera pinpointing a mechanical vibration anomaly in the main pump room. At the same moment, a quadruped robot on the ground receives the anomaly code, navigates around obstacles, and heads swiftly to the site. The two devices are coordinated not by the cloud but locally, through an "Edge Agent Operating System": the drone handles visual recognition and path analysis, while the ground robot executes the task and reports back. The entire process requires no human intervention and no connection to a remote cloud.

This isn't science fiction; it is the real evolution of edge AI, from an inference engine to a collaborative agent.

Over the past few years, the trajectory of edge AI has been clear: from early TinyML explorations in low-power AI inference, to edge inference frameworks, to platform-level AI deployment tools, and most recently to vertical models. We have reached the milestone of "getting models to run".

However, the next frontier for edge AI isn't stacking more models and parameters but answering a more fundamental question: once models are running, can they collaborate?

Today they mostly run in isolation, and this limitation acts as an "invisible ceiling" that keeps edge AI from evolving toward a higher form of intelligence.

True edge intelligence goes beyond making judgments; it involves making decisions, forming systems, and executing tasks. This is the starting point of edge AI's evolution from static inference to dynamic agents.

What we need isn't a bigger model but a network of collaborative models. Models enable devices to perceive the world, whereas agents empower them to participate in it.

In this article, drawing on the latest market data, technological advances, and platform trends, we examine how edge AI is evolving from model deployment toward an agent operating system, and how this shift will reshape the interaction methods, system architecture, and commercial value of intelligent terminals.

From Model Deployment to System Autonomy: AI Agents Arrive at the Edge

Previously, enterprises predominantly deployed edge AI through the combined paradigm of "model-driven + platform scheduling": develop a model, deploy it to a terminal, and manage resource allocation and status visualization via an edge platform.

This approach answered the question of whether models could run and drove widespread AI deployment at the edge, but it also exposed a growing structural bottleneck: as deployments scaled and scenarios grew more complex, the paradigm could not answer two more fundamental questions. Can models collaborate? Does the system possess autonomy?

This shift in focus is evident at the enterprise decision-making level.

According to the global CIO survey released by ZEDEDA in early 2025, 97% of surveyed CIOs said their enterprises have already deployed edge AI or plan to do so within the next two years; 54% of enterprises explicitly want edge AI to become an integral part of system-level capabilities rather than an isolated function; and, notably, 48% list "reducing cloud dependence and enhancing local autonomous response capabilities" as a key objective for the next phase.

These figures point to a growing industry-wide consensus: the future of edge AI is not just about executing models but about systems that can organize, perceive, and respond on their own.

The core enabler of this capability leap is the "edge AI agent".

Unlike the traditional model deployment paradigm, an edge agent is not a passive inference engine but a minimal intelligent unit capable of perception, decision-making, action, and coordination. Beyond running models, it can initiate behaviors, negotiate roles, and allocate resources locally based on environmental state, system rules, and task goals, making it a fundamental intelligent node within an edge system.

A smart manufacturing scenario makes the value chain of edge agents concrete. When a camera on a conveyor belt detects a material defect, the visual inspection agent immediately generates an event signal. This signal triggers the material handling agent, which automatically dispatches mobile robots to move the defective material. The quality inspection agent then performs a second review on receiving the signal. Finally, the MES agent synchronizes and updates the production schedule and the plan for the next process step.

From anomaly recognition to task execution, the entire process is accomplished through the autonomous collaboration of multiple edge agents locally. This closed loop of "perception - decision-making - coordination - feedback" not only enhances response efficiency but also imbues the system with a high degree of flexibility and adaptability.
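
The event chain in this scenario can be sketched as a small publish/subscribe loop. This is a minimal illustration under stated assumptions: the `EventBus` class, the topic names (`camera.frame`, `defect.detected`, and so on), the 0.8 threshold, and the robot id are all hypothetical, and the second-pass review is a placeholder rather than a real model.

```python
from collections import defaultdict, deque
from typing import Callable


class EventBus:
    """Hypothetical in-process publish/subscribe bus standing in for a local agent message layer."""

    def __init__(self) -> None:
        self._subs: dict[str, list[Callable[[dict], None]]] = defaultdict(list)
        self._queue: deque = deque()
        self.log: list[str] = []  # topics in delivery order, for tracing the loop

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subs[topic].append(handler)

    def publish(self, topic: str, payload: dict) -> None:
        self._queue.append((topic, payload))

    def run(self) -> None:
        # Deliver events FIFO until no agent emits anything new.
        while self._queue:
            topic, payload = self._queue.popleft()
            self.log.append(topic)
            for handler in self._subs[topic]:
                handler(payload)


bus = EventBus()
production_plan: list[str] = []


def visual_inspection_agent(frame: dict) -> None:
    if frame["defect_score"] > 0.8:  # hypothetical detection threshold
        bus.publish("defect.detected", {"item": frame["item"]})


def material_handling_agent(event: dict) -> None:
    # Dispatch a mobile robot (hypothetical id) to move the suspect item.
    bus.publish("material.moved", {"item": event["item"], "robot": "amr-1"})


def quality_inspection_agent(event: dict) -> None:
    confirmed = True  # placeholder for a second-pass review model
    bus.publish("quality.reviewed", {"item": event["item"], "confirmed": confirmed})


def mes_agent(event: dict) -> None:
    # Update the local production plan with a rework step.
    if event["confirmed"]:
        production_plan.append(f"rework:{event['item']}")


bus.subscribe("camera.frame", visual_inspection_agent)
bus.subscribe("defect.detected", material_handling_agent)
bus.subscribe("material.moved", quality_inspection_agent)
bus.subscribe("quality.reviewed", mes_agent)

bus.publish("camera.frame", {"item": "A-17", "defect_score": 0.93})
bus.run()
print(bus.log)
print(production_plan)
```

Because each agent knows only the topic it listens to and the topic it emits, agents can be added or swapped without touching the others, which is what lets the loop close locally.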

If model deployment resolves the question of "whether a device possesses thinking ability", then agent deployment further addresses the proposition of "whether a device possesses participation ability". To truly realize this participation, edge agents must possess a comprehensive capability system.

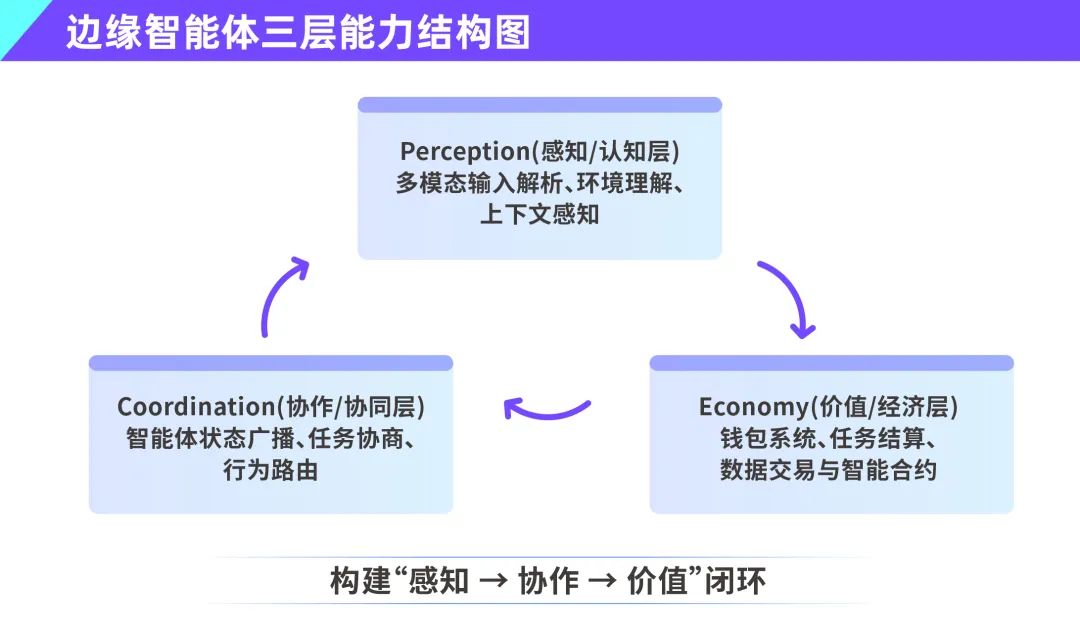

We can summarize this capability system as the PCE model, a capability stack spanning three layers: perception, coordination, and economy.

First is the Perception Layer.

Agents must understand their environment: they read and interpret multimodal sensor data such as images, sound, temperature and humidity, and vibration, and combine it with contextual information to make task judgments. A ZEDEDA survey reveals that over 60% of enterprises have deployed multimodal AI models on edge devices, providing a rich foundation for agents' environmental perception.
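
A minimal sketch of what "combining multimodal data with context" can mean in practice, assuming hypothetical per-modality weights, baselines, and tolerances: each reading is normalized against its own baseline, the scores are combined with fixed weights (simple late fusion), and a hypothetical `phase` context flag shifts the decision threshold.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    modality: str      # e.g. "vibration", "temperature", "audio"
    value: float
    baseline: float    # expected value in the current operating context
    tolerance: float   # deviation considered one unit of anomaly

    def anomaly(self) -> float:
        """Normalized deviation from baseline; above 1.0 means outside tolerance."""
        return abs(self.value - self.baseline) / self.tolerance


# Hypothetical fusion weights; a real agent would calibrate or learn these.
WEIGHTS = {"vibration": 0.5, "temperature": 0.3, "audio": 0.2}


def judge(readings: list[Reading], context: dict) -> str:
    # Late fusion: weighted sum of per-modality anomaly scores.
    score = sum(WEIGHTS.get(r.modality, 0.0) * r.anomaly() for r in readings)
    # Context shifts the decision: transients are expected during startup.
    threshold = 1.5 if context.get("phase") == "startup" else 1.0
    if score > threshold:
        return "dispatch_inspection"
    if score > 0.7 * threshold:
        return "increase_sampling"
    return "normal"


pump = [
    Reading("vibration", 9.0, 5.0, 2.0),
    Reading("temperature", 72.0, 65.0, 10.0),
    Reading("audio", 60.0, 58.0, 10.0),
]
print(judge(pump, {}))                    # steady-state context
print(judge(pump, {"phase": "startup"}))  # same data, startup context
```

The same readings lead to different actions depending on context, which is the difference between raw sensing and a task judgment.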

Next is the Coordination Layer.

One agent cannot handle all tasks; a truly intelligent system relies on efficient collaboration between multiple agents. This coordination transcends simple data exchange, forming an intelligent agent network based on state sharing, role negotiation, and task division. Coordination capability elevates edge systems from device interconnection to intelligent mutual assistance.
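
One classic way to implement role negotiation and task division is a contract-net-style auction: a manager announces a task, agents bid or decline based on their own state, and the lowest bid wins. The article does not prescribe a protocol, so treat this as a sketch; the cost model, the 0.9 load cutoff, and the agent names are assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Agent:
    name: str
    skills: set[str]
    load: float        # 0.0 = idle, 1.0 = saturated (shared state)
    distance_m: float  # distance to the task site
    assigned: list[str] = field(default_factory=list)

    def bid(self, task: dict) -> Optional[float]:
        """Return a cost bid, or None to decline the task."""
        if task["skill"] not in self.skills or self.load >= 0.9:
            return None
        # Hypothetical cost model: farther and busier agents bid higher.
        return self.distance_m * (1.0 + self.load)


def announce(task: dict, agents: list[Agent]) -> Optional[str]:
    """Manager role: broadcast a task, collect bids, award it to the cheapest bidder."""
    bids = [(agent.bid(task), agent) for agent in agents]
    valid = [(cost, agent) for cost, agent in bids if cost is not None]
    if not valid:
        return None
    _, winner = min(valid, key=lambda pair: pair[0])
    winner.assigned.append(task["id"])
    winner.load = min(1.0, winner.load + task["effort"])  # update shared state
    return winner.name


agents = [
    Agent("drone-1", {"aerial_scan"}, 0.2, 50.0),
    Agent("dog-1", {"ground_inspect"}, 0.1, 120.0),
    Agent("dog-2", {"ground_inspect"}, 0.5, 60.0),
]
print(announce({"id": "t1", "skill": "ground_inspect", "effort": 0.5}, agents))
print(announce({"id": "t2", "skill": "ground_inspect", "effort": 0.5}, agents))
```

The closer robot wins the first task; once its shared load reaches the cutoff it declines, and the second task flows to the other robot without any central scheduler.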

Finally, there is the Economy Layer.

When edge agents gain behavioral capabilities such as accepting tasks, negotiating resources, and controlling costs, they naturally become participants in the machine economy. This layer rests on device wallets, encrypted identities, and programmable contract mechanisms. As I argued in the article "AI Boom on the Edge + Virtual Currency Changes, Device Wallets Open the Door to AI Agent Economy", the total volume of M2M transactions between AI devices is expected eventually to surpass the total volume of human transactions, with agents becoming active nodes in edge economic networks. Economic capability gives agents more than the means to execute tasks: it lets them price and exchange the value they create by collaborating.
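
A toy sketch of how these pieces could fit together, assuming a hypothetical `DeviceWallet` and `EscrowContract`. HMAC-SHA256 with a per-device secret stands in for the asymmetric signatures (such as Ed25519) that a real encrypted device identity would use, and the escrow class stands in for a programmable contract; neither reflects any specific wallet or blockchain API.

```python
import hashlib
import hmac
import json


def _digest(key: bytes, message: dict) -> str:
    body = json.dumps(message, sort_keys=True).encode()  # canonical encoding
    return hmac.new(key, body, hashlib.sha256).hexdigest()


class DeviceWallet:
    """Hypothetical device wallet. HMAC with a per-device secret stands in for
    the asymmetric signatures a real device identity would use."""

    def __init__(self, device_id: str, secret: bytes, balance: int) -> None:
        self.device_id = device_id
        self._secret = secret
        self.balance = balance  # integer micro-units avoid float rounding

    def sign(self, message: dict) -> str:
        return _digest(self._secret, message)


class EscrowContract:
    """Toy programmable contract: payment is locked when a task is posted and
    released only for a correctly signed result from a registered device."""

    def __init__(self, registry: dict[str, bytes]) -> None:
        self.registry = registry          # device_id -> verification key
        self.locked: dict[str, int] = {}  # task_id -> escrowed amount

    def post(self, task_id: str, payer: DeviceWallet, price: int) -> None:
        if payer.balance < price:
            raise ValueError("insufficient balance")
        payer.balance -= price
        self.locked[task_id] = price

    def settle(self, task_id: str, worker: DeviceWallet, result: dict, sig: str) -> bool:
        key = self.registry.get(worker.device_id)
        if key is None or task_id not in self.locked or result.get("task_id") != task_id:
            return False
        if not hmac.compare_digest(_digest(key, result), sig):
            return False  # forged or tampered result: funds stay locked
        worker.balance += self.locked.pop(task_id)
        return True


drone = DeviceWallet("drone-1", b"drone-secret", 1000)
robot = DeviceWallet("dog-1", b"dog-secret", 0)
contract = EscrowContract({"drone-1": b"drone-secret", "dog-1": b"dog-secret"})
contract.post("inspect-42", drone, 250)
report = {"task_id": "inspect-42", "status": "done"}
print(contract.settle("inspect-42", robot, report, robot.sign(report)))
```

The design point is that payment is conditional on a verifiable result from an identified device, so two machines can transact without a human or a cloud intermediary approving each step.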

The three layers of perception, coordination, and economy collectively constitute the "PCE capability stack" of edge agents. It not only defines the capability modules an agent should possess but also provides a reference framework for the system design of future edge AI platforms.

Why Do Edge Agents Need an AI Operating System?

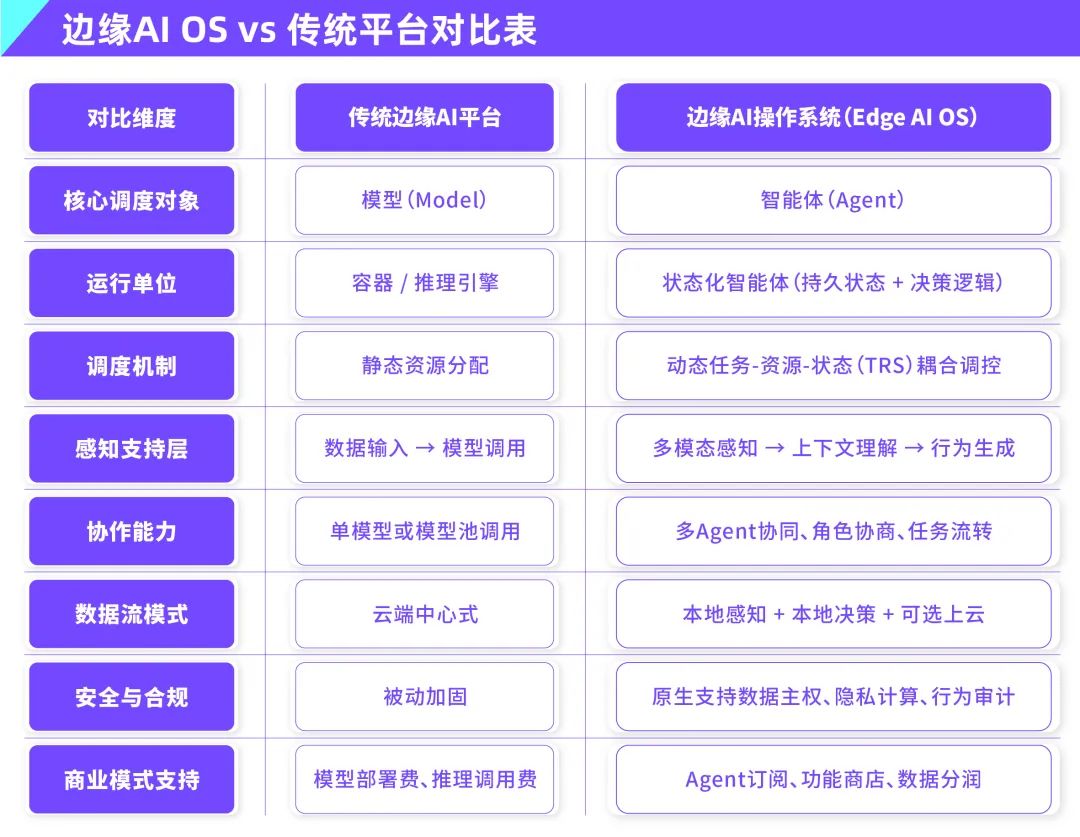

Although edge AI has progressed from model deployment to platform-based management in recent years, today's mainstream edge AI platforms still operate at the level of "model runtime environments". As AI evolves from models to agents, this traditional platform paradigm is no longer adequate.

The reason is that an agent is not a static inference service but a dynamic entity capable of perceiving state, negotiating tasks, and acting autonomously. It needs not just an execution space but a complete operating system.

We term this the "edge AI operating system".

Compared with traditional AI platforms, an edge AI operating system must provide three core capabilities at the level of its underlying architecture.

First, it must be able to schedule heterogeneous compute resources. On edge devices, AI models may run on CPUs, GPUs, NPUs, or even ASICs, and dynamically allocating work and balancing load across these heterogeneous units is a technical challenge that belongs at the operating system level.
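
Heterogeneous scheduling can be illustrated with greedy earliest-finish-time placement: each job goes to whichever unit would finish it soonest given that unit's current queue. This is a sketch, not a production scheduler; the latency profile is invented, and real systems would also weigh data-transfer cost, power, and thermal limits.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Unit:
    name: str                   # "cpu", "gpu", "npu", ...
    busy_until_ms: float = 0.0  # when this unit's queue drains


# Hypothetical latency profile (ms) per model per unit; None = unsupported.
PROFILE: dict[str, dict[str, Optional[float]]] = {
    "detector":   {"cpu": 40.0, "gpu": 8.0,  "npu": 5.0},
    "asr":        {"cpu": 30.0, "gpu": 10.0, "npu": None},
    "classifier": {"cpu": 6.0,  "gpu": 3.0,  "npu": 2.0},
}


def schedule(jobs: list[str], units: list[Unit]) -> list[tuple[str, str, float]]:
    """Greedy earliest-finish-time placement across heterogeneous units."""
    plan = []
    for job in jobs:
        best: Optional[tuple[Unit, float]] = None
        for unit in units:
            cost = PROFILE[job].get(unit.name)
            if cost is None:
                continue  # this unit cannot run the model
            finish = unit.busy_until_ms + cost
            if best is None or finish < best[1]:
                best = (unit, finish)
        if best is None:
            raise RuntimeError(f"no unit can run {job}")
        unit, finish = best
        unit.busy_until_ms = finish  # reserve the unit until the job completes
        plan.append((job, unit.name, finish))
    return plan


units = [Unit("cpu"), Unit("gpu"), Unit("npu")]
for step in schedule(["detector", "detector", "asr", "classifier"], units):
    print(step)
```

In this run the classifier lands on the CPU even though the NPU is faster for it in isolation, because the NPU is already busy; that queue-aware trade-off is what separates OS-level scheduling from a static model-to-chip mapping.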

Second, a true edge AI operating system should support multi-agent runtime management. This implies the system must not only run models but also schedule agents, encompassing state perception, task scheduling, access control, and behavior coordination among agents.
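
A minimal sketch of multi-agent runtime management, assuming a hypothetical four-state lifecycle and capability strings such as `camera.read`: the runtime enforces legal state transitions and lets an agent use only the capabilities it was granted, and only while it is running.

```python
from enum import Enum


class State(Enum):
    REGISTERED = "registered"
    RUNNING = "running"
    SUSPENDED = "suspended"
    STOPPED = "stopped"


# Hypothetical lifecycle policy: which transitions the runtime allows.
TRANSITIONS = {
    State.REGISTERED: {State.RUNNING, State.STOPPED},
    State.RUNNING: {State.SUSPENDED, State.STOPPED},
    State.SUSPENDED: {State.RUNNING, State.STOPPED},
    State.STOPPED: set(),
}


class AgentRuntime:
    def __init__(self) -> None:
        self.states: dict[str, State] = {}
        self.permissions: dict[str, set[str]] = {}  # agent -> granted capabilities

    def register(self, agent: str, permissions: set[str]) -> None:
        self.states[agent] = State.REGISTERED
        self.permissions[agent] = set(permissions)

    def transition(self, agent: str, target: State) -> None:
        current = self.states[agent]
        if target not in TRANSITIONS[current]:
            raise ValueError(f"{agent}: {current.value} -> {target.value} not allowed")
        self.states[agent] = target

    def invoke(self, agent: str, capability: str) -> bool:
        """Access control: only running agents may use capabilities they were granted."""
        return (self.states.get(agent) is State.RUNNING
                and capability in self.permissions.get(agent, set()))


runtime = AgentRuntime()
runtime.register("vision-agent", {"camera.read", "bus.publish"})
runtime.transition("vision-agent", State.RUNNING)
print(runtime.invoke("vision-agent", "camera.read"))    # True
print(runtime.invoke("vision-agent", "actuator.move"))  # False: never granted
```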

This leads to the third core capability of the AI OS: a unified ("trinity") control mechanism for tasks, resources, and state. In traditional platforms, tasks are usually configured statically, resources are allocated on demand, and state management relies on external monitoring. In agent systems, these three elements are dynamically coupled: whether an agent can execute a task depends on its current state, the resources available, and behavioral feedback from other agents in the system.
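
The coupling of task, resources, and state can be expressed as a single admission check evaluated at run time rather than a static configuration. This is a sketch; the fields, thresholds, and task names below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AgentStatus:
    healthy: bool
    battery_pct: float
    busy: bool


@dataclass
class Task:
    name: str
    mem_mb: int
    npu_share: float           # fraction of NPU capacity required
    min_battery_pct: float
    conflicts_with: set[str]   # peer tasks that must not run concurrently


def admit(task: Task, status: AgentStatus, free_mem_mb: int, free_npu: float,
          peer_activity: set[str]) -> tuple[bool, str]:
    """Admit a task only if agent state, free resources, and peer feedback all allow it."""
    if not status.healthy or status.busy:
        return False, "agent state"
    if status.battery_pct < task.min_battery_pct:
        return False, "battery"
    if task.mem_mb > free_mem_mb or task.npu_share > free_npu:
        return False, "resources"
    if task.conflicts_with & peer_activity:
        return False, "peer conflict"
    return True, "ok"


calibrate = Task("arm_calibration", 512, 0.3, 40.0, {"floor_cleaning"})
print(admit(calibrate, AgentStatus(True, 80.0, False), free_mem_mb=1024,
            free_npu=0.5, peer_activity={"floor_cleaning"}))  # (False, 'peer conflict')
```

Returning a reason alongside the decision lets the OS decide whether to retry later (resources, peers) or reassign the task (agent state).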

These trends collectively indicate that the rise of edge agents is driving a paradigm shift in operating systems.

If traditional operating systems were born for programs, then the upcoming edge AI operating system is designed for agents. It must not only understand hardware and models but also comprehend behaviors, coordination, and ecosystems.

Currently, the challenge faced by CIOs isn't "whether to deploy AI" but "how to systematically plan for AI". The emergence of agents is gradually transforming AI from "project expenditure" to "systematic infrastructure expenditure".

A ZEDEDA survey shows that over 54% of enterprises have adopted a "cloud + edge" hybrid deployment model, and within the next two years, it's anticipated that more than 60% of new AI budgets will be allocated to edge deployments, with nearly half explicitly directed towards building "autonomous AI capabilities". This reflects a fundamental shift in the structure of enterprise AI expenditures: from CAPEX-dominated "model procurement + deployment costs" to OPEX-dominated "intelligent services + agent subscriptions".

Enterprises will no longer base payments on the "number of models" but manage budgets based on the "agent lifecycle". Instead of purchasing a specific model once, enterprises will subscribe to certain agent functionalities and be billed based on performance. All of this signifies that the industrialization path for edge agent systems is poised for acceleration.
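
As a worked example of performance-based billing, assume a hypothetical agent subscription with a base fee, a per-completed-task fee, and a service credit when an SLA target is missed. The formula and numbers are illustrative only and do not come from the survey.

```python
def monthly_charge(base_fee: float, tasks_done: int, per_task_fee: float,
                   sla_met_ratio: float, sla_target: float = 0.99,
                   credit_pct: float = 0.10) -> float:
    """Hypothetical outcome-based bill for one agent subscription."""
    charge = base_fee + tasks_done * per_task_fee  # usage-linked component
    if sla_met_ratio < sla_target:
        charge *= 1.0 - credit_pct                 # service credit for a missed SLA
    return round(charge, 2)


print(monthly_charge(200.0, 1500, 0.05, sla_met_ratio=0.995))  # SLA met
print(monthly_charge(200.0, 1500, 0.05, sla_met_ratio=0.97))   # SLA missed
```

With 1,500 completed tasks the bill is 200 + 1,500 × 0.05 = 275; missing the SLA applies a 10% credit, giving 247.5. Spend then tracks what the agent actually delivered rather than how many models were bought.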

Four Thresholds from "Models Can Run" to "Agents Can Live"

Although the future of edge agents is becoming increasingly clear, and the technological path is gradually unfolding, transitioning from "models can run" to "agents can live" isn't a linear evolution but a systematic upgrade across four thresholds.

First, scheduling complexity is one of the most immediate and challenging issues.

Edge scenarios are inherently heterogeneous, with diverse device types, varying compute architectures, and intermittent network conditions. The models, resources, and sensor interfaces that agents rely on differ from device to device, which makes a single unified scheduling strategy hard to apply. Worse still, agents have dynamic state, and their behavior depends on the environment and changes over time. The scheduling system must therefore not only allocate resources but also understand each agent's current intent and whether a given task is feasible for it.
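
A sketch of capability-aware placement under intermittent connectivity, assuming hypothetical device, model, and sensor names: an agent can be placed only on a device that has every model and sensor it needs, and if all suitable devices are offline the request is deferred and retried rather than dropped.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Device:
    name: str
    models: set[str]   # models installed on the device
    sensors: set[str]  # sensors attached to the device
    online: bool       # current link status (edge links are intermittent)


def place(agent: dict, devices: list[Device], pending: list[dict]) -> Optional[str]:
    """Host the agent on a reachable device that has every model and sensor it needs."""
    capable = [d for d in devices
               if agent["models"] <= d.models and agent["sensors"] <= d.sensors]
    if not capable:
        raise LookupError(f"no device can host {agent['name']}")
    reachable = [d for d in capable if d.online]
    if not reachable:
        pending.append(agent)  # defer rather than fail: the link may come back
        return None
    return reachable[0].name


def retry_pending(devices: list[Device], pending: list[dict]) -> list[tuple[str, str]]:
    """Re-attempt deferred placements; agents still unreachable are re-queued."""
    waiting = list(pending)
    pending.clear()
    placed = []
    for agent in waiting:
        host = place(agent, devices, pending)
        if host is not None:
            placed.append((agent["name"], host))
    return placed


devices = [
    Device("cam-gateway", {"detector-s"}, {"camera"}, online=False),
    Device("pump-node", {"vibration-cnn"}, {"accelerometer"}, online=True),
]
pending: list[dict] = []
print(place({"name": "vibration-agent", "models": {"vibration-cnn"},
             "sensors": {"accelerometer"}}, devices, pending))
print(place({"name": "vision-agent", "models": {"detector-s"},
             "sensors": {"camera"}}, devices, pending))
devices[0].online = True  # link restored
print(retry_pending(devices, pending))
```

Distinguishing "no device can ever host this" (an error) from "no suitable device is reachable right now" (a deferral) is one simple way to encode feasibility separately from momentary availability.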

The second threshold is model diversity.

In practical applications of edge AI, more and more tasks require the collaborative effort of general language models and vertical industry models. However, these two types of models differ significantly in operating mechanisms, input structures, computing power requirements, and response time limits. Traditional model-centric scheduling has become inadequate for the collaborative operation of agents.
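
To illustrate why model-centric scheduling falls short, here is a sketch of a planner that chains a fast vertical model with a slower general language model under one latency budget. The model names and p95 latencies are hypothetical placeholders, not measurements.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ModelProfile:
    name: str
    kind: str              # "general" or "vertical"
    p95_latency_ms: float  # hypothetical on-device latency
    domains: set[str]      # domains a vertical model covers


MODELS = [
    ModelProfile("general-llm-3b", "general", 900.0, set()),
    ModelProfile("defect-classifier", "vertical", 15.0, {"visual_qc"}),
    ModelProfile("vibration-model", "vertical", 8.0, {"vibration"}),
]


def plan(request: dict) -> list[str]:
    """Chain a vertical model and the general model within one latency budget."""
    budget = float(request["deadline_ms"])
    steps: list[str] = []
    vertical: Optional[ModelProfile] = next(
        (m for m in MODELS if m.kind == "vertical" and request["domain"] in m.domains), None)
    if vertical is not None and vertical.p95_latency_ms <= budget:
        steps.append(vertical.name)  # fast, domain-specific judgment first
        budget -= vertical.p95_latency_ms
    general = next(m for m in MODELS if m.kind == "general")
    if request.get("needs_explanation") and general.p95_latency_ms <= budget:
        steps.append(general.name)   # slower language step only if time remains
    return steps


print(plan({"domain": "visual_qc", "deadline_ms": 50}))
print(plan({"domain": "visual_qc", "deadline_ms": 1000, "needs_explanation": True}))
print(plan({"domain": "visual_qc", "deadline_ms": 500, "needs_explanation": True}))
```

An empty plan means nothing fits the budget, so the caller has to degrade gracefully or escalate; the point is that the decision depends on the task, the deadline, and both models together, not on any one model in isolation.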

More challenging still is the third threshold: data privacy and compliance.

The defining characteristic of edge AI is localized intelligence, which also means the data it relies on is highly private and sensitive, covering core assets such as enterprise operating metrics, user behavior trajectories, and production-line status. In traditional AI, data is uploaded to the cloud for centralized training and inference; in agent systems, data is usually generated, processed, and acted on locally. Enabling agents to collaborate and learn without compromising data privacy is therefore a formidable problem.
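
The article does not name a technique, but one well-known approach to collaborative learning without moving raw data is federated averaging (FedAvg): each site trains locally and only model weights are averaged, weighted by sample count. The sketch below uses a toy linear model and invented data. Shared weights can still leak information, so real deployments typically add secure aggregation or differential privacy on top.

```python
def local_update(weights: list[float], data: list[tuple[list[float], float]],
                 lr: float = 0.1, epochs: int = 50) -> list[float]:
    """SGD on a linear model y = w . x using only this site's private data."""
    w = list(weights)
    for _ in range(epochs):
        for x, y in data:
            error = sum(wi * xi for wi, xi in zip(w, x)) - y
            w = [wi - lr * error * xi for wi, xi in zip(w, x)]
    return w


def fed_avg(updates: list[tuple[list[float], int]]) -> list[float]:
    """FedAvg aggregation: average site weights, weighted by sample count."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


# Hypothetical private datasets drawn from y = 2*x + 1; the trailing 1.0 is a bias input.
site_a = [([1.0, 1.0], 3.0), ([2.0, 1.0], 5.0)]
site_b = [([3.0, 1.0], 7.0), ([0.5, 1.0], 2.0)]

global_w = [0.0, 0.0]
for _ in range(20):  # communication rounds
    updates = [(local_update(global_w, d), len(d)) for d in (site_a, site_b)]
    global_w = fed_avg(updates)  # only weights cross site boundaries
print(global_w)
```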

Finally, the issue of agent governance has gradually emerged.

When multiple agents collaborate within the same system, resource contention, task conflicts, competing strategies, and even deliberate deception are inevitable. Traditional task-priority schemes become hard to manage in agent systems, especially when agents can learn or update themselves, because their behavior then becomes unpredictable and system risk rises.
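
One way to contain contention and out-of-policy behavior is to route every resource claim through an arbiter that first checks a policy allowlist, then grants exclusive access by priority. The policy table, priorities, and agent names below are hypothetical, and the sketch covers only this arbitration step, not detection of deception or drift in learned behavior.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Request:
    priority: int  # lower value = more urgent
    seq: int       # tie-breaker: earlier requests win ties
    agent: str = field(compare=False)
    resource: str = field(compare=False)
    action: str = field(compare=False)


# Hypothetical policy: the only actions each agent may take at all.
POLICY = {
    "safety-agent": {"stop_line", "lock_zone"},
    "logistics-agent": {"move_pallet"},
}


class Arbiter:
    def __init__(self) -> None:
        self.queue: list[Request] = []
        self.holders: dict[str, str] = {}  # resource -> agent currently holding it
        self.audit: list[str] = []
        self._seq = 0

    def request(self, agent: str, resource: str, action: str, priority: int) -> None:
        if action not in POLICY.get(agent, set()):
            self.audit.append(f"DENY {agent} {action}")  # out-of-policy: never queued
            return
        heapq.heappush(self.queue, Request(priority, self._seq, agent, resource, action))
        self._seq += 1

    def grant_round(self) -> list[str]:
        """Give each free resource to its most urgent waiting request."""
        granted, deferred = [], []
        while self.queue:
            req = heapq.heappop(self.queue)
            if req.resource in self.holders:
                deferred.append(req)  # contention: wait for release
                continue
            self.holders[req.resource] = req.agent
            granted.append(f"{req.agent}:{req.action}")
        for req in deferred:
            heapq.heappush(self.queue, req)
        return granted

    def release(self, resource: str) -> None:
        self.holders.pop(resource, None)


arbiter = Arbiter()
arbiter.request("logistics-agent", "zone-3", "move_pallet", priority=5)
arbiter.request("safety-agent", "zone-3", "lock_zone", priority=0)
arbiter.request("logistics-agent", "zone-3", "stop_line", priority=1)  # not permitted
print(arbiter.audit)
print(arbiter.grant_round())  # safety wins the contested zone
arbiter.release("zone-3")
print(arbiter.grant_round())
```

Keeping the allowlist outside the agents means a self-updating agent can change how it behaves but not what it is permitted to do, and the audit log records every attempt to step outside those bounds.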

Only by surmounting these four thresholds can agents truly "come alive", not just running and collaborating but also continuously evolving, self-repairing, and operating safely within complex systems.

Final Thoughts

The future of edge AI lies not in deploying more models but in activating more agents that "can understand, act, and collaborate". Intelligence is no longer about stacking compute and model inference in the cloud but about machines that have perception and purpose in the physical world and can react and make judgments in their local environment. In this coming phase, enterprises will not just deploy models; they will schedule agents.

AI doesn't just run at the edge; it begins to think from the edge.

For enterprises, the question is no longer whether to adopt AI, but rather a strategic decision on whether to build their own agent ecosystem.

Edge agents will not merely serve as tools in the future; they will evolve into partners. These agents will make decisions, collaborate effectively, and coexist harmoniously with humans over the long term. Our efforts are not focused on training models, but rather on shaping new organizational boundaries, fostering new system intelligence, and establishing new industrial orders.

References:

1. Edge AI Matures: Widespread Adoption, Rising Budgets, and New Priorities Revealed in ZEDEDA’s CIO Survey. Source: ZEDEDA

2. 16 Changes to AI in the Enterprise: 2025 Edition. Source: a16z.com

3. Why is EDGE AI Growing So Fast? Source: imaginationtech.com