Revolutionizing AI Memory with SOCAMM: The Next Frontier in Computing Power

08/04 2025

In today's digital landscape, artificial intelligence (AI) is transforming our world at breakneck speed. From autonomous vehicles to smart homes, and from medical diagnostics to financial services, AI applications permeate every aspect of our lives. However, as AI models grow in complexity and data volumes surge, traditional computing architectures and storage technologies are being pushed to their limits.

Traditional data centers and IT infrastructure often struggle to process data at this scale, and high hardware costs deter many enterprises and institutions. Against this backdrop, SOCAMM (Small Outline Compression Attached Memory Module) has emerged as a promising new memory technology that could reshape the industry.

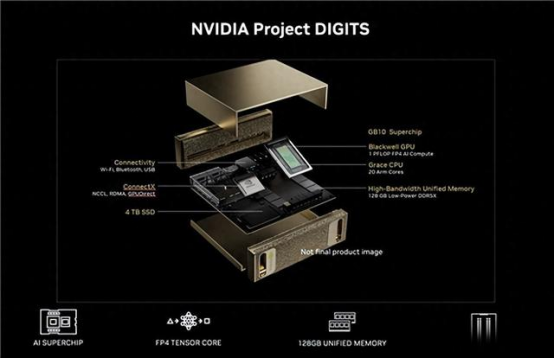

Following NVIDIA's release of the personal AI supercomputer "Digits" in January, leading PC manufacturers such as Lenovo and HP, along with memory makers Samsung Electronics, SK Hynix, Longsys, and others, are actively embracing SOCAMM memory technology. Let's look at why SOCAMM is so highly valued.

01. Computing Power Dilemma: High Costs and Limited Availability Spark New AI Memory Innovations

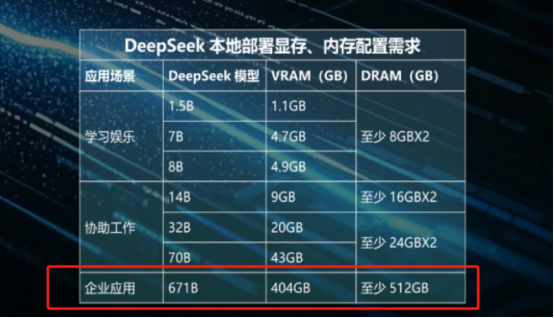

The parameter count of large AI models is growing roughly tenfold per year. For instance, the full parameter set of the 671B version of the DeepSeek R1 model occupies 720GB and requires over 512GB of memory during training. Traditional DDR5 memory is increasingly inadequate for the data volumes of AI computing: when a processor spends as much as 80% of its time waiting for data transfers, compute utilization commonly falls below 50%.
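To make the scale concrete, the following minimal sketch estimates how much memory the weights alone would occupy at a few common numeric precisions. The precision table and the function name `weight_footprint_gb` are illustrative assumptions, not taken from the article; the estimate ignores activations, optimizer state, and caches, and it does not attempt to reproduce the 720GB figure exactly.

```python
# Illustrative estimate of weight storage only (no activations,
# optimizer state, or KV cache). The precisions are assumptions.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp8": 1}


def weight_footprint_gb(num_params: float, dtype: str) -> float:
    """Return the weight size in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    params = 671e9  # parameter count quoted in the article
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype}: {weight_footprint_gb(params, dtype):,.0f} GB")
```

At one byte per parameter the weights come to about 671GB, the same order of magnitude as the figure quoted above, which suggests why memory capacity becomes a constraint well before compute does.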

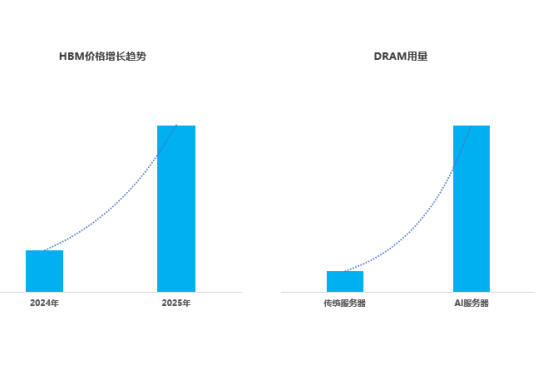

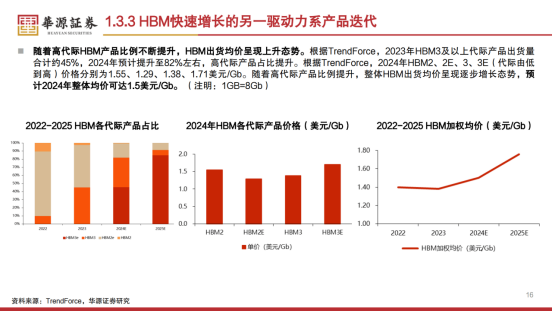

Consequently, the role of HBM (High Bandwidth Memory) has become pivotal, especially for AI training tasks. In early 2025, the demand for HBM surged, with spot prices of HBM3 chips jumping 300% compared to the beginning of 2024. The DRAM usage of a single AI server is eight times that of a traditional server.

However, HBM is not ordinary DRAM; its price is astronomical. At the same density, HBM costs approximately five times that of DDR5. Currently, HBM costs rank third in AI server expenses, accounting for roughly 9%, with an average selling price of $18,000 per unit.

Despite its high cost, HBM supply remains tight, and prices continue to climb. TrendForce reported in May 2024 that HBM pricing negotiations for 2025 had begun in the second quarter of 2024. Because overall DRAM capacity is limited, suppliers initially raised prices by 5-10% to manage capacity constraints, affecting HBM2e, HBM3, and HBM3e.

For Chinese AI enterprise users, acquiring AI solutions equipped with HBM and NVIDIA's high-end offerings is even more challenging. In January 2025, the United States imposed a new round of bans on advanced specifications such as HBM2e, HBM3, and HBM3e, effectively blocking China's access to the latest HBM technology. Simultaneously, it tightened supply chain control, restricting overseas companies using American technology (like Samsung and SK Hynix) from supplying to China.

In this context, SOCAMM memory, with its strong performance, energy efficiency, and flexibility, is emerging as a new favorite in the AI era's memory landscape.

02. HBM and SOCAMM: Dual Engines for Extreme Bandwidth and Flexible Expansion

HBM and SOCAMM are not simply "high-end" and "low-end" options. They occupy complementary tiers of the memory hierarchy across the entire computing stack.

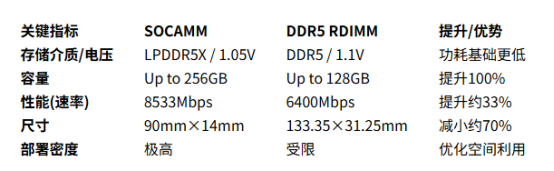

HBM and SOCAMM sit at opposite ends of the spectrum, one built for extreme performance and the other for flexible expansion. HBM provides a 1024-bit ultra-wide bus and TB/s-level bandwidth directly to the GPU through 3D DRAM stacking and 2.5D silicon interposer packaging, meeting the bandwidth demands of HPC, AI training, high-end graphics, and similar scenarios.

Unlike HBM, which is soldered next to the GPU and prioritizes bandwidth above all else, SOCAMM connects directly to the Grace CPU via high-speed buses such as NVLink-C2C, offering a large-capacity, medium-bandwidth, low-power memory pool on the CPU side. SOCAMM also turns highly integrated LPDDR into hot-swappable CAMM2 modules, replacing the traditional onboard soldering method of GDDR with slots and addressing heat dissipation, capacity flexibility, and system cost. It targets markets such as AI servers and edge workstations that require "medium-to-high bandwidth + upgradability."

The two complement each other: HBM accepts fixed, high-cost packaging in exchange for extreme bandwidth, while SOCAMM uses replaceable, low-cost modules to gain capacity, power, and design flexibility. When a system needs extreme bandwidth next to the core computing unit and room for later expansion at the motherboard level, HBM can serve as near-memory or cache, and SOCAMM can serve as main memory or an expansion pool, raising both the "bandwidth ceiling" and the "capacity ceiling" in the same device.

Put simply, HBM is the GPU's high-speed stove, fixed in place, while SOCAMM is the CPU's granary, a "mobile warehouse" whose capacity can be added as needed.
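The division of labor described above can be sketched as a simple two-tier placement policy. This is a hypothetical illustration, not how NVIDIA's software actually places data: the `Buffer` type, the `accesses_per_step` hotness metric, the buffer sizes, and the 192GB HBM capacity are all assumptions made for the example. Buffers that are accessed most often per gigabyte go to HBM until it is full, and everything else spills to the SOCAMM pool.

```python
from dataclasses import dataclass


@dataclass
class Buffer:
    name: str
    size_gb: float            # must be positive
    accesses_per_step: float  # hypothetical hotness metric


def place_buffers(buffers, hbm_capacity_gb):
    """Greedy placement: hottest-per-GB buffers fill HBM first,
    the remainder spills to the larger SOCAMM pool."""
    placement = {}
    free_gb = hbm_capacity_gb
    ranked = sorted(
        buffers,
        key=lambda b: b.accesses_per_step / b.size_gb,
        reverse=True,
    )
    for buf in ranked:
        if buf.size_gb <= free_gb:
            placement[buf.name] = "HBM"
            free_gb -= buf.size_gb
        else:
            placement[buf.name] = "SOCAMM"
    return placement


# Made-up workload, for illustration only.
EXAMPLE_BUFFERS = [
    Buffer("activations", 20, 10.0),
    Buffer("kv_cache", 60, 4.0),
    Buffer("weights", 100, 1.0),
    Buffer("cold_checkpoint", 200, 0.01),
]

if __name__ == "__main__":
    for name, tier in place_buffers(EXAMPLE_BUFFERS, 192).items():
        print(f"{name}: {tier}")
```

In this made-up workload the three hot buffers fit in HBM and only the rarely touched checkpoint lands in SOCAMM, which mirrors the "near-memory plus expansion pool" split.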

03. SOCAMM Breakthrough: A Paradigm Shift from Performance to Architecture

SOCAMM is a memory technology based on LPDDR5X DRAM, developed by NVIDIA in collaboration with Samsung, SK Hynix, and Micron. With 694 I/O ports (compared with 644 on traditional LPCAMM), it raises data transmission bandwidth to 2.5 times that of traditional DDR5 solutions.

SOCAMM's emergence is not just a technological innovation but a profound industry revolution. Its novel modular design completely transforms the traditional use of memory.

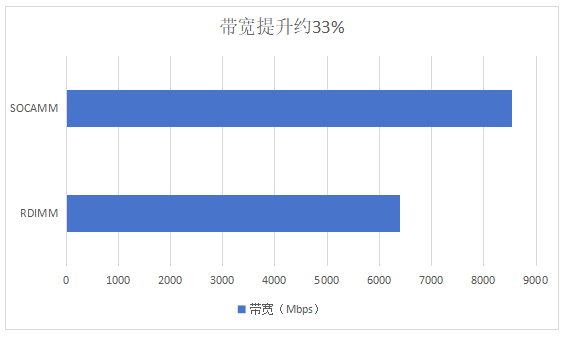

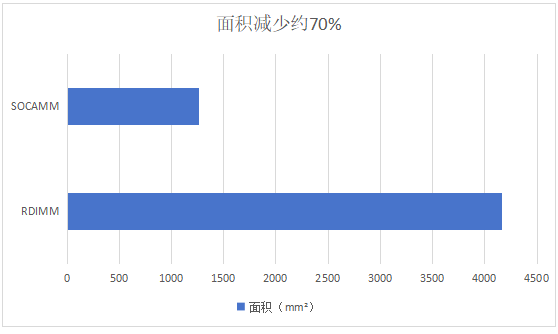

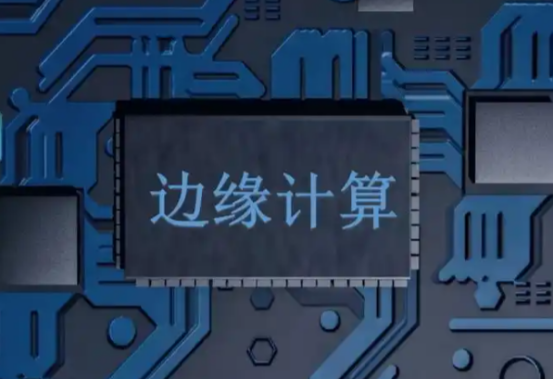

In terms of performance, SOCAMM excels. Its underlying design, including a 128-bit bus and a near-CPU layout, optimizes signal integrity and reduces signal transmission delay, significantly enhancing overall performance. As shown in the figure below, SOCAMM's data transmission rate can reach 8533Mbps, approximately 33% higher than DDR5 RDIMM's 6400Mbps, meeting the very high data throughput requirements of AI training and high-performance computing.
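The figures above translate into peak bandwidth with simple arithmetic: bus width in bytes multiplied by the per-pin transfer rate. The sketch below works this through. The 128-bit bus and both data rates come from the article; the 64-bit data width for a DDR5 RDIMM (excluding ECC bits) is an assumption added here for comparison, and the results are theoretical peaks rather than measured throughput.

```python
def peak_bandwidth_gbs(bus_width_bits: int, rate_mbps_per_pin: float) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 1e9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * rate_mbps_per_pin * 1e6 / 1e9


if __name__ == "__main__":
    socamm = peak_bandwidth_gbs(128, 8533)  # figures from the article
    rdimm = peak_bandwidth_gbs(64, 6400)    # 64-bit width is an assumption
    print(f"SOCAMM peak:     {socamm:.1f} GB/s")
    print(f"DDR5 RDIMM peak: {rdimm:.1f} GB/s")
    print(f"Per-pin rate ratio:   {8533 / 6400:.2f}x")
    print(f"Per-module bandwidth: {socamm / rdimm:.2f}x")
```

Under these assumptions a module peaks at roughly 136.5GB/s against 51.2GB/s for the RDIMM, a ratio of about 2.7, which is in the same range as the 2.5 times figure quoted earlier.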

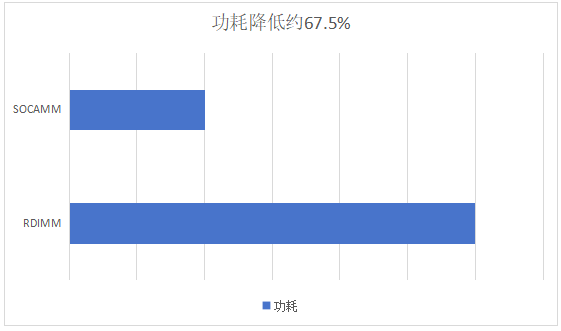

Regarding energy efficiency, SOCAMM also performs exceptionally well. It employs a low voltage supply of 1.05V, with a power consumption of only one-third that of DDR5 RDIMM. Combined with liquid cooling technology, SOCAMM effectively reduces power consumption in high-density deployment scenarios, which is crucial for data center operating cost control and sustainable development.

Moreover, SOCAMM breaks the physical mold of traditional memory. It measures only 90mm×14mm, a footprint roughly 70% smaller than DDR5 RDIMM's 133.35mm×31.25mm. This ultra-compact design lets SOCAMM fit more flexibly into compact devices, such as edge computing servers and small AI inference devices, delivering higher memory capacity and computing power in limited space.
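The 70% figure can be checked directly from the quoted dimensions. The short sketch below compares board areas only; it is an illustration that treats both modules as plain rectangles and ignores height, connectors, and keep-out zones.

```python
def area_mm2(length_mm: float, width_mm: float) -> float:
    """Rectangular board area in square millimetres."""
    return length_mm * width_mm


def footprint_reduction(small_mm2: float, large_mm2: float) -> float:
    """Fraction by which the smaller board undercuts the larger one."""
    return 1.0 - small_mm2 / large_mm2


if __name__ == "__main__":
    socamm = area_mm2(90, 14)         # dimensions from the article
    rdimm = area_mm2(133.35, 31.25)   # dimensions from the article
    print(f"SOCAMM: {socamm:.0f} mm^2, RDIMM: {rdimm:.0f} mm^2")
    print(f"Reduction: {footprint_reduction(socamm, rdimm):.1%}")
```

The areas work out to 1260 mm² and about 4167 mm², a reduction of just under 70%, consistent with the claim.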

These innovative designs have propelled SOCAMM to new heights in performance, energy efficiency, and space utilization, introducing new solutions to high-performance computing and storage fields.

04. Industrial Restructuring: From PCs to AI Servers to Green Data Centers

SOCAMM's emergence not only drives technological innovation but also sparks new business models and shifts in the industrial chain. Various manufacturers are actively deploying SOCAMM technology, including memory manufacturers like Samsung Electronics, SK Hynix, and Longsys, as well as PC manufacturers such as Lenovo and HP. Additionally, SOCAMM memory technology is suitable for scenarios requiring high-performance memory support, such as AI servers, high-performance computing, and data centers.

With the rapid development of AI, big data, cloud computing, and other technologies, the demand for high-performance memory is increasing. SOCAMM is poised to make significant strides in the following areas:

In AI servers, SOCAMM's capacity and bandwidth suit the needs of AI training and inference tasks. It can serve as a cost-effective expansion solution on the CPU side, providing a large-capacity (up to 128GB per module), medium-bandwidth memory pool through direct connection to the CPU via NVLink-C2C, forming a seamless complement to HBM.
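As a rough sizing aid, the sketch below counts how many modules a given capacity target would need at the 128GB-per-module maximum quoted above. It is a back-of-the-envelope illustration that treats capacity as the only constraint; the function name `modules_needed` is hypothetical, and real platforms limit the number of slots.

```python
import math

MODULE_CAPACITY_GB = 128  # per-module maximum quoted in the article


def modules_needed(target_gb: float, module_gb: int = MODULE_CAPACITY_GB) -> int:
    """Smallest number of modules whose total capacity covers target_gb."""
    return math.ceil(target_gb / module_gb)


if __name__ == "__main__":
    for target in (512, 720):  # capacity figures mentioned in the article
        count = modules_needed(target)
        print(f"{target} GB -> {count} modules "
              f"({count * MODULE_CAPACITY_GB} GB installed)")
```

For the 512GB and 720GB figures mentioned earlier in the article, this gives four and six modules respectively.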

In green data center applications, SOCAMM's high energy efficiency (power consumption is only one-third that of DDR5 RDIMM) and high bandwidth make it an ideal choice. Its compact size and modular design can improve data center space utilization, delivering higher memory capacity and computing power in limited space.

In edge computing scenarios, SOCAMM's compact size and low power consumption make it well suited to edge devices. At 90mm×14mm, it integrates easily into compact equipment such as small servers, smart gateways, and IoT devices. Maintenance personnel can replace memory modules without interrupting device operation, reducing maintenance costs and system downtime.

This year, industry giants have already taken action. Micron is mass-producing SOCAMM for NVIDIA's GB200/NVL platform; Samsung and SK Hynix have joined the fray; and server manufacturers such as Dell and Lenovo have commenced server production based on SOCAMM. At roughly one-quarter the cost of HBM, SOCAMM is clearly positioned as the cost-effective option for AI inference and edge computing. According to plans, Micron's production capacity will reach 800,000 modules in 2025, and Samsung and SK Hynix will enter full mass production in 2026. The second-generation SOCAMM is expected to push bandwidth to 400GB/s.

In the Chinese market, the semiconductor storage brand Longsys is reported to have unique advantages in SOCAMM research and development and in its industrial layout. It has jointly undertaken SOCAMM development work with leading customers, and related products have been successfully powered on and brought up. With its three breakthroughs in performance, flexible deployment, and energy efficiency, SOCAMM is redefining server memory architecture in the AI era and has become a key force supporting top platforms such as NVIDIA Grace Blackwell, deserving the attention of domestic users.

05. Conclusion: Embarking on a New Era of AI Hardware

The market potential of SOCAMM technology is immense. With the rapid advancement of artificial intelligence, high-performance computing, and edge computing, the demand for high-performance, low-power memory solutions will continue to grow. With its technical advantages and diverse application scenarios, SOCAMM is expected to secure a significant market share in these fields.

SOCAMM is not just a technological innovation but a disruptive reconfiguration of the hardware paradigm in the AI era. When NVIDIA inserts a finger-sized memory module into the Digits supercomputer, humanity may be witnessing the most profound memory revolution since the birth of the von Neumann architecture.

The culmination of this transformation may not be a breakthrough in a single technical parameter but a new era in which everything can evolve intelligently in real time. With continuous technological advancements and the gradual maturing of the ecosystem, SOCAMM is expected to play a pivotal role in data centers, edge computing, and personal AI devices. It will not only propel the further development of memory technology but also bring new opportunities to the entire computing industry.