Pixel 9 Launched! AI Hardware Routes Diverge: Google, Apple, and Huawei Take Different Paths

08/15 2024

On August 13 (US local time), Google's annual "Made by Google" event for 2024 officially kicked off. With AI at its core, Google used the event to present its latest hardware and software achievements and innovations.

New products unveiled included the Pixel 9 series of smartphones, the Pixel 9 Pro Fold foldable, the Pixel Watch 3 smartwatch, and the Pixel Buds Pro 2 wireless earbuds, a noticeably larger lineup than last year's.

As usual, September and October are the peak months of competition in the smartphone market, with Google, Apple, Huawei, and other brands launching their annual flagships during this period. Google's event has usually been held in October; this year it was moved up, most likely to get ahead of the competition.

While introducing the all-new Gemini, Google also took a subtle swipe at Apple Intelligence, underscoring how fierce the competition among brands has become.

You're probably curious about what Google announced, so here is a brief recap.

Pixel 9 Leads Google's Suite, AI as the Highlight

The most eye-catching hardware product at this conference is undoubtedly the Pixel 9 series, which includes not only the Pixel 9, Pixel 9 Pro, and Pixel 9 Pro XL smartphones but also the second-generation foldable phone, the Pixel 9 Pro Fold, which has been incorporated into the Pixel 9 series for the first time.

Let's start with the three smartphones. The standard Pixel 9 has a 6.3-inch display, while the Pixel 9 Pro and Pixel 9 Pro XL have 6.3-inch and 6.8-inch displays, respectively.

The entire Pixel 9 series runs on Google's latest Tensor G4 processor, and the three phones' main cameras use Samsung's 50-megapixel GNK sensor. The ultra-wide-angle camera uses Sony's IMX858 sensor, which the Pixel 9 Pro also uses for its telephoto and front cameras.

Image source: Google

To better support on-device AI, the Pixel 9 series gets a memory (RAM) upgrade: 12GB on the standard model and 16GB on the Pro models, bringing it level with mainstream Chinese phones.

Compared to its predecessor, the Pixel 9 Pro Fold is noticeably thinner and lighter, measuring 10.5mm thick when folded and 5.1mm when unfolded. Even so, with a 6.3-inch external screen and an 8-inch internal screen, it weighs 257g, heavier than almost every other current-generation foldable.

In other respects, the Pixel 9 Pro Fold has 16GB of RAM and a camera setup of a 48MP wide-angle, a 10.8MP ultra-wide-angle, and a 10.8MP telephoto lens, making for a solid overall package.

Image source: Google

I won't go into detail on the Pixel Buds Pro 2 earbuds or the Pixel Watch 3, because the real highlight of this event was the deeply integrated Gemini large model.

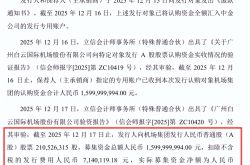

Gemini is deeply integrated with Google's apps and the Android system, allowing it to handle complex queries on its own. It is also multimodal, able to analyze images, voice, and text.

Specifically, Google's on-device AI is powered by the lightweight multimodal model Gemini Nano, while the faster, cloud-based Gemini 1.5 Flash has also been brought in. Together they give the new Pixel devices a range of new AI features, including Pixel Studio, an image generator that uses a local diffusion model to create stickers and images from text prompts, similar to Apple's Image Playground.

AI can also edit photos, add objects that were never in the scene, and generate customized weather reports. The camera app gains Add Me, which merges two shots so the photographer can appear in a group photo; the Pixel Screenshots feature classifies and remembers information from screenshots; and Call Notes records and summarizes phone calls. Gemini Live, Google's answer to GPT-4o's voice mode, lets users hold free-flowing spoken conversations with Gemini.

Image source: Google

Like Apple Intelligence, Gemini also uses local processing. However, Google stressed that Gemini does not rely on third-party AI services, giving users greater peace of mind about privacy and security. The statement is a subtle dig at Apple's decision to use ChatGPT for text generation, image creation, and other functions, and it also casts Google's approach as the opposite of integrating third-party large models.

This raises a question: which is the "correct" path for AI hardware, developing proprietary large models or integrating a variety of them?

AI Hardware Routes Diverge: Google, Apple, and Huawei Take Different Paths

To answer this question, it makes sense to look at Google, Apple, and Huawei, all of which have both hardware and software capabilities.

According to the latest Counterpoint data, Android, iOS, and HarmonyOS ranked first, second, and third in global smartphone operating system market share in Q1 2024, at 77%, 19%, and 4%, respectively. Together these three systems dominate the global smartphone market, and each company generally runs the same operating system across its other hardware to keep its ecosystem unified.

At the operating system level, Google and Huawei are similar in taking an open approach to working with third parties, while Apple keeps tight control over both hardware and software and shuts third parties out. These operating models produce different strengths: open collaboration fosters diversity and playfulness, while centralized control delivers a unified ecosystem.

In terms of specific functions, the AI capabilities of hardware from Google, Apple, Huawei, and most mainstream brands are similar, covering AI assistants, image and text generation, photo editing, and real-time translation. The features themselves differ little; what differs is how the large models powering them are sourced.

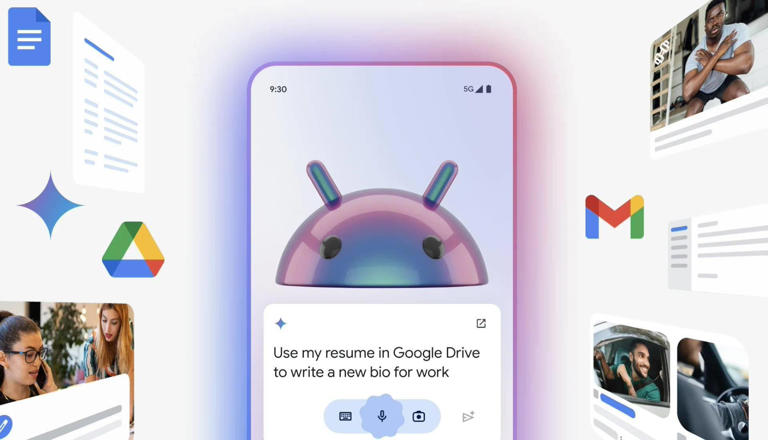

The three players have clearly adopted different strategies for integrating large AI models into their hardware, and these strategies run counter to their approaches to operating systems.

Interestingly, the brands are borrowing from one another. Google, despite Android's open model, relies on its own Gemini Nano to handle on-device AI. Huawei, in addition to its Pangu large model, has brought in third-party models such as Baidu's Wenxin, iFLYTEK Spark, and Zhipu Qingyan. Apple has announced a partnership with OpenAI and is looking for more partners, with Google Gemini reportedly on the shortlist, though given Google's stance at the event, that seems unlikely to materialize.

Image source: Apple

Google's strategy of handling AI independently offers strong consistency and privacy: with no handoffs to third-party AI providers, there is less risk of data leaking along the way, much like the high security Apple touts for its ecosystem.

Apple and Huawei, on the other hand, prefer to expand their AI arsenal by bringing in more partners. Because this requires more handoffs, their AI systems may not match Google's in consistency and privacy, but they are more versatile. For instance, they can work with a wider range of hardware, including industry-specific combinations such as cars, home appliances, drones, and robots, and they can expose interfaces that encourage more third-party apps, fostering a richer product ecosystem.

AI Hardware Competition Intensifies, "Independent Processing" Not Necessarily Optimal

Almost every smartphone brand is now building an AI strategy that combines on-device and cloud capabilities. Outside China, they mainly partner with Google and OpenAI; in China, they work with providers such as Baidu and ByteDance. The reason is simple: running large models purely on-device places heavy demands on hardware, which is also driving the steady increase in phone memory.

From a business perspective, combining on-device and cloud capabilities fits manufacturers' operations better. Relying solely on cloud servers for AI tasks is not the most economical option, given high inference costs and the need to handle many concurrent requests. Shifting part of the work onto the device helps manufacturers save money.

From the user's perspective, many scenarios call for an immediate AI response. On-device models reduce latency and protect privacy by keeping sensitive data off the cloud.

Image source: Honor

Therefore, as combining on-device and cloud AI becomes the norm, Apple's approach may suit most brands better, unless they have a comprehensive large model ecosystem like Google's that can support on-device AI on its own.

In terms of actual results, even Google, for all its emphasis on independent processing, has not shown exceptional competitiveness. Its heavily promoted Pixel Screenshots and Add Me features pale in comparison to what Chinese brands such as OPPO, Honor, and Huawei, which are already strong in AI applications, have delivered.

In the long run, Google's strategy of not relying on third-party AI may not be optimal. As the range of hardware types and software interfaces it must support grows, the limitations will become more apparent, and brands with more model options will gradually pull further ahead in AI capabilities.

Closing Remarks

It's worth remembering that Google Pixel first emphasized AI experiences back in 2016, when Google was among the earliest to proclaim an "AI First" strategy. At the time, mainstream AI technology was deep learning, which did not yet extend to the diverse AI functions we see today. That early start helped make Google a dominant player in large models outside China.

The Pixel 9 series and the other new products bring hardware and software upgrades of varying degrees, with particular emphasis on self-developed AI, reflecting Google's confidence that AI will change the user experience.

However, whether Google's AI experience truly surpasses its rival's will only become clear once Apple's AI features launch. Lei Technology will continue to closely follow the latest developments in the AI large model industry, and we look forward to the innovations ahead.

Source: Lei Technology