"Three Harvard post-00s entrepreneurs join forces to start a business, achieving a valuation of over 100 million yuan in just 7 months"

![]() 09/03 2024

09/03 2024

![]() 599

599

Author | Xuushan, Editor | Evan

“

Less than seven months after its establishment, the valuation has exceeded 100 million yuan.

” Cohere, founded by three post-90s entrepreneurs, has soared to a valuation of $36 billion, while Pika, founded by a post-95s entrepreneur Guo Wenjing, has secured a valuation of $5.5 billion. It is evident that the AI startup wave in Silicon Valley is influencing everyone, and Leonard Tang, a post-00s entrepreneur, is among the thousands caught up in this trend.

Leonard Tang is the founder and CEO of Haize Labs, an AI security startup, and a recent graduate of Harvard University.

Through a video, Haize Labs, founded by him, demonstrates the "fragility" of the security defenses of AI large models. With just a few lines of prompts, it is possible to bypass the security restrictions of these models, enabling them to generate vast amounts of pornographic text, violent images, and even automatically launch cyber attacks.

Currently, Haize Labs primarily leverages its flagship AI product, the Haizing automation suite, to conduct stress tests by attacking AI large models to uncover security vulnerabilities. According to foreign media outlet winbuzzer, prominent AI startups such as Anthropic and Scale AI are among its clients.

Founded in December 2023, Haize Labs has received at least four term sheets for investment within seven months of its establishment, with prominent venture capital firm Coatue also participating. Sources close to the matter revealed that Haize Labs has secured the latest round of funding led by General Catalyst, valuing the company at $100 million.

Achieving such a high valuation in just one or two funding rounds is a rare feat in the industry. On the one hand, it demonstrates investors' confidence in Haize Labs, and on the other, it underscores the potential of the AI security sector.

According to a report by international research firm CB Insights, the machine learning security market (MLSec) is growing rapidly. In 2023, the total investment in AI security startups reached $213 million, significantly surpassing the $70 million recorded in 2022.

According to incomplete statistics from Silicon Valley-based startup accelerator SVAC, at least five AI security unicorns have emerged in North America in 2024, with at least 39 AI security startups securing new funding rounds, raising a cumulative total of $800 million.

“The field of AI large model security remains a largely untapped market with immense potential for startups.” said one investor.

As AI attacks and jailbreaks of large models continue to occur, concerns about the risks of AI running out of control are growing. Haize Labs is tackling this challenge by focusing on AI jailbreaks and large model security.

01

Three Harvard graduates target AI "jailbreaks"

“Everyone turns a blind eye to AI large model jailbreaks.”

In an interview with the media, Leonard Tang mentioned this as the impetus for him and his two friends, Richard Liu and Steve Li, to found Haize Labs.

According to VentureBeat, Leonard Tang has temporarily suspended his first year of doctoral studies at Stanford University to focus on Haize Labs' development. Meanwhile, Richard Liu and Steve Li balance their academic pursuits while serving as undergraduate researchers at the Berkeley AI Research Lab.

Leonard Tang, founder and CEO of Haize Labs, told VentureBeat that Haize Labs is the commercialization of his undergraduate research on adversarial attacks and large model robustness. During his university years, he majored in mathematics and computer science.

AI direct translation, image source: official website

Leonard Tang's interest in large language model (LLM) jailbreaks first piqued about two years ago when he attempted to bypass Twitter's NSFW (Not Safe For Work) filter by conducting adversarial attacks on image classifiers, a tool designed to filter out inappropriate content for workplace viewing.

Subsequently, he realized that many people seemed to be turning a blind eye to AI large model jailbreaks, inspiring him to delve deeper into AI reliability and security research.

In April this year, he published a paper titled "How to Easily Break Out of Llama3" on Github. The paper pointed out that large language models like Llama 3 lack self-reflection capabilities and may not comprehend the meaning of their own outputs once induced.

As evidenced by his personal webpage on Harvard's website, Leonard Tang led a diverse university experience. Apart from his extensive research on LLM models, he also interned at prominent AI companies, including NVIDIA's MagLev ML Infrastructure Engineering team, where he contributed to autonomous vehicle research, and as an SDE intern at Amazon, working on big data supply chain logistics.

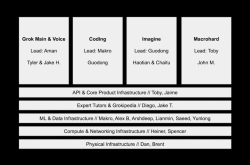

Fellow co-founders Richard Liu and Steve Li are also researchers in the AI field. Steve Li, a senior at Harvard majoring in computer science, focuses on AI security, speech processing, operating systems, and machine learning systems. He interned at Roblox's User Safety team, contributing to reporting features and the ML classification pipeline.

AI direct translation, image source: official website

Backed by Harvard and aiming for Silicon Valley, they have also garnered support from advisors and angel investors, building their own network. This includes professors from Carnegie Mellon University and Harvard, founders of Okta, Hugging Face, Weights and Biases, and Replit, as well as AI and security executives from companies like Google, Netflix, Stripe, and Anduril. Advisors like Graham Neubig provide expertise in LLM evaluation for Haize Labs.

Pliny the Prompter, a prominent figure in the AI jailbreak community, is also a friend and collaborator of Hazie. Known for quickly compromising the security of models like Llama 3.1 and GPT-4o, he has stated that it takes him only around 30 minutes to breach the world's most powerful AI models.

It is evident that Haize Labs has established a preliminary influence in the field of AI large model security and has built its own network to facilitate collaborations.

02

Traditional censorship mechanisms are not suitable for AI large models

Initially, Haize Labs tested various well-known generative AI programs, including AI video platform Pika, ChatGPT, and image generator DALL-E. They found that many of these tools generated violent or pornographic content, instructed users on how to produce biological weapons, and even allowed automated cyber attacks.

This is because traditional censorship methods, such as manual review and keyword filtering, are ineffective in accurately assessing the outputs of large models.

As AI large models are typically trained using deep learning and vast amounts of data, their decision-making processes can be opaque, making it challenging for traditional censorship mechanisms to control potential risks.

Furthermore, AI large models continuously learn and adapt to new data, and their outputs can vary over time and with different inputs. Traditional censorship mechanisms, often static and lagging behind, struggle to keep up with the dynamic changes in AI large models.

Additionally, AI large models can process and analyze massive amounts of data, generating content at a pace and with novel expressions that often exceed the expectations of traditional censorship mechanisms.

This has resulted in poor performance by censorship tools like OpenAI's Moderation API, Google's Perspective API, and Meta's Llama Guard.

AI direct translation, image source: official website

During their jailbreak tests on various AI models, Hazie found that Anthropic's Claude model performed relatively well in terms of security, while models like Vicuna and Mistral, which had not undergone explicit security fine-tuning, were vulnerable to jailbreaks.

In response to questions about the legality of jailbreaks, Haize Labs emphasizes that proactive measures are necessary to provide defensive solutions and prevent such incidents.

03

Securing orders from Anthropic and Scale AI; Haize's rapid commercialization progress

As the saying goes, "No pain, no gain." Interestingly, many of the organizations and model companies that Haize has attacked have not sued them but instead become partners.

Today, Haize Labs' clients include AI enthusiasts, government agencies, and model vendors. They have secured a $30,000 pilot project with AI large model startup Anthropic, a five-figure agreement with AI21, and partnerships with AI model platforms like Hugging Face, the UK's Artificial Intelligence Security Institute (AISI), and the AI engineering consortium MLCommons.

Moreover, Haize Labs has signed a letter of intent with Scale AI for $500,000, aiming to conduct domain-specific stress tests on LLMs in healthcare and finance, reassessing the models with each update to maintain robustness.

The Haizing Suite developed by Haize Labs is a set of search and optimization algorithms that combine fuzz testing and red team testing techniques to comprehensively inspect AI systems. Primarily used during development to induce hallucinations in large models for stress testing, it effectively alerts developers to security vulnerabilities.

"Only by rigorously, scalably, and automatically testing your models to understand all their extreme cases and vulnerabilities can customers begin to address these weaknesses," said Leonard Tang.

The Haizing Suite has been upgraded with various algorithms that use techniques like reinforcement learning to detect harmful content inputs. It leverages diverse testing scenarios to identify potential issues and provides actionable guidance by reverse-defining undesirable behaviors.

The suite is available in both free and commercial versions. While the free version requires an application, the commercial version is Hazie's primary source of revenue. The commercial version of the Haizing Suite provides CI/CD haizing and runtime defense solutions for base model service providers and application-layer customers.

The giants in the AI large model space have also taken notice of the security concerns surrounding jailbreaks and have issued warnings.

OpenAI has invited experts from various fields to help improve model security and reliability through adversarial methods, essentially recruiting "red team members."

Microsoft has detailed a jailbreak technique called "Skeleton Key," which tricks large models into bypassing their security mechanisms by convincing them that their outputs are within "legal bounds."

Anthropic has observed that as the context window length increases, jailbreaks of large models begin to resurface. "It's time to work hard to mitigate the potential risks of large model jailbreaks, or they could cause serious harm," Anthropic stated in an announcement.

In today's rapidly evolving AI large model landscape, security remains a crucial topic.

Currently, there is a divide between AI radicals and conservatives, each predicting the risk level of AI large model security based on their own criteria. Recently, Elon Musk's AI large model Grok 2.0 has been generating AI content without restrictions, challenging users' sensibilities. AI radicals argue that a complete ban on AI jailbreaks may hinder the flexibility and responsiveness of AI large models.

By automating various types of attacks to test the security vulnerabilities of AI large models, Haize aims to establish more robust defenses. However, it is also evident that major AI large model vendors are already strengthening their defenses against jailbreaks. If Haize's growth remains limited to AI jailbreaks, its business direction may appear narrow and challenging to sustain future development.

End-of-article interaction:

Do you have confidence in Haize Labs?

Share your thoughts in the comments below!