AI Daily: Ilya, the Soul of OpenAI, Establishes SSI; Google's Search Share Drops to 86.99%, the Lowest in 15 Years

06/21 2024

Former Chief Scientist of OpenAI, Ilya Sutskever, announced the establishment of Safe Superintelligence (SSI), a company dedicated to developing safe superintelligence technology. Sutskever stated that SSI's first product will be safe superintelligence.

According to StatCounter Global Stats data, Google's search engine market share dropped to 86.99% in April this year, the lowest point since 2009, apparently under pressure from general-purpose AI assistants and new AI search players.

What other AI industry news from China and abroad over the past day is worth your attention? Let Raven take you through it.

/ 01 / Large Models

1) Peking University Launches a New Multimodal Large Model for Robots, with Efficient Reasoning and Manipulation in General and Robotic Scenarios

Building on platforms such as the National Engineering Research Center for Video and Visual Technology at Peking University, HMI Lab has introduced RoboMamba, an end-to-end robot MLLM that uses the Mamba model to provide robot reasoning and action capabilities while keeping fine-tuning and inference efficient. The researchers integrated a visual encoder with Mamba and aligned visual data with language embeddings through joint training, giving the model visual common sense and robot-related reasoning capabilities.

Paper: RoboMamba: Multimodal State Space Model for Efficient Robot Reasoning and Manipulation

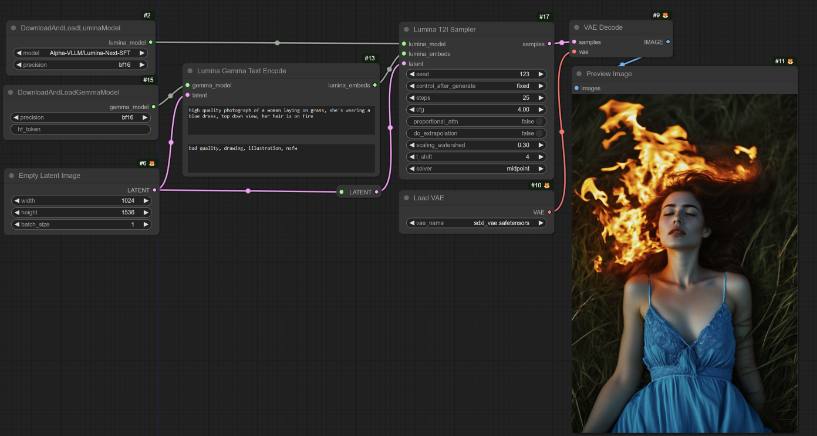

2) NVIDIA's Lumina-T2X Image Generation Applied in ComfyUI

ComfyUI, which is built on Stable Diffusion, has begun using NVIDIA's Lumina-T2X image generation technology. In trials, the open-source Lumina-T2X model is comparable to the industry-leading Midjourney V6 in aesthetic expression and image quality.

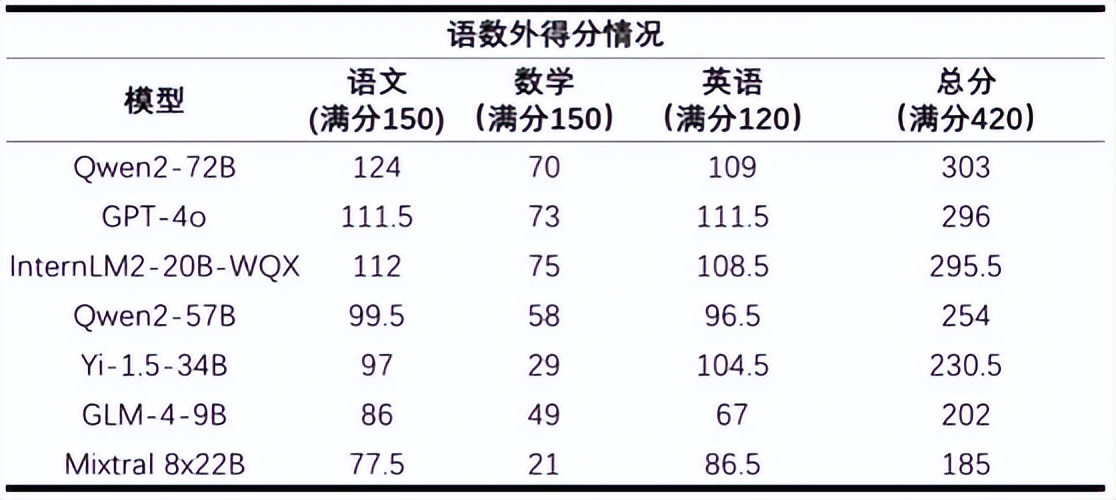

3) Results of the First AI College Entrance Examination Evaluation: GPT-4o Takes Second Place

The Shanghai AI Lab and the OpenCompass (Sinan) evaluation system released the results of a test in which AI models sat the full college entrance examination papers in Chinese, mathematics, and a foreign language. The test covered six open-source models and GPT-4o, using the national New Curriculum Standard Paper I. Qwen2-72B, GPT-4o, and Shusheng Puyu 2.0 Wenquxing (InternLM2-20B-WQX) took the top three places, each with a score rate above 70%.

However, every model failed the mathematics paper. InternLM2-20B-WQX scored highest with 75 points (out of 150), ahead of GPT-4o's 73 points.

4) Kimi Will Launch a Beta Test for Context Caching

Kimi announced that its Context Caching feature will soon enter beta testing. The feature is aimed at long-context large models and is intended to improve the user experience through an efficient context caching mechanism. By caching repeated token content, Context Caching can significantly reduce the cost users pay when they send multiple requests containing the same content.
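The general idea behind context caching can be illustrated with a small sketch. This is not Kimi's API or implementation: the `ContextCache` class, its `request` method, and the whitespace-based token count are all hypothetical, chosen only to show why a repeated shared context costs less on later requests.

```python
import hashlib


class ContextCache:
    """Toy model of context caching (hypothetical, not Kimi's actual API)."""

    def __init__(self):
        # Maps a hash of the shared context to its (assumed) token count.
        self._seen = {}

    def request(self, shared_context: str, question: str) -> dict:
        # Identify the shared context by a hash of its text.
        key = hashlib.sha256(shared_context.encode("utf-8")).hexdigest()
        # Assumption: one whitespace-separated word counts as one token.
        context_tokens = len(shared_context.split())
        question_tokens = len(question.split())

        hit = key in self._seen
        if not hit:
            self._seen[key] = context_tokens

        # On a cache hit, only the new question has to be processed.
        processed = question_tokens + (0 if hit else context_tokens)
        return {"cache_hit": hit, "tokens_processed": processed}


cache = ContextCache()
document = "a long shared document that many requests will reuse"
first = cache.request(document, "summarize it")
second = cache.request(document, "list the key points")
```

In this sketch the first request processes the whole document plus the question, while the second processes only the new question. A real service would price cached tokens according to its own billing rules.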

5) China Telecom Launches Tele-FLM-1T, a Single Dense Trillion-Parameter Semantic Model

TeleAI, the Artificial Intelligence Research Institute of China Telecom, together with the Beijing Academy of Artificial Intelligence (BAAI), released Tele-FLM-1T, described as the world's first single dense trillion-parameter semantic model. The release makes TeleAI one of the first institutions in China to publish a dense trillion-parameter large model. By combining techniques such as model growth and loss prediction, this series of models uses only 9% of the computing resources of the industry's average training approach.

/ 02 / AI Applications