How Do Vehicle-Mounted Binocular Cameras 'Perceive' the World?

11/17 2025

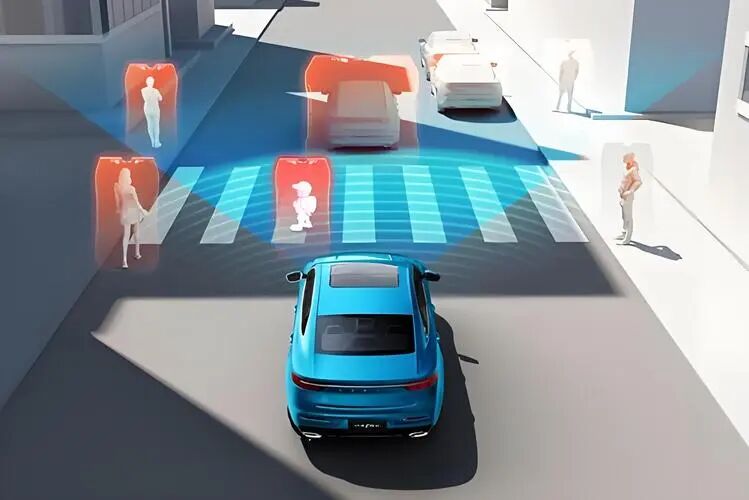

To enable a vehicle to discern what lies ahead, gauge the distance, and determine whether it can approach safely, the first crucial step is to let it 'perceive' the environment clearly. Among the many kinds of vehicle-mounted perception hardware, the binocular camera most closely mimics how humans perceive the world.


Vehicle-mounted binocular cameras (also known as stereo cameras) replicate the visual mechanism of the human eye. They simultaneously capture the same scene with two cameras placed slightly apart and then compare the differences between the two images to calculate depth information.

Unlike monocular cameras, which can only recognize shapes and colors or rely on learned patterns to estimate distance, binocular systems can compute an object's distance from the vehicle geometrically, by triangulation. This capability is vital for tasks such as collision risk assessment, precise parking, and obstacle avoidance.

Basic Principle: Reconstructing 3D Information from Two Images

In simple terms, a vehicle-mounted binocular camera consists of two forward-facing cameras mounted side by side, separated by a fixed lateral distance called the 'baseline.' The same object usually appears at different positions in the left and right images, and this horizontal difference in position is known as 'disparity.' After the two images are geometrically corrected (rectified), the search for a matching point can be restricted to the same horizontal row, so disparity is simply the horizontal offset in pixels.
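To make the idea of disparity concrete, the following minimal Python sketch projects a single 3D point into an idealized rectified pair. It assumes pinhole cameras with identical intrinsics, no lens distortion, and the right camera offset from the left one by exactly the baseline along the x axis; the class name and the numeric values (f = 1000 px, B = 0.12 m) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RectifiedStereo:
    """Idealized rectified stereo pair: pinhole cameras with identical
    intrinsics, no distortion, right camera offset by the baseline along +x.
    All parameter values used below are hypothetical."""
    f_px: float        # focal length in pixels
    cx: float          # principal point, x (pixels)
    cy: float          # principal point, y (pixels)
    baseline_m: float  # distance between the optical centers (meters)

    def project(self, X: float, Y: float, Z: float):
        """Project a point given in left-camera coordinates (meters) into
        both images; returns ((u_left, v_left), (u_right, v_right))."""
        if Z <= 0:
            raise ValueError("point must be in front of the cameras")
        u_left = self.f_px * X / Z + self.cx
        # Seen from the right camera, the point sits B meters further left.
        u_right = self.f_px * (X - self.baseline_m) / Z + self.cx
        # Rectification makes the row coordinate identical in both images.
        v = self.f_px * Y / Z + self.cy
        return (u_left, v), (u_right, v)


rig = RectifiedStereo(f_px=1000.0, cx=640.0, cy=360.0, baseline_m=0.12)
(ul, vl), (ur, vr) = rig.project(X=1.0, Y=0.5, Z=10.0)
print("same row:", vl == vr, " disparity (px):", ul - ur)  # about f * B / Z = 12
```

Both projections land on the same row, and the horizontal offset works out to f × B / Z, which is exactly the relationship used to recover depth from disparity.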


For a rectified camera pair, the pinhole imaging model relates an object's true distance Z to the focal length f, the baseline B, and the disparity d through a simple equation: Z = f × B / d, where f and d are measured in pixels and Z comes out in the same unit as B (for example, meters). In other words, the greater the disparity, the closer the object; the smaller the disparity, the farther the object. For a real camera to obey this formula, its intrinsic parameters (such as focal length and distortion coefficients) and extrinsic parameters (the relative position and orientation of the two cameras) must be precisely calibrated. Calibration accuracy therefore directly determines the accuracy of depth estimation.
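The formula translates directly into code. This is a minimal sketch, assuming a rectified pair with f and d in pixels and B in meters; treating zero or negative disparity as "no valid depth" (returned as infinity) is an illustrative convention, not a fixed standard, and the rig values are hypothetical.

```python
import math


def depth_from_disparity(d_px: float, f_px: float, baseline_m: float) -> float:
    """Z = f * B / d for a rectified stereo pair.

    d_px: disparity in pixels, f_px: focal length in pixels,
    baseline_m: baseline in meters. Returns depth in meters, or math.inf
    when the disparity is zero or negative (no usable match)."""
    if d_px <= 0:
        return math.inf
    return f_px * baseline_m / d_px


def disparity_map_to_depth(disp, f_px, baseline_m):
    """Convert a 2D disparity map (list of rows) into a depth map."""
    return [[depth_from_disparity(d, f_px, baseline_m) for d in row] for row in disp]


# Hypothetical rig: f = 1000 px, B = 0.12 m.
f_px, B = 1000.0, 0.12
for d in (60.0, 12.0, 2.4):
    print(f"d = {d:5.1f} px  ->  Z = {depth_from_disparity(d, f_px, B):6.2f} m")
```

With these assumed values the loop prints depths of 2, 10 and 50 m: a 25-fold drop in disparity corresponds to a 25-fold increase in distance.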

A vehicle-mounted binocular camera captures only two images, and neither image explicitly contains depth information. Recovering depth from them takes a series of steps.

First, the cameras are calibrated to obtain their intrinsic and extrinsic parameters. The images are then undistorted and epipolar-rectified so that corresponding points in the left and right images lie on the same horizontal row, which reduces matching to a one-dimensional search.

Next comes stereo matching: for each pixel in the left image, find the most likely corresponding pixel in the right image. This is the most critical step in the entire process. Traditional methods include block matching and semi-global matching based on cost aggregation. In recent years, deep learning methods have grown popular; by constructing, aggregating, and regressing the matching cost end to end, they can produce more accurate and robust disparity estimates.

Finally, disparity is converted into true depth, and confidence estimation and post-processing (such as hole filling and smoothing filters) are applied to produce a depth map that downstream tasks can rely on.
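The stereo matching step can be illustrated with its simplest local form: block matching with a SAD cost and winner-takes-all selection. This is a plain-Python sketch for readability, assuming already-rectified grayscale images stored as lists of rows; the function names, the 3×3 window, and the synthetic image pair are hypothetical, and real systems use heavily optimized implementations.

```python
import random


def sad_cost(left, right, y, x, d, half):
    """Sum of absolute differences between the (2*half+1)^2 window around
    (y, x) in the left image and the window around (y, x - d) in the right."""
    total = 0
    for dy in range(-half, half + 1):
        for dx in range(-half, half + 1):
            total += abs(left[y + dy][x + dx] - right[y + dy][x + dx - d])
    return total


def block_match(left, right, max_disp, half=1):
    """Winner-takes-all block matching on a rectified grayscale pair.

    Because the pair is rectified, candidates are searched only along the
    same row, shifted left by 0..max_disp pixels. Pixels without a full
    window are left at -1 (invalid)."""
    h, w = len(left), len(left[0])
    disp = [[-1] * w for _ in range(h)]
    for y in range(half, h - half):
        for x in range(half, w - half):
            best_d, best_cost = -1, float("inf")
            for d in range(max_disp + 1):
                if x - d - half < 0:
                    break  # the window would leave the right image
                c = sad_cost(left, right, y, x, d, half)
                if c < best_cost:
                    best_d, best_cost = d, c
            disp[y][x] = best_d
    return disp


# Synthetic rectified pair: random texture seen at a constant disparity of 3 px.
random.seed(0)
H, W, TRUE_D = 8, 24, 3
left = [[random.randint(0, 255) for _ in range(W)] for _ in range(H)]
right = [[left[y][min(x + TRUE_D, W - 1)] for x in range(W)] for y in range(H)]
disp = block_match(left, right, max_disp=6)
print(disp[4][4:12])
```

Because the synthetic right image is the left image shifted by three pixels, every interior pixel with room to search recovers a disparity of 3. Real scenes add noise, occlusion, and ambiguous texture, which is why confidence estimation and post-processing follow.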

Algorithms and Implementation: From Traditional Methods to Deep Learning

Early stereo vision algorithms focused on accurately defining the matching cost between pixels and optimizing local matching results into a globally consistent disparity map. Common cost functions for pixel-level matching include color differences, SAD (Sum of Absolute Differences), SSD (Sum of Squared Differences), and Census transform, which is more robust to illumination changes. After obtaining preliminary matching costs, cost aggregation and optimization steps are required to suppress noise and incorrect matches. Among these, semi-global matching (SGM) achieves an excellent balance between efficiency and accuracy by aggregating costs along multiple paths, making it a classic algorithm widely adopted in vehicle-mounted systems.
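Two of the building blocks mentioned above fit in a few lines: the Census transform with its Hamming-distance cost, and the recurrence SGM applies along one aggregation path. This is a simplified sketch: full SGM runs the same recurrence along several directions (commonly 8), sums the results, and then picks the lowest-cost disparity per pixel. The penalty values P1 and P2 and the tiny cost array are illustrative assumptions.

```python
def census(img, y, x, half=1):
    """Census transform of the (2*half+1)^2 window centered at (y, x): one
    bit per neighbor, set when the neighbor is darker than the center.
    Only the ordering of intensities matters, so a uniform brightness
    offset or a positive gain change leaves the descriptor unchanged."""
    center = img[y][x]
    bits = 0
    for dy in range(-half, half + 1):
        for dx in range(-half, half + 1):
            if dy == 0 and dx == 0:
                continue
            bits = (bits << 1) | int(img[y + dy][x + dx] < center)
    return bits


def hamming(a, b):
    """Census matching cost: the number of differing bits."""
    return bin(a ^ b).count("1")


def aggregate_path(costs, p1, p2):
    """SGM cost aggregation along a single left-to-right path.

    costs[x][d] is the raw matching cost of pixel x on one scanline at
    disparity d. Each step adds the cheapest way to arrive from the
    previous pixel: same disparity (free), a +/-1 change (penalty p1),
    or any larger jump (penalty p2). Subtracting the previous minimum
    keeps the values bounded."""
    inf = float("inf")
    n_d = len(costs[0])
    agg = [list(costs[0])]
    for x in range(1, len(costs)):
        prev = agg[-1]
        prev_min = min(prev)
        row = []
        for d in range(n_d):
            best = min(
                prev[d],
                prev[d - 1] + p1 if d > 0 else inf,
                prev[d + 1] + p1 if d + 1 < n_d else inf,
                prev_min + p2,
            )
            row.append(costs[x][d] + best - prev_min)
        agg.append(row)
    return agg


# Scanline where the middle pixel's raw cost wrongly prefers disparity 2.
raw = [[0, 5, 5], [0, 5, 5], [5, 5, 4], [0, 5, 5], [0, 5, 5]]
smoothed = aggregate_path(raw, p1=1, p2=8)
print(min(range(3), key=raw[2].__getitem__),       # raw winner: 2
      min(range(3), key=smoothed[2].__getitem__))  # aggregated winner: 0
```

The smoothness penalties let neighboring pixels outvote the middle pixel's noisy raw cost, which is the effect that makes SGM robust in practice.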

In recent years, with the significant improvement in computing power, stereo matching methods based on convolutional neural networks (CNNs) have rapidly developed. These methods leverage neural networks to learn more robust and higher-level matching features from data, perform fine matching comparisons by constructing a three-dimensional cost volume, and ultimately output a disparity map directly through regression or soft classification methods. Deep learning methods demonstrate stronger robustness in scenarios where traditional algorithms struggle, such as weak textures, repetitive textures, and severe illumination changes. However, their performance is highly dependent on the diversity and completeness of the training data, as well as their generalization ability to edge cases.
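Many end-to-end networks turn the aggregated cost volume into a disparity with a differentiable "soft argmin" (a softmax-weighted average over candidate disparities), one form of the soft classification mentioned above. The sketch below shows only that final regression step in plain Python; the network that produces the costs is out of scope, and the cost values are made up for illustration.

```python
import math


def soft_argmin(costs):
    """Differentiable disparity regression over one pixel's cost vector.

    costs[d] is the (learned) matching cost at disparity d; lower is
    better. Costs are turned into probabilities with a softmax over their
    negatives, and the expected disparity is returned, which can fall
    between integer disparities (a sub-pixel estimate)."""
    neg = [-c for c in costs]
    m = max(neg)  # subtract the maximum for numerical stability
    weights = [math.exp(v - m) for v in neg]
    total = sum(weights)
    return sum(d * w for d, w in enumerate(weights)) / total


def regress_disparity(cost_volume):
    """Apply soft_argmin to every pixel of an H x W x D cost volume."""
    return [[soft_argmin(c) for c in row] for row in cost_volume]


print(soft_argmin([9.0, 1.0, 1.0, 9.0]))  # two equally good candidates: about 1.5
```

Because the output is an expectation rather than an index, it yields sub-pixel disparities and can be trained with ordinary gradient descent.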

In practical projects, stereo vision is integrated into a larger perception pipeline. Single-frame disparity maps are noisy, so temporal filtering or multi-view fusion is used: pose information from an IMU or odometry aligns the depth measured in adjacent frames so it can be fused, improving depth reliability for distant targets and weakly textured areas. Additionally, the binocular system can be linked with semantic segmentation or object detection modules. Once pedestrians or vehicles are detected, finer matching and confidence assessment can be performed in those regions, so that semantic and geometric information verify each other and both false positives and false negatives are reduced.
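Temporal fusion can be as simple as inverse-variance weighting of repeated depth measurements of the same point. This minimal sketch assumes the measurements have already been aligned to a common frame using IMU or odometry poses (that alignment is not shown), that each comes with a variance estimate, and that the point is static; it is a 1D Kalman-style update without a motion model, not a complete tracking filter. Names and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DepthEstimate:
    depth_m: float  # depth of one point, already aligned to the current frame
    var: float      # variance in m^2 (smaller = more trustworthy)


def fuse(prior: DepthEstimate, meas: DepthEstimate) -> DepthEstimate:
    """Inverse-variance (1D Kalman-style) update for a static point."""
    gain = prior.var / (prior.var + meas.var)
    depth = prior.depth_m + gain * (meas.depth_m - prior.depth_m)
    return DepthEstimate(depth, (1.0 - gain) * prior.var)


def fuse_sequence(measurements):
    """Fold a list of pose-aligned measurements into one estimate."""
    estimate = measurements[0]
    for m in measurements[1:]:
        estimate = fuse(estimate, m)
    return estimate


frames = [DepthEstimate(41.0, 4.0), DepthEstimate(39.0, 4.0), DepthEstimate(40.5, 4.0)]
result = fuse_sequence(frames)
print(round(result.depth_m, 2), round(result.var, 2))
```

Three equally trusted measurements of 41.0, 39.0 and 40.5 m fuse to their mean (about 40.17 m) with one third of the single-frame variance, which is the noise reduction the paragraph describes.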

Why Automakers and Tier-1 Suppliers Favor Binocular Cameras

Binocular cameras offer several distinct advantages over other sensors, which keeps them relevant in autonomous driving systems. They image passively: unlike LiDAR or millimeter-wave radar, they do not emit energy, which avoids emission-related regulatory concerns and keeps energy consumption and cost low.

They also provide richer semantic information, as cameras directly output high-resolution color images, which are naturally advantageous for recognizing lane markings, traffic signs, signals, road textures, and pedestrian appearances. This information is crucial for semantic understanding and behavior prediction.


The binocular system also offers high lateral resolution, capturing fine structures such as lane markings, curbs, and sidewalk boundaries in detail that many ranging sensors struggle to match.

Binocular cameras are significantly cheaper than high-precision solid-state or mechanical LiDARs, making them better suited to the sensor configurations of mass-produced vehicle models. Their compact size and low power consumption also make them well suited to short-range perception, parking assistance, and low-speed urban driving, or as a supplement to more expensive sensors.

The depth maps generated by binocular systems are dense and pixel-aligned with the camera image (as opposed to sparse point clouds), which suits algorithms that need a depth value for every pixel. As part of a visual subsystem, binocular systems can complement monocular vision, radars, and LiDARs to enhance overall perception robustness.

Challenges of Vehicle-Mounted Binocular Cameras

Despite their clear advantages, vehicle-mounted binocular cameras share the inherent weaknesses of all cameras. They are highly sensitive to environmental conditions: low light, nighttime, backlighting, rain, snow, and fog can all significantly degrade matching quality, leading to more noise or large low-confidence regions in the disparity map. Unlike millimeter-wave radar, cameras cannot see through heavy rain or fog, so a binocular system alone can hardly guarantee safety in extreme weather.

In areas lacking texture or with repetitive texture (such as white walls or single-color vehicle bodies), stereo matching is prone to errors or may fail to find corresponding points at all. Reflective or transparent surfaces (such as standing water or glass windows) are also problematic: the matcher may lock onto a reflection or onto what lies behind the glass, producing incorrect depth estimates.
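A common way to flag such ambiguous matches is a uniqueness test on each pixel's cost curve, similar in spirit to the uniquenessRatio setting of OpenCV's stereo matchers (though not its exact formula). The sketch below assumes per-pixel cost arrays are available; the ratio and exclusion width are illustrative defaults.

```python
def is_unique_match(costs, ratio=0.9, exclude_px=1):
    """Return (best_disparity, is_reliable) for one pixel.

    costs[d] is the matching cost at disparity d (lower is better). The
    match counts as reliable only if its cost is clearly below the best
    competing cost, where competitors are disparities more than exclude_px
    away from the winner (its immediate neighbors are naturally similar).
    Flat cost curves (weak texture) and several deep minima (repetitive
    texture) both fail this test."""
    best_d = min(range(len(costs)), key=costs.__getitem__)
    rivals = [c for d, c in enumerate(costs) if abs(d - best_d) > exclude_px]
    if not rivals:
        return best_d, True
    return best_d, costs[best_d] < ratio * min(rivals)


print(is_unique_match([10, 2, 10, 10, 2.1, 10]))  # repetitive pattern: (1, False)
print(is_unique_match([10, 2, 10, 10, 9, 10]))    # distinctive texture: (1, True)
```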

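The effective range of a binocular system is also limited by camera resolution and baseline design. Because Z = f × B / d, depth error grows roughly with the square of distance: for small, distant targets (such as small obstacles tens of meters away on a highway), the disparity may shrink to a few pixels or even less than one, so a matching error of a fraction of a pixel translates into a large depth error.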
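The range limit follows from differentiating Z = f × B / d: a disparity error Δd produces a depth error of roughly Z² / (f × B) × Δd. The sketch below evaluates that first-order approximation for a hypothetical rig (f = 1000 px, B = 0.12 m) and an assumed quarter-pixel matching error.

```python
def disparity_at(z_m, f_px, baseline_m):
    """Disparity (pixels) of a point at depth z_m: d = f * B / Z."""
    return f_px * baseline_m / z_m


def depth_error(z_m, f_px, baseline_m, disp_err_px):
    """First-order depth error: |dZ| ~= Z**2 / (f * B) * |dd|."""
    return z_m ** 2 / (f_px * baseline_m) * disp_err_px


F_PX, BASELINE_M, DISP_ERR_PX = 1000.0, 0.12, 0.25  # hypothetical values
for z in (10.0, 40.0, 80.0):
    print(f"Z = {z:4.0f} m   d = {disparity_at(z, F_PX, BASELINE_M):5.2f} px   "
          f"depth error ~ {depth_error(z, F_PX, BASELINE_M, DISP_ERR_PX):5.2f} m")
```

With these assumed numbers, the disparity at 80 m is only 1.5 px and the depth uncertainty is about 13 m, versus about 0.2 m at 10 m.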

Because the left and right cameras view the scene from different positions, some areas are visible to one camera but not the other (occlusion), leading to missing disparity or matching errors. Binocular systems also depend heavily on calibration accuracy: even a slight geometric offset causes systematic errors, which makes long-term stability a challenge.
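A standard remedy for occlusion-induced errors is the left-right consistency check: compute a disparity map with each image as the reference and keep only pixels whose two estimates agree. This is a minimal sketch, assuming both disparity maps are already available and that -1 marks invalid pixels (an illustrative convention); the 1 px tolerance is also an assumption.

```python
INVALID = -1  # marker for pixels without a trustworthy disparity


def left_right_check(disp_left, disp_right, max_diff=1):
    """Invalidate pixels whose left- and right-referenced disparities disagree.

    A left pixel at column x with disparity d corresponds to right pixel
    x - d. In a visible, correctly matched region that right pixel reports
    about the same disparity; occluded pixels (seen by one camera only)
    and mismatches usually fail this test."""
    out = [row[:] for row in disp_left]
    for y, row in enumerate(disp_left):
        for x, d in enumerate(row):
            if d == INVALID:
                continue
            xr = x - int(round(d))  # where this pixel should land in the right image
            if (xr < 0 or disp_right[y][xr] == INVALID
                    or abs(disp_right[y][xr] - d) > max_diff):
                out[y][x] = INVALID
    return out


left_disp = [[2, 2, 2, 2, 0, 2]]   # pixel 4 carries a spurious disparity of 0
right_disp = [[2, 2, 2, 2, 2, 2]]
print(left_right_check(left_disp, right_disp))  # [[-1, -1, 2, 2, -1, 2]]
```

The first two pixels have no counterpart inside the right image and the spurious match fails the comparison; such invalidated pixels are the "holes" that later post-processing may fill.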

Key Considerations for Installing Vehicle-Mounted Binocular Cameras

Deploying binocular cameras on vehicles involves more than simply fixing two cameras to the front of the car. The baseline length, camera resolution, lens field of view, exposure strategy, and synchronization mechanism must all be chosen carefully. A longer baseline improves depth resolution for distant objects, but it also makes the module larger, and at close range it produces disparities that can exceed the matcher's search range while shrinking the region both cameras can see. For low-speed urban scenarios, a short baseline is sufficient and easier to install; for highway scenarios requiring detection of distant targets, a longer baseline, higher-resolution sensors, or longer-focal-length lenses are needed.
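The baseline trade-off can be put into numbers. This sketch assumes a hypothetical rig with f = 1000 px, a nearest object of interest at 1 m, a farthest one at 80 m, and a quarter-pixel matching error, then compares two made-up baselines.

```python
import math


def baseline_tradeoff(f_px, baseline_m, z_near_m, z_far_m, disp_err_px=0.25):
    """Return (disparity search range needed for the nearest object,
    first-order depth error in meters at the farthest range of interest)."""
    max_disp = math.ceil(f_px * baseline_m / z_near_m)
    far_error_m = z_far_m ** 2 / (f_px * baseline_m) * disp_err_px
    return max_disp, far_error_m


for b in (0.12, 0.30):  # two hypothetical baselines, in meters
    d_max, err = baseline_tradeoff(f_px=1000.0, baseline_m=b, z_near_m=1.0, z_far_m=80.0)
    print(f"B = {b:.2f} m: search {d_max} disparities, error at 80 m ~ {err:.1f} m")
```

Under these assumptions, moving from 0.12 m to 0.30 m cuts the 80 m depth error from about 13 m to about 5 m, but the matcher must then search about 300 disparities instead of about 120 to handle an object 1 m away.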


Temporal synchronization of the cameras is also crucial, as left and right images must be captured within a very short time frame; otherwise, vehicle or surrounding object motion can lead to incorrect disparity calculations. Automotive-grade binocular modules typically achieve trigger synchronization at the hardware level and perform preliminary calibration and correction internally to reduce system integration complexity.
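The cost of poor synchronization can be estimated with the same geometry. If the two exposures are Δt apart and a point moves sideways at speed v relative to the rig, it shifts by about f × v × Δt / Z pixels between the images, and that shift is misread as disparity. The sketch below assumes pure lateral motion in the direction that increases disparity; the speed, depth, and time offsets are hypothetical.

```python
def sync_disparity_error(f_px, lateral_speed_mps, dt_s, z_m):
    """Apparent horizontal shift (pixels) of a point moving sideways at
    lateral_speed_mps when the two exposures are dt_s seconds apart."""
    return f_px * lateral_speed_mps * dt_s / z_m


def depth_with_sync_error(f_px, baseline_m, z_m, lateral_speed_mps, dt_s):
    """Depth that Z = f * B / d would report if the motion-induced shift
    adds to the true disparity (motion the other way subtracts it)."""
    true_d = f_px * baseline_m / z_m
    shift = sync_disparity_error(f_px, lateral_speed_mps, dt_s, z_m)
    return f_px * baseline_m / (true_d + shift)


for dt in (0.005, 0.0001):  # hypothetical offsets: 5 ms and 0.1 ms
    z = depth_with_sync_error(f_px=1000.0, baseline_m=0.12, z_m=20.0,
                              lateral_speed_mps=1.5, dt_s=dt)
    print(f"offset {dt * 1000:.1f} ms -> measured depth {z:.2f} m (true 20.00 m)")
```

For a pedestrian crossing at 1.5 m/s, 20 m ahead, a 5 ms offset turns 20 m into roughly 18.8 m with these assumed parameters, while a 0.1 ms offset changes the estimate by only a few centimeters.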

Calibration, and keeping it accurate over time, is often underestimated. Camera calibration requires precise estimation of intrinsic parameters (focal length, principal point, distortion) and extrinsic parameters (rotation and translation between the two cameras). After the vehicle experiences bumps or minor collisions, the camera bracket may shift slightly, causing deviations in depth estimation. Regular self-calibration or online calibration strategies are therefore needed, using lane markings, road planes, or other structured features to correct the extrinsic parameters automatically.
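Full online calibration with lane markings or road planes is beyond a short example, but a simpler health check conveys the idea: in a correctly rectified pair, matched features share the same row, so a persistent vertical offset suggests the extrinsics have drifted. This sketch is a swapped-in monitoring heuristic, not the correction method described above; the thresholds and function names are hypothetical.

```python
import statistics


def vertical_misalignment(matches):
    """Median of y_left - y_right over matched feature pairs (pixels)."""
    return statistics.median([yl - yr for (_, yl), (_, yr) in matches])


def needs_recalibration(matches, threshold_px=0.5, min_matches=50):
    """Flag likely extrinsic drift when matched rows disagree persistently."""
    if len(matches) < min_matches:
        return False  # too little evidence in this frame to decide
    return abs(vertical_misalignment(matches)) > threshold_px


# Hypothetical matches: (x, y) in the left image, (x, y) in the right image.
healthy = [((float(i), 100.0), (float(i) - 5.0, 100.1)) for i in range(60)]
drifted = [((float(i), 100.0), (float(i) - 5.0, 101.2)) for i in range(60)]
print(needs_recalibration(healthy), needs_recalibration(drifted))  # False True
```

Using the median keeps a few bad matches from triggering a false alarm; in practice the evidence would also be accumulated over many frames before acting on it.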

Additionally, exposure and HDR strategies cannot be overlooked, as cameras must ensure image usability under complex lighting conditions such as backlighting and high contrast. Many systems adopt multi-exposure or automatic gain control to expand the dynamic range and perform illumination normalization during image processing to enhance matching robustness.
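At the matching-cost level, one common way to tolerate exposure and gain differences between the two cameras is zero-mean normalized cross-correlation (ZNCC). This is an illustration of illumination normalization in general, not of any particular HDR pipeline; the patch values are made up.

```python
import math


def zncc(patch_a, patch_b):
    """Zero-mean normalized cross-correlation of two equal-length patches.

    Subtracting each patch's mean removes brightness offsets and dividing
    by the norms removes gain differences, so the score is unchanged when
    one camera sees the scene as a * I + b with a > 0. Returns a value in
    [-1, 1], where 1 is a perfect match. A flat patch has no defined
    correlation and returns 0.0 (treat it as unreliable)."""
    if len(patch_a) != len(patch_b) or not patch_a:
        raise ValueError("patches must be non-empty and the same size")
    mean_a = sum(patch_a) / len(patch_a)
    mean_b = sum(patch_b) / len(patch_b)
    da = [v - mean_a for v in patch_a]
    db = [v - mean_b for v in patch_b]
    norm = math.sqrt(sum(v * v for v in da) * sum(v * v for v in db))
    if norm == 0.0:
        return 0.0
    return sum(x * y for x, y in zip(da, db)) / norm


left_patch = [12, 40, 33, 90, 71, 15, 60, 22, 48]
right_patch = [1.8 * v + 20 for v in left_patch]  # same texture, higher gain and offset
print(round(zncc(left_patch, right_patch), 6))
```

The two patches score a perfect match (1.0 up to rounding) even though their raw intensities differ substantially, which a plain SAD cost would penalize heavily.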

High-precision depth estimation, especially with deep learning methods, requires significant computational resources, so cost-constrained mass-produced vehicles must make trade-offs. In conventional driving scenarios, lightweight or optimized traditional algorithms can produce preliminary depth results; when the system detects complex or critical scenarios (such as areas crowded with pedestrians or narrow roads), more refined depth networks can be triggered. Computationally intensive tasks can also be assigned to onboard high-performance computing units, and sparsification or hierarchical processing techniques can be used to save resources.
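A rough way to compare compute budgets is to count cost-volume entries (width × height × disparity range). The sketch assumes 1920 × 1080 images, a 192-disparity search range, and a hypothetical 400 × 300 region of interest; it ignores per-entry cost, which differs enormously between a SAD comparison and a 3D convolution.

```python
def cost_volume_entries(width_px, height_px, num_disparities):
    """Number of matching costs in a full width x height x disparity volume."""
    return width_px * height_px * num_disparities


full = cost_volume_entries(1920, 1080, 192)
# Hierarchical alternative: the whole frame at half resolution (the disparity
# range halves too), plus full-resolution matching only inside a
# hypothetical 400 x 300 region of interest around detected pedestrians.
coarse = cost_volume_entries(960, 540, 96)
roi = cost_volume_entries(400, 300, 192)
print(f"full: {full:,}  hierarchical: {coarse + roi:,}  ratio: {(coarse + roi) / full:.1%}")
```

Under these assumptions, a half-resolution pass over the whole frame plus full-resolution refinement in the region of interest needs roughly 18% of the entries of a full-resolution cost volume.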

Final Thoughts

Binocular cameras are like a reliable tool in the autonomous driving perception toolbox. However, just as a screwdriver cannot replace a hammer, they cannot solve all perception problems. Nevertheless, in their advantageous scenarios, they can accomplish perception tasks with excellent cost-effectiveness. A truly reliable autonomous driving system is built through the seamless cooperation of a group of technological tools, each with its own strengths and weaknesses, complementing each other to achieve robustness.
