What Technologies Support Tesla's Groundbreaking First Self-Delivery?

07/01 2025

On June 28, 2025, Tesla achieved a milestone when its first fully self-driving Model Y navigated from the factory to a customer's home, completing the delivery a day ahead of schedule. The journey, which included city and highway driving, was entirely autonomous, with no human intervention or remote safety operator.

Image source: Tesla's official video channel

"No one was in the car, and there was no remote operator at any time," Tesla stated. Although its Full Self-Driving (FSD) and Robotaxi programs can perform similar feats, Tesla had not been confident enough in their reliability to let them operate unsupervised for extended periods. This time, the Model Y drove itself across the city, reached a top speed of 116 km/h, and covered approximately 24 kilometers in about 30 minutes, an average of roughly 48 km/h, which indicates that most of the trip was driven at low speed. It arrived safely at the owner's home without human intervention, a significant leap for autonomous driving technology.

HW5.0 Hardware Platform: The Foundation of Autonomous Driving Perception and Computation

The unmanned delivery relies heavily on Tesla's latest HW5.0 hardware platform, which significantly upgrades both the sensor suite and the computing power.

On the sensor side, Tesla has built a precise and comprehensive perception system. Twelve high-definition cameras provide 360-degree coverage with a detection range of up to 250 meters, capturing details such as distant traffic signs, the state of surrounding vehicles, and pedestrian movements. The cameras use Samsung's custom "weather-resistant lenses": built-in heating elements quickly melt snow and ice, and hydrophobic coatings improve visibility in rain or fog. Four 4D millimeter-wave radars and ultrasonic sensors complement the cameras, forming a robust multi-sensor fusion matrix. Millimeter-wave radars are largely unaffected by lighting and far less affected by weather than cameras, measuring the distance, speed, and angle of surrounding objects in real time, while ultrasonic sensors handle close-range perception and parking.
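
To make "multi-sensor fusion" concrete, here is a minimal sketch of the textbook approach of weighting independent distance estimates by the inverse of their variance, so the more precise sensor dominates. It is not Tesla's fusion pipeline; the `RangeEstimate` type and the noise values are hypothetical assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class RangeEstimate:
    """One sensor's distance estimate to an object, with its variance in m^2."""
    sensor: str
    distance_m: float
    variance_m2: float


def fuse_ranges(estimates):
    """Inverse-variance weighted fusion of independent range estimates.

    Each estimate is weighted by 1 / variance. Returns (fused distance,
    fused variance); the fused variance is never larger than the best input's.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / e.variance_m2 for e in estimates]
    total = sum(weights)
    fused = sum(w * e.distance_m for w, e in zip(weights, estimates)) / total
    return fused, 1.0 / total


# Hypothetical readings: camera depth is noisier at long range than radar.
camera = RangeEstimate("camera", 52.0, 4.0)
radar = RangeEstimate("radar", 50.0, 0.25)
distance, variance = fuse_ranges([camera, radar])
print(f"fused distance {distance:.2f} m, variance {variance:.3f} m^2")
```

The fused distance lands close to the radar reading because radar's variance is assumed to be 16 times smaller, and the fused variance is lower than either sensor's alone, which is the basic payoff of combining complementary sensors.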

On the computing side, HW5.0 is backed by Tesla's Dojo supercomputer, the off-board system that trains the neural networks the car runs. A Dojo ExaPOD cluster is rated at 1.1 EFLOPS, five times that of its predecessor. The platform can process 25 billion pixels of data per second, enough to run complex neural networks smoothly. Whether modeling the 4D environment or planning paths in real time, the system responds quickly and accurately, allowing the vehicle to make timely, sound decisions while driving. A redundant design further improves reliability: two independent computing units can switch over automatically within 0.3 milliseconds, so the system keeps running even if one unit fails.

EFLOPS (10^18 floating-point operations per second) measures floating-point throughput, the usual metric for workloads such as scientific simulation and AI training. TOPS (10^12 operations per second) usually counts low-precision integer operations, such as INT8, and is the common metric for edge devices like autonomous-driving chips and smartphone NPUs. Numerically, 1 EFLOPS = 10^6 TOPS, but in practice the two figures are not directly comparable, because they count operations performed at different precisions.
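
The conversion factor follows directly from the SI prefixes (exa = 10^18, tera = 10^12). Note that it only counts operations per second and says nothing about the precision of each operation:

```latex
1\,\text{EFLOPS} = 10^{18}\ \text{FLOP/s}, \qquad
1\,\text{TOPS} = 10^{12}\ \text{op/s}
\quad\Longrightarrow\quad
\frac{1\,\text{EFLOPS}}{1\,\text{TOPS}} = \frac{10^{18}}{10^{12}} = 10^{6}
```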

Dojo is Tesla's scalable supercomputer architecture, built around its in-house D1 chip. It plays a pivotal role in Tesla's autonomous-driving push: it is both the core of Tesla's AI strategy and a hallmark of its hardware breakthroughs. Designed specifically for large-scale machine-learning training, Dojo is engineered as a whole across compute, networking, input/output (I/O) chips, the instruction set architecture (ISA), power delivery, packaging, and cooling. Its goal is to supply the training compute behind Tesla's autonomous driving and other AI applications.

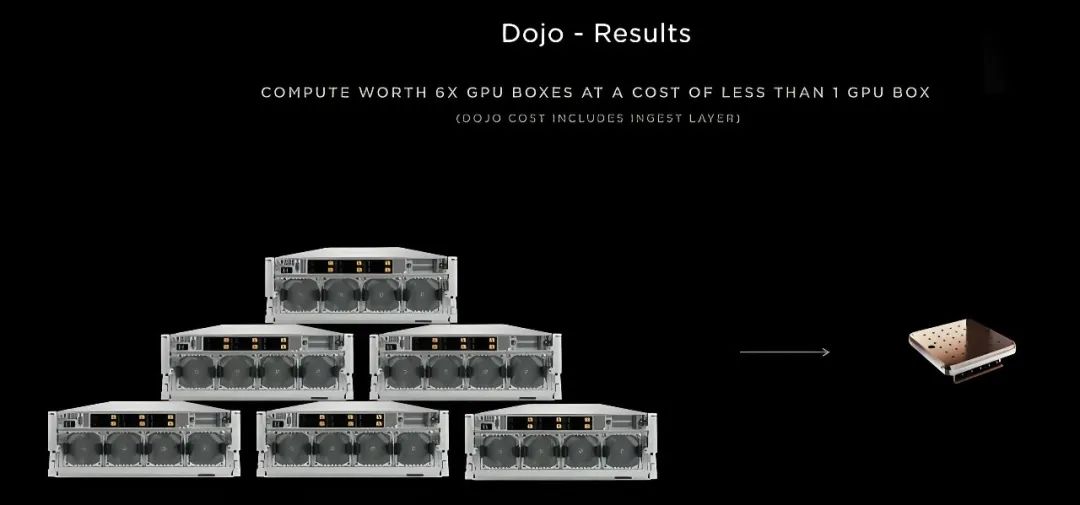

Figure: Dojo's stackable modular architecture, scalable for increased computational power

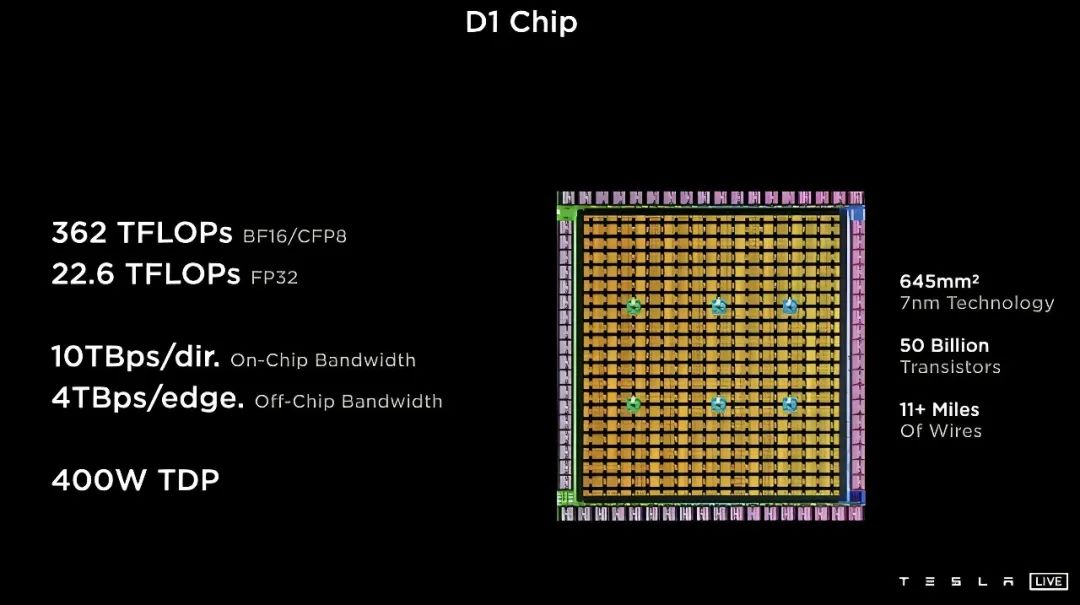

The D1 chip, the core component of the Dojo architecture, is designed in-house by Tesla and manufactured on TSMC's 7 nm process, with 50 billion transistors. Each D1 contains 354 custom 64-bit RISC-V cores, each paired with 1.25 MB of SRAM that holds data and instructions close to the core for AI workloads. With a thermal design power (TDP) of 400 W, a single D1 delivers 22.6 TFLOPS of FP32 performance.

Figure: D1 chip, the foundational building block of Dojo

To scale up, Tesla arranges 25 D1 chips in a 5×5 array and packages them with TSMC's InFO_SoW (Integrated Fan-Out System-on-Wafer) technology, which keeps inter-chip latency low and bandwidth high so the chips can work together on massive computations. The resulting training module (training tile) of 25 D1 chips, housed in a 15 kW liquid-cooled assembly, delivers 565 TFLOPS of FP32 performance (25 × 22.6 TFLOPS), carries 11 GB of SRAM, and is connected through 9 TB/s fabric links.

Multiple training modules combine into still larger clusters within the Dojo architecture. For instance, 120 training modules form an ExaPOD cluster containing 3,000 D1 chips in total. In practice, this computing power lets Dojo process large volumes of video data, such as 232,000 frames daily, helping Tesla continually refine its neural network models and improve functions like traffic light recognition and automatic parking.
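
The hierarchy from chip to module to ExaPOD is simple multiplication, and working it through shows which precision the headline numbers refer to. The sketch below uses the per-chip figures quoted in this article plus one figure it does not quote: Tesla's published BF16/CFP8 rating of 362 TFLOPS per D1 (AI Day 2021), which is an added assumption labeled in the code.

```python
# Per-chip D1 figures quoted in this article.
D1_FP32_TFLOPS = 22.6
D1_NODES = 354            # compute cores per chip
SRAM_PER_NODE_MB = 1.25
# Assumption: Tesla's published BF16/CFP8 rating per D1 (AI Day 2021), not quoted above.
D1_BF16_CFP8_TFLOPS = 362

CHIPS_PER_TILE = 25       # 5 x 5 training module
TILES_PER_EXAPOD = 120

tile_fp32_tflops = CHIPS_PER_TILE * D1_FP32_TFLOPS
tile_sram_gb = CHIPS_PER_TILE * D1_NODES * SRAM_PER_NODE_MB / 1000  # decimal GB
exapod_chips = TILES_PER_EXAPOD * CHIPS_PER_TILE
exapod_fp32_pflops = exapod_chips * D1_FP32_TFLOPS / 1000
exapod_bf16_eflops = exapod_chips * D1_BF16_CFP8_TFLOPS / 1_000_000

print(f"Training module: {tile_fp32_tflops:.0f} TFLOPS FP32, {tile_sram_gb:.2f} GB SRAM")
print(f"ExaPOD: {exapod_chips} chips, {exapod_fp32_pflops:.1f} PFLOPS FP32, "
      f"{exapod_bf16_eflops:.2f} EFLOPS BF16/CFP8")
```

Under these figures, the ExaPOD's roughly 1.1 EFLOPS corresponds to BF16/CFP8 arithmetic; at FP32 the same 3,000 chips come to about 68 PFLOPS. This is exactly the kind of precision gap the EFLOPS/TOPS note above warns about when comparing headline numbers.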

The D1 also compares favorably with mainstream autonomous-driving chips.

Figure: Performance comparison between the D1 and mainstream autonomous-driving chips; at 400 W, the D1 delivers several times the computing power of NVIDIA's Orin

Software Architecture: A Paradigm Shift from Code to Neural Networks

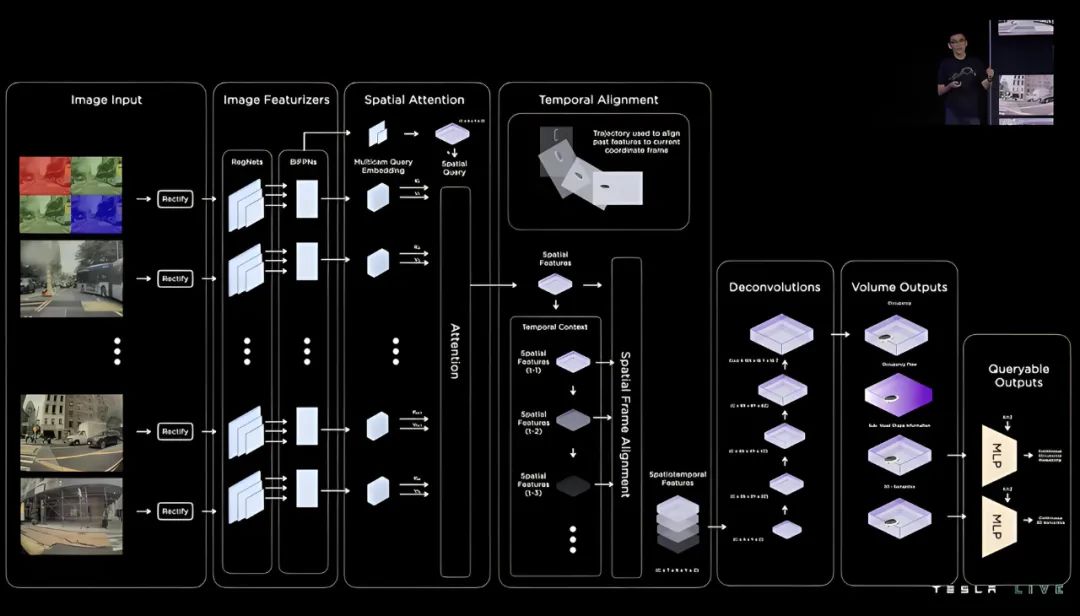

Tesla's FSD V12 software architecture marks a major shift in autonomous driving. Unlike traditional systems built on hand-written C++ code, FSD V12 uses end-to-end neural networks that map visual input directly to vehicle control outputs, mimicking how a human driver makes decisions.

Instead of relying on complex hand-coded logic, the end-to-end model lets the neural network learn for itself which information in a road scene matters and how to act on it. For instance, if a pedestrian 100 meters ahead may be about to cross, the system estimates the probability of that action from historical data and features of the current scene, and adjusts the vehicle's speed in advance. This makes the system more adaptable and better at handling complex, non-standard road scenarios.

The HydraNet + Transformer architecture is another cornerstone of FSD V12. HydraNet is a multi-task network: tasks such as object detection and lane recognition share common features instead of computing them separately, which avoids redundant calculation and improves efficiency. The Transformer's attention mechanism gives the system a strong understanding of its environment, and the system's 4D vector space (3D space plus time) handles occlusion and dynamic obstacles effectively. In congested traffic, where the vehicle ahead may block the view, the system combines historical data with the current scene to predict obstacles that may be hidden in occluded areas and plans avoidance routes in advance.
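
The occlusion example relies on attention over time: the current query can draw on past frames in which a hidden object was still visible. Below is a minimal sketch of scaled dot-product attention, the core Transformer operation, applied to a short frame history. The two-element feature vectors, the query, and the frame labels are hypothetical, and real models use learned projections and far larger dimensions; this is not Tesla's network.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for one query vector.

    Scores each key by dot(query, key) / sqrt(d), turns the scores into
    weights with softmax, and returns (weights, weighted sum of values).
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


# Hypothetical features for one patch of road over three frames:
# [objectness, occlusion]. A pedestrian is visible at t-2, then a
# vehicle ahead hides the patch at t-1 and t.
history = {
    "t-2": [1.0, 0.0],
    "t-1": [0.1, 1.0],
    "t":   [0.1, 1.0],
}
keys = list(history.values())
values = keys  # in a real model, keys and values are separate learned projections
query = [2.0, 0.0]  # hypothetical query: "is there an object here?"

weights, fused = attend(query, keys, values)
for frame, w in zip(history, weights):
    print(f"{frame}: weight {w:.2f}")
```

Most of the attention weight goes to frame t-2, so the fused feature still carries evidence of the pedestrian even though the two most recent frames are occluded.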

The Occupancy Network strengthens the system's perception of complex environments. It divides the space around the vehicle into voxels and predicts which ones are occupied, so it can identify irregular obstacles that fit no predefined category, such as overturned vehicles or fallen cargo, and predict how they are moving. This lets Tesla's autonomous driving system handle special scenarios such as unmarked roads and construction zones reliably, significantly improving driving safety.
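
The voxel representation itself is easy to illustrate. Tesla's occupancy network predicts occupancy from camera images with a neural network; the sketch below shows only the underlying grid idea, mapping known 3D points to the indices of the voxels they occupy. The 0.5 m voxel size and the sample points are hypothetical.

```python
import math


def occupied_voxels(points, voxel_size_m, origin=(0.0, 0.0, 0.0)):
    """Map 3-D points (x, y, z in meters) to the set of voxel indices they fall in.

    Voxel (i, j, k) covers [origin + i*size, origin + (i+1)*size) on each axis,
    so no object class is needed: any occupied cell counts as an obstacle.
    """
    occupied = set()
    for x, y, z in points:
        index = tuple(
            math.floor((c - o) / voxel_size_m) for c, o in zip((x, y, z), origin)
        )
        occupied.add(index)
    return occupied


# Hypothetical points on an overturned car lying across the lane.
points = [(10.1, -0.4, 0.2), (10.3, -0.2, 0.3), (11.6, 0.9, 0.6), (12.4, 1.1, 0.1)]
grid = occupied_voxels(points, voxel_size_m=0.5)
print(sorted(grid))
```

Because the grid records only "occupied or free", an obstacle of any shape is represented the same way, which is why this kind of representation suits irregular objects that a fixed list of detection classes would miss.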

Figure: Tesla FSD V12 Architecture Diagram, with "99% of functionalities powered by neural networks"

Data and Training: Intelligent Evolution Fueled by Massive Data

Data is the linchpin of autonomous driving technology development, and Tesla excels in this area. Its global fleet has amassed over 12 billion miles of real-world driving data, encompassing diverse road conditions, weather scenarios, and driving situations, providing ample material for algorithm training. Additionally, Tesla generates substantial synthetic data through simulation platforms, replicating extreme, rare scenarios like sudden natural disasters and traffic accidents, further diversifying training data and enhancing the system's ability to handle edge cases.

The Dojo supercomputer is central to data processing and model training. Its total computing power is 88.5 EFLOPS: each ExaPOD cluster of 3,000 D1 chips delivers about 1.1 EFLOPS, and the full Dojo supercomputer combines many such clusters. This capacity lets Dojo work through the fleet's video data at scale, such as the 232,000 video frames it processes daily, continuously optimizing the neural network models behind functions like traffic light recognition and automatic parking.

Shadow Mode is one of Tesla's key methods for continually refining its autonomous driving system. During real-world driving, the system's decisions are compared with those of the human driver, and the cases where they differ are collected. This data is fed back into training to improve the algorithm and reduce how often drivers need to intervene in practice. Tesla's intervention rate on urban roads has now fallen to 1.2 interventions per thousand kilometers, which underscores the effectiveness of this data-driven optimization strategy.
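
The comparison at the heart of Shadow Mode can be sketched as a filter over logged frames, plus the normalization behind a "per thousand kilometers" rate. The `Frame` schema, the 5-degree steering tolerance, and the sample numbers are all hypothetical; Tesla's actual trigger logic is not described in the source.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    """One time step of logged driving (hypothetical schema)."""
    timestamp_s: float
    human_steer_deg: float
    model_steer_deg: float
    human_brake: bool
    model_brake: bool


def disagreements(frames, steer_tol_deg=5.0):
    """Return frames where the shadow model's decision differs from the driver's.

    A frame counts as a disagreement if the steering angles differ by more
    than steer_tol_deg, or if only one of the two chose to brake.
    """
    return [
        f for f in frames
        if abs(f.human_steer_deg - f.model_steer_deg) > steer_tol_deg
        or f.human_brake != f.model_brake
    ]


def interventions_per_1000_km(interventions, distance_km):
    """Normalize an intervention count to a per-1,000-km rate."""
    return interventions / distance_km * 1000.0


log = [
    Frame(0.0, 0.0, 1.0, False, False),   # agree
    Frame(0.1, 12.0, 2.0, False, False),  # steering differs by 10 degrees
    Frame(0.2, 0.0, 0.0, True, False),    # driver braked, model did not
]
flagged = disagreements(log)
print([f.timestamp_s for f in flagged])

# Hypothetical: 6 interventions over 5,000 km.
rate = interventions_per_1000_km(6, 5000)
print(f"{rate:.1f} interventions per 1,000 km")
```

Only the flagged frames would be uploaded as training material, which keeps the volume manageable while focusing on the cases where the model and the human driver disagree.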

Extreme Environment Adaptation: Technological Confidence in Handling Complex Scenarios

Autonomous driving technology faces various complex environmental challenges in real-world applications. Tesla bolsters the system's adaptability in extreme environments through coordinated hardware and software optimization.

Handling bad weather requires both weather-resistant hardware and optimized software. Weather-resistant lenses and heating elements keep camera images from blurring in snow, while HDR imaging and defogging algorithms improve perception in low visibility. In rainstorm tests, FSD V12's obstacle-avoidance accuracy improved by 30% over previous versions, thanks to more effective filtering of rain interference and more accurate extraction of road information.

Lighting conditions also affect autonomous driving system performance. Tesla's adaptive exposure adjustment and polarization filter technology mitigate glare interference from bright light, improving traffic light recognition accuracy by 40%. Whether in bright midday sun or dim evening light, the system can clearly identify road signs and traffic signals, ensuring safe vehicle operation.
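
Adaptive exposure adjustment is typically a feedback loop: measure the image brightness, compare it with a target, and nudge the exposure time. The sketch below is a generic proportional controller working in stops (log2 units), not Tesla's implementation; the target brightness, gain, exposure limits, and toy camera model are hypothetical, and polarization filtering is an optical measure that the code does not model.

```python
import math


def next_exposure(exposure_ms, mean_luma, target_luma=0.45, gain=0.5,
                  min_ms=0.02, max_ms=30.0):
    """One step of a proportional auto-exposure loop in the log2 (stops) domain.

    mean_luma and target_luma are normalized brightness in (0, 1]. The error
    log2(target / mean) is measured in stops; applying only part of it (gain)
    avoids oscillation. The result is clamped to the sensor's exposure range.
    """
    error_stops = math.log2(target_luma / max(mean_luma, 1e-6))
    new_ms = exposure_ms * 2.0 ** (gain * error_stops)
    return min(max(new_ms, min_ms), max_ms)


def simulate(scene_brightness, exposure_ms=10.0, steps=20):
    """Toy camera: measured luma is proportional to exposure and saturates at 1."""
    for _ in range(steps):
        luma = min(scene_brightness * exposure_ms, 1.0)
        exposure_ms = next_exposure(exposure_ms, luma)
    return exposure_ms


print(f"{simulate(0.5):.2f} ms in bright light, {simulate(0.01):.2f} ms in dim light")
```

In the bright scene the loop first pulls the exposure down out of saturation and then converges on the time that yields the target brightness; in the dim scene it stops at the 30 ms cap, which is where a real system would also raise sensor gain or rely on HDR.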

Tesla has also optimized its algorithms for complex terrain. In cities with many steep slopes and sharp turns, such as San Francisco, FSD V12 received dedicated training and parameter tuning, making it adept at hill starts and sharp turns; its success rate on sharp turns is 37% higher than V11's.

Redundancy: Fundamental Technology Ensuring AI Safety

Safety remains the focal point of autonomous driving technology. Tesla ensures reliable unmanned delivery through multiple safety and redundancy designs.

At the hardware level, dual computing units, triple-redundant sensor fusion (camera + radar + inertial measurement unit), and independent power supplies together form a robust safety barrier. If one computing unit fails, the other takes over seamlessly so the system keeps running. Likewise, with triple-redundant sensor fusion, the remaining sensors can still provide accurate environmental data if one sensor malfunctions. On the software side, traditional control algorithms and neural networks cross-check each other's decisions, and the traditional algorithms step in promptly to prevent accidents if the neural network behaves abnormally. In emergency braking, the system achieves a deceleration of 0.8 g and a 95% obstacle-avoidance success rate.
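
A common way to exploit three redundant channels is mid-value selection: take the median, so a single channel that fails wildly is outvoted by the two that still agree. The sketch below is this generic triple-modular-redundancy pattern, not Tesla's design. Camera, radar, and IMU measure different things, so it assumes each has first been converted into an estimate of the same quantity (here, vehicle speed); the channel names, readings, and tolerance are hypothetical.

```python
def mid_value_select(a, b, c):
    """Return the median of three redundant readings of the same quantity."""
    return sorted((a, b, c))[1]


def vote(readings, tolerance):
    """Vote across exactly three redundant channels.

    Returns the median reading and the names of channels that deviate from it
    by more than `tolerance`. A single channel that fails wildly is outvoted
    by the two that still agree.
    """
    if len(readings) != 3:
        raise ValueError("triple redundancy needs exactly three readings")
    voted = mid_value_select(*readings.values())
    suspects = [name for name, value in readings.items() if abs(value - voted) > tolerance]
    return voted, suspects


# Hypothetical vehicle-speed estimates (m/s) from three independent channels;
# channel_c has failed and reports a wild value.
speed, suspects = vote({"channel_a": 20.1, "channel_b": 20.3, "channel_c": 35.0}, tolerance=1.0)
print(speed, suspects)
```

The median is preferred over the mean here because one faulty channel can drag an average arbitrarily far, while it cannot move the median outside the range spanned by the healthy channels.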

Furthermore, Tesla has implemented a real-time monitoring mechanism where the AI system continuously oversees the operational status of sensors and computing units. Upon detecting any anomaly, it can trigger an alarm or switch the system to safe mode within a mere 50 microseconds, thereby maximizing vehicle and passenger safety.
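
The monitoring described here is typically built on heartbeats: each unit reports at a fixed interval, and a missed deadline triggers failover or safe mode. The sketch below is a hypothetical software model with simulated microsecond timestamps, using the 50-microsecond figure as its timeout; real vehicles rely on hardware watchdogs, and the 0.3 ms switchover time is not modeled.

```python
from dataclasses import dataclass, field


@dataclass
class Watchdog:
    """Heartbeat monitor for two redundant compute units (hypothetical design).

    Each unit must report a heartbeat at least every `timeout_us` microseconds.
    If the active unit misses its deadline, control fails over to a healthy
    standby; if no unit is healthy, the system enters safe mode.
    """
    timeout_us: int
    last_beat_us: dict = field(default_factory=lambda: {"A": 0, "B": 0})
    active: str = "A"
    safe_mode: bool = False

    def heartbeat(self, unit, now_us):
        """Record that `unit` reported in at time `now_us`."""
        self.last_beat_us[unit] = now_us

    def check(self, now_us):
        """Evaluate deadlines at `now_us`; return (active unit, safe-mode flag)."""
        healthy = {u for u, t in self.last_beat_us.items() if now_us - t <= self.timeout_us}
        if not healthy:
            self.safe_mode = True
        elif self.active not in healthy:
            self.active = sorted(healthy)[0]
        return self.active, self.safe_mode


wd = Watchdog(timeout_us=50)
wd.heartbeat("A", 40)
wd.heartbeat("B", 40)
first = wd.check(60)     # both units reported recently
wd.heartbeat("B", 90)    # unit A stops sending heartbeats
second = wd.check(100)   # A is 60 us stale, so control moves to B
third = wd.check(200)    # neither unit has reported recently
print(first, second, third)
```

Failover and safe mode are kept as separate outcomes: losing one unit degrades redundancy but keeps driving, while losing both leaves only the safe-mode path.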

Summary

It is worth noting that although the Model Y's autonomous delivery succeeded in the United States, similar performance may be harder to achieve in China, because driving data collected in China is restricted from leaving the country for training. Of Tesla's 12 billion miles of real-world driving data, only a fraction comes from China.

For instance, the upcoming national standard, 'Technical Requirements for Information Security of Complete Vehicles' (GB44495-2024), is scheduled to come into effect on January 1, 2026.

Figure: GB44495-2024 is set to take effect on January 1, 2026

This standard explicitly includes tests to prevent data from leaving the country.

Figure: Test methods designed to keep data within national borders

Because AI relies heavily on big data for model training, the Model Y "driving itself to its owner" should for now be regarded as a special case. It remains to be seen when such technology will become widely available to users in China.
