"Counterattack" on Musk: Altman Claims OpenAI's Autonomous Driving Technology is "Much Better"

07/07 2025

The rivalry between Sam Altman, CEO of OpenAI, and Elon Musk, CEO of Tesla, has become a hot topic in Silicon Valley.

Both are co-founders of OpenAI, but their paths diverged when Altman shifted the organization towards commercial operations. Musk accused Altman of deviating from the original mission and sued him for breaching the founding agreement. In response, Musk established xAI to compete directly with OpenAI.

Altman retaliated by releasing emails showing Musk's attempts to control OpenAI and his subsequent obstruction after being rejected.

Now, Altman may be planning a "fight fire with fire" counterattack by developing autonomous driving technology to compete with Tesla's FSD.

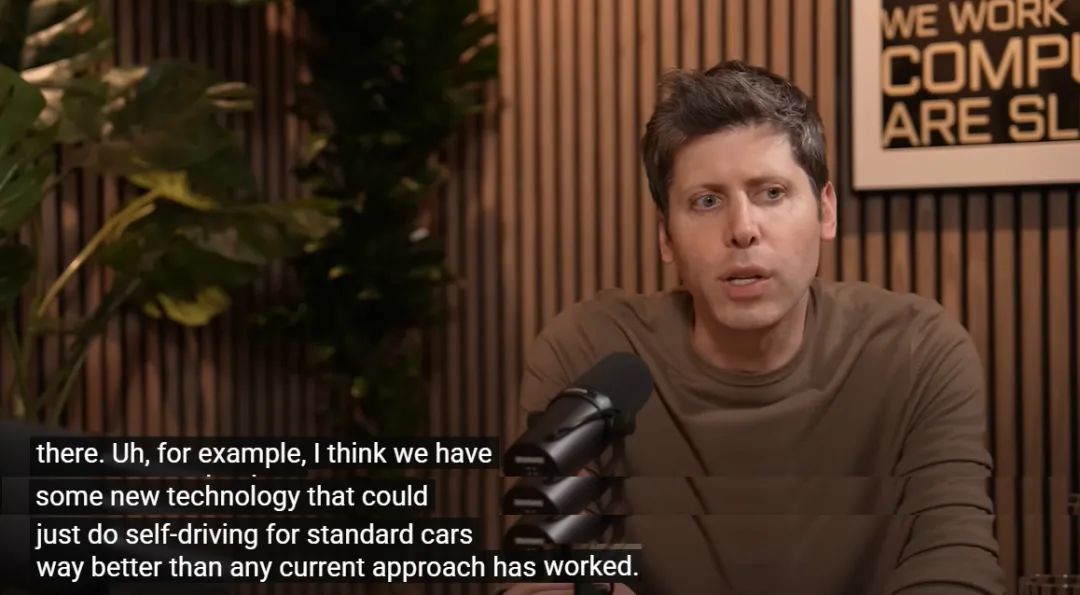

Recently, Altman appeared as a guest on his brother Jack Altman's talk show, where he appeared to let something slip during the conversation.

He stated:

"I believe we have new technology that can achieve autonomous driving for standard cars significantly better than any current approach."

The phrase "significantly better than any current approach" presumably covers Musk's FSD as well.

However, Altman did not provide details about this technology or a timeline for its advancement. He simply said:

"If our AI techniques can really drive a car, that would be pretty cool."

According to The New York Times' DealBook, this technology is still in its nascent stages, with commercialization far off.

Analysts suggest that this technology involves OpenAI's Sora video software and its robotics team, but an OpenAI spokesperson declined to comment.

OpenAI has not previously worked on autonomous driving directly, though it has invested in companies in that field and in intelligent vehicles.

As one of the most promising applications of AI, autonomous driving has vast potential and attracts significant attention. If OpenAI does hold a trump card, it is likely to pursue this lucrative market.

The rivalry between Altman and Musk looks set to intensify.

01 What's the Trump Card?

Should we believe Altman's claim that OpenAI possesses "much better" autonomous driving technology?

Currently, the autonomous driving landscape is crowded with strong players, including Alphabet's Waymo, Tesla, Mobileye, Qualcomm, Bosch, and numerous Chinese companies that have been exploring this field for years.

What could OpenAI's technical approach be? Recall that in early 2024, OpenAI unveiled Sora, a text-to-video model.

Video generated by Sora

Sora can quickly produce high-fidelity videos up to one minute long based on user-input text and can also generate videos from existing static images.

Sora's videos amazed the world because the model appeared to understand the physical properties of, and relationships between, different elements in complex scenes, grasp the existence and movement of objects in the physical world, and create videos realistic enough to deceive the eye.

Almost immediately after Sora's release, the autonomous driving industry began discussing its use for simulation and training: generating synthetic video data, particularly for extreme scenarios (corner cases), to compensate for real-world data that is scarce or expensive to collect.

However, professionals quickly pointed out that the scenes generated by Sora do not fully adhere to physical principles and may struggle to capture driving dynamics such as braking or turning. Therefore, they cannot be used as video data for training intelligent driving models.

Nonetheless, many researchers and practitioners later came to believe that simulation that complies with physical principles can still provide training data, or can at least be used to supplement model training.

Recently, the autonomous driving industry has been keen on building "world models" as the foundation for autonomous driving models. From the outset, OpenAI defined Sora as a world model capable of generating videos.

World models developed by companies like NIO and XPeng enable AI systems to build an internal map of the world, understand how the world works in a way similar to humans, and then drive vehicles based on that understanding.

Sora's concept partly aligns with the goal of world models to simulate the real world.

Additionally, the current mainstream approach to autonomous driving development is "big data, big model, big computing power." OpenAI has no shortage of computing power or large models, but it lacks driving data, and big data is crucial. If that data could be generated through simulation, the approach would make logical sense. However, many experts believe that relying solely on simulation data poses significant risks.

02 OpenAI's Intelligent Vehicle Business

OpenAI has not directly engaged in autonomous driving or intelligent cockpits but has some involvement through investments.

In 2023, OpenAI invested $5 million in Ghost Autonomy, an autonomous driving company that also received computing power support from Microsoft and tried to apply large language models to autonomous driving. However, the company ceased operations in 2024.

Ghost Autonomy's autonomous vehicle

On June 10, 2025, OpenAI announced a partnership with Applied Intuition, an intelligent vehicle company, focused on integrating the latest AI technology into modern vehicles to transform them into intelligent partners.

The official announcement stated that by introducing large language model-driven voice assistants and agents into vehicles, the next generation of vehicles will become productivity tools with deeply personalized experiences.

The announcement also highlighted that one of the core objectives of the cooperation is to achieve seamless connectivity between mobile devices and the intelligent systems of private vehicles. Additionally, Applied Intuition will deploy ChatGPT in multiple departments to enhance employee efficiency, optimize strategy planning, and more efficiently achieve company goals.

From these descriptions, the cooperation seems more inclined towards human-computer interaction in intelligent cockpits rather than direct application to autonomous driving.

03 From Language Models to Multimodal Models and World Models

There was once an industry view that with the rapid progress of large language models, spatial intelligence like autonomous driving could be achieved soon. However, current AI experts believe that language models alone are insufficient.

Although OpenAI stunned the world with its large language model and still uses it as its core, it has gradually expanded into the fields of multimodal models and world models.

Altman has stated that world models need to have the ability to "understand physical causality and predict event development," which, combined with the reasoning ability of large language models, may drive breakthroughs in AGI (Artificial General Intelligence).

This view is shared not only by OpenAI but also by Fei-Fei Li, the "godmother" of artificial intelligence, and Yann LeCun, Chief AI Scientist at Meta.

LeCun noted that while current AI demonstrates impressive abilities in multiple fields, it still lacks four core features of human intelligence: understanding the physical world, long-term memory, logical reasoning, and hierarchical planning.

AI without these abilities cannot drive cars.

Many experts point to world models as the solution.

When LeCun open-sourced the world model V-JEPA 2 at Meta, he stated that with the help of world models, AI no longer needs millions of training sessions to master a new ability. The world model directly informs AI how the world works, significantly improving efficiency.

This sounds somewhat like an outline of the autonomous driving "trump card" that Altman hinted at.

At the practical level, NVIDIA, the "shovel seller" in the AI era, has already introduced a new "shovel."

At CES 2025, NVIDIA CEO Jensen Huang said, "The ChatGPT moment for robots is coming. Similar to large language models, world foundation models (World Models) are crucial for driving the development of robots and autonomous vehicles."

NVIDIA's Cosmos world foundation model is specifically designed for high-quality generation in physical interaction, industrial environments, and driving environments. It has the ability to generate realistic videos, create synthetic training data, etc., helping robots and vehicles better understand the physical world.

Illustration of NVIDIA's Cosmos World Model

In contrast to Sora, the Cosmos world foundation model is presented as grounded in the "real" physical world rather than being purely a simulation.

OpenAI, for its part, has clearly begun expanding its AI territory into spatial intelligence.

In fact, OpenAI had a robotics team very early on but dissolved it in 2021. In 2024, the team was re-established, and in 2025 it expanded further, recruiting for numerous robotics hardware positions.

Additionally, OpenAI partnered with Figure, a robotics startup, to provide AI model support for its humanoid robots.

The base model for humanoid robots is similar to that for autonomous driving. If OpenAI makes a breakthrough while exploring world models, applying it to autonomous driving would be a logical step. After all, the autonomous driving market is a trillion-dollar opportunity.

Even if Altman fails to develop autonomous driving technology, disrupting Musk in a field he prides himself on would be a form of revenge.
