Understanding VLM in Autonomous Driving: What Sets It Apart from VLA?

![]() 08/08 2025

08/08 2025

![]() 512

512

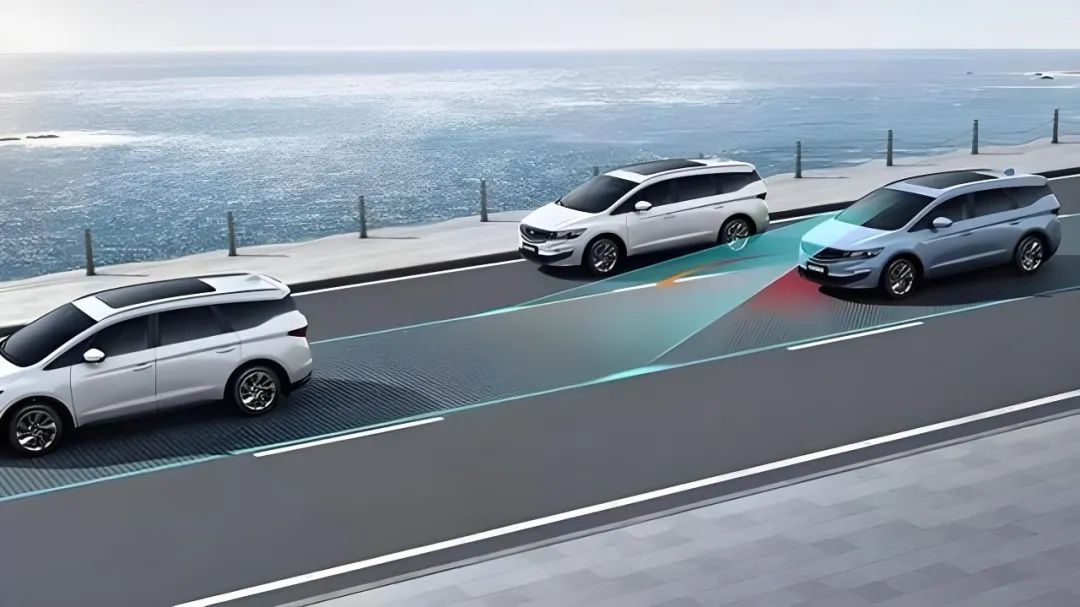

For autonomous vehicles to navigate safely through intricate and ever-evolving road environments, they must not only "see" cars, pedestrians, and road signs but also "comprehend" the instructions on traffic signs, construction notices, and passenger voice commands. We've previously delved into VLA (Related Reading: What is VLA often mentioned in autonomous driving?), a vision-language-action model. However, in various scenarios, another term, VLM, is frequently mentioned, which appears similar to VLA. So, what exactly is VLM, and how does it differ from VLA (Vision-Language-Action)?

What is VLM?

VLM, or Vision-Language Model, is an AI system that integrates the computer's ability to "understand" images and "read" text. By processing visual features and language information within a unified model, it achieves a deep comprehension of visual content and enables natural language interaction. VLM can extract object shapes, colors, positions, and even actions from images, then integrates these visual embeddings with text embeddings in a multimodal Transformer. This allows the model to map "images" to semantic concepts, generating text descriptions, answering questions, or crafting stories that align with human expression patterns. In simple terms, VLM is akin to a "brain" with both visual and linguistic senses. Upon seeing a photo, it can not only identify cats, dogs, cars, or buildings but also vividly describe them in sentences or paragraphs, significantly enhancing AI's practical value in image and text retrieval, assisted writing, intelligent customer service, and robot navigation.

How to Optimize VLM Efficiency?

VLM converts raw road images into feature representations processable by computers, typically handled by a visual encoder. Mainstream solutions include Convolutional Neural Networks (CNN) and the emerging Vision Transformer (ViT). These process images hierarchically, extracting features like road textures, vehicle outlines, pedestrian shapes, and road sign text, encoding them into vectors. The language encoder and decoder manage natural language input and output, utilizing a Transformer-based architecture. They tokenize text, learn token semantic relationships, and generate coherent language descriptions based on given vector features.

Aligning visual encoder image features with the language module is crucial for VLM. A common approach is the cross-modal attention mechanism, enabling the language decoder to focus on the most relevant image regions when generating text tokens. For instance, when recognizing "Construction ahead, please slow down," the model emphasizes salient areas like yellow construction signs, traffic cones, or excavators, ensuring text consistency with the actual scene. The system can be trained end-to-end, balancing visual feature extraction accuracy and language generation fluency, improving both through continuous iteration.

To adapt VLM to autonomous driving, training is usually divided into pre-training and fine-tuning. Pre-training involves massive online images and texts to establish general vision-language correspondence, equipping the model with cross-domain capabilities. Fine-tuning uses an exclusive autonomous driving dataset, including various road types, weather conditions, and traffic facilities, ensuring accurate sign text recognition and prompt generation adhering to traffic regulations and safety.

In applications, VLM supports multiple intelligent functions. First, real-time scene prompts, where VLM recognizes road conditions, combines signs and warnings, and generates prompts like "Road construction ahead, please slow down" or "Deep water ahead, please detour." Second, interactive semantic question answering, where passengers can ask questions like "Which lane is fastest ahead?" or "Can I turn right at the next intersection?" The system converts speech to text, combines image and map data, and responds with text like "Driving from the left lane avoids congestion, please maintain a safe distance" or "No right turn ahead, please continue straight." VLM also recognizes road sign and marker text, classifying signs and transmitting structured information like "Height limit 3.5 meters," "No U-turn," and "Under construction" to the decision-making module.

For real-time VLM operation in vehicles, an "edge-cloud collaboration" architecture is employed. Cloud-based pre-training and periodic fine-tuning distribute optimal model weights to the in-vehicle unit via OTA. The in-vehicle unit deploys a lightweight inference model optimized through pruning, quantization, and distillation, using the GPU or NPU for joint image and language inference within milliseconds. For safety prompts requiring low latency, local inference results are prioritized. For complex non-safety analyses, data is asynchronously uploaded to the cloud for in-depth processing.

Data annotation and quality assurance are vital for VLM deployment. The annotation team collects multi-view, multi-sample images under various lighting, weather, and road conditions, providing detailed text descriptions. For a highway construction scene, annotators frame construction vehicles, roadblocks, and cones, and write descriptions like "Construction on the highway ahead, left lane closed, please change lanes to the right and slow down to 60 km/h." Multiple review rounds and a weakly supervised strategy generate pseudo-labels for unlabeled images, reducing costs while maintaining data diversity and annotation quality.

Safety and robustness are core for autonomous driving. When VLM misrecognizes under adverse conditions, the system must assess uncertainty and take redundant measures. Approaches include model ensemble or Bayesian Deep Learning to calculate output confidence. When confidence falls below a threshold, the system reverts to traditional multi-sensor fusion results or prompts manual control. Cross-modal attention interpretable tools trace decision-making during accident reviews, clarifying why a prompt was generated, aiding system iteration and responsibility determination.

With large language models (LLM) and large vision models (LVM) advancements, VLM will achieve greater breakthroughs in multimodal fusion, knowledge updating, and human-machine collaboration. It will integrate radar, LiDAR, and V2X data, offering comprehensive environmental perception. Real-time updates on traffic regulations, road announcements, and weather forecasts input into the language model provide the latest background knowledge for decision-making and prompts. Passengers will receive more natural and effective driving suggestions through multimodal voice, gesture, and touchscreen inputs.

VLA vs. VLM: What's the Difference?

Both VLA and VLM are pivotal large model technologies, but how do they differ? While both belong to multimodal large model systems, they fundamentally differ in architecture, tasks, outputs, and applications. VLM primarily addresses image-language correlation, focusing on semantic image understanding and language expression. Outputs are natural language, like image descriptions and visual question answering, widely used in AI assistants, search engines, content generation, and information extraction.

VLA extends VLM, not only understanding visual and language information but also fusing them to generate executable actions. Outputs are control signals or action plans, like acceleration, braking, and turning. Thus, VLA handles perception, understanding, decision-making, and action control, crucial for "perception-cognition-execution" closed-loop systems in autonomous driving, robot navigation, and intelligent manipulators. VLM is about "understanding + explaining clearly," while VLA is about "understanding + listening + doing right." The former emphasizes information understanding and expression, while the latter focuses on autonomous behavior and decision-making capabilities.

Final Thoughts

By integrating image perception with natural language processing, the vision-language model enriches autonomous driving systems with semantic support. It not only helps vehicles "understand" complex road scenes but also efficiently interacts with human drivers or passengers using "understandable" language. Despite challenges in model size, real-time performance, data annotation, and safety assurance, with advancements in algorithm optimization, edge computing, and V2X technology, VLM is poised to become a key driver in the era of integrated "perception-understanding-decision-making," enhancing future travel safety and comfort.

-- END --