Exploring Scenarios Where Intelligent Driving Systems Struggle to Replace Human Drivers

08/08 2025

Source: Smart Car Technology

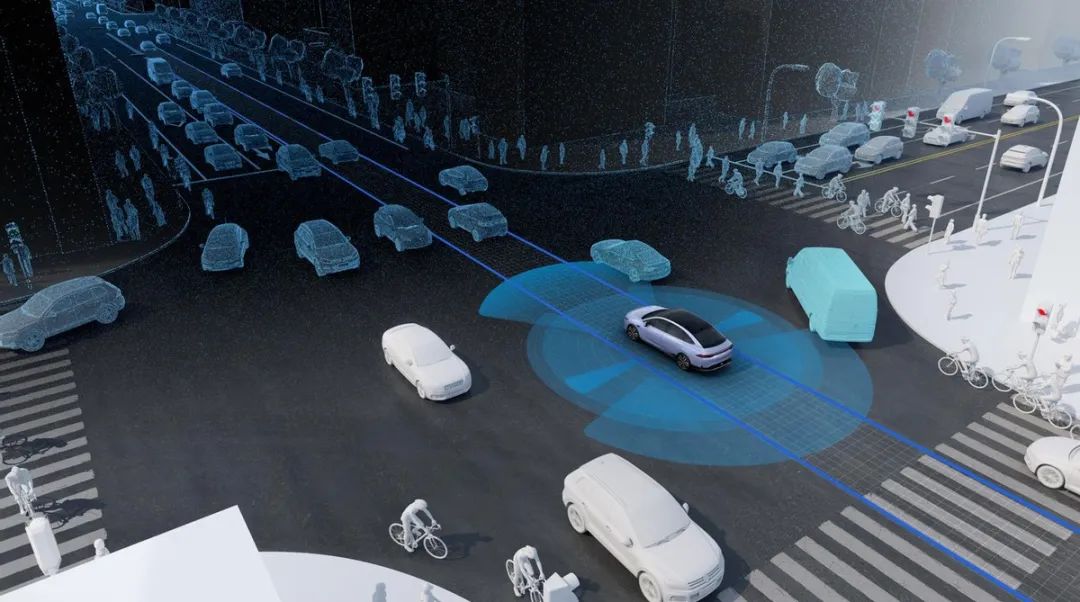

During the evening rush hour, a driverless taxi sits stalled in the middle of the road, ignoring the shouts of traffic police. Behind it, a long line of frustrated drivers honk their horns. This is not a scene from a science fiction film but a real technical malfunction in the heart of the city.

In mid-2025, on a bustling city street, an L4 autonomous taxi, bearing the logo of a leading technology company, attempts a U-turn. Suddenly, it halts in the middle of the road, completely blocking the lane. The evening traffic quickly builds up, with horns blaring incessantly.

Traffic police arrive and shout at the driverless vehicle, "Move forward!" The vehicle does not respond. In the end, staff members rush over, take the driver's seat, and move the car to the side of the road.

Among city residents, this autonomous taxi is known as "Stupid Brand," a colloquial jab at something clumsy or foolish. On a local online message board, residents complain that these vehicles run red lights, get stuck during turns, and hesitate when changing lanes.

Current Technical Bottlenecks in Autonomous Driving

Current mainstream autonomous driving systems rely mainly on multi-sensor fusion to perceive the environment and on preset algorithms to make decisions. This approach works well on structured roads but falters in the complex, ever-changing scenarios of open roads.

At the perception level, the system has notable flaws. Liu Shuang, an observer, notes: "From my home to class, an experienced driver can take a shortcut and get there in six or seven minutes, but a certain brand's autonomous vehicle has to take the main road, with more traffic lights, and needs more than ten minutes."

Autonomous driving systems cannot choose routes as flexibly as human drivers; their perception is still constrained by preset maps and fixed rules.

The problems with the decision-making system are even more pronounced. On one city street, an autonomous vehicle with its left turn signal on ended up in an awkward stalemate: because no other vehicle yielded, it simply "kept flashing" and never moved. Decision-making this mechanical looks rigid and inefficient amid complex traffic interactions and completely lacks the human driver's ability to adapt as the situation changes.
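The stalemate above is what a fixed gap-acceptance rule produces in dense traffic. The sketch below is a toy model, not any vendor's actual planner: `rigid_policy` waits for an oncoming gap of at least `min_gap_s` seconds and never relaxes it, while `adaptive_policy` lowers its threshold after each missed chance, down to a safety floor, roughly the way a human driver grows more assertive the longer they wait. All function names, thresholds, and gap values are hypothetical.

```python
def rigid_policy(gaps_s, min_gap_s=6.0):
    """Return the step at which the turn starts, or None if it never does.

    gaps_s: time gaps (seconds) to the next oncoming vehicle, one per decision step.
    The threshold never changes, so in dense traffic the car waits indefinitely.
    """
    for step, gap in enumerate(gaps_s):
        if gap >= min_gap_s:
            return step
    return None  # the "keeps flashing" stalemate


def adaptive_policy(gaps_s, min_gap_s=6.0, relax_per_step_s=0.5, floor_s=3.0):
    """Like rigid_policy, but accept a slightly smaller gap after each missed chance,
    never going below floor_s (a hypothetical hard safety limit)."""
    threshold = min_gap_s
    for step, gap in enumerate(gaps_s):
        if gap >= threshold:
            return step
        threshold = max(floor_s, threshold - relax_per_step_s)
    return None


# Hypothetical evening-rush gaps: none ever reaches 6 seconds.
rush_hour_gaps = [2.5, 3.0, 4.0, 3.5, 4.2, 3.8, 4.1, 3.9]
print(rigid_policy(rush_hour_gaps))     # None
print(adaptive_policy(rush_hour_gaps))  # 4
```

Relaxing the threshold covers only part of what people do: human drivers also creep forward to signal intent, which a pure gap rule like this cannot express.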

More concerning is how these systems handle edge cases. An industry insider explains: "Say there's a pothole in the middle of the road, and after it rains, the water fills it up. Your perception system might not recognize it as a pothole. It might mistake it for ordinary reflection off the road surface, or judge the water to be shallow and drive straight through, creating a safety risk." Then there is the notorious case of an autonomous vehicle "frightened by a bag." These cases point to a critical problem: often the system can see an object but cannot recognize what it is.

Because of these shortcomings, autonomous taxis operating in the city carry warning signs reading "Test Vehicle, May Stop at Any Time." Even when following drivers notice the sign in advance, they are often startled when the vehicle ahead stops abruptly.

Why Is It So Difficult for Machines to Learn to Drive?

The bottlenecks facing autonomous driving are not accidental; they stem from a set of fundamental challenges. First come the physical limits of sensing. Existing sensors degrade significantly in severe weather such as heavy rain, heavy snow, and dense fog, which seriously impairs the recognition capabilities of lidar and cameras.
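Why bad weather hurts even a fused multi-sensor system can be seen in a minimal inverse-variance fusion sketch. It assumes independent, Gaussian-like errors, the case in which inverse-variance weighting is the standard way to combine estimates; the sensor readings and the per-sensor rain degradation factors are invented for illustration, not measured values.

```python
def fuse(readings):
    """Inverse-variance weighted fusion of independent estimates of the same quantity.

    readings: list of (value, variance) pairs, e.g. distance to an obstacle in metres
    and its variance in m^2. Returns (fused_value, fused_variance).
    """
    weights = [1.0 / var for _, var in readings]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, readings)) / total
    return value, 1.0 / total


# Hypothetical clear-weather readings of an obstacle about 20 m ahead: (lidar, camera).
clear = [(20.0, 0.04), (20.6, 0.36)]

# Hypothetical heavy-rain degradation: each sensor's variance is multiplied by a factor.
RAIN_FACTOR = {"lidar": 25.0, "camera": 9.0}
rain = [(20.0, 0.04 * RAIN_FACTOR["lidar"]), (20.6, 0.36 * RAIN_FACTOR["camera"])]

print(fuse(clear))  # fused variance about 0.036 m^2
print(fuse(rain))   # fused variance about 0.76 m^2
```

Fusion helps when at least one sensor stays reliable. When the weather degrades all of them at once, the fused estimate degrades with them, here by a factor of roughly twenty.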

The core dilemma of decision-making algorithms is the lack of human-like reasoning. Human drivers can predict changes in traffic flow by watching the subtle movements of surrounding vehicles, whereas existing systems rely mainly on preset rules and training on limited data. When faced with a scenario not covered by the training data, the system often does not know what to do.

Dependence on high-precision maps is another major weakness. China's road environment is complex and constantly changing, with frequent construction and temporary detours. Autonomous driving systems rely heavily on high-precision maps for navigation, but maps cannot be updated as fast as real roads change. This is why the industry is now pushing map-free solutions (such as map-free NOA).

Social acceptance is a deeper challenge. The public does not yet trust autonomous driving, and every accident, especially one where liability is unclear, deepens those concerns. In the city, traditional taxi drivers have already publicly denounced a certain brand's autonomous vehicles for cutting into their income.

Cost pressure cannot be ignored either. For one brand, the hardware alone costs as much as 250,000 yuan per vehicle. Add the expense of data-collection engineers, developers, maintenance staff, and other teams, and the operating burden is enormous. Although the cost of the latest-generation vehicle has dropped to around 200,000 yuan, large-scale commercialization is still a long way off.
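Taking the two figures at face value, and assuming both refer to the same per-vehicle cost basis, the reduction works out as follows. The 1,000-vehicle fleet is a hypothetical size chosen only to show scale.

$$\frac{250{,}000 - 200{,}000}{250{,}000} = 0.20 = 20\%, \qquad 1{,}000 \times 200{,}000\ \text{yuan} = 2 \times 10^{8}\ \text{yuan}$$

A 20% cut is real progress, but even a modest fleet would still tie up about 200 million yuan in vehicles before a single operating cost is counted.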

Sorting Out Scenarios Where Machines Struggle to Surpass Humans

Scenario Type 1: Memory-Driven Decision Making

Human drivers draw on long-term experience to optimize their routes. Heading home from work, a driver knows that a particular lane on a certain road is often congested and changes lanes early; they know that a certain intersection has many left-turning vehicles and choose to go straight and then take a detour. Predictive decision-making based on memory like this is currently difficult for autonomous driving systems to achieve.
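Memory-driven route choice can be approximated by keeping a running average of past trip times for each route at each hour of the day and preferring the historically fastest one. This is a minimal sketch using an exponential moving average; the `RouteMemory` class, its `alpha` parameter, and the route names are hypothetical, and the trip times loosely echo the six-or-seven versus ten-plus minute commute Liu Shuang describes.

```python
class RouteMemory:
    """Remembers travel times per (route, hour of day) and prefers the fastest on record."""

    def __init__(self, alpha=0.3):
        self.alpha = alpha  # weight of the newest trip in the moving average
        self.avg_minutes = {}

    def record(self, route, hour, minutes):
        key = (route, hour)
        if key not in self.avg_minutes:
            self.avg_minutes[key] = float(minutes)
        else:
            old = self.avg_minutes[key]
            self.avg_minutes[key] = self.alpha * minutes + (1 - self.alpha) * old

    def best(self, routes, hour):
        """Fastest remembered route for this hour, or None if none has been driven yet."""
        known = [r for r in routes if (r, hour) in self.avg_minutes]
        if not known:
            return None
        return min(known, key=lambda r: self.avg_minutes[(r, hour)])


memory = RouteMemory()
for minutes in (11, 12):
    memory.record("main_road", 18, minutes)
for minutes in (7, 6):
    memory.record("shortcut", 18, minutes)

print(memory.best(["main_road", "shortcut"], 18))  # shortcut
print(memory.best(["main_road", "shortcut"], 8))   # None (no 8 a.m. experience yet)
```

The averaging is trivial; the harder constraint is upstream. If the planner may only use preset, mapped roads, the shortcut never enters the candidate list, and no amount of memory helps.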

Scenario Type 2: Overall Traffic Flow Perception

When pulling away from a red light, human drivers don't just watch the car in front. They observe traffic flowing from the intersecting directions, sense the "rhythm" of the whole intersection, and can even anticipate when to start by watching oncoming traffic decelerate before the light changes. This grasp of macro-level traffic flow far exceeds what current autonomous driving systems can process.

Scenario Type 3: Multi-Factor Real-Time Decision Making

When approaching a signalized intersection, an experienced driver picks a lane by weighing several factors at once: not queuing behind a large truck (which starts slowly), avoiding bus-only lanes, choosing the shortest queue, and even anticipating which driver ahead might be distracted by a phone call and react slowly. Making this kind of multi-variable optimization in real time is a significant challenge for machine systems.
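The lane choice described above can be framed as minimizing a weighted cost over candidate lanes. A minimal sketch, assuming hand-picked weights and perfectly perceived inputs; the `Lane` fields, the weights, and the example queues are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Lane:
    name: str
    queue_len: int          # vehicles queued ahead
    truck_ahead: bool       # slow-starting heavy vehicle in the queue
    bus_only: bool          # restricted lane, not usable
    distracted_ahead: bool  # a driver ahead seems distracted (hard to perceive)


def lane_cost(lane, w_queue=1.0, w_truck=3.0, w_distracted=1.5):
    """Lower is better. Weights are hypothetical and would need tuning."""
    if lane.bus_only:
        return float("inf")  # hard constraint: never chosen
    return (w_queue * lane.queue_len
            + w_truck * lane.truck_ahead          # bool counts as 0 or 1
            + w_distracted * lane.distracted_ahead)


def choose_lane(lanes):
    return min(lanes, key=lane_cost).name


lanes = [
    Lane("A", queue_len=3, truck_ahead=True, bus_only=False, distracted_ahead=False),
    Lane("B", queue_len=4, truck_ahead=False, bus_only=False, distracted_ahead=True),
    Lane("C", queue_len=0, truck_ahead=False, bus_only=True, distracted_ahead=False),
    Lane("D", queue_len=5, truck_ahead=False, bus_only=False, distracted_ahead=False),
]
print(choose_lane(lanes))  # D: the longest usable queue, but nothing slow in it
```

The optimization itself is a single `min`; what the paragraph identifies as hard is producing reliable inputs, such as `distracted_ahead`, in real time.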

Scenario Type 4: Handling Unstructured Environments

On unmarked roads, rural paths, or temporarily rerouted stretches, human drivers find their way by reading traces on the road surface and following the tracks of other vehicles. Autonomous vehicles in these scenarios often simply "go on strike" or require a manual takeover. As the joke goes, "Only I can drive the road back to my village; nobody else knows the way" (because there is no road to begin with). In such places, autonomous driving falls even further short.

Scenario Type 5: Subtle Communication in Human-Vehicle Interaction

At intersections without traffic lights, human drivers get through efficiently using eye contact, gestures, or slight movements of the vehicle. When an electric bike tries to run a red light, human drivers often give a sharp blast of the horn to warn the rider to stay put. In construction zones and traffic jams, a single gesture from a traffic police officer tells human drivers where to go. This non-verbal communication is currently difficult for autonomous driving systems to replicate, which makes them clumsy in mixed traffic.

Everyday driving also throws up scenarios worth pondering. Suppose a driver wants to change into the right lane while a car happens to be sitting in the blind spot not covered by the rearview mirror or side window. By noticing that car's shadow on the road surface, the driver realizes it is there and avoids a risky lane change. Blind spots caused by physical obstructions like this can, in theory, be eliminated by an autonomous driving system's multi-sensor layout (such as side-facing lidar).

The real lesson of this case, however, is the driver's ability to infer what is in the blind spot from a shadow on the ground, which shows how deeply humans understand situations from environmental cues. This ability depends on an integrated understanding of light, how objects move relative to one another, and the geometry of road space, and it is currently difficult for intelligent driving systems to replicate at the perception level. Similar edge cases, which demand a deep understanding of the environment and inference from experience, are widespread in open-road testing.

A Long but Hopeful Path to the Future

These scenarios are listed not to criticize current autonomous driving developers or system providers but to make a crucial point: if autonomous driving systems are ever to truly replace human drivers, they still face arduous challenges. Research indicates that reaching this goal means bridging two gaps, one in technical understanding and one in engineering practice.

Li Deyi, academician of the Chinese Academy of Engineering, has pointed out that autonomous driving has moved past the "scientific research exploration period" into the "product incubation period," but that this stage will be very long. He predicts that autonomous driving will not reach large-scale mass production until 2060.

The Secretary-General of the China-EU Association's Intelligent Connected Vehicle Branch said, "Current intelligent connected vehicles are not as intelligent as everyone imagines. They still need breakthroughs in high computing power, along with work in areas such as drive-by-wire platforms and centralized domain controllers." This reflects a sober view within the industry of where the technology currently stands.

McKinsey's forecast is comparatively optimistic: it expects L4 autonomous taxis and L5 fully autonomous trucks to become commercially viable between 2028 and 2031, with effects on driver employment appearing as early as around 2030.

Faced with these dilemmas, the new generation of autonomous driving technology is evolving toward "anthropomorphism," that is, driving more like a human. Li Auto's VLA large model represents this trend: it integrates vision, language, and action, aiming to simulate the cognitive process of human driving. Its core breakthrough is the introduction of chain-of-thought reasoning. The system can reason in multiple steps as a human does: recognize a blind spot → predict potential dangers → take preventive measures in advance. On a slippery road in the rain, for example, it can reason about hazards that may be hidden in blind spots and slow down beforehand instead of reacting passively.
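The article does not describe how the VLA model works internally, so the sketch below is not Li Auto's method. It shows a classical kinematic rule that encodes the same chain of reasoning explicitly: treat the edge of an occluded area as a place where a hazard might appear, and cap speed so the car can stop before reaching it. Stopping distance is modeled as reaction distance plus friction-limited braking, v * t_r + v^2 / (2 * mu * g); the reaction time, friction coefficients, and sight distance are assumed values.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def max_safe_speed(sight_m, friction, reaction_s=0.5):
    """Highest speed (m/s) at which the car can still stop within sight_m metres.

    Solves v * reaction_s + v**2 / (2 * friction * G) = sight_m for the positive root.
    """
    mu_g = friction * G
    return mu_g * (-reaction_s + math.sqrt(reaction_s ** 2 + 2 * sight_m / mu_g))


def stopping_distance(speed, friction, reaction_s=0.5):
    """Distance (m) covered during the reaction time plus friction-limited braking."""
    return speed * reaction_s + speed ** 2 / (2 * friction * G)


# Hypothetical occluded corner 30 m ahead; assumed dry vs wet friction coefficients.
for label, mu in (("dry", 0.8), ("wet", 0.5)):
    v = max_safe_speed(30.0, mu)
    print(f"{label}: {v * 3.6:.0f} km/h")
# prints roughly 65 km/h (dry) and 54 km/h (wet)
```

On a slippery road, the lower friction shrinks the safe speed before anything has appeared, which is the "slow down in advance rather than react passively" behavior the paragraph describes.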

Multi-modal fusion is another key direction. Researchers have developed a multi-modal contrastive learning model for traffic sign recognition (TSR-MCL), which markedly improves recognition of complex road signs by contrasting visual features with semantic features. On the TT100K dataset, this model achieved the highest accuracy, 78.4%.
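The article does not give TSR-MCL's exact training objective. As an assumption, the sketch below uses a generic InfoNCE-style contrastive loss of the kind popularized by CLIP: each sign-image embedding should be most similar to the semantic (text) embedding of its own class and dissimilar to the others in the batch. The embeddings are tiny made-up vectors, and only the image-to-text direction is computed.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def info_nce(image_embs, text_embs, temperature=0.1):
    """Mean image-to-text InfoNCE loss; pair i is the positive, all other texts are negatives."""
    n = len(image_embs)
    total = 0.0
    for i in range(n):
        logits = [cosine(image_embs[i], text_embs[j]) / temperature for j in range(n)]
        top = max(logits)  # subtract the max before exp() for numerical stability
        log_sum_exp = top + math.log(sum(math.exp(z - top) for z in logits))
        total += log_sum_exp - logits[i]  # equals -log softmax(logits)[i]
    return total / n


images = [[1.0, 0.0], [0.0, 1.0]]   # e.g. "speed limit" and "no entry" sign features
matched = [[1.0, 0.0], [0.0, 1.0]]  # semantic features in the same order
swapped = [[0.0, 1.0], [1.0, 0.0]]  # semantic features in the wrong order

print(info_nce(images, matched))  # close to 0
print(info_nce(images, swapped))  # about 10
```

Training drives the loss toward the matched case, pulling each sign's visual features toward its semantic description and away from the others.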

Simulation testing has become a catalyst for accelerating technological maturity. Li Auto conducts over 70,000 simulation tests daily, covering 2,800 extreme scenarios such as heavy rain, congestion, and sudden accidents, enabling the system to accumulate experience in a safe environment.

On the technology roadmap, gradual evolution has become the industry consensus: start with limited deployments in specific areas and scenarios (such as shuttle buses in parks or fixed bus routes), then gradually expand the operating scope. As data accumulates and algorithms improve, the ultimate goal is full deployment on open roads.

On the streets of a future city, an autonomous vehicle equipped with the VLA large model drives smoothly along. When a pedestrian suddenly rushes out from a blind spot, the system, using 3D visual perception and chain-of-thought reasoning, begins decelerating gently 0.5 seconds earlier than it otherwise would, avoiding the jolt of an emergency stop. It then merges into the left-turn lane and, by watching the movement of oncoming traffic, times its start before the light turns green.
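The benefit of starting to brake half a second earlier can be checked with the constant-deceleration formula a = v^2 / (2d). A minimal sketch, assuming the car must stop before a fixed conflict point and that the extra 0.5 s adds v x 0.5 metres of braking room; the 40 km/h speed and 15 m distance are illustrative values only.

```python
def required_decel(speed_mps, distance_m):
    """Constant deceleration (m/s^2) needed to stop from speed_mps within distance_m."""
    return speed_mps ** 2 / (2 * distance_m)


def decel_with_early_start(speed_mps, distance_m, lead_s):
    """Same stop, but braking begins lead_s seconds earlier, adding speed * lead_s of room."""
    return required_decel(speed_mps, distance_m + speed_mps * lead_s)


v = 40 / 3.6  # 40 km/h in m/s
late = required_decel(v, 15.0)
early = decel_with_early_start(v, 15.0, 0.5)
print(f"late: {late:.1f} m/s^2, early: {early:.1f} m/s^2")  # late: 4.1 m/s^2, early: 3.0 m/s^2
```

Under these assumptions, half a second of anticipation cuts the required deceleration by roughly a quarter, which is the difference between a noticeably gentler stop and a hard one.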

Traffic police stand on the street corner watching it all, their walkie-talkies silent. Not far away, a group of taxi drivers in a café discuss plans for changing careers, beneath a wall advertisement for "Autonomous Driving Maintenance Engineer" training. This quiet revolution will arrive eventually, but perhaps by a more winding path than technology optimists expect, and with more profound effects than skeptics imagine.

- End -

Disclaimer:

Articles on this official account marked with "Source: XXX (not Smart Car Technology)" are all reprinted from other media sources. Our intent in reprinting these articles is solely to disseminate and share a broader range of information. This does not imply endorsement of the viewpoints expressed or responsibility for their authenticity by our platform. The copyright of these articles remains with the original authors. Should there be any infringement, please contact us for prompt removal.