Is LiDAR Essential for L4 Autonomous Driving, or is Pure Vision Sufficient?

08/08 2025

A recent Dongchedi test has sparked considerable discussion in the industry. Tesla, which relies primarily on pure vision, ranked at the top. This raises a crucial question: is LiDAR necessary to achieve L4 autonomy, or is pure vision sufficient?

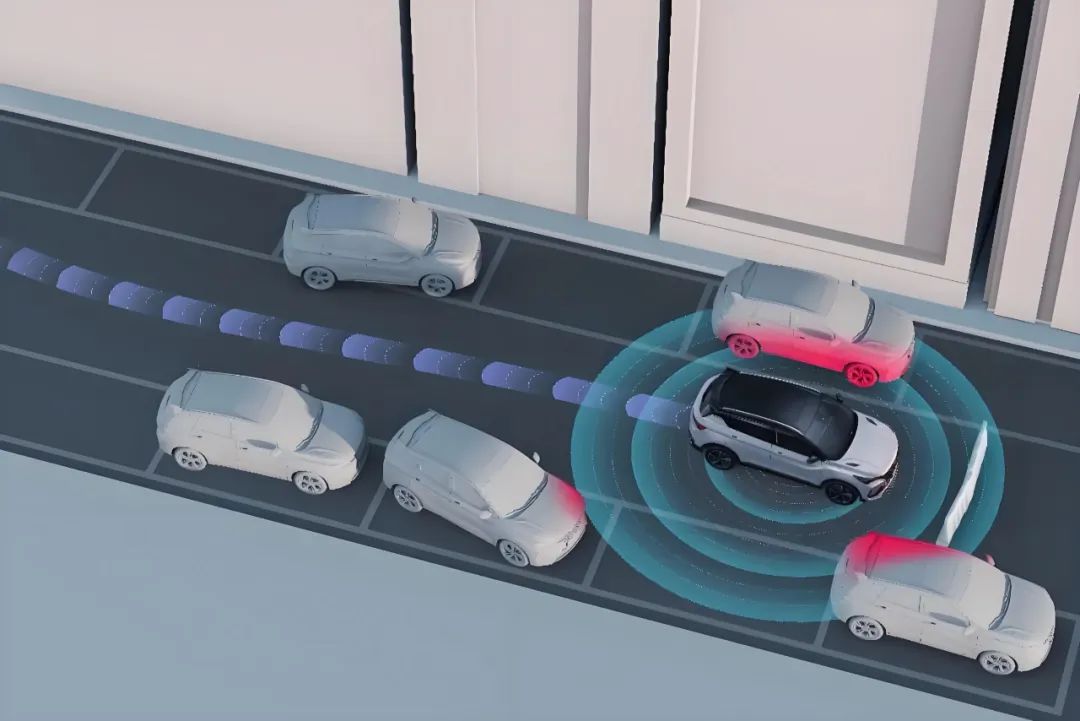

The transition from L2 assisted driving to L4 highly automated driving involves not only upgrades in algorithms and computing power but also new requirements for the vehicle's environmental perception. In autonomous driving systems, environmental perception plays the role of human vision, hearing, and touch. Cameras mimic human eyes, capturing rich color and texture information; millimeter-wave radars are akin to hearing, detecting obstacles in darkness or severe weather; LiDARs combine depth measurement with precise positioning, enabling the surrounding environment to be reconstructed in three dimensions. To achieve L4-level autonomy, in which the vehicle drives itself without a human fallback, the system must operate stably in any foreseeable scenario within its operational design domain and follow the design principles of "diversity and redundancy": different types of sensors complement one another, so that the failure of a single perception channel does not create blind spots or safety hazards.

LiDAR vs. Pure Vision

The pure vision solution relies solely on cameras to collect image data. Its hardware cost is low and it integrates easily with existing camera systems, but it is highly sensitive to changes in lighting and weather. In strong backlight, at night, or in heavy rain or dust storms, camera images may suffer from overexposure, underexposure, or severe noise, which increases recognition and positioning errors in the backend algorithms. These extreme conditions often belong to the "long tail" of rare scenarios, and once perception errors occur there, the system may "hallucinate": garbage in, garbage out. This poses a direct threat to safety. Many therefore believe that the pure vision solution is currently better suited to L2 assisted driving than to L4 scenarios with stringent safety redundancy requirements.
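
As a rough illustration of how degraded camera input might be flagged before it reaches the backend algorithms, the sketch below classifies an 8-bit grayscale frame by the share of clipped pixels. The function name `exposure_report` and all thresholds are assumptions chosen for illustration; they are not taken from any production system.

```python
def exposure_report(pixels, dark_max=15, bright_min=240, clipped_limit=0.30):
    """Classify an 8-bit grayscale frame by its share of clipped pixels.

    `pixels` is a flat list of intensities in the range 0..255.
    All thresholds are hypothetical defaults, not calibrated values.
    """
    if not pixels:
        raise ValueError("frame is empty")
    n = len(pixels)
    # Fraction of pixels crushed to near-black or blown out to near-white.
    dark = sum(1 for p in pixels if p <= dark_max) / n
    bright = sum(1 for p in pixels if p >= bright_min) / n
    if bright > clipped_limit:
        status = "overexposed"
    elif dark > clipped_limit:
        status = "underexposed"
    else:
        status = "usable"
    return {"dark_fraction": dark, "bright_fraction": bright, "status": status}


# Hypothetical backlit frame: 60% of the pixels are saturated.
backlit_frame = [255] * 60 + [128] * 40
print(exposure_report(backlit_frame)["status"])
```

A check like this only detects that the input is poor; it does not recover the missing information, which is why the argument for redundant sensors follows.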

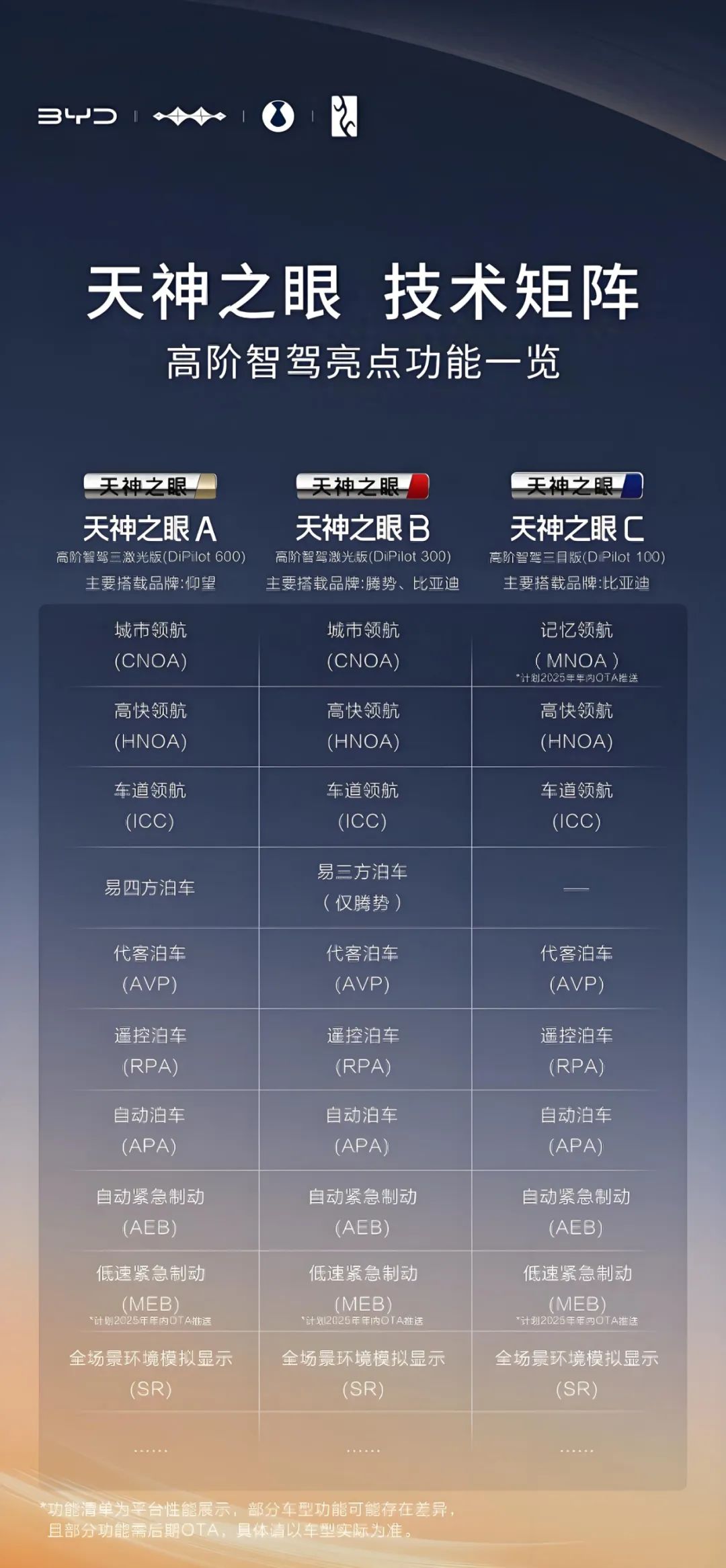

For instance, BYD's Tianshen Eye C (marketed in English as God's Eye C), the intelligent driving system for its ordinary passenger cars, adopts a vision-centric solution without LiDAR: 5 millimeter-wave radars, 12 cameras (with a front-view trinocular camera as the core), and 12 ultrasonic radars. In contrast, the higher-end Tianshen Eye A and Tianshen Eye B are both equipped with LiDAR.

By emitting laser pulses and measuring their return time, LiDAR calculates the distance to an object's surface and generates high-precision point clouds. Because LiDAR supplies its own illumination, this three-dimensional data is largely unaffected by ambient lighting, which is crucial for building accurate environmental models. LiDAR's ranging accuracy typically reaches the centimeter level, so it can perceive the positions and shapes of obstacles such as pedestrians, bicycles, and vehicles even at night or in low light. Point cloud data is also easier to match against high-definition maps, which significantly improves positioning accuracy and scene understanding.
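
The time-of-flight principle described above comes down to one formula: distance equals the speed of light times the round-trip time, divided by two. The sketch below applies it; the function names and the example timings are illustrative assumptions, not parameters of any specific LiDAR unit.

```python
# Speed of light in vacuum, metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_to_distance_m(round_trip_time_s: float) -> float:
    """Convert a pulse's round-trip time into a one-way distance in metres."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels to the target and back, so halve the path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def range_resolution_m(timing_resolution_s: float) -> float:
    """Distance uncertainty implied by a given timing resolution."""
    return tof_to_distance_m(timing_resolution_s)


# Hypothetical echo received 400 ns after emission.
print(f"{tof_to_distance_m(400e-9):.2f} m")
# Hypothetical 100 ps timing resolution, expressed in centimetres.
print(f"{range_resolution_m(100e-12) * 100:.2f} cm")
```

With these assumed values, a 400 ns echo corresponds to a target about 60 m away, and a timing resolution of 100 ps corresponds to about 1.5 cm of range, which shows why centimeter-level accuracy depends on sub-nanosecond timing.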

However, LiDAR is not omnipotent. In rain, snow, and fog, water droplets and snowflakes interfere with the laser pulses and produce noisy point clouds. Early mechanical rotating LiDARs were also large, costly, and relatively short-lived, which once constrained their large-scale commercial deployment in vehicles. In recent years, solid-state LiDAR and hybrid scanning LiDAR technologies have matured, and size and cost have gradually fallen, but a gap remains compared with inexpensive cameras. On grounds of cost, size, and maintenance, the pure vision solution remains attractive, especially in applications whose safety redundancy requirements are less stringent than those of L4.

Tesla's pure vision solution is highly effective, primarily because of the strength of its FSD system. FSD V12, a major iteration of Tesla's Full Self-Driving system, was the first version to adopt an end-to-end neural network architecture, integrating the perception, decision-making, and control stages into a single neural network model.

The end-to-end large model architecture aims to unify perception, decision-making, and control into a single black-box model, which simplifies the system architecture. However, such large models lack interpretability: anomalous predictions are difficult to trace and debug, which poses significant challenges for safety certification and fault diagnosis. In contrast, the modular pipeline approach chains separate perception, prediction, planning, and other stages. Each module can be inspected on its own, which offers higher interpretability and controllability and makes it easier to locate faults quickly and repair them in a targeted way.
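
The traceability argument for the modular approach can be made concrete with a toy pipeline in which every stage's output is recorded. Everything here is a hypothetical sketch: the stage functions, the dictionary fields (`confidence`, `gap_m`, `relative_speed_mps`), and the thresholds are invented for illustration and do not describe any vendor's stack.

```python
from dataclasses import dataclass, field


@dataclass
class Trace:
    """Records each stage's output so a bad decision can be traced back."""
    steps: list = field(default_factory=list)

    def record(self, stage, output):
        self.steps.append((stage, output))
        return output


def perceive(frame, min_confidence=0.5):
    # Hypothetical perception stage: drop low-confidence detections.
    return [d for d in frame if d["confidence"] >= min_confidence]


def predict(objects, horizon_s=2.0):
    # Hypothetical constant-velocity prediction of the gap to each object.
    # A negative relative speed means the object is getting closer.
    return [
        {**o, "future_gap_m": o["gap_m"] + o["relative_speed_mps"] * horizon_s}
        for o in objects
    ]


def plan(predictions, safe_gap_m=10.0):
    # Hypothetical planner: brake if any predicted gap is below the safe gap.
    if any(p["future_gap_m"] < safe_gap_m for p in predictions):
        return "brake"
    return "cruise"


def run_pipeline(frame):
    trace = Trace()
    objects = trace.record("perception", perceive(frame))
    predictions = trace.record("prediction", predict(objects))
    action = trace.record("planning", plan(predictions))
    return action, trace


frame = [
    {"id": "car", "confidence": 0.9, "gap_m": 20.0, "relative_speed_mps": -6.0},
    {"id": "ghost", "confidence": 0.2, "gap_m": 3.0, "relative_speed_mps": 0.0},
]
action, trace = run_pipeline(frame)
for stage, output in trace.steps:
    print(stage, output)
```

If the vehicle brakes unexpectedly, the recorded intermediate outputs show whether perception kept a spurious object, prediction misjudged the gap, or the planner's threshold was at fault. An end-to-end model offers no comparable intermediate checkpoints by default.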

Author's Viewpoint

In the author's view, perception fusion is the feasible route to L4 with current autonomous driving technology. L4 autonomous driving must meet the requirements of "fail-operational" and "minimal risk maneuver": regardless of whether a failure occurs in the perception module, the decision-making module, or control execution, the vehicle must bring itself safely to a minimal risk state without relying on human intervention. This requires redundant sensors to keep supplying sufficient environmental information when any single sensor fails. When cameras temporarily fail because of obstruction or dirt, LiDARs and millimeter-wave radars can continue to perceive; similarly, when LiDAR returns are noisy because of snow accumulation or raindrop interference, the visual information from cameras can compensate. Such multi-modal fusion provides a solid foundation for the "zero accident" goal of L4.
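
A minimal sketch of this redundancy idea follows, assuming each sensor reports a distance estimate, an uncertainty, and a health flag. It uses an inverse-variance weighted average, a standard fusion technique chosen here for illustration; the source does not specify a fusion method. The `RangeReading` type, the `fuse_ranges` function, and all numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class RangeReading:
    sensor: str            # e.g. "camera", "lidar", "radar"
    distance_m: float      # estimated distance to the obstacle
    std_m: float           # assumed 1-sigma uncertainty of the estimate
    healthy: bool = True   # False when the sensor reports a fault


def fuse_ranges(readings: List[RangeReading], min_sensors: int = 2) -> Optional[float]:
    """Inverse-variance weighted mean over the healthy sensors.

    Returns None when fewer than `min_sensors` are healthy, which a caller
    could treat as the trigger for a minimal risk maneuver.
    """
    usable = [r for r in readings if r.healthy and r.std_m > 0]
    if len(usable) < min_sensors:
        return None
    # More certain sensors (smaller std) receive proportionally more weight.
    weights = [1.0 / (r.std_m ** 2) for r in usable]
    total = sum(weights)
    return sum(w * r.distance_m for w, r in zip(weights, usable)) / total


# Hypothetical case: the camera is blocked by dirt, LiDAR and radar still work.
readings = [
    RangeReading("camera", 0.0, 2.0, healthy=False),
    RangeReading("lidar", 30.0, 0.1),
    RangeReading("radar", 30.4, 0.2),
]
print(fuse_ranges(readings))  # approximately 30.08
```

With the camera excluded, the estimate still comes from two independent modalities. If a second sensor also failed, `fuse_ranges` would return `None`, signalling that the redundancy margin is exhausted.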

In fact, the choice between LiDAR and pure vision solutions is also influenced by ROI (Return on Investment). LiDAR and its associated high-performance processing hardware significantly increase the vehicle's BOM (Bill of Materials) cost, whereas the pure vision solution requires only mass-produced CMOS cameras and relatively inexpensive computing platforms, which makes the hardware cost more acceptable to ordinary passenger car manufacturers. Therefore, for passenger car manufacturers whose primary goal is to sell vehicles and who do not plan to operate Robotaxi services, the economic incentive to deploy LiDAR is not strong. Conversely, automakers that aim to operate Robotaxi fleets must strike a balance between safety redundancy and cost, and often choose diversified sensors to meet L4 operational needs.

LiDAR and pure vision solutions also differ in how well they handle "long-tail scenarios": exceptionally rare but safety-critical road conditions, such as irregular roadblocks in construction zones, debris scattered by trucks, or wildlife crossing at night. Robotaxi operators typically combine generative AI and reinforcement learning techniques to construct large numbers of long-tail scenarios on simulation platforms and use them to train multi-modal perception systems, improving robustness in extreme scenarios. Without three-dimensional point clouds, pure vision solutions find it harder to reconstruct the scene reliably and make decisions in these rare extreme scenarios, which makes it difficult to meet the commercial safety standard of "zero accidents."

In summary, L4-level autonomous driving cannot be achieved by relying solely on a single sensor or algorithm. LiDAR has natural advantages in obtaining depth information and adapting to harsh environments, while cameras excel in cost and rich semantic information. Although the pure vision solution has demonstrated considerable potential in L2 assisted driving scenarios, meeting L4's stringent requirements for multi-scenario redundancy, safety interpretability, and robustness in long-tail scenarios will require further innovations and breakthroughs in hardware, algorithms, and simulation training.

In the foreseeable future, multi-modal sensor fusion will remain the mainstream path to fully autonomous driving; the pure vision solution is likely to serve more as a cost optimization or as a supplementary technology in specific scenarios, evolving alongside LiDAR and other sensors. Only through the combination of multiple sensors, complete algorithm architectures, and large-scale simulation validation can safe and reliable L4 autonomous driving be achieved.
