Envisioning the Cybersecurity Industry with "ALL in AGENT"

08/11 2025

Today's AI agents are still defined by humans, but a future is in sight where L5-level agents, building on the Swarm Framework and an open community, form an "operating system for the AI era" and fundamentally reshape human-machine collaboration.

Recall August 2023 and the first ISC conference after the generative AI boom. Amid the uncertainty surrounding generative AI, post-conference interviews with Zhou Hongyi inevitably turned to AI.

That year marked Zhou Hongyi's resurgence, as he boldly embraced AI, proclaiming it not a bubble but the next industrial revolution. Swiftly, he unveiled 360 Brain, a self-developed general large model, positioning 360 at the forefront of the domestic AI industry.

Two years on, while the industry is still working through disillusionment and chat-style experiences, Zhou Hongyi has seen the immense potential of AI agents. As the trend converges on agents, he stands at the vanguard, armed with swarm-based nano-agents.

This aligns with the ISC·AI Conference's theme, "ALL in AGENT": a shift from passive defense to active immunity that uses smarter AI agents to reinvigorate cybersecurity and the broader internet landscape.

Over the past two years, generative AI has evolved from short-text Q&A to long-text generation and then to large reasoning models. Its progress in understanding and generating natural language is evident, but enterprise applications expose its shortcomings.

As Zhou noted at ISC, current large models lack robust reasoning abilities, often functioning as knowledge Q&A models. Moreover, many models cannot independently invoke tools or solve problems.

The arrival of DeepSeek earlier this year partially addressed the issue of model reasoning. In complex scenarios, however, a large model that can only reason is like a brain on its own: powerful but insufficient.

In practical business, whether digital or real-world, tasks demand the orchestration of multiple tools. Today's large reasoning models, though capable, are not yet mature enough to serve as productivity tools.

Nano-AI agents represent a potential evolutionary path for generative AI, turning it from a toy into a tool. As Zhou puts it, "The evolution of large models into agents is inevitable." Current agents can comprehend goals, plan tasks, invoke tools, retain memory, and deliver end to end, from request to result. They also draw on a range of tools, large models included, to plan and execute intricate tasks.

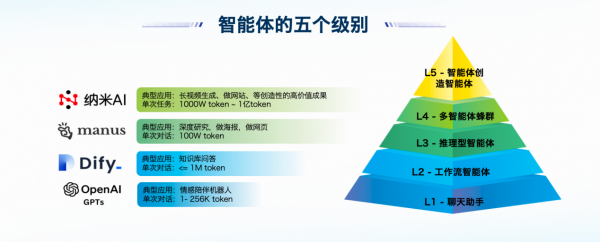

At the conference, Zhou outlined the evolution and characteristics of agents across L1 to L4 stages.

——L1 Chat Assistant: Essentially a chat tool, excelling in providing suggestions or emotional support, akin to a "toy-level" agent like GPTs.

——L2 Low-Code Workflow Agent: Evolving from toy to tool, but requiring human intervention for process setup. AI executes tasks, enhancing productivity through tool manipulation.

——L3 Reasoning Agent: Capable of autonomous AI planning to accomplish tasks, akin to creating human employees with domain expertise. They possess strong individual capabilities but may encounter bottlenecks in complex, cross-domain problems due to limited collaborative planning abilities.

——L4 Nano-AI: Realizing "multi-agent swarm collaboration," where multiple expert agents flexibly "group" like building blocks, enabling multi-layer nesting and collaborative division of labor.

Performance-wise, nano-AI can continuously execute complex 1000-step tasks, consuming 5 to 30 million tokens with a 95.4% success rate.

Multi-agent collaboration necessitates a platform-based approach, with a few L4 agents coordinating numerous L3 expert-level agents. Nano-AI's "Multi-Agent Swarm Collaboration Space" facilitates memory sharing among agents, averting coordination dilemmas. From task planning to collaborative execution and self-iteration, everything occurs seamlessly on the platform.
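The coordination pattern described above can be illustrated with a minimal sketch. It is not Nano-AI's implementation: the class names (`SharedMemory`, `ExpertAgent`, `Coordinator`) and the two toy experts are hypothetical, and real L3 agents would call large models and tools rather than return fixed strings. The sketch only shows one coordinator dispatching steps to experts that all read and write the same memory.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class SharedMemory:
    """Hypothetical shared space that every agent in the team reads and writes."""
    notes: Dict[str, str] = field(default_factory=dict)


@dataclass
class ExpertAgent:
    """Stand-in for an L3 expert: a name plus a function over the shared memory."""
    name: str
    run: Callable[[SharedMemory], str]


class Coordinator:
    """Stand-in for an L4 agent: executes a plan by dispatching steps to experts."""

    def __init__(self, experts: List[ExpertAgent]):
        self.experts = {expert.name: expert for expert in experts}

    def execute(self, plan: List[str], memory: SharedMemory) -> SharedMemory:
        for step in plan:
            # Each expert sees everything earlier experts wrote to the memory.
            memory.notes[step] = self.experts[step].run(memory)
        return memory


def write_script(mem: SharedMemory) -> str:
    return "script: a robot learns to paint"


def draw_storyboard(mem: SharedMemory) -> str:
    # Reads the writer's output from shared memory instead of asking for it again.
    return "storyboard for [" + mem.notes["writer"] + "]"


team = Coordinator([
    ExpertAgent("writer", write_script),
    ExpertAgent("artist", draw_storyboard),
])
result = team.execute(["writer", "artist"], SharedMemory())
print(result.notes["artist"])
```

Because the artist reads the writer's note directly from the shared memory, the coordinator does not have to pass results between experts by hand, which is the kind of coordination overhead the shared space is meant to avoid.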

Currently, over 50,000 L3 agents populate the platform, enabling ordinary users to construct their own "Manus" through natural language. Under the Swarm Framework, these L3 agents form L4 teams with aligned steps and goals, efficiently tackling ultra-long, complex tasks like "generating a 10-minute cinematic masterpiece with one sentence," reducing effort from 2 hours to 20 minutes.

As the internet enters the AI era, cybersecurity, a field the general public often overlooks, is being deeply penetrated by AI technology. This has significant consequences for both defenders and attackers:

Cybersecurity experts remain scarce: training cycles are long, and response strategies vary with each company's data types, which slows the growth of the talent pool. Large models can help with basic tasks, but complex day-to-day operations still make it hard to put protective capabilities into practice.

Conversely, attackers can use AI to train "agent hackers" that automate attacks with no human hacker present. Given enough computational resources, these agents can be mass-produced, with one human hacker managing dozens of them and multiplying attack capacity accordingly.

As the "digital brain" of security operations experts, security agents are built around security large models and can invoke tools and execute processes at the right moments, fully replicating senior experts' analysis, decision-making, and operational capabilities.

Deploying security agents isn't foolproof. In industrial control and government decision-making, agent hallucinations might lead to equipment misoperation or policy deviations. Across tens of millions of invocations, even a 0.1% misdiagnosis rate in medical agents adds up to a large number of serious errors.
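To put that rate in perspective, the arithmetic can be sketched as follows. The invocation counts are illustrative values for "tens of millions" and are not figures from the conference; the helper `expected_errors` is a hypothetical name.

```python
def expected_errors(invocations: int, error_rate: float) -> float:
    """Expected number of wrong results, assuming every call fails at the same rate."""
    return invocations * error_rate


rate = 0.001  # the 0.1% rate mentioned in the text

# Illustrative invocation counts (assumptions, not reported figures).
for calls in (10_000_000, 50_000_000):
    errors = expected_errors(calls, rate)
    print(f"{calls:,} invocations at {rate:.1%} -> about {errors:,.0f} expected errors")
```

At 10 million invocations, a 0.1% rate already implies roughly 10,000 wrong results, which is why a seemingly small error rate matters at this scale.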

Dynamic RAG enhancement mitigates this by embedding industry knowledge bases (e.g., GB/T national standards, industrial equipment parameters) into the agent's reasoning process, so that authoritative data is retrieved before an answer is generated. For instance, a government processing agent can automatically link to the latest State Council document library, leaving less room for the model to improvise. Additionally, the "data detoxification" module of 360's large-model guard filters contradictory training data and injects domain rules.
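The retrieve-before-generate idea can be shown with a toy sketch. Everything here is an assumption for illustration: the two knowledge-base entries are invented, retrieval is plain word overlap rather than a real vector search, and the "generation" step simply quotes the retrieved passage where a production system would pass it to a large model.

```python
from typing import List, Tuple

# Hypothetical in-memory knowledge base of (source, text) pairs.
KNOWLEDGE_BASE: List[Tuple[str, str]] = [
    ("standard-A", "pump P1 maximum pressure is 16 bar"),
    ("standard-B", "valve V2 must close within 5 seconds"),
]


def retrieve(question: str, top_k: int = 1) -> List[Tuple[str, str]]:
    """Rank entries by how many lowercase words they share with the question."""
    q_words = set(question.lower().split())
    scored = [
        (len(q_words & set(text.lower().split())), source, text)
        for source, text in KNOWLEDGE_BASE
    ]
    scored.sort(key=lambda item: item[0], reverse=True)
    # Entries with no overlap are dropped rather than returned as weak matches.
    return [(source, text) for score, source, text in scored[:top_k] if score > 0]


def answer(question: str) -> str:
    """Answer only from retrieved passages; refuse when nothing matches."""
    hits = retrieve(question)
    if not hits:
        return "No authoritative source found."
    source, text = hits[0]
    return f"{text} (source: {source})"


print(answer("What is the maximum pressure of pump P1?"))
print(answer("Who won the match yesterday?"))
```

The design point is the refusal branch: when retrieval finds nothing, the agent declines instead of generating an unsupported answer.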

Another approach is Chain-of-Verification (CoVe). After generating a response, the agent inspects its own output: it breaks the response down into key propositions, generates verification questions, and cross-checks them using relevant database tools. Physical feedback mechanisms, such as converting control instructions into API calls, reading sensor data, and confirming execution results, close the decision-making loop.
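A simplified sketch of the verification step follows. It assumes the draft response has already been broken down into key-value claims (in practice the model itself would extract them and phrase verification questions), and it uses a hypothetical `FACTS` dictionary as a stand-in for the database tools or sensor readings an agent would query.

```python
from typing import Dict, List

# Hypothetical trusted store, standing in for database tools or sensor readings.
FACTS: Dict[str, str] = {
    "valve_v2_state": "closed",
    "pump_p1_pressure_bar": "12",
}


def verify_claims(claims: Dict[str, str]) -> List[str]:
    """Return the keys of claims that disagree with, or are missing from, the store."""
    return [key for key, value in claims.items() if FACTS.get(key) != value]


def checked_response(draft: str, claims: Dict[str, str]) -> str:
    """Release the draft only if every extracted claim passes verification."""
    failed = verify_claims(claims)
    if failed:
        return "Withheld; unverified claims: " + ", ".join(sorted(failed))
    return draft


draft = "Valve V2 is closed and pump P1 runs at 14 bar."
claims = {"valve_v2_state": "closed", "pump_p1_pressure_bar": "14"}
print(checked_response(draft, claims))
```

Here the pressure claim contradicts the trusted reading, so the draft is withheld. A fuller pipeline would revise the response and verify it again rather than simply blocking it.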

It is not just 360: any company that needs cybersecurity can create its own security agents. Compared with general AI firms, 360's confidence stems from its unique cybersecurity data, knowledge, tools, workflows, large models, and agent creation platform. Each component is a piece of the puzzle, and each line of business is a barrier to entry.

Beyond customized security agents, users can tailor agents to their needs. With the current trend towards agent "customization," deploying agents familiar with enterprise situations and providing tailored protection is feasible.

As Zhou noted, 2025 is the "safe" first year for agents and the birth year of security agents. Building on large models' "brain," security agents add "hands and feet," enabling active attack-defense and tool utilization. In business, this equates to adding new-quality productivity and combat effectiveness.

From the start, 360's agents avoided the compute arms race ("computational power involution") and focused instead on scenario-based, vertical productivity, drawing on the company's security expertise. With a closed-loop security system integrating "intelligent identification, intelligent shield, intelligent control," and with nano-AI search as the product that brought it to market, 360 became many users' first AI touchpoint.

Since the beginning of the year, 360's L4 "expert" agents have spread across multiple verticals, and error rates on collaborative tasks have fallen by nearly 90%. The vision of humans acting as "CEOs" over cyber teams of AI agents, in which users recruit from more than 50,000 agents as they would on the hiring app "BOSS Direct Hire" and assemble their own dedicated cross-company collaboration teams, is no longer a dream.

While AI Agents still need human definitions, near-future L5 agents, leveraging the Swarm Framework and community openness, will forge an "operating system for the AI era," redefining human-machine collaboration.

If agents truly permeate daily life, human work will consist of defining, planning, managing, and supervising agents. Agents will become digital employees that handle tedious tasks, while humans must learn to collaborate with them.

Thus, individuals will become super-individuals, and agent-heavy companies will transform into super-organizations, potentially reshaping the economic landscape and operational logic.

This "business personnel leading AI innovation" model accelerates AI technology application and grounding, making agents true "digital employees."

As Zhou predicts, "In the era of agents, those who cannot command AI will be eliminated." This strategy propels 360 from a cybersecurity provider to an AI ecosystem leader.