Baidu Intelligent Cloud Crafts the Optimus Prime of the AI Era

08/29 2025

Lately, the discussion around AI Infra, or artificial intelligence infrastructure, has intensified across the AI field. After DeepSeek V3.1 was unveiled, an official remark that the model is adapted to the next generation of domestic chips pushed Cambricon's market valuation sharply higher. Meanwhile, Baidu's Kunlun chip secured a billion-level order in China Mobile's centralized procurement project. Both the industry and the investment community have markedly raised their expectations for AI's foundational software and hardware.

What lies beneath this shift? Why is AI Infra emerging as a new buzzword avidly pursued by the AI industry? How can we attain an AI Infra that meets industry aspirations?

As I pondered these questions, an intriguing name came to mind: Optimus Prime from "Transformers." When Shanghai Film Dubbing Studio introduced this iconic 1984 animated series, it named the Autobot leader "Qingtianzhu" ("sky-supporting pillar") in Chinese. Compared with the Hong Kong and Taiwan renderings, "Ke Bowen" and "Wudi Tieniu," the name "Qingtianzhu," derived from the tale in "Huainanzi" of Gong Gong angrily striking Buzhou Mountain, is both faithful and elegant. It captures the protagonist's rugged bravery and combat prowess while conveying his ability to hold everything up and his steadfast, reliable spirit.

In the AI era, we aspire for intelligent technology to break through ceilings and explore uncharted territories previously inaccessible to humans. However, to achieve this, we first need a solid infrastructure capable of propelling AI models to new heights.

On August 28, the 2025 Baidu Cloud Intelligence Conference took place in Beijing. The comprehensively upgraded Baige 5.0 introduced during the conference might just be that AI Optimus Prime.

Broadly speaking, AI Infra refers to the hardware and software systems used for deploying, running, managing, and optimizing AI models, encompassing the familiar AI computing power, AI inference engines, and a suite of model development and invocation tools. AI Infra has existed since the inception of AI technology, so why has it only recently attracted widespread attention?

The core reason is that, in the early stages of large model development, industry demand for AI Infra centered on simply supporting large model training and inference, while innovation and competition focused on the models themselves. However, as large models are integrated into more industrial scenarios and the volume of model invocations grows, it has become apparent that infrastructure does more than host training and inference: it can create new value for the models running on it by improving training and inference efficiency and the user experience. If the industry's earlier requirement for AI Infra was to run models, the current goal is to rely on AI Infra to secure the future.

For instance, DeepSeek is available today on many platforms, but the user experience differs significantly among them. Some platforms lag, while others take noticeably too long to return inference results. After a brief comparison, users naturally gravitate towards platforms where large models run more smoothly and quickly. This is the competitive edge that differences in AI Infra confer on enterprises.

Among AI Infra's various related capabilities, AI computing power is undeniably the most crucial. Robust AI computing power supply is not only the bedrock for model training and inference but also a decisive factor in model performance and commercial prospects.

Generally, AI Infra platforms focused on computing power determine a model's upper limits in three respects: providing low-latency, high-efficiency model access for seamless AI inference; keeping models stable so that services run without interruption or degradation; and raising resource utilization so that less hardware can handle more requests, thereby reducing overall AI costs.

Weak AI Infra can cause large models to falter. AI Infra is no longer a uniform prerequisite; it can itself become a core source of AI competitiveness. To enable models to transcend the limits of imagination, we must first craft the Optimus Prime of AI Infra.

The newly upgraded Baige aims to define infrastructure as solid and reliable as Optimus Prime.

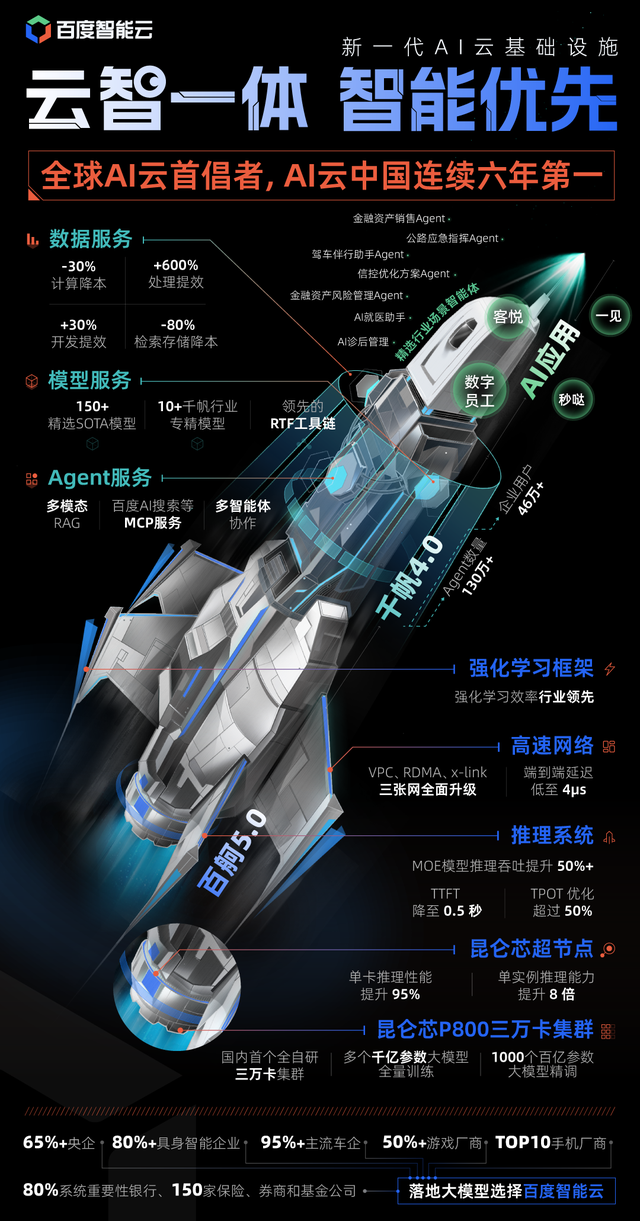

The efficacy of AI computing infrastructure depends on multiple layers, including network, computing, and inference. The highlight of Baige's new upgrade is that it does not improve access to AI computing power along a single dimension but strengthens nearly every layer at once. In other words, Baige is defining, in every dimension, what the Optimus Prime of AI computing power entails.

Baidu Intelligent Cloud's newly released Baige AI computing platform 5.0 has been comprehensively upgraded across networking, computing, inference systems, and training and inference efficiency. This generational leap solidifies its learning efficiency leadership, thereby breaking the AI computing efficiency bottleneck across the board.

As the saying goes, a tripod stands on three legs, and a triangle is the most stable shape. Specifically, Baige 5.0's supporting role rests on three extremely stable pillars:

1. Network pillar.

Baige 5.0 has comprehensively upgraded the VPC, RDMA, and X-Link networks that large model computing relies on. The high-speed VPC network supports 200 Gbps (gigabits per second) with jumbo frame transmission, significantly boosting model training and inference efficiency. Baige's self-developed HPN network supports RDMA interconnection of 100,000 cards in a single cluster and compresses end-to-end latency to 4 µs. For MoE models with ultra-large parameter counts, Baige introduces Baidu's self-developed X-Link protocol, which enables faster communication between experts and thereby improves MoE training and inference performance.
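To put the bandwidth figure in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes an ideal link with no protocol overhead, and the 100 GB payload is a hypothetical example chosen for illustration, not a figure from Baidu.

```python
def gbps_to_gigabytes_per_sec(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8


def ideal_transfer_seconds(payload_gb: float, link_gbps: float) -> float:
    """Lower-bound transfer time for a payload, ignoring protocol overhead."""
    return payload_gb / gbps_to_gigabytes_per_sec(link_gbps)


link_gbps = 200.0   # VPC bandwidth quoted in the article
payload_gb = 100.0  # hypothetical checkpoint size, for illustration only

print(gbps_to_gigabytes_per_sec(link_gbps))           # 25.0
print(ideal_transfer_seconds(payload_gb, link_gbps))  # 4.0
```

In other words, a 200 Gbps link moves at most 25 gigabytes per second, so real transfers will take somewhat longer than this lower bound.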

2. Computing pillar.

Faced with ultra-large models' inevitable demand for large-scale AI computing clusters, Baige has also explored super nodes. The newly released Kunlun chip super node uses Kunlun chips, which are developed full-stack in-house by Baidu and recognized by key national industries. By combining 64 cards into one super node, it achieves a 95% increase in single-card performance and an 8-fold boost in single-instance inference capability. Public clouds offer the best way for enterprises to obtain large-scale AI computing power: with Baige, users can deploy trillion-parameter large models within minutes on a single cloud instance. Backed by Baidu Intelligent Cloud's technical strength, Kunlun chip super nodes excel across a range of capabilities, placing Baige in the top tier of domestic AI Infra with the strength to support the sky.

3. Inference pillar.

As large models become an everyday necessity for more users, improving model inference capability has entered a critical growth stage. To this end, Baige 5.0 has been upgraded with a new inference system built on three core strategies, "decoupling," "adaptive," and "intelligent scheduling," to manage and optimize AI inference resources such as computing power, memory, and network at a fine granularity. The result is a step change in performance: MoE model inference throughput rises by over 50%, and time to first token (TTFT) falls to as low as 0.5 seconds.
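For readers unfamiliar with these two metrics, the following minimal Python sketch shows one common way to compute them for a single request. The function name and the timing trace are hypothetical, and the throughput figures quoted for Baige are presumably measured at the system level rather than per request.

```python
from typing import List, Tuple


def ttft_and_throughput(request_start: float, token_times: List[float]) -> Tuple[float, float]:
    """Return (TTFT in seconds, output tokens per second) for one request.

    token_times holds the arrival time of each generated token, in seconds,
    measured on the same clock as request_start.
    """
    if not token_times:
        raise ValueError("no tokens were generated")
    ttft = token_times[0] - request_start
    total = token_times[-1] - request_start
    throughput = len(token_times) / total if total > 0 else float("inf")
    return ttft, throughput


# Hypothetical trace: first token after 0.5 s, then one token every 0.25 s.
times = [0.5, 0.75, 1.0, 1.25, 1.5]
ttft, tps = ttft_and_throughput(0.0, times)
print(ttft)  # 0.5
print(tps)   # 5 tokens over 1.5 s
```

TTFT governs how responsive a model feels, while throughput governs how many requests the same hardware can serve.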

Returning to the DeepSeek inference deployment scenario discussed earlier: on the newly upgraded Baige, DeepSeek R1's inference throughput can be increased by 50%, giving Baige users a model experience and resource utilization efficiency far surpassing those of similar platforms.
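A rough illustration of what a 50% throughput gain means for hardware costs is sketched below in Python. The load and per-card figures are hypothetical, and the sketch assumes capacity scales linearly with the number of cards, which real clusters only approximate.

```python
import math


def cards_needed(target_tokens_per_sec: float, tokens_per_sec_per_card: float) -> int:
    """Smallest number of accelerator cards that meets the target load."""
    return math.ceil(target_tokens_per_sec / tokens_per_sec_per_card)


target = 120_000.0           # hypothetical cluster-wide load, tokens/s
baseline_per_card = 1_000.0  # hypothetical per-card throughput before the upgrade
upgraded_per_card = baseline_per_card * 1.5  # the 50% gain quoted in the article

before = cards_needed(target, baseline_per_card)
after = cards_needed(target, upgraded_per_card)
print(before, after)  # 120 80
```

Under these assumptions, the same load is served with one third fewer cards.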

At the same time, the reinforcement learning framework released by Baige squeezes the most out of computing resources and comprehensively improves training and inference efficiency. The framework no longer treats training and inference as two independent processes; instead, it coordinates them closely and integrates them seamlessly, much like an industrial assembly line. This working mode maximizes resource utilization and will raise the overall efficiency of mainstream reinforcement learning models to new industry heights. It currently supports Baidu's reinforcement learning model training in vertical fields such as finance, education, programming, and customer service, and it will become a key opportunity for all industry sectors to embrace reinforcement learning models.
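The assembly-line analogy can be made concrete with a simple two-stage pipeline model, sketched below in Python. This is a generic illustration, not a description of Baige's actual framework: it assumes constant per-batch stage times, separate resources for the inference (rollout) and training stages, and hypothetical timings.

```python
def sequential_time(n_batches: int, rollout_s: float, train_s: float) -> float:
    """Total time when each batch finishes rollout and training before the next starts."""
    return n_batches * (rollout_s + train_s)


def pipelined_time(n_batches: int, rollout_s: float, train_s: float) -> float:
    """Total time when rollout of batch i+1 overlaps with training on batch i."""
    if n_batches == 0:
        return 0.0
    # The first batch passes through both stages; each later batch adds
    # only the duration of the slower stage.
    return rollout_s + train_s + (n_batches - 1) * max(rollout_s, train_s)


n, rollout, train = 10, 6.0, 4.0  # hypothetical: 10 batches, 6 s rollout, 4 s training
print(sequential_time(n, rollout, train))  # 100.0
print(pipelined_time(n, rollout, train))   # 64.0
```

The closer the two stage times are to each other, the less idle time remains, which is why coordinating training and inference can raise utilization.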

With the support of these three pillars, Baige 5.0 can elevate model computing efficiency to the extreme. Only then can models further transcend limits and freely explore the vast realm of intelligence.

To enable models to pierce the sky, we need to transform AI Infra into Optimus Prime—this is the infrastructure strength defined by Baige 5.0.

In "Transformers," Optimus Prime's most famous line is "Autobots, transform and roll out." In the real world, Baige 5.0's mantra should be: "Enterprise intelligence, transform and roll out based on AI Infra."

Two stories show how the most closely watched industries and fields use Baige to break through industrial limitations and turn infrastructure into AI productivity.

Story 1: "Robot Transformation."

Embodied intelligence is currently a hotbed for technological development, entrepreneurship, and investment in China. Baidu Intelligent Cloud has supported the embodied intelligence "national team," which comprises innovation centers in Beijing, Shanghai, Zhejiang, and Guangdong, and it also provides computing power support for over 20 key enterprises in the industry chain.

Baidu Intelligent Cloud is favored because embodied intelligence is still a nascent industry that urgently needs robust computing power while maximizing model development efficiency. Baige's accumulated product and technology strengths in areas such as efficient computing power scheduling and training and inference acceleration can significantly improve the development efficiency of embodied intelligence models. During this period of rapid growth, Baige provides critical support for efficiency and resource utilization, helping robots "transform" faster and better in practical, deployable directions.

Story 2: "Creators, Roll Out."

Vast is an AI company focused on general model research and development, dedicated to establishing a 3D UGC content platform by creating mass-market 3D content creation tools. Its main products are 3D large models for industries such as games, CG movies, animation, architectural interiors, XR/VR, and digital twins. With the Vast solution, users can generate spatial 3D assets within a minute simply by uploading images, which has made the company a rising star in this field.

As its business grew, Vast faced lengthy model training cycles and high computing power costs, and every step from data preparation to model training and inference had to be precise and error-free, which severely tested the team's technical capabilities. By using the Baige platform, Vast obtained high-performance cloud-native AI computing support designed specifically for large models. This includes comprehensive cluster operation and maintenance support and full lifecycle management of tasks, as well as advanced features such as training and inference acceleration, fault tolerance, and intelligent fault diagnosis. Through powerful AI infrastructure and mature AI engineering capabilities, Baige reduced Vast's large model training time, saved resource costs, and significantly lowered the overall threshold for model training and inference. Ultimately, it enabled Vast to develop a new generation of 3D large models faster and better, giving designers and content creators efficient tools for exploring what AI makes possible.

Baige's deep understanding of intelligent business, and its effective support for it, is rooted in Baidu Intelligent Cloud's accumulated experience in the AI cloud domain. According to IDC's "China AI Public Cloud Service Market Share, 2024: Evolving Towards Generative AI," released on August 18, China's AI public cloud service market reached 19.59 billion yuan in 2024. Baidu Intelligent Cloud ranked first with a market share of 24.6%, leading China's AI public cloud market for the sixth consecutive year and the tenth time in total, which demonstrates the foresight and results of its pioneering "cloud-intelligence integration" strategy. Deeply understanding AI business and providing robust AI computing power support is in fact a scarce capability in the public cloud market, and it is becoming a solid moat for Baidu Intelligent Cloud.

Baige, functioning as the cornerstone of computing power within Baidu Intelligent Cloud's ecosystem, is experiencing rapid growth and expansion. From the narratives presented above, it becomes evident that Baige 5.0, akin to Optimus Prime, empowers enterprises to shatter the boundaries of the AI era. It accomplishes a holistic AI infrastructure upgrade by significantly reducing training time, conserving resource expenditures, enhancing model performance, and lowering the barrier to model development.

Leveraging its capabilities, enterprises can embark on their exploration, innovation, and mastery of the unknown with greater ease and swiftness. The pinnacle of AI exploration in this era could very well be defined by the AI infrastructure prowess showcased by Baige.