The "Dream Machine" AI Video Model Soars in Popularity: Flaws Abound, but It Works!

06/14 2024

Over the past six months, AI-generated video has advanced in fits and starts. After OpenAI introduced Sora earlier this year and sparked unprecedented discussion, Vidu, billed as the first domestically developed large video model, and the video generation models later released by domestic companies such as ByteDance, Tencent, and Kuaishou drew occasional attention from the outside world. Just a few days ago, Leitech also tried the closed beta of Kuaishou's large video model "Keling".

In the past few days, though, AI-generated video has become a hot topic once again.

As soon as it was released, "Dream Machine" caught fire across social networks.

On June 12, startup Luma AI released its new AI video generation model, Dream Machine, and opened it up for public testing. Soon, in addition to the series of sample videos released by the company itself, a flood of videos generated by netizens using "Dream Machine" appeared on social networks.

For example, the modern-style sample video renders the girl and the cat convincingly, especially the cat's head and eye movements.

Image compressed, Image/ Luma AI

There are also fantasy-style videos, where the generated characters or objects are indeed fantastical, even with a touch of Lovecraftian horror.

Image compressed and edited, Image/ Luma AI

Moreover, "Dream Machine" not only supports generating videos from text but also supports generating videos based on images and text. Therefore, you can also see girls jumping out of "Girl with a Pearl Earring" and videos that real estate agents might like, such as "How to turn a landscape photo into a landscape video".

Some people have even begun using "Dream Machine" to tell visual "day in the life" stories, including one that follows an American middle school student from waking up in the morning to going to school and then to a dance.

Not only are users having fun with it, but overseas and domestic media have also noticed the popularity of "Dream Machine." However, some domestic media have clearly overstated it, claiming that it surpasses Sora or is more realistic and fluid than Sora. We'll leave those claims aside for now, but where did the idea that "Dream Machine" supports generating 120-second videos come from?

In fact, "Dream Machine" only supports generating 5-second videos. What the official website states is that generating a video takes 120 seconds, excluding the waiting time. And if you open the sample videos on the official website individually, you'll find that they are all 5 seconds long (unless edited).

Image/ Luma AI

This video duration pales in comparison to the 16-second duration of the domestic video model Vidu (which recently claimed to have extended to 32-second videos with audio), let alone Sora, which has pushed the AI-generated video duration to 60 seconds.

According to official information released by OpenAI, the main contributor to Sora's breakthrough in video duration is its diffusion Transformer architecture, which keeps the diffusion model but replaces the U-Net backbone with a Transformer.
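To make the duration point concrete, here is a rough sketch of how the token count of a Transformer-based video model grows with clip length. It assumes the video is cut into non-overlapping spacetime patches, as OpenAI's Sora report describes, and that attention is computed over all tokens at once. The `ClipShape` class, the latent resolution, the frame rate, and the patch sizes are all made-up illustrative values, not figures published by OpenAI or Luma AI.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClipShape:
    """Hypothetical latent video shape; these numbers are assumptions."""
    seconds: int
    latent_fps: int = 8   # assumed latent frames per second
    height: int = 64      # assumed latent height
    width: int = 64       # assumed latent width


def spacetime_tokens(clip: ClipShape, patch_t: int = 2,
                     patch_h: int = 2, patch_w: int = 2) -> int:
    """Count tokens when the clip is cut into non-overlapping spacetime patches."""
    frames = clip.seconds * clip.latent_fps
    if frames % patch_t or clip.height % patch_h or clip.width % patch_w:
        raise ValueError("clip dimensions must be divisible by the patch size")
    return (frames // patch_t) * (clip.height // patch_h) * (clip.width // patch_w)


def attention_pairs(tokens: int) -> int:
    """Full self-attention compares every token with every token."""
    return tokens * tokens


# Compare the clip lengths mentioned in the article: 5 s, 16 s, and 60 s.
for seconds in (5, 16, 60):
    n = spacetime_tokens(ClipShape(seconds=seconds))
    print(f"{seconds:>2}s clip -> {n:,} tokens, {attention_pairs(n):,} attention pairs")
```

Under these assumed settings, a 60-second clip has 12 times as many tokens as a 5-second clip and 144 times as many attention pairs, which hints at why pushing duration from a few seconds to a minute is costly.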

What about "Dream Machine"? Currently, Luma AI has not disclosed specific details.

Of course, a 5-second duration can't really be called too short, because a large number of current video generation models can only generate 5-second videos. That includes Kuaishou's Keling, which claims to support videos of up to 2 minutes but, at least for now, can only generate 5-second ones. Nor can we look at video duration alone; we also have to consider the usability and potential of the visuals.

Impressive performance, but is the content reliable?

Frankly speaking, "Dream Machine" gave me a pretty impressive first impression. Let's start with the official sample videos.

Image compressed, Image/ Luma AI

For example, in this segment, a man holding a gun cautiously advances in a room with a dangerous atmosphere.

Apart from the consistency between the main character and the background, what may be most surprising is the change in lighting. Not only is there a clear reflection of light on the gun, but on the man's face, you can also see the originally eerie red light gradually shifting from warm to cold as the character moves, converging with nearby light sources, including changes in brightness that conform to basic physical laws.

There's also a segment where an explosion occurs in an abandoned house while the camera moves from far to near. Although stationary white rods still appear out of nowhere, both the furniture that stays unchanged during the camera movement and the paper scraps blown around by the shifting air look natural.

In addition, "Dream Machine" also demonstrates its potential as an animation creation tool. For example, in one video, the camera shifts from the character's front to back, already closely resembling a close-up shot in animation creation.

Image compressed, Image/ Luma AI

However, these were ultimately selected by the company. Whether for text, image, or video generation models, official demos are always carefully chosen from among the better results, which everyone can understand. But ordinary users can easily mistake them for the model's average level.

In the content actually created and shared by netizens, even the few impressive works still contain errors to a greater or lesser degree.

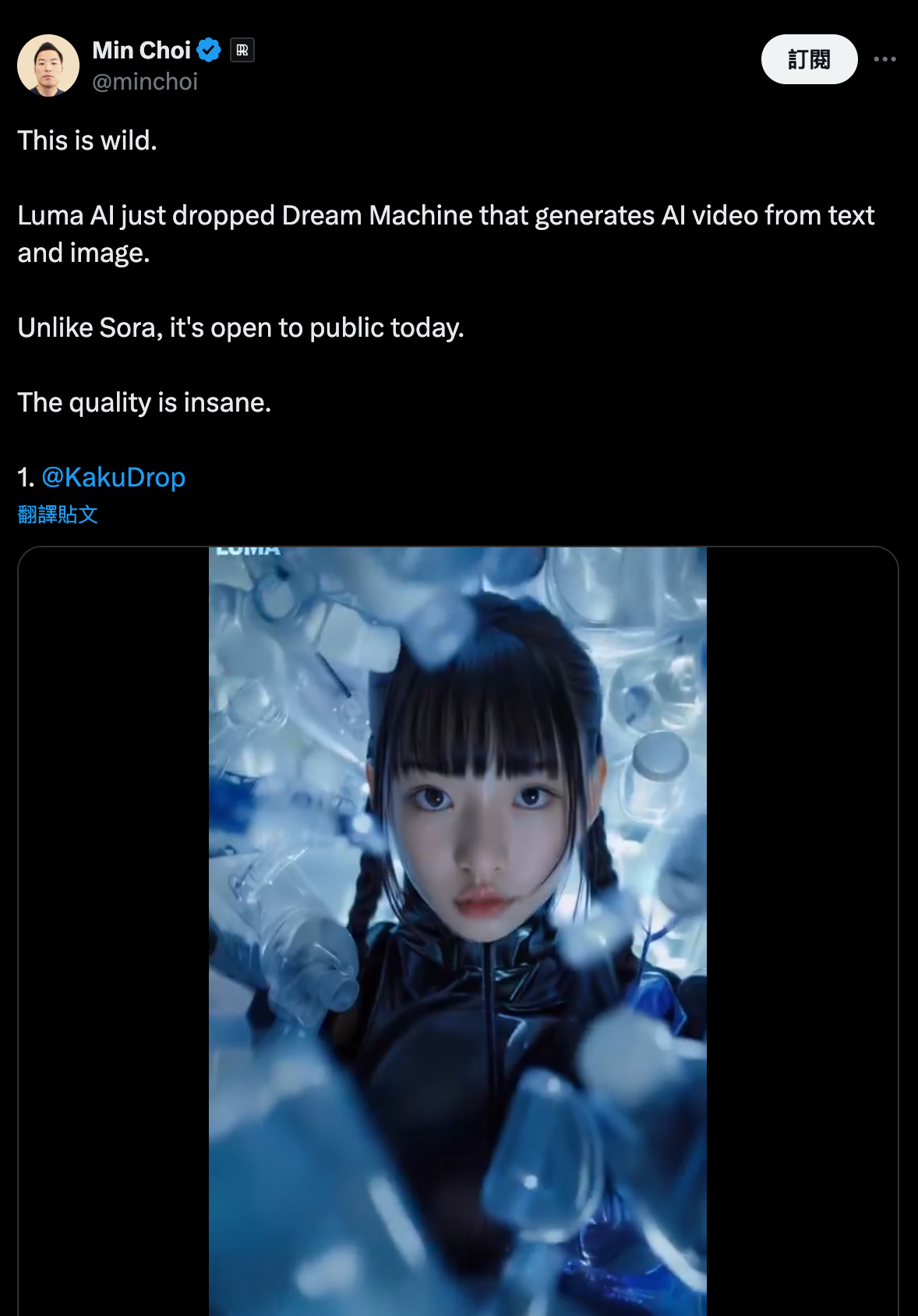

For example, @minchoi created a beautiful girl video using "Dream Machine," with several segments that rival real-life footage.

Image/ X

However, there are still rendering issues with the character's hands, and the character's form still changes to some extent, which is even more apparent in the previously mentioned video of "Girl with a Pearl Earring".

Image compressed, Image/ Luma AI

In addition, consistency issues also manifest in style, with some starting out as 2D animation style and gradually shifting towards 3D animation style.

Image compressed, Image/ Luma AI

I also tried creating a video with "Dream Machine" using the prompt, "A group of people walking down a street at night with umbrellas on the windows of stores." The actual result was quite poor: the characters walked backwards strangely, the figures behind them held their umbrellas in odd ways, and some umbrellas floated in mid-air.

Image compressed, Image/ Luma AI

Still, there are some advantages, such as the reflection on the road surface and the consistency between the background and the characters.

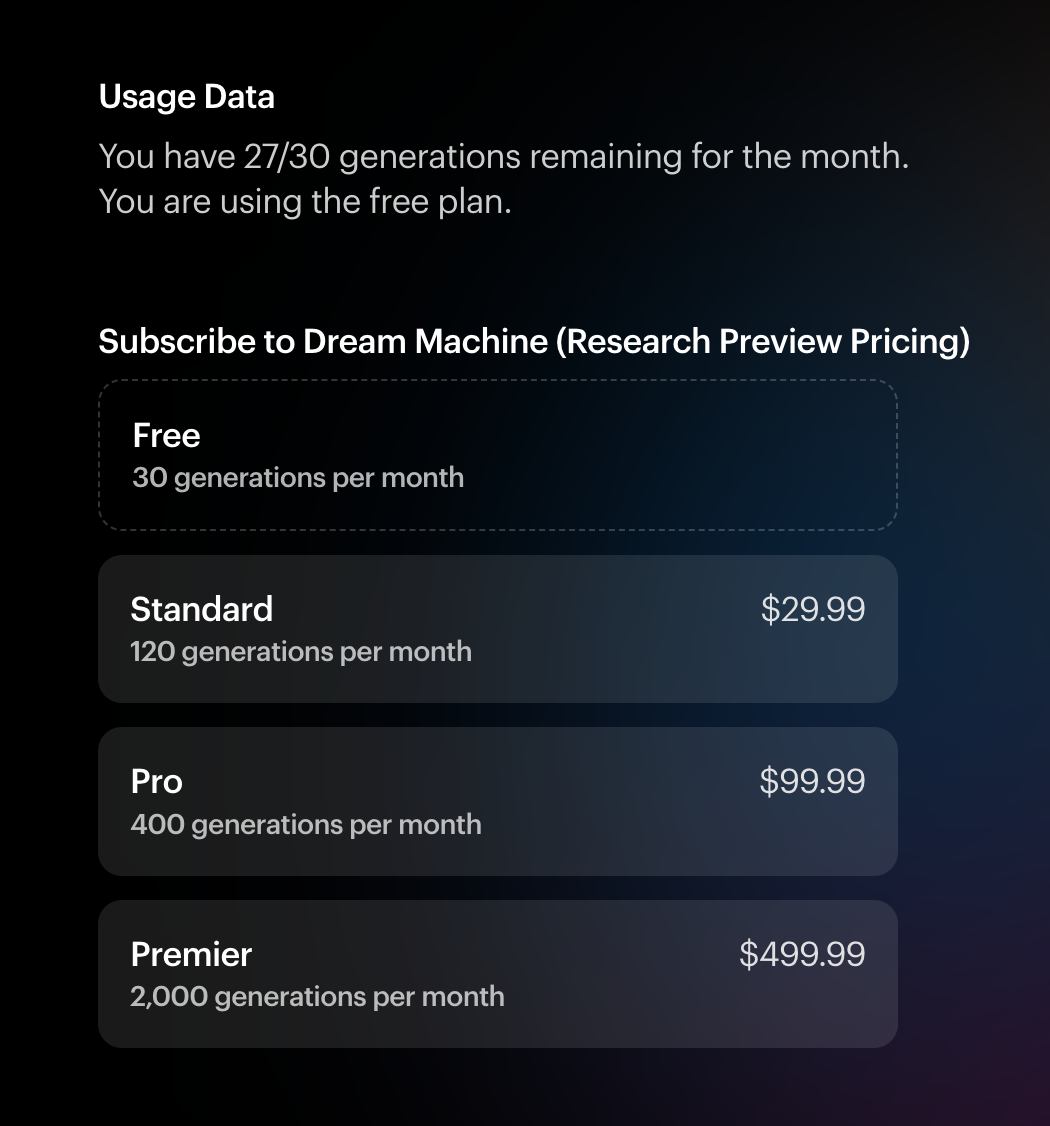

Even so, these issues haven't stopped the creative enthusiasm of the vast majority of netizens. After all, compared to Sora, "Dream Machine" is at least publicly available and offers 30 free generations per month. And compared to most available video generation models, "Dream Machine" has made significant progress in consistency.

In addition to free users, "Dream Machine" currently offers three paid options, including a standard tier for $29.99, a professional tier for $99.99, and a premium tier for $499.99, with the difference being the number of videos that can be generated each month.

Image/ Luma AI

For ordinary users, these prices may seem outrageous, but for those creators who have started making money on TikTok through "Dream Machine," they are likely still within acceptable range.

From AI drawings to AI videos, the big models are fighting again.

AI videos didn't start with "Dream Machine," and they certainly didn't start with Sora. In fact, as early as 2022, when AI painting was already stunning the world, AI videos began to attract a lot of attention.

First, we need to go back to that time in 2022, when ChatGPT was still in the making (released at the end of the year). In the eyes of the general public, the field where AI technology was developing the fastest was AI painting.

In April 2022, OpenAI released a new version of its text-to-image generation program, DALL-E 2, and an image generated by DALL-E 2 of "an astronaut riding a horse in space" began to go viral on social networks, giving many artists a real concern about "losing their jobs".

Image/ OpenAI

Both DALL-E 2 and the subsequent Midjourney generated images at higher resolution and with lower latency than earlier products. Although Stable Diffusion launched last, its open-source advantage let it surpass Midjourney and DALL-E in user attention and breadth of use, and it made the most significant progress in the initial stages.

In fact, AI painting had already begun to "invade" all aspects of society at the time, whether it was the award-winning "Space Opera" (generated by Midjourney) or various companies starting to experiment with directly generating advertisements, posters, and even content works through AI painting.

If images can be generated by AI, how far away are videos? As we all know, videos are essentially composed of frame-by-frame images. So in 2022, Meta and Google actually began competing in AI-generated video with Make-A-Video and Imagen Video respectively. Both are video diffusion models that generate videos directly from text, and their underlying technology is still that of AI painting.

Image/ Meta

At that time, AI-generated videos were generally no more than 5 seconds long, with low resolution and minimal changes in the frame, making them more like making images "move" rather than true videos. More importantly, limited by their status as large companies and inertia, neither Google nor Meta chose to open them up for use by users and creators, and their influence was mostly limited to within the industry.

In contrast, AI video startups like Runway, Synthesia, and Pika were more "flexible." With Gen-2, released last year, Runway not only improved the quality of video generation but also added features like Motion Slider and Camera Motion, giving users more control over the videos.

Pika, which gained popularity for a while last year, is also a relatively popular AI video generation tool. Due to its high image quality, it was even called the "video version of Midjourney." Compared to Runway Gen-2, Pika further gave creators more control to ensure content controllability and scalability, such as planning generation down to the level of eyes and expressions.

Since then, both Stable Diffusion and Midjourney have also successively released versions that generate videos, bringing AI-generated videos into a period of contention. But whichever tool you chose, the visual quality of the generated videos differed little; the differences lay mostly at the product level.

That held until Sora arrived with its Transformer architecture and dominated from the start.

Large language models are changing AI video generation.

The shock and discussions caused by Sora are obvious to all, and some even believe that Sora will be a fast track to AGI (Artificial General Intelligence). Whether Sora can truly understand the operating laws of the physical world is a separate discussion, but it is certain that Sora has completely changed the development trajectory of AI video generation technology.

Image compressed and edited, Image/ OpenAI

One of the most impressive technological breakthroughs of Sora lies in its output video duration. When other companies could only generate videos for a few seconds, Sora broke through the 60-second mark.

In fact, even the newly released "Dream Machine" can only generate videos a few seconds long. Once a longer video is needed, the second, third, and Nth clips generated to extend it are prone to deformation, and the differences between earlier and later frames become too large for the result to be usable.

In addition, AI-generated videos generally suffer from temporal coherence issues, but in a Sora-generated video of a puppy, the puppy remains coherent even after pedestrians completely block the screen, and the subject does not change significantly. Another frequently mentioned aspect is "simulation": Sora can simulate actions that conform to the rules of the physical world very well.

Sora's advantages largely stem from its core architectural differences. Therefore, after Sora, the new technical route combining the Transformer architecture with diffusion models quickly gained widespread attention. Vidu (jointly developed by Shengshu Technology and Tsinghua University), PixVerse from Aishi Technology, and Kuaishou's Keling have all adopted this route.

From this perspective, although Luma AI has not disclosed the architectural design adopted by "Dream Machine," considering the consistency and logical performance demonstrated in the generated videos, it's hard to believe that "Dream Machine" is purely based on a diffusion model. It's highly probable that it has also borrowed from Sora's approach of integrating Transformer architecture into the diffusion model.

Of course, this is just a guess. But for AI videos, this is increasingly becoming an inevitability.

Source: Leitech